Egor Ershov

Modulate and Reconstruct: Learning Hyperspectral Imaging from Misaligned Smartphone Views

Jul 02, 2025

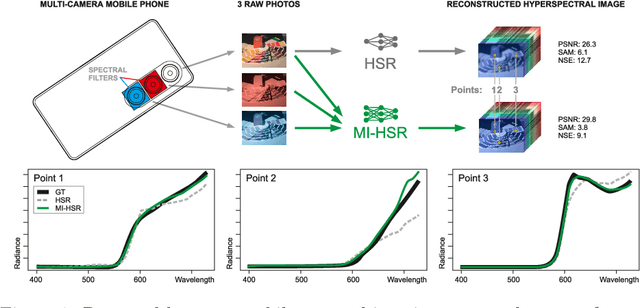

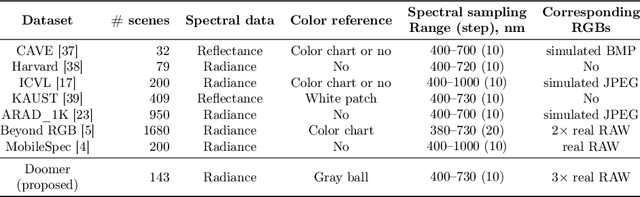

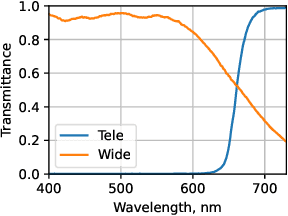

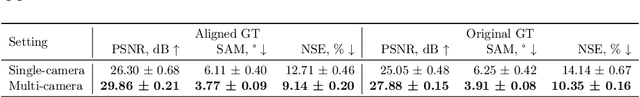

Abstract: Hyperspectral reconstruction (HSR) from RGB images is a fundamentally ill-posed problem due to severe spectral information loss. Existing approaches typically rely on a single RGB image, limiting reconstruction accuracy. In this work, we propose a novel multi-image-to-hyperspectral reconstruction (MI-HSR) framework that leverages a triple-camera smartphone system, where two lenses are equipped with carefully selected spectral filters. Our configuration, grounded in theoretical and empirical analysis, enables richer and more diverse spectral observations than conventional single-camera setups. To support this new paradigm, we introduce Doomer, the first dataset for MI-HSR, comprising aligned images from three smartphone cameras and a hyperspectral reference camera across diverse scenes. We show that the proposed HSR model achieves consistent improvements over existing methods on the newly proposed benchmark. In a nutshell, our setup yields spectra that are estimated 30% more accurately than with an ordinary RGB camera. Our findings suggest that multi-view spectral filtering with commodity hardware can unlock more accurate and practical hyperspectral imaging solutions.
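The ill-posedness mentioned in the abstract can be illustrated with a toy example. The sketch below is not the paper's model: the four-band sensitivities, the filter transmittance, and the two spectra are made-up numbers chosen so that the spectra are indistinguishable to an unfiltered camera but become distinguishable once a spectral filter is placed in front of the lens.

```python
# Toy illustration (all numbers are hypothetical, not taken from the paper).
# A camera maps an N-band spectrum to 3 values, so different spectra can
# produce identical RGB responses (metamers).

def render(sensitivities, spectrum):
    """Project a spectrum onto each channel's sensitivity curve."""
    return [sum(s * x for s, x in zip(row, spectrum)) for row in sensitivities]

def apply_filter(sensitivities, transmittance):
    """Effective sensitivities of the same camera behind a spectral filter."""
    return [[s * t for s, t in zip(row, transmittance)] for row in sensitivities]

# Hypothetical R, G, B sensitivities over four spectral bands.
CAMERA = [
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
]
# Hypothetical filter transmittance per band.
FILTER = [1.0, 0.5, 0.25, 0.125]

# Two different spectra; they differ by a vector in the null space of CAMERA.
spectrum_a = [2.0, 3.0, 2.0, 3.0]
spectrum_b = [2.5, 2.5, 2.5, 2.5]

rgb_a = render(CAMERA, spectrum_a)
rgb_b = render(CAMERA, spectrum_b)

filtered_camera = apply_filter(CAMERA, FILTER)
filtered_a = render(filtered_camera, spectrum_a)
filtered_b = render(filtered_camera, spectrum_b)

print("unfiltered:", rgb_a, rgb_b)          # identical responses
print("filtered:  ", filtered_a, filtered_b)  # responses now differ
```

In this toy setting the filtered view contributes measurements that the unfiltered view cannot, which is the intuition behind combining several filtered cameras.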

Color Matching Using Hypernetwork-Based Kolmogorov-Arnold Networks

Mar 14, 2025

Abstract: We present cmKAN, a versatile framework for color matching. Given an input image with colors from a source color distribution, our method effectively and accurately maps these colors to match a target color distribution in both supervised and unsupervised settings. Our framework leverages the spline capabilities of Kolmogorov-Arnold Networks (KANs) to model the color matching between source and target distributions. Specifically, we developed a hypernetwork that generates spatially varying weight maps to control the nonlinear splines of a KAN, enabling accurate color matching. As part of this work, we introduce the first large-scale dataset of paired images captured by two distinct cameras and evaluate the efficacy of our method and existing methods in matching colors. We evaluated our approach across various color-matching tasks, including: (1) raw-to-raw mapping, where the source color distribution is in one camera's raw color space and the target in another camera's raw space; (2) raw-to-sRGB mapping, where the source color distribution is in a camera's raw space and the target is in the display sRGB space, emulating the color rendering of a camera ISP; and (3) sRGB-to-sRGB mapping, where the goal is to transfer colors from a source sRGB space (e.g., produced by a source camera ISP) to a target sRGB space (e.g., from a different camera ISP). The results show that our method outperforms existing approaches by 37.3% on average for supervised and unsupervised cases while remaining lightweight compared to other methods. The code, dataset, and pre-trained models are available at: https://github.com/gosha20777/cmKAN

Expert-aware uncertainty estimation for quality control of neural-based blood typing

Jul 15, 2024

Abstract: In medical diagnostics, accurate uncertainty estimation for neural-based models is essential for complementing second-opinion systems. Despite neural network ensembles' proficiency in this problem, a gap persists between actual uncertainties and predicted estimates. A major difficulty here is the lack of labels on the hardness of examples: a typical dataset includes only ground truth target labels, making the uncertainty estimation problem almost unsupervised. Our novel approach narrows this gap by integrating expert assessments of case complexity into the neural network's learning process, utilizing both definitive target labels and supplementary complexity ratings. We validate our methodology for blood typing, leveraging a new dataset, "BloodyWell", which is unique in augmenting labeled reaction images with complexity scores from six medical specialists. Experiments demonstrate that our approach improves uncertainty prediction, achieving a 2.5-fold improvement with expert labels and a 35% increase in performance with estimates of neural-based expert consensus.

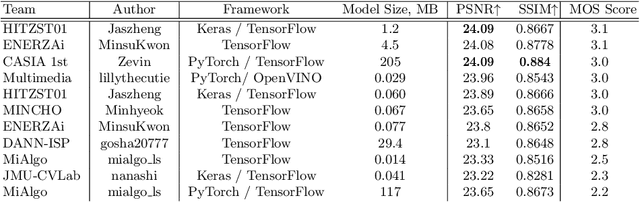

NTIRE 2024 Challenge on Night Photography Rendering

Jun 18, 2024

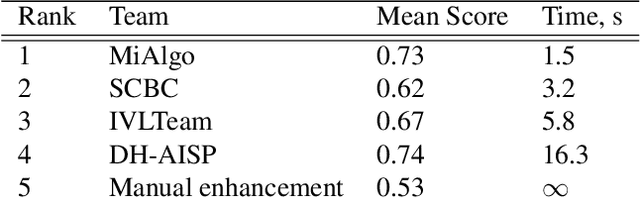

Abstract: This paper presents a review of the NTIRE 2024 challenge on night photography rendering. The goal of the challenge was to find solutions that process raw camera images taken in nighttime conditions and thereby produce photo-quality output images in the standard RGB (sRGB) space. Unlike the previous year's competition, the challenge images were collected with a mobile phone, and the speed of the algorithms was measured alongside the quality of their output. To evaluate the results, a sufficient number of viewers were asked to assess the visual quality of the proposed solutions, considering the subjective nature of the task. There were two nominations: quality and efficiency. The top 5 solutions in terms of output quality were sorted by evaluation time (see Fig. 1). The top-ranking participants' solutions effectively represent the state of the art in nighttime photography rendering. More results can be found at https://nightimaging.org.

Learned Smartphone ISP on Mobile GPUs with Deep Learning, Mobile AI & AIM 2022 Challenge: Report

Nov 07, 2022

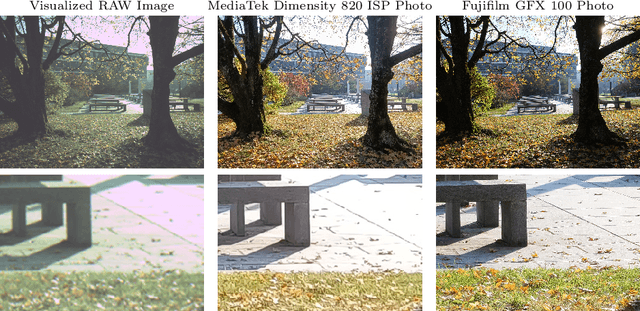

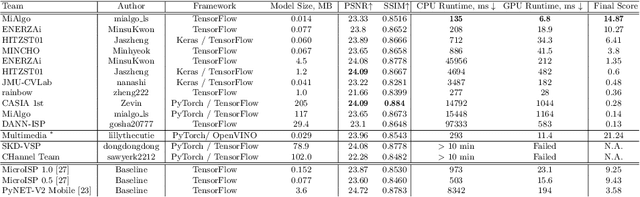

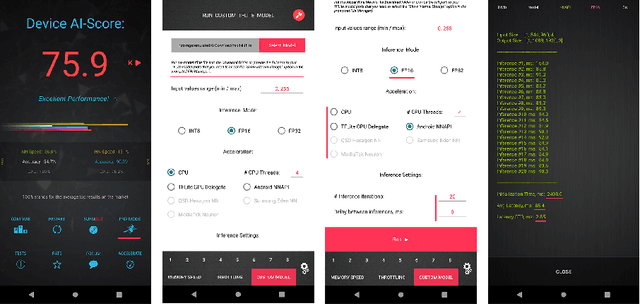

Abstract: The role of mobile cameras has increased dramatically over the past few years, leading to more and more research in automatic image quality enhancement and RAW photo processing. In this Mobile AI challenge, the target was to develop an efficient end-to-end AI-based image signal processing (ISP) pipeline that replaces the standard mobile ISPs and can run on modern smartphone GPUs using TensorFlow Lite. The participants were provided with a large-scale Fujifilm UltraISP dataset consisting of thousands of paired photos captured with a normal mobile camera sensor and a professional 102MP medium-format Fujifilm GFX100 camera. The runtime of the resulting models was evaluated on the Snapdragon 8 Gen 1 GPU, which provides excellent acceleration results for the majority of common deep learning ops. The proposed solutions are compatible with all recent mobile GPUs, being able to process Full HD photos in less than 20-50 milliseconds while achieving high-fidelity results. A detailed description of all models developed in this challenge is provided in this paper.

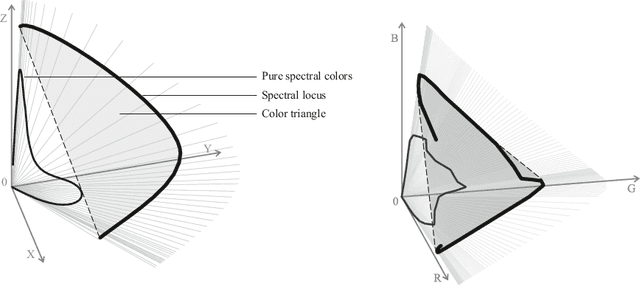

On the properties of some low-parameter models for color reproduction in terms of spectrum transformations and coverage of a color triangle

Oct 21, 2021

Abstract: One of the classical approaches to solving color reproduction problems, such as color adaptation or color space transform, is the use of low-parameter spectral models. The strength of this approach is the ability to choose a set of properties that the model should have, be it a large coverage area of a color triangle or an accurate description of the addition or multiplication of spectra given only their corresponding tristimulus values. The disadvantage is that some of the properties of the mentioned spectral models are confirmed only experimentally. This work is devoted to the theoretical substantiation of various properties of spectral models. In particular, we prove that the banded model is the only model that simultaneously possesses the properties of closure under addition and multiplication. We also show that the Gaussian model is the limiting case of the von Mises model and prove that the set of protomers of the von Mises model unambiguously covers the color triangle in both the case of convex and non-convex spectral locus.
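As an informal illustration of the Gaussian limit mentioned above, the following sketch uses the textbook von Mises density on an angle $x$ with location $\mu$ and concentration $\kappa$. This parametrization is an assumption; the paper's spectral version of the model may be written differently.

```latex
% Textbook von Mises density (assumed parametrization).
f(x \mid \mu, \kappa) = \frac{\exp\bigl(\kappa \cos(x - \mu)\bigr)}{2\pi I_0(\kappa)}

% For large \kappa the mass concentrates near \mu, where
\cos(x - \mu) \approx 1 - \frac{(x - \mu)^2}{2},
\qquad
I_0(\kappa) \approx \frac{e^{\kappa}}{\sqrt{2\pi\kappa}} .

% Substituting both approximations gives a Gaussian with variance 1/\kappa:
f(x \mid \mu, \kappa) \approx \sqrt{\frac{\kappa}{2\pi}}
  \exp\!\left(-\frac{\kappa (x - \mu)^2}{2}\right)
```

Here $I_0$ is the modified Bessel function of the first kind of order zero, and the limiting Gaussian has mean $\mu$ and variance $\sigma^2 = 1/\kappa$.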

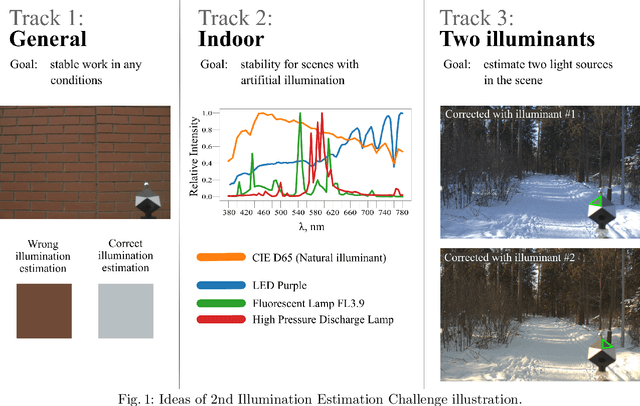

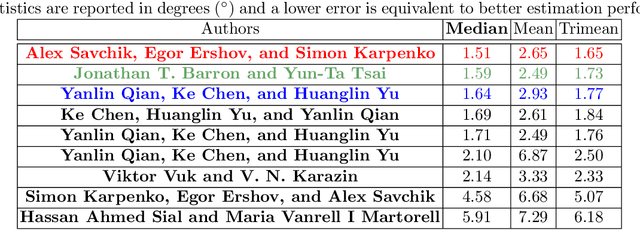

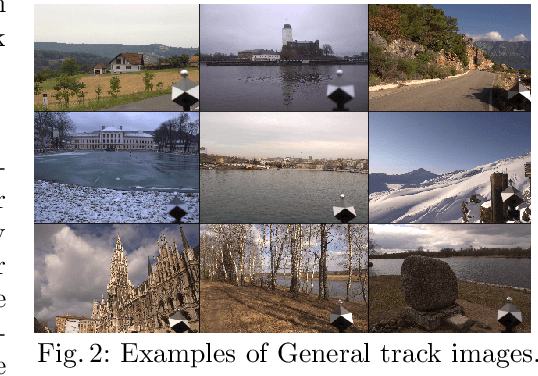

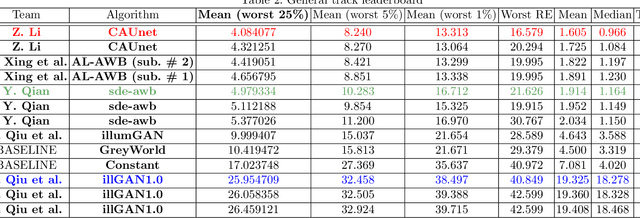

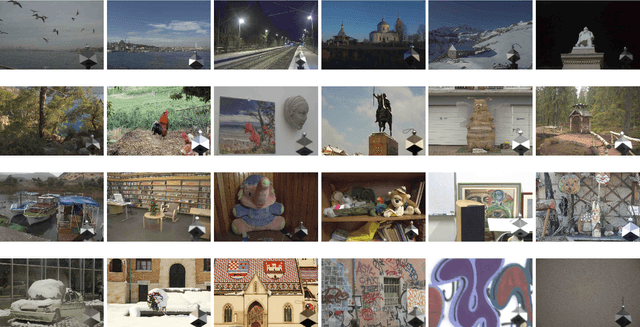

Illumination Estimation Challenge: experience of past two years

Dec 31, 2020

Abstract: Illumination estimation is the essential step of computational color constancy, one of the core parts of various image processing pipelines of modern digital cameras. Having an accurate and reliable illumination estimation is important for reducing the illumination influence on the image colors. To motivate the generation of new ideas and the development of new algorithms in this field, the 2nd Illumination Estimation Challenge (IEC#2) was conducted. The main advantage of testing a method on a challenge over testing it on some of the known datasets is the fact that the ground-truth illuminations for the challenge test images are unknown up until the results have been submitted, which prevents any potential hyperparameter tuning that may be biased. The challenge had several tracks: general, indoor, and two-illuminant, with each of them focusing on different parameters of the scenes. Its other main features are a new large dataset of images (about 5000) taken with the same camera sensor model, a manual markup accompanying each image, diverse content with scenes taken in numerous countries under a huge variety of illuminations extracted by using the SpyderCube calibration object, and a contest-like markup for the images from the Cube+ dataset that was used in IEC#1. This paper focuses on the description of the past two challenges, the algorithms which won in each track, and the conclusions that were drawn based on the results obtained during the 1st and 2nd challenges that can be useful for similar future developments.

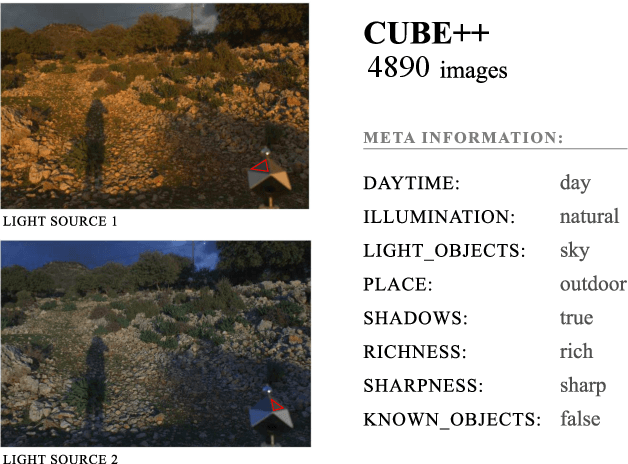

The Cube++ Illumination Estimation Dataset

Nov 19, 2020

Abstract: Computational color constancy has the important task of reducing the influence of the scene illumination on the object colors. As such, it is an essential part of the image processing pipelines of most digital cameras. One of the important parts of computational color constancy is illumination estimation, i.e. estimating the illumination color. When an illumination estimation method is proposed, its accuracy is usually reported by providing the values of error metrics obtained on the images of publicly available datasets. However, over time it has been shown that many of these datasets have problems such as too few images, inappropriate image quality, lack of scene diversity, absence of version tracking, violation of various assumptions, GDPR regulation violation, lack of additional shooting procedure info, etc. In this paper, a new illumination estimation dataset is proposed that aims to alleviate many of the mentioned problems and to help the illumination estimation research. It consists of 4890 images with known illumination colors as well as with additional semantic data that can further make the learning process more accurate. Due to the usage of the SpyderCube color target, for every image there are two ground-truth illumination records covering different directions. Because of that, the dataset can be used for training and testing of methods that perform single or two-illuminant estimation. This makes it superior to many similar existing datasets. The dataset, its smaller version SimpleCube++, and the accompanying code are available at https://github.com/Visillect/CubePlusPlus/.
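The abstract does not name the error metrics it refers to. A common choice in the color constancy literature is the angular error between the estimated and ground-truth illumination vectors, and the sketch below assumes that metric. The gray-world estimator and the pixel values are illustrative placeholders only; they are not the dataset's evaluation code.

```python
import math

def angular_error_deg(estimate, ground_truth):
    """Angle in degrees between two illumination color vectors.

    The angle ignores vector length, so only chromaticity matters.
    """
    dot = sum(e * g for e, g in zip(estimate, ground_truth))
    norm_e = math.sqrt(sum(e * e for e in estimate))
    norm_g = math.sqrt(sum(g * g for g in ground_truth))
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_angle = max(-1.0, min(1.0, dot / (norm_e * norm_g)))
    return math.degrees(math.acos(cos_angle))

def gray_world(pixels):
    """Baseline estimator: the mean RGB of the image (gray-world assumption)."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

# Hypothetical linear RGB pixels and a hypothetical ground-truth illuminant.
pixels = [(0.8, 0.5, 0.2), (0.4, 0.3, 0.1), (0.6, 0.4, 0.3)]
ground_truth = (0.9, 0.6, 0.3)

estimate = gray_world(pixels)
error = angular_error_deg(estimate, ground_truth)
print(f"estimate={estimate}, angular error={error:.2f} degrees")
```

With two ground-truth illumination records per image, the same function could be evaluated against each record separately.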

Multiple Light Source Dataset for Colour Research

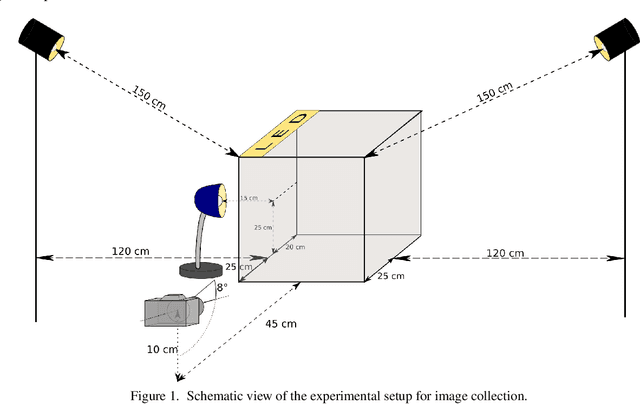

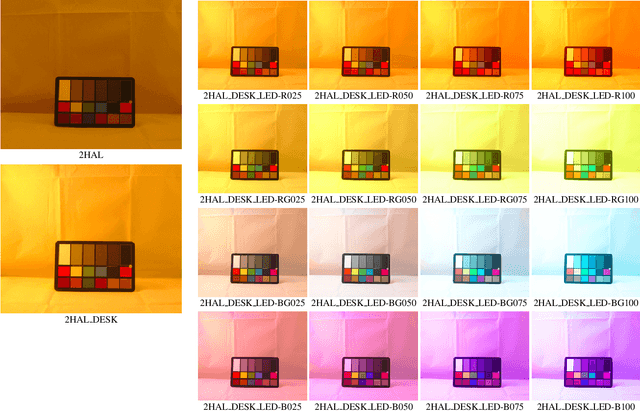

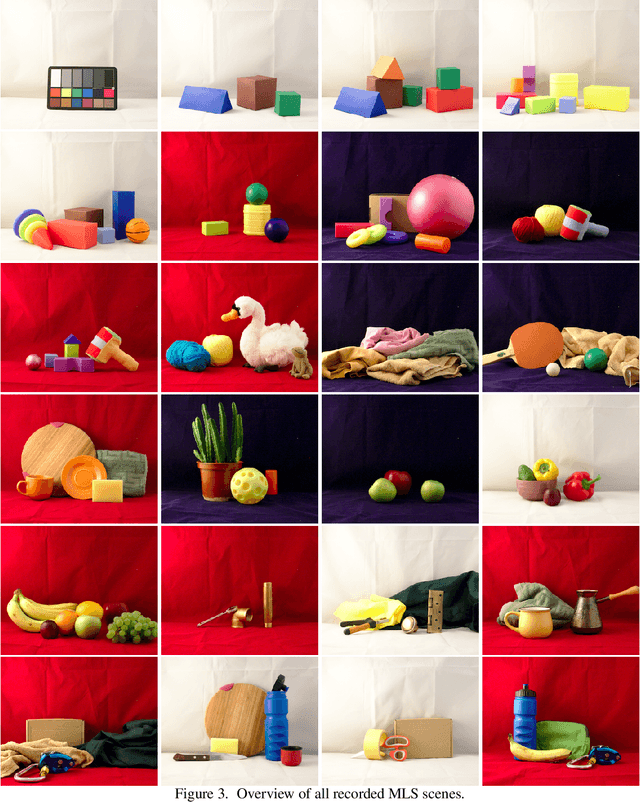

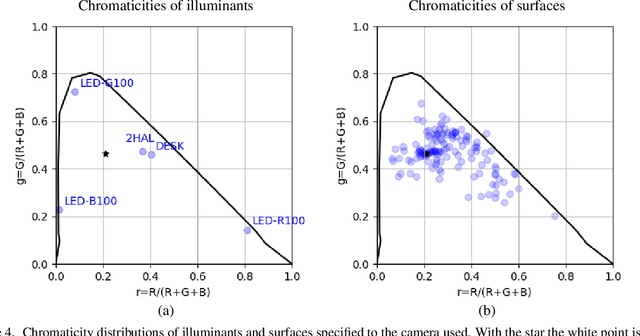

Sep 16, 2019

Abstract: We present a collection of 24 multiple-object scenes, each recorded under 18 multiple-light-source illumination scenarios. The illuminants vary in dominant spectral colours, intensity and distance from the scene. We mainly address realistic scenarios for the evaluation of computational colour constancy algorithms, but have also aimed to make the data as general as possible for computational colour science and computer vision. Along with the images of the scenes, we provide spectral characteristics of the camera, light sources and the objects, and include pixel-by-pixel ground truth annotation of uniformly coloured object surfaces, thus making the dataset useful for benchmarking colour-based image segmentation algorithms. The dataset is freely available at https://github.com/visillect/mls-dataset.
