Arseniy Terekhin

Generalization of Brady-Yong Algorithm for Fast Hough Transform to Arbitrary Image Size

Nov 11, 2024

Abstract:Nowadays, the Hough (discrete Radon) transform (HT/DRT) has proved to be an extremely powerful and widespread tool harnessed in a number of application areas, ranging from general image processing to X-ray computed tomography. Efficient utilization of the HT to solve applied problems demands its acceleration and increased accuracy. However, most fast algorithms for computing the HT, especially the pioneering Brady-Yong algorithm, operate on input images whose size is a power of two and are not adapted to images of arbitrary size. This paper presents a new algorithm for calculating the HT for images of arbitrary size. It generalizes the Brady-Yong algorithm, from which it inherits the optimal computational complexity. Moreover, the algorithm computes the HT with considerably higher accuracy than the existing algorithm. The paper also provides a theoretical analysis of the computational complexity and accuracy of the proposed algorithm. The conclusions of the performed experiments agree with the theoretical results.

NTIRE 2024 Challenge on Night Photography Rendering

Jun 18, 2024

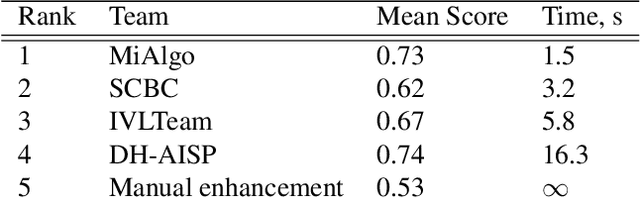

Abstract:This paper presents a review of the NTIRE 2024 challenge on night photography rendering. The goal of the challenge was to find solutions that process raw camera images taken in nighttime conditions and thereby produce photo-quality output images in the standard RGB (sRGB) space. Unlike the previous year's competition, the challenge images were collected with a mobile phone, and the speed of the algorithms was measured alongside the quality of their output. Because the task is subjective, a sufficient number of viewers were asked to assess the visual quality of the proposed solutions. There were 2 nominations: quality and efficiency. The top 5 solutions in terms of output quality were sorted by evaluation time (see Fig. 1). The top-ranking participants' solutions effectively represent the state of the art in nighttime photography rendering. More results can be found at https://nightimaging.org.

Illumination Estimation Challenge: experience of past two years

Dec 31, 2020

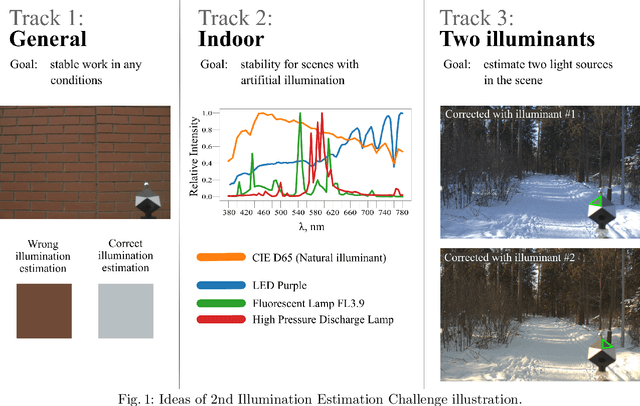

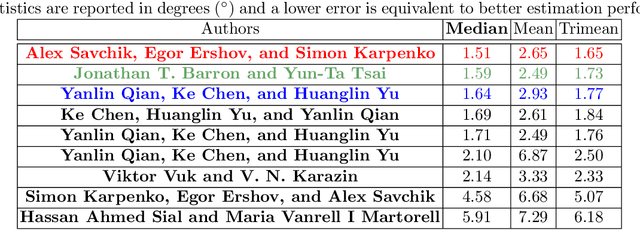

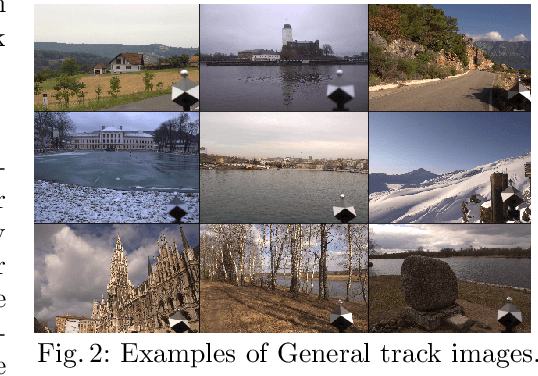

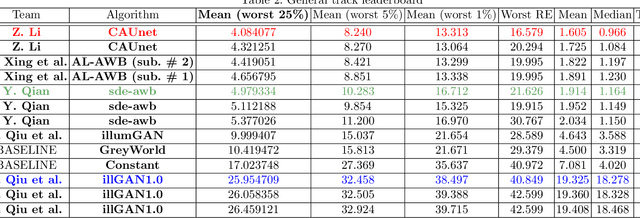

Abstract:Illumination estimation is the essential step of computational color constancy, one of the core parts of various image processing pipelines of modern digital cameras. Having an accurate and reliable illumination estimation is important for reducing the illumination influence on the image colors. To motivate the generation of new ideas and the development of new algorithms in this field, the 2nd Illumination Estimation Challenge (IEC#2) was conducted. The main advantage of testing a method on a challenge over testing it on some of the known datasets is that the ground-truth illuminations for the challenge test images are unknown until the results have been submitted, which prevents any potentially biased hyperparameter tuning. The challenge had several tracks: general, indoor, and two-illuminant, each focusing on different parameters of the scenes. Its other main features are a new large dataset of images (about 5000) taken with the same camera sensor model, a manual markup accompanying each image, diverse content with scenes taken in numerous countries under a huge variety of illuminations extracted by using the SpyderCube calibration object, and a contest-like markup for the images from the Cube+ dataset that was used in IEC#1. This paper focuses on the description of the past two challenges, the algorithms that won in each track, and the conclusions drawn from the results of the 1st and 2nd challenges that can be useful for similar future developments.
