Dimos Tzoumanikas

Department of Computing, Imperial College London, London UK, SW7 2AZ

Scalable Autonomous Drone Flight in the Forest with Visual-Inertial SLAM and Dense Submaps Built without LiDAR

Mar 14, 2024

Abstract: Forestry constitutes a key element for a sustainable future, yet introducing digital processes to improve its efficiency remains extremely challenging. The main limitation is the difficulty of obtaining accurate maps at high temporal and spatial resolution as a basis for informed forestry decision-making, due to the vast area forests extend over and the sheer number of trees. To address this challenge, we present an autonomous Micro Aerial Vehicle (MAV) system which relies purely on cost-effective and lightweight passive visual and inertial sensors to perform under-canopy autonomous navigation. We leverage visual-inertial simultaneous localization and mapping (VI-SLAM) for accurate MAV state estimates and couple it with a volumetric occupancy submapping system to achieve a scalable mapping framework which can be directly used for path planning. As opposed to a monolithic map, submaps inherently deal with inevitable drift and corrections from VI-SLAM, since they move with pose estimates as they are updated. To ensure the safety of the MAV during navigation, we also propose a novel reference trajectory anchoring scheme that moves and deforms the reference trajectory the MAV is tracking upon state updates from the VI-SLAM system in a consistent way, even upon large changes in state estimates due to loop closures. We thoroughly validate our system in both real and simulated forest environments with high tree densities in excess of 400 trees per hectare and at speeds up to 3 m/s, without encountering a single collision or system failure. To the best of our knowledge, this is the first system which achieves this level of performance in such an unstructured environment using low-cost passive visual sensors and fully on-board computation including VI-SLAM.
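The idea of a reference trajectory that moves and deforms with pose updates can be illustrated with a minimal 2D sketch. This is not the paper's scheme: it assumes, purely for illustration, that each waypoint is stored relative to its own anchor pose `(x, y, yaw)` (for example, the frame of the submap it lies in), and all function names are hypothetical.

```python
import math

def to_local(pose, point):
    """Express a world-frame 2D point in the frame of pose = (x, y, yaw)."""
    x, y, yaw = pose
    dx, dy = point[0] - x, point[1] - y
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx + s * dy, -s * dx + c * dy)

def to_world(pose, local):
    """Map a point from the frame of pose = (x, y, yaw) back to the world frame."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (x + c * local[0] - s * local[1], y + s * local[0] + c * local[1])

def anchor_trajectory(waypoints, anchor_poses):
    """Store each waypoint relative to its own anchor pose (assumed one anchor per waypoint)."""
    return [to_local(pose, wp) for pose, wp in zip(anchor_poses, waypoints)]

def reanchor_trajectory(local_waypoints, updated_poses):
    """Recompute world-frame waypoints after the anchor poses have been updated."""
    return [to_world(pose, lw) for pose, lw in zip(updated_poses, local_waypoints)]

# Two waypoints, each anchored to a different (hypothetical) submap frame.
waypoints = [(1.0, 0.0), (2.0, 0.0)]
poses_before = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
local = anchor_trajectory(waypoints, poses_before)

# After a loop closure the two anchors are corrected differently,
# so the trajectory both moves and changes shape.
poses_after = [(0.0, 0.0, math.pi / 2), (1.0, 1.0, 0.0)]
updated = reanchor_trajectory(local, poses_after)
```

Because each waypoint follows its own anchor, a correction that shifts the anchors by different amounts changes the shape of the trajectory rather than only translating it.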

Finding Things in the Unknown: Semantic Object-Centric Exploration with an MAV

Mar 03, 2023

Abstract: Exploration of unknown space with an autonomous mobile robot is a well-studied problem. In this work we broaden the scope of exploration, moving beyond the purely geometric goal of uncovering as much free space as possible. We believe that for many practical applications, exploration should be contextualised with semantic and object-level understanding of the environment for task-specific exploration. Here, we study the task of both finding specific objects in unknown space and reconstructing them to a target level of detail. We therefore extend our environment reconstruction to consist not only of a background map, but also of object-level and semantically fused submaps. Importantly, we adapt our previous objective function of uncovering as much free space as possible in as little time as possible with two additional elements. First, we require a maximum observation distance of background surfaces to ensure target objects are not missed by image-based detectors because they are too small to be detected. Second, we require an even smaller maximum distance to the found objects in order to reconstruct them with the desired accuracy. We further created a Micro Aerial Vehicle (MAV) semantic exploration simulator based on Habitat in order to quantitatively demonstrate how our framework can be used to efficiently find specific objects as part of exploration. Finally, we show that this capability can be deployed in real-world scenes involving our drone equipped with an Intel RealSense D455 RGB-D camera.

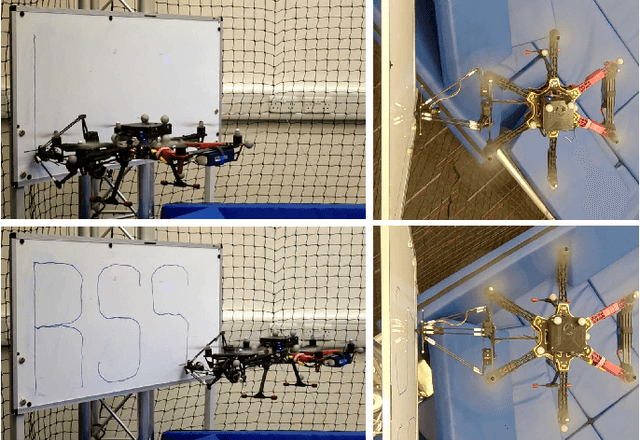

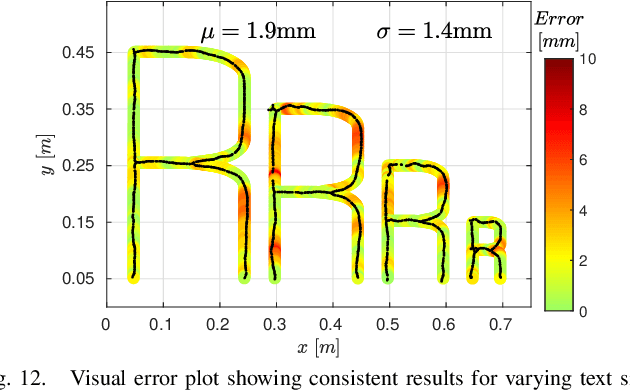

Aerial Manipulation Using Hybrid Force and Position NMPC Applied to Aerial Writing

Jun 03, 2020

Abstract: Aerial manipulation aims at combining the manoeuvrability of aerial vehicles with the manipulation capabilities of robotic arms. This, however, comes at the cost of additional control complexity due to the coupling of the dynamics of the two systems. In this paper we present a Nonlinear Model Predictive Controller (NMPC) specifically designed for Micro Aerial Vehicles (MAVs) equipped with a robotic arm. We formulate a hybrid control model for the combined MAV-arm system which incorporates interaction forces acting on the end effector. We explain the practical implementation of our algorithm and show extensive experimental results of our custom-built system performing multiple aerial-writing tasks on a whiteboard, revealing accuracy on the order of millimetres.

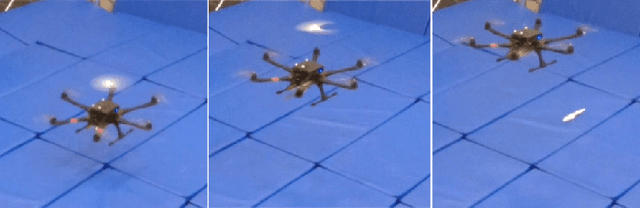

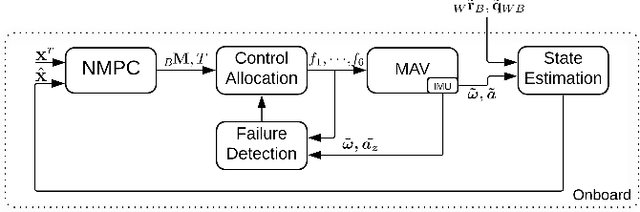

Nonlinear MPC with Motor Failure Identification and Recovery for Safe and Aggressive Multicopter Flight

Feb 16, 2020

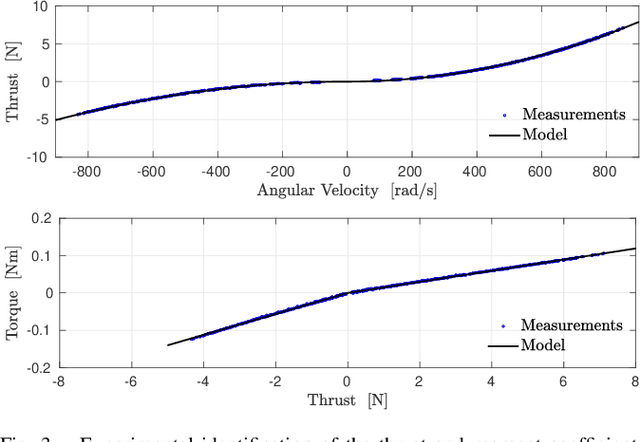

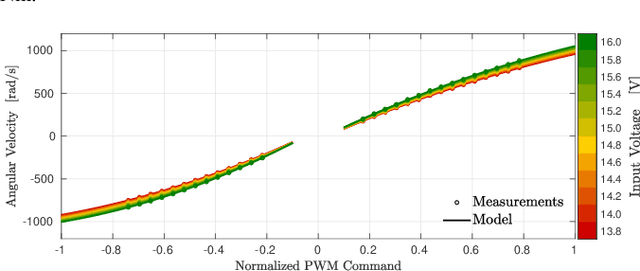

Abstract: Safe and precise reference tracking is a crucial characteristic of Micro Aerial Vehicles (MAVs) that have to operate under the influence of external disturbances in cluttered environments. In this paper, we present a Nonlinear Model Predictive Controller (NMPC) that exploits the fully physics-based nonlinear dynamics of the system. We furthermore show how the moment and thrust control inputs can be transformed into feasible actuator commands. To maintain safe operation despite the potential loss of a motor, we developed an Extended Kalman Filter (EKF) based motor failure identification algorithm, and we show that our system keeps operating safely under such a failure. We verify the effectiveness of the developed pipeline in flight experiments with and without motor failures.

Fast Frontier-based Information-driven Autonomous Exploration with an MAV

Feb 13, 2020

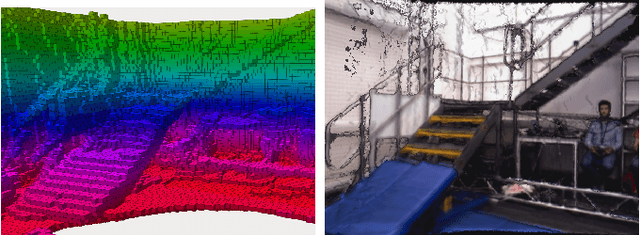

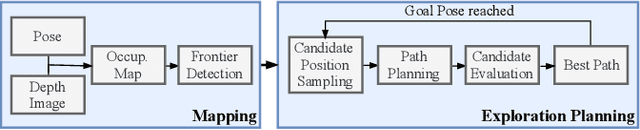

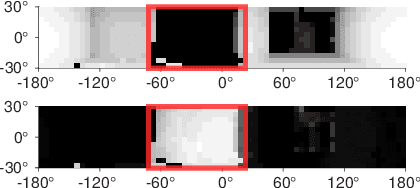

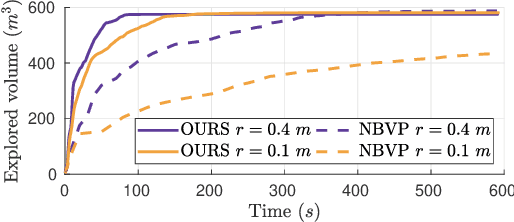

Abstract: Exploration and collision-free navigation through an unknown environment is a fundamental task for autonomous robots. In this paper, a novel exploration strategy for Micro Aerial Vehicles (MAVs) is presented. The goal of the exploration strategy is the reduction of map entropy regarding occupancy probabilities, which is reflected in a utility function to be maximised. We achieve fast and efficient exploration performance with tight integration between our octree-based occupancy mapping approach, frontier extraction, and motion planning, as a hybrid between frontier-based and sampling-based exploration methods. The computationally expensive frontier clustering employed in classic frontier-based exploration is avoided by exploiting the implicit grouping of frontier voxels in the underlying octree map representation. Candidate next-views are sampled from the map frontiers and are evaluated using a utility function combining map entropy and travel time, where the former is computed efficiently using sparse raycasting. These optimisations, along with the targeted exploration of frontier-based methods, result in a fast and computationally efficient exploration planner. The proposed method is evaluated using both simulated and real-world experiments, demonstrating clear advantages over state-of-the-art approaches.
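A utility that combines map entropy and travel time can be sketched as follows. The abstract does not state the exact form of the utility, so the ratio used here (entropy of the voxels hit by a sparse set of rays, divided by travel time) is an assumption, as are the function names and the candidate dictionary layout.

```python
import math

def voxel_entropy(p):
    """Shannon entropy in bits of one voxel with occupancy probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a fully known voxel carries no uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

def candidate_utility(ray_voxel_probs, travel_time_s):
    """Assumed utility: summed entropy along the sampled rays per second of travel.

    ray_voxel_probs is a list of rays, each a list of occupancy probabilities
    of the voxels that the ray traverses (as sparse raycasting would return).
    """
    gain = sum(voxel_entropy(p) for ray in ray_voxel_probs for p in ray)
    return gain / max(travel_time_s, 1e-6)

def best_candidate(candidates):
    """Pick the candidate next-view with the highest utility."""
    return max(candidates, key=lambda c: candidate_utility(c["rays"], c["travel_time_s"]))

# Hypothetical candidates: "a" sees mostly known space, "b" sees unknown space but is farther away.
candidates = [
    {"name": "a", "rays": [[0.0, 1.0], [0.5]], "travel_time_s": 1.0},
    {"name": "b", "rays": [[0.5, 0.5], [0.5, 0.5]], "travel_time_s": 2.0},
]
chosen = best_candidate(candidates)
```

Here candidate "b" wins because it observes four fully uncertain voxels in two seconds, whereas "a" observes only one in one second.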

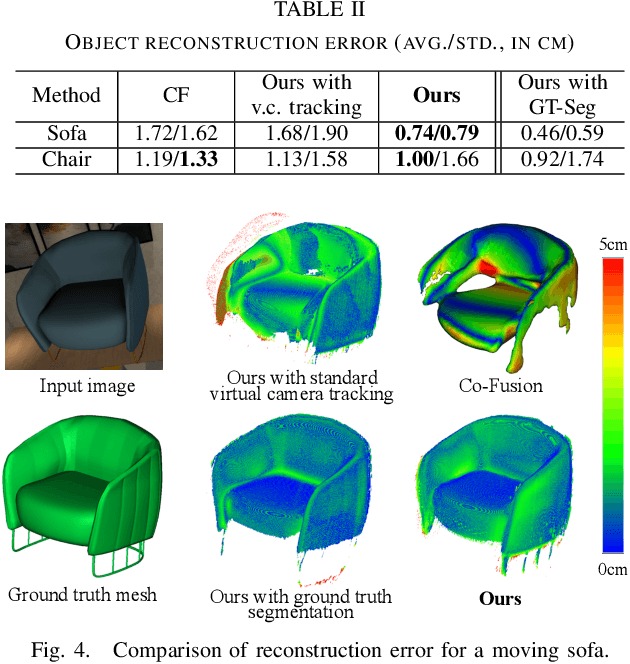

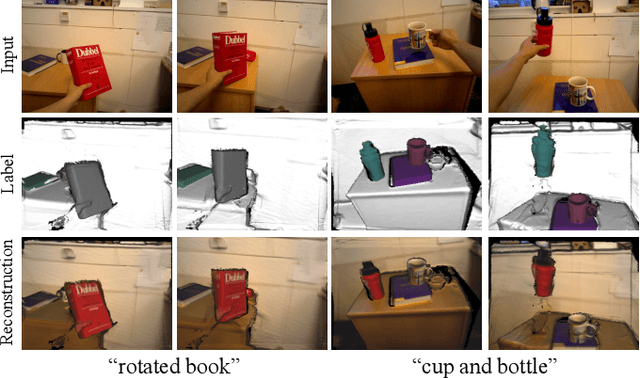

MID-Fusion: Octree-based Object-Level Multi-Instance Dynamic SLAM

Mar 21, 2019

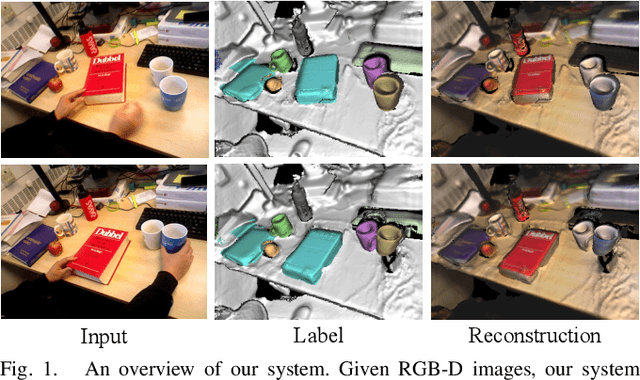

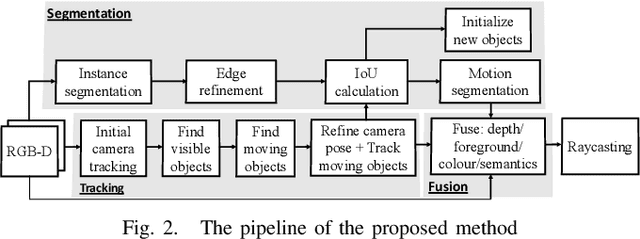

Abstract: We propose a new multi-instance dynamic RGB-D SLAM system using an object-level octree-based volumetric representation. It can provide robust camera tracking in dynamic environments and, at the same time, continuously estimate geometric, semantic, and motion properties for arbitrary objects in the scene. For each incoming frame, we perform instance segmentation to detect objects and refine mask boundaries using geometric and motion information. Meanwhile, we estimate the pose of each existing moving object using an object-oriented tracking method and robustly track the camera pose against the static scene. Based on the estimated camera pose and object poses, we associate segmented masks with existing models and incrementally fuse corresponding colour, depth, semantic, and foreground object probabilities into each object model. In contrast to existing approaches, our system is the first to generate an object-level dynamic volumetric map from a single RGB-D camera, which can be used directly for robotic tasks. Our method can run at 2-3 Hz on a CPU, excluding the instance segmentation part. We demonstrate its effectiveness by quantitatively and qualitatively testing it on both synthetic and real-world sequences.

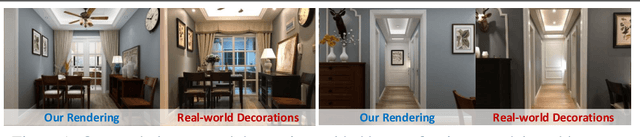

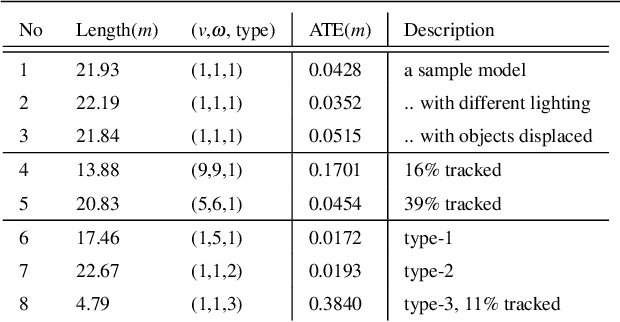

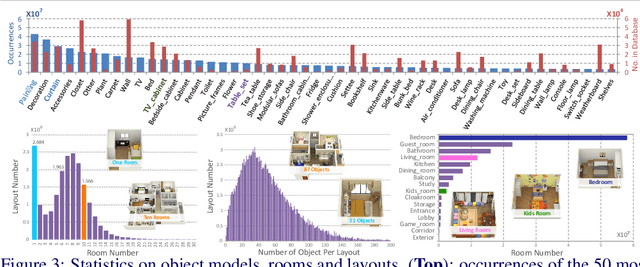

InteriorNet: Mega-scale Multi-sensor Photo-realistic Indoor Scenes Dataset

Sep 03, 2018

Abstract: Datasets have gained an enormous amount of popularity in the computer vision community, from training and evaluation of Deep Learning-based methods to benchmarking Simultaneous Localization and Mapping (SLAM). Without a doubt, synthetic imagery bears a vast potential due to scalability in terms of amounts of data obtainable without tedious manual ground truth annotations or measurements. Here, we present a dataset with the aim of providing a higher degree of photo-realism, larger scale, and more variability, as well as serving a wider range of purposes compared to existing datasets. Our dataset leverages the availability of millions of professional interior designs and millions of production-level furniture and object assets, all coming with fine geometric details and high-resolution texture. We render high-resolution and high frame-rate video sequences following realistic trajectories while supporting various camera types as well as providing inertial measurements. Together with the release of the dataset, we will make an executable program of our interactive simulator software as well as our renderer available at https://interiornetdataset.github.io. To showcase the usability and uniqueness of our dataset, we show benchmarking results of both sparse and dense SLAM algorithms.