Simon Boche

OKVIS2-X: Open Keyframe-based Visual-Inertial SLAM Configurable with Dense Depth or LiDAR, and GNSS

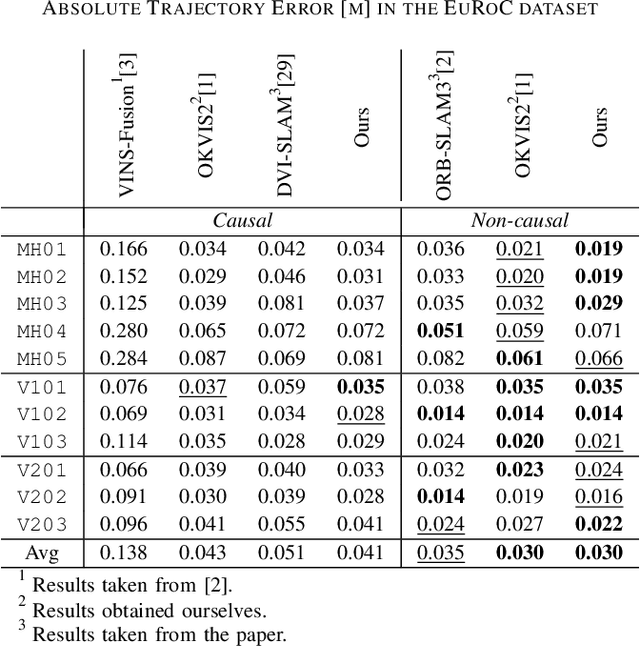

Oct 06, 2025

Abstract:To empower mobile robots with usable maps as well as highest state estimation accuracy and robustness, we present OKVIS2-X: a state-of-the-art multi-sensor Simultaneous Localization and Mapping (SLAM) system building dense volumetric occupancy maps, while scalable to large environments and operating in real time. Our unified SLAM framework seamlessly integrates different sensor modalities: visual, inertial, measured or learned depth, LiDAR and Global Navigation Satellite System (GNSS) measurements. Unlike most state-of-the-art SLAM systems, we advocate using dense volumetric map representations when leveraging depth or range-sensing capabilities. We employ an efficient submapping strategy that allows our system to scale to large environments, showcased in sequences of up to 9 kilometers. OKVIS2-X enhances its accuracy and robustness by tightly coupling the estimator and submaps through map alignment factors. Our system provides globally consistent maps, directly usable for autonomous navigation. To further improve the accuracy of OKVIS2-X, we also incorporate the option of performing online calibration of camera extrinsics. Our system achieves the highest trajectory accuracy in EuRoC against state-of-the-art alternatives, outperforms all competitors in the Hilti22 VI-only benchmark, while also proving competitive in the LiDAR version, and showcases state-of-the-art accuracy in the diverse and large-scale sequences from the VBR dataset.

CoRe-GS: Coarse-to-Refined Gaussian Splatting with Semantic Object Focus

Sep 05, 2025

Abstract:Mobile reconstruction for autonomous aerial robotics holds strong potential for critical applications such as tele-guidance and disaster response. These tasks demand both accurate 3D reconstruction and fast scene processing. Instead of reconstructing the entire scene in detail, it is often more efficient to focus on specific objects, i.e., points of interest (PoIs). Mobile robots equipped with advanced sensing can usually detect these early during data acquisition or preliminary analysis, reducing the need for full-scene optimization. Gaussian Splatting (GS) has recently shown promise in delivering high-quality novel view synthesis and 3D representation by an incremental learning process. Extending GS with scene editing and semantics adds useful per-splat features to isolate objects effectively. Semantic 3D Gaussian editing can already be achieved before the full training cycle is completed, reducing the overall training time. Moreover, the semantically relevant area, the PoI, is usually already known during capturing. To balance high-quality reconstruction with reduced training time, we propose CoRe-GS. We first generate a coarse segmentation-ready scene with semantic GS and then refine it for the semantic object using our novel color-based effective filtering for effective object isolation. This shortens the training process by about a quarter relative to a full training cycle for semantic GS. We evaluate our approach on two datasets, SCRREAM (real-world, outdoor) and NeRDS 360 (synthetic, indoor), showing reduced runtime and higher novel-view-synthesis quality.

FindAnything: Open-Vocabulary and Object-Centric Mapping for Robot Exploration in Any Environment

Apr 11, 2025

Abstract:Geometrically accurate and semantically expressive map representations have proven invaluable to facilitate robust and safe mobile robot navigation and task planning. Nevertheless, real-time, open-vocabulary semantic understanding of large-scale unknown environments is still an open problem. In this paper we present FindAnything, an open-world mapping and exploration framework that incorporates vision-language information into dense volumetric submaps. Thanks to the use of vision-language features, FindAnything bridges the gap between pure geometric and open-vocabulary semantic information for a higher level of understanding while allowing exploration of any environment without the help of any external source of ground-truth pose information. We represent the environment as a series of volumetric occupancy submaps, resulting in a robust and accurate map representation that deforms upon pose updates when the underlying SLAM system corrects its drift, allowing for a locally consistent representation between submaps. Pixel-wise vision-language features are aggregated from efficient SAM (eSAM)-generated segments, which are in turn integrated into object-centric volumetric submaps, providing a mapping from open-vocabulary queries to 3D geometry that is scalable also in terms of memory usage. The open-vocabulary map representation of FindAnything achieves state-of-the-art semantic accuracy in closed-set evaluations on the Replica dataset. This level of scene understanding allows a robot to explore environments based on objects or areas of interest selected via natural language queries. Our system is the first of its kind to be deployed on resource-constrained devices, such as MAVs, leveraging vision-language information for real-world robotic tasks.

REGRACE: A Robust and Efficient Graph-based Re-localization Algorithm using Consistency Evaluation

Mar 05, 2025

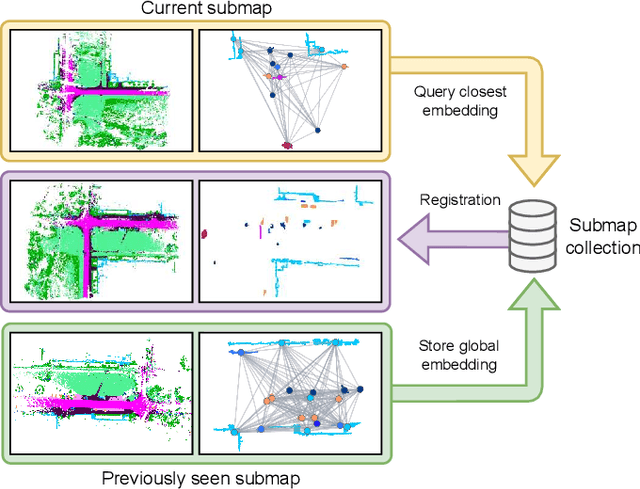

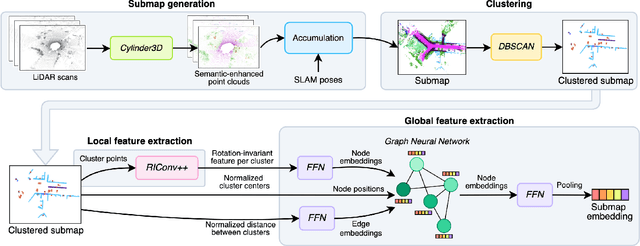

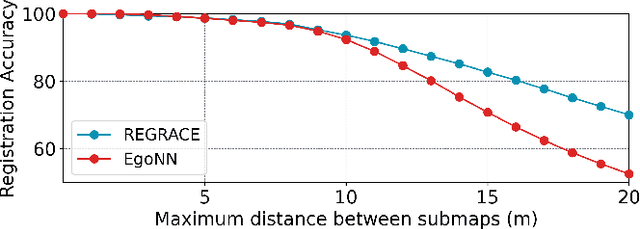

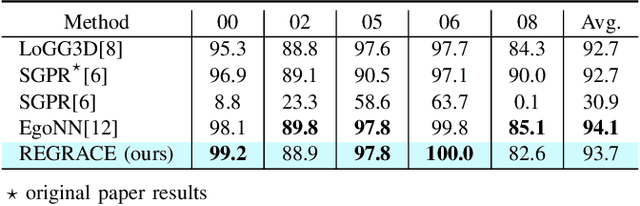

Abstract:Loop closures are essential for correcting odometry drift and creating consistent maps, especially in the context of large-scale navigation. Current methods using dense point clouds for accurate place recognition do not scale well due to computationally expensive scan-to-scan comparisons. Alternative object-centric approaches are more efficient but often struggle with sensitivity to viewpoint variation. In this work, we introduce REGRACE, a novel approach that addresses these challenges of scalability and perspective difference in re-localization by using LiDAR-based submaps. We introduce rotation-invariant features for each labeled object and enhance them with neighborhood context through a graph neural network. To identify potential revisits, we employ a scalable bag-of-words approach, pooling one learned global feature per submap. Additionally, we define a revisit with geometrical consistency cues rather than embedding distance, allowing us to recognize far-away loop closures. Our evaluations demonstrate that REGRACE achieves similar results compared to state-of-the-art place recognition and registration baselines while being twice as fast.

Efficient Submap-based Autonomous MAV Exploration using Visual-Inertial SLAM Configurable for LiDARs or Depth Cameras

Sep 25, 2024

Abstract:Autonomous exploration of unknown space is an essential component for the deployment of mobile robots in the real world. Safe navigation is crucial for all robotics applications and requires accurate and consistent maps of the robot's surroundings. To achieve full autonomy and allow deployment in a wide variety of environments, the robot must rely on on-board state estimation which is prone to drift over time. We propose a Micro Aerial Vehicle (MAV) exploration framework based on local submaps to allow retaining global consistency by applying loop-closure corrections to the relative submap poses. To enable large-scale exploration we efficiently compute global, environment-wide frontiers from the local submap frontiers and use a sampling-based next-best-view exploration planner. Our method seamlessly supports using either a LiDAR sensor or a depth camera, making it suitable for different kinds of MAV platforms. We perform comparative evaluations in simulation against a state-of-the-art submap-based exploration framework to showcase the efficiency and reconstruction quality of our approach. Finally, we demonstrate the applicability of our method to real-world MAVs, one equipped with a LiDAR and the other with a depth camera. Video available at https://youtu.be/Uf5fwmYcuq4 .

Uncertainty-Aware Visual-Inertial SLAM with Volumetric Occupancy Mapping

Sep 18, 2024

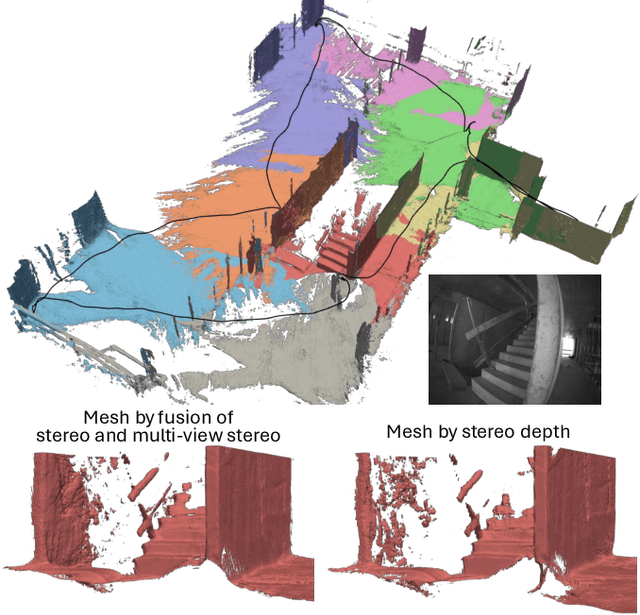

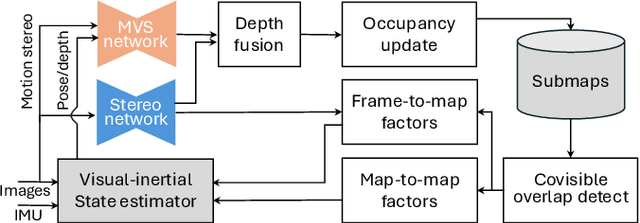

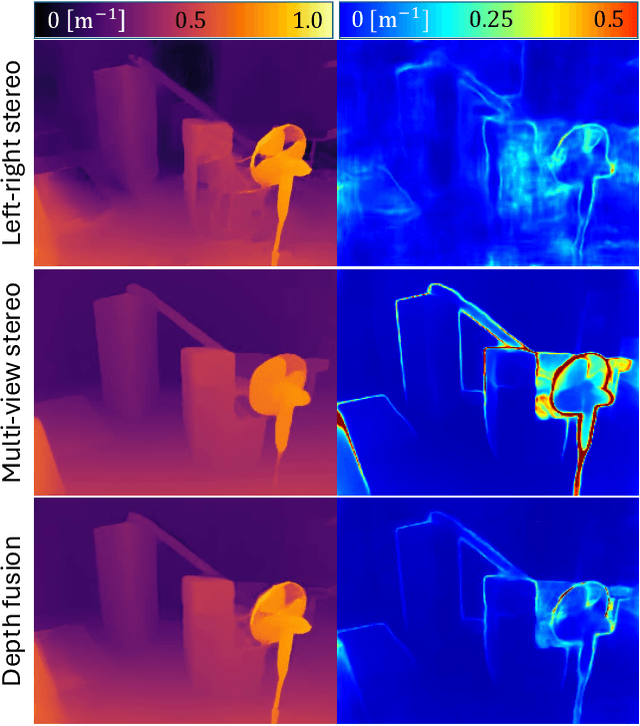

Abstract:We propose visual-inertial simultaneous localization and mapping that tightly couples sparse reprojection errors, inertial measurement unit pre-integrals, and relative pose factors with dense volumetric occupancy mapping. Hereby depth predictions from a deep neural network are fused in a fully probabilistic manner. Specifically, our method is rigorously uncertainty-aware: first, we use depth and uncertainty predictions from a deep network not only from the robot's stereo rig, but we further probabilistically fuse motion stereo that provides depth information across a range of baselines, therefore drastically increasing mapping accuracy. Next, predicted and fused depth uncertainty propagates not only into occupancy probabilities but also into alignment factors between generated dense submaps that enter the probabilistic nonlinear least squares estimator. This submap representation offers globally consistent geometry at scale. Our method is thoroughly evaluated in two benchmark datasets, resulting in localization and mapping accuracy that exceeds the state of the art, while simultaneously offering volumetric occupancy directly usable for downstream robotic planning and control in real-time.

Scalable Autonomous Drone Flight in the Forest with Visual-Inertial SLAM and Dense Submaps Built without LiDAR

Mar 14, 2024

Abstract:Forestry constitutes a key element for a sustainable future, while it is supremely challenging to introduce digital processes to improve efficiency. The main limitation is the difficulty of obtaining accurate maps at high temporal and spatial resolution as a basis for informed forestry decision-making, due to the vast area forests extend over and the sheer number of trees. To address this challenge, we present an autonomous Micro Aerial Vehicle (MAV) system which purely relies on cost-effective and light-weight passive visual and inertial sensors to perform under-canopy autonomous navigation. We leverage visual-inertial simultaneous localization and mapping (VI-SLAM) for accurate MAV state estimates and couple it with a volumetric occupancy submapping system to achieve a scalable mapping framework which can be directly used for path planning. As opposed to a monolithic map, submaps inherently deal with inevitable drift and corrections from VI-SLAM, since they move with pose estimates as they are updated. To ensure the safety of the MAV during navigation, we also propose a novel reference trajectory anchoring scheme that moves and deforms the reference trajectory the MAV is tracking upon state updates from the VI-SLAM system in a consistent way, even upon large changes in state estimates due to loop-closures. We thoroughly validate our system in both real and simulated forest environments with high tree densities in excess of 400 trees per hectare and at speeds up to 3 m/s, while not encountering a single collision or system failure. To the best of our knowledge this is the first system which achieves this level of performance in such an unstructured environment using low-cost passive visual sensors and fully on-board computation including VI-SLAM.

Tightly-Coupled LiDAR-Visual-Inertial SLAM and Large-Scale Volumetric Occupancy Mapping

Mar 04, 2024

Abstract:Autonomous navigation is one of the key requirements for every potential application of mobile robots in the real world. Besides high-accuracy state estimation, a suitable and globally consistent representation of the 3D environment is indispensable. We present a fully tightly-coupled LiDAR-Visual-Inertial SLAM system and 3D mapping framework applying local submapping strategies to achieve scalability to large-scale environments. A novel and correspondence-free, inherently probabilistic, formulation of LiDAR residuals is introduced, expressed only in terms of the occupancy fields and their respective gradients. These residuals can be added to a factor graph optimisation problem, either as frame-to-map factors for the live estimates or as map-to-map factors aligning the submaps with respect to one another. Experimental validation demonstrates that the approach achieves state-of-the-art pose accuracy and furthermore produces globally consistent volumetric occupancy submaps which can be directly used in downstream tasks such as navigation or exploration.

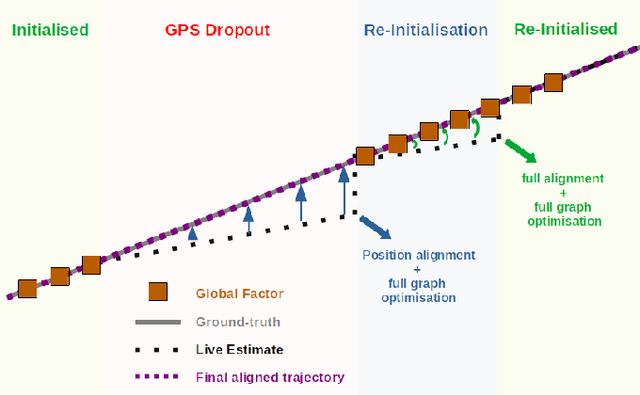

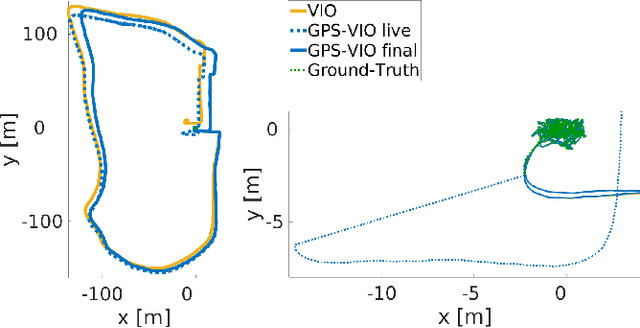

Visual-Inertial SLAM with Tightly-Coupled Dropout-Tolerant GPS Fusion

Aug 01, 2022

Abstract:Robotic applications are continuously striving towards higher levels of autonomy. To achieve that goal, a highly robust and accurate state estimation is indispensable. Combining visual and inertial sensor modalities has proven to yield accurate and locally consistent results in short-term applications. Unfortunately, visual-inertial state estimators suffer from the accumulation of drift for long-term trajectories. To eliminate this drift, global measurements can be fused into the state estimation pipeline. The best-known and most widely available source of global measurements is the Global Positioning System (GPS). In this paper, we propose a novel approach that fully combines stereo Visual-Inertial Simultaneous Localisation and Mapping (SLAM), including visual loop closures, with the fusion of global sensor modalities in a tightly-coupled and optimisation-based framework. Incorporating measurement uncertainties, we provide a robust criterion to solve the global reference frame initialisation problem. Furthermore, we propose a loop-closure-like optimisation scheme to compensate for drift accumulated during outages in receiving GPS signals. Experimental validation on datasets and in a real-world experiment demonstrates the robustness of our approach to GPS dropouts as well as its capability to estimate highly accurate and globally consistent trajectories compared to existing state-of-the-art methods.
