Christopher Choi

BodySLAM++: Fast and Tightly-Coupled Visual-Inertial Camera and Human Motion Tracking

Sep 03, 2023

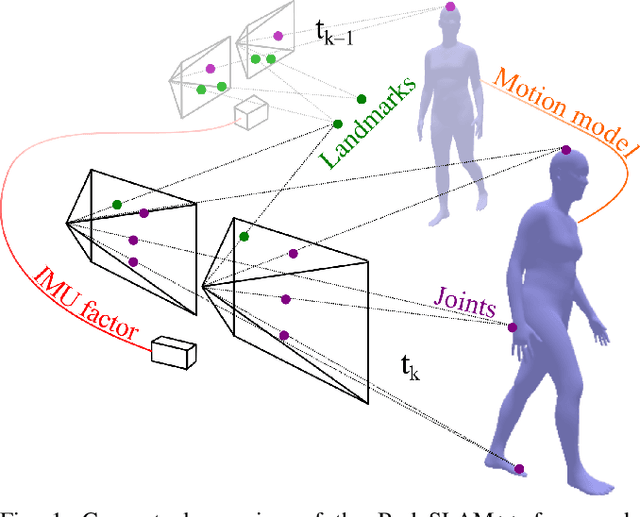

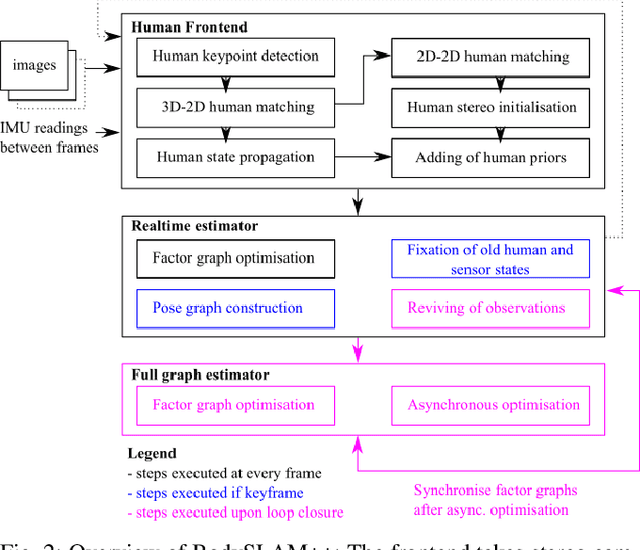

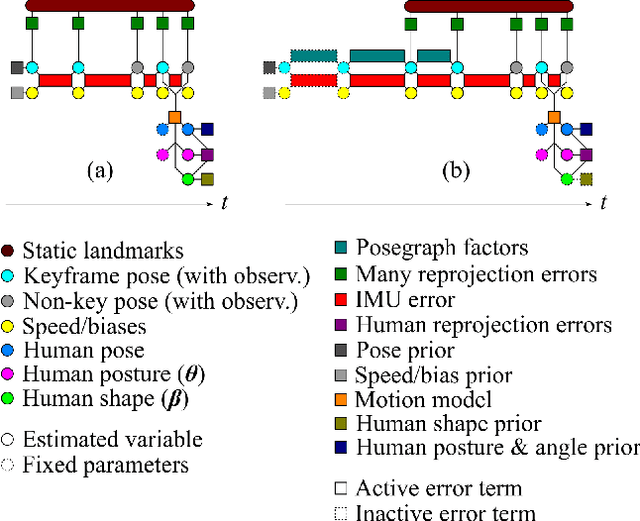

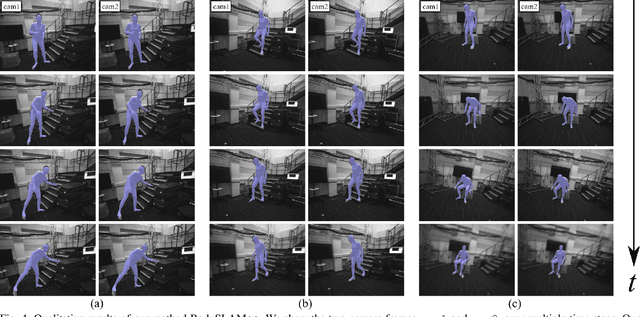

Abstract: Robust, fast, and accurate estimation of the human state (6D pose and posture) remains a challenging problem. For real-world applications, the ability to estimate the human state in real time is highly desirable. In this paper, we present BodySLAM++, a fast, efficient, and accurate human and camera state estimation framework relying on visual-inertial data. BodySLAM++ extends an existing visual-inertial state estimation framework, OKVIS2, to solve the dual task of estimating camera and human states simultaneously. Our system improves the accuracy of both human and camera state estimation with respect to baseline methods by 26% and 12%, respectively, and achieves real-time performance at 15+ frames per second on an Intel i7-model CPU. Experiments were conducted on a custom dataset containing ground-truth human and camera poses collected with an indoor motion tracking system.

Finding Things in the Unknown: Semantic Object-Centric Exploration with an MAV

Mar 03, 2023

Abstract: Exploration of unknown space with an autonomous mobile robot is a well-studied problem. In this work we broaden the scope of exploration, moving beyond the purely geometric goal of uncovering as much free space as possible. We believe that for many practical applications, exploration should be contextualised with semantic and object-level understanding of the environment for task-specific exploration. Here, we study the task of both finding specific objects in unknown space and reconstructing them to a target level of detail. We therefore extend our environment reconstruction to consist not only of a background map, but also of object-level and semantically fused submaps. Importantly, we adapt our previous objective function of uncovering as much free space as possible in as little time as possible with two additional elements. First, we require a maximum observation distance to background surfaces, so that target objects are not missed by image-based detectors because they appear too small to be detected. Second, we require an even smaller maximum distance to the found objects in order to reconstruct them with the desired accuracy. We further created a Micro Aerial Vehicle (MAV) semantic exploration simulator based on Habitat in order to quantitatively demonstrate how our framework can be used to efficiently find specific objects as part of exploration. Finally, we showcase that this capability can be deployed in real-world scenes using our drone equipped with an Intel RealSense D455 RGB-D camera.
