David Doermann

AutoEdit: Automatic Hyperparameter Tuning for Image Editing

Sep 18, 2025

Abstract:Recent advances in diffusion models have revolutionized text-guided image editing, yet existing editing methods face critical challenges in hyperparameter identification. To achieve reasonable editing performance, these methods often require the user to brute-force tune multiple interdependent hyperparameters, such as inversion timesteps and attention modification. This process incurs high computational costs because the hyperparameter search space is huge. We treat the search for optimal editing hyperparameters as a sequential decision-making task within the diffusion denoising process. Specifically, we propose a reinforcement learning framework that establishes a Markov Decision Process to dynamically adjust hyperparameters across denoising steps, integrating editing objectives into a reward function. The method achieves time efficiency through proximal policy optimization while maintaining optimal hyperparameter configurations. Experiments demonstrate a significant reduction in search time and computational overhead compared to existing brute-force approaches, advancing the practical deployment of diffusion-based image editing frameworks in the real world.
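
For readers unfamiliar with proximal policy optimization, the standard clipped surrogate objective is reproduced below as background. This is the generic textbook form, not necessarily the exact loss used in AutoEdit; the symbols (policy $\pi_\theta$, advantage estimate $\hat{A}_t$, clip range $\epsilon$) are the usual ones and are assumptions here, since the abstract does not define them.

```latex
% Probability ratio between the updated and the previous policy
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)}

% Clipped surrogate objective (to be maximized)
L^{\mathrm{CLIP}}(\theta) =
  \mathbb{E}_t\!\left[
    \min\!\Big(
      r_t(\theta)\,\hat{A}_t,\;
      \operatorname{clip}\big(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\big)\,\hat{A}_t
    \Big)
  \right]
```

In a setting like the one the abstract describes, the state $s_t$ would plausibly correspond to a denoising step and the action $a_t$ to a hyperparameter choice, but that mapping is an interpretation rather than something the abstract spells out.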

On Measuring Intrinsic Causal Attributions in Deep Neural Networks

May 14, 2025

Abstract:Quantifying the causal influence of input features within neural networks has become a topic of increasing interest. Existing approaches typically assess direct, indirect, and total causal effects. This work treats NNs as structural causal models (SCMs) and extends our focus to include intrinsic causal contributions (ICC). We propose an identifiable generative post-hoc framework for quantifying ICC. We also draw a relationship between ICC and Sobol' indices. Our experiments on synthetic and real-world datasets demonstrate that ICC generates more intuitive and reliable explanations compared to existing global explanation techniques.
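
As background for the Sobol' indices mentioned above, the standard variance-based definitions are sketched below for a model output $Y = f(X_1, \dots, X_d)$ with independent inputs. These are the general sensitivity-analysis definitions, not the paper's ICC formulation; the notation $X_{\sim i}$ (all inputs except $X_i$) is an assumption introduced here.

```latex
% First-order Sobol' index: share of output variance explained by X_i alone
S_i = \frac{\operatorname{Var}\!\big(\mathbb{E}[\,Y \mid X_i\,]\big)}{\operatorname{Var}(Y)}

% Total-order Sobol' index: share of variance involving X_i, including interactions
S_{T_i} = \frac{\mathbb{E}\!\big[\operatorname{Var}(Y \mid X_{\sim i})\big]}{\operatorname{Var}(Y)}
        = 1 - \frac{\operatorname{Var}\!\big(\mathbb{E}[\,Y \mid X_{\sim i}\,]\big)}{\operatorname{Var}(Y)}
```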

YOLOv12: Attention-Centric Real-Time Object Detectors

Feb 18, 2025

Abstract:Enhancing the network architecture of the YOLO framework has been crucial for a long time, but has focused on CNN-based improvements despite the proven superiority of attention mechanisms in modeling capabilities. This is because attention-based models cannot match the speed of CNN-based models. This paper proposes an attention-centric YOLO framework, namely YOLOv12, that matches the speed of previous CNN-based ones while harnessing the performance benefits of attention mechanisms. YOLOv12 surpasses all popular real-time object detectors in accuracy with competitive speed. For example, YOLOv12-N achieves 40.6% mAP with an inference latency of 1.64 ms on a T4 GPU, outperforming advanced YOLOv10-N / YOLOv11-N by 2.1%/1.2% mAP with a comparable speed. This advantage extends to other model scales. YOLOv12 also surpasses end-to-end real-time detectors that improve DETR, such as RT-DETR / RT-DETRv2: YOLOv12-S beats RT-DETR-R18 / RT-DETRv2-R18 while running 42% faster, using only 36% of the computation and 45% of the parameters. More comparisons are shown in Figure 1.

Personalized Large Vision-Language Models

Dec 23, 2024

Abstract:Model personalization has gained significant attention in image generation yet remains underexplored for large vision-language models (LVLMs). With personalization, LVLMs can go beyond generic models and handle interactive dialogues using referential concepts (e.g., "Mike and Susan are talking.") instead of the generic form (e.g., "a boy and a girl are talking."), making the conversation more customizable and referentially friendly. In addition, PLVM can continuously add new concepts during a dialogue without incurring additional costs, which significantly enhances its practicality. PLVM proposes Aligner, a pre-trained visual encoder that aligns referential concepts with the queried images. During a dialogue, it extracts features of the reference images associated with these concepts and recognizes them in the queried image, enabling personalization. We note that the computational cost and parameter count of the Aligner are negligible within the entire framework. With comprehensive qualitative and quantitative analyses, we reveal the effectiveness and superiority of PLVM.

ETLNet: An Efficient TCN-BiLSTM Network for Road Anomaly Detection Using Smartphone Sensors

Dec 06, 2024

Abstract:Road anomalies can be defined as irregularities on the road surface or in the surface itself. Some are intentional (such as speed bumps), some accidental (such as materials falling off a truck), and some the result of excessive use or of little or no maintenance (such as potholes). Despite their varying origins, these irregularities often harm vehicles substantially. Speed bumps are intentionally placed for safety but are dangerous due to their non-standard shape, size, and lack of proper markings. Potholes are unintentional and can also cause severe damage. To address the detection of these anomalies, we need an automated road monitoring system. Today, various systems exist that use visual information to track these anomalies. Still, due to poor lighting conditions and improper or missing markings, anomalies may go undetected, with severe consequences for public transport, automated vehicles, etc. In this paper, the Enhanced Temporal-BiLSTM Network (ETLNet) is introduced as a novel approach that integrates two Temporal Convolutional Network (TCN) layers with a Bidirectional Long Short-Term Memory (BiLSTM) layer. This combination is tailored to detect anomalies effectively irrespective of lighting conditions, as it depends not on visuals but on smartphone inertial sensor data. Our methodology employs accelerometer and gyroscope sensors, typically found in smartphones, to gather data on road conditions. Empirical evaluations demonstrate that the ETLNet model achieves an F1-score of 99.3% for detecting speed bumps. The ETLNet model's robustness and efficiency significantly advance automated road surface monitoring technologies.
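
For context on the F1-score reported above, the following is a minimal sketch of how the metric is computed from detection counts. The function name and the example counts are hypothetical and chosen only for illustration; they are not taken from the ETLNet evaluation.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall from raw detection counts.

    tp: true positives (e.g. speed bumps correctly detected)
    fp: false positives (detections where no anomaly exists)
    fn: false negatives (anomalies that were missed)
    """
    # Guard against division by zero when there are no predictions or no positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Hypothetical counts, not from the paper: 8 hits, 2 false alarms, 2 misses.
example_f1 = f1_score(tp=8, fp=2, fn=2)
print(f"F1 = {example_f1:.3f}")
```

Because F1 combines precision and recall, a high value requires both few false alarms and few missed anomalies.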

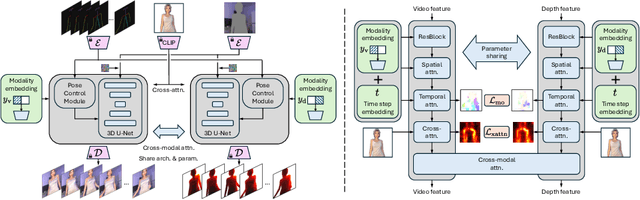

IDOL: Unified Dual-Modal Latent Diffusion for Human-Centric Joint Video-Depth Generation

Jul 15, 2024

Abstract:Significant advances have been made in human-centric video generation, yet the joint video-depth generation problem remains underexplored. Most existing monocular depth estimation methods may not generalize well to synthesized images or videos, and multi-view-based methods have difficulty controlling the human appearance and motion. In this work, we present IDOL (unIfied Dual-mOdal Latent diffusion) for high-quality human-centric joint video-depth generation. Our IDOL consists of two novel designs. First, to enable dual-modal generation and maximize the information exchange between video and depth generation, we propose a unified dual-modal U-Net, a parameter-sharing framework for joint video and depth denoising, wherein a modality label guides the denoising target, and cross-modal attention enables the mutual information flow. Second, to ensure a precise video-depth spatial alignment, we propose a motion consistency loss that enforces consistency between the video and depth feature motion fields, leading to harmonized outputs. Additionally, a cross-attention map consistency loss is applied to align the cross-attention map of the video denoising with that of the depth denoising, further facilitating spatial alignment. Extensive experiments on the TikTok and NTU120 datasets show our superior performance, significantly surpassing existing methods in terms of video FVD and depth accuracy.

ClawMachine: Fetching Visual Tokens as An Entity for Referring and Grounding

Jun 17, 2024

Abstract:An essential topic for multimodal large language models (MLLMs) is aligning vision and language concepts at a finer level. In particular, we devote efforts to encoding visual referential information for tasks such as referring and grounding. Existing methods, including proxy encoding and geometry encoding, incorporate additional syntax to encode the object's location, bringing extra burdens in training MLLMs to communicate between language and vision. This study presents ClawMachine, offering a new methodology that notates an entity directly using the visual tokens. It allows us to unify the prompt and answer of visual referential tasks without additional syntax. Upon a joint vision-language vocabulary, ClawMachine unifies visual referring and grounding into an auto-regressive format and learns with a decoder-only architecture. Experiments validate that our model achieves competitive performance across visual referring and grounding tasks with a reduced demand for training data. Additionally, ClawMachine demonstrates a native ability to integrate multi-source information for complex visual reasoning, which prior MLLMs can hardly perform without specific adaptations.

Motion Consistency Model: Accelerating Video Diffusion with Disentangled Motion-Appearance Distillation

Jun 11, 2024

Abstract:Image diffusion distillation achieves high-fidelity generation with very few sampling steps. However, applying these techniques directly to video diffusion often results in unsatisfactory frame quality due to the limited visual quality in public video datasets. This affects the performance of both teacher and student video diffusion models. Our study aims to improve video diffusion distillation while improving frame appearance using abundant high-quality image data. We propose the motion consistency model (MCM), a single-stage video diffusion distillation method that disentangles motion and appearance learning. Specifically, MCM includes a video consistency model that distills motion from the video teacher model, and an image discriminator that enhances frame appearance to match high-quality image data. This combination presents two challenges: (1) conflicting frame learning objectives, as video distillation learns from low-quality video frames while the image discriminator targets high-quality images; and (2) training-inference discrepancies due to the differing quality of video samples used during training and inference. To address these challenges, we introduce disentangled motion distillation and mixed trajectory distillation. The former applies the distillation objective solely to the motion representation, while the latter mitigates training-inference discrepancies by mixing distillation trajectories from both the low- and high-quality video domains. Extensive experiments show that our MCM achieves state-of-the-art video diffusion distillation performance. Additionally, our method can enhance frame quality in video diffusion models, producing frames with high aesthetic scores or specific styles without corresponding video data.

Artemis: Towards Referential Understanding in Complex Videos

Jun 01, 2024

Abstract:Videos carry rich visual information including object description, action, interaction, etc., but existing multimodal large language models (MLLMs) fall short in referential understanding scenarios such as video-based referring. In this paper, we present Artemis, an MLLM that pushes video-based referential understanding to a finer level. Given a video, Artemis receives a natural-language question with a bounding box in any video frame and describes the referred target in the entire video. The key to achieving this goal lies in extracting compact, target-specific video features, where we set a solid baseline by tracking and selecting spatiotemporal features from the video. We train Artemis on the newly established VideoRef45K dataset with 45K video-QA pairs and design a computationally efficient, three-stage training procedure. Results are promising both quantitatively and qualitatively. Additionally, we show that Artemis can be integrated with video grounding and text summarization tools to understand more complex scenarios. Code and data are available at https://github.com/qiujihao19/Artemis.

ChartReformer: Natural Language-Driven Chart Image Editing

Mar 01, 2024

Abstract:Chart visualizations are essential for data interpretation and communication; however, most charts are only accessible in image format and lack the corresponding data tables and supplementary information, making it difficult to alter their appearance for different application scenarios. To eliminate the need for original underlying data and information to perform chart editing, we propose ChartReformer, a natural language-driven chart image editing solution that directly edits the charts from the input images with the given instruction prompts. The key to this method is that we allow the model to comprehend the chart and reason over the prompt to generate the corresponding underlying data table and visual attributes for new charts, enabling precise edits. Additionally, to generalize ChartReformer, we define and standardize various types of chart editing, covering style, layout, format, and data-centric edits. The experiments show promising results for natural language-driven chart image editing.
