Dava Newman

GEO-Bench: Toward Foundation Models for Earth Monitoring

Jun 06, 2023

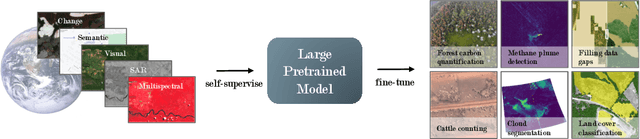

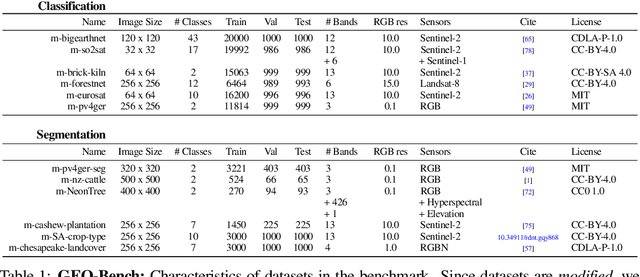

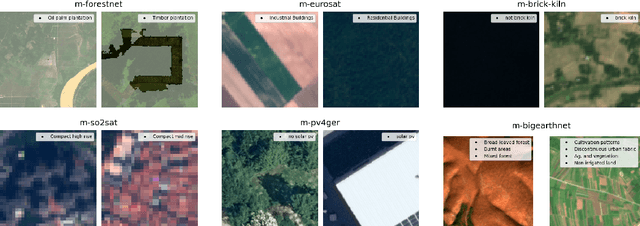

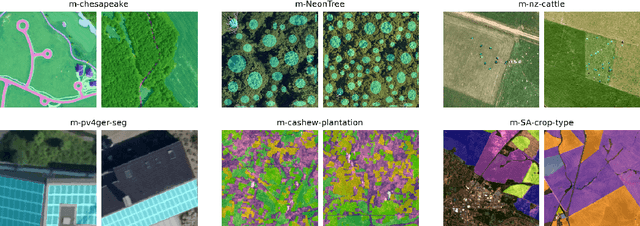

Abstract: Recent progress in self-supervision has shown that pre-training large neural networks on vast amounts of unsupervised data can lead to substantial increases in generalization to downstream tasks. Such models, recently coined foundation models, have been transformational to the field of natural language processing. Variants have also been proposed for image data, but their applicability to remote sensing tasks is limited. To stimulate the development of foundation models for Earth monitoring, we propose a benchmark comprised of six classification and six segmentation tasks, which were carefully curated and adapted to be both relevant to the field and well-suited for model evaluation. We accompany this benchmark with a robust methodology for evaluating models and reporting aggregated results to enable a reliable assessment of progress. Finally, we report results for 20 baselines to gain information about the performance of existing models. We believe that this benchmark will be a driver of progress across a variety of Earth monitoring tasks.

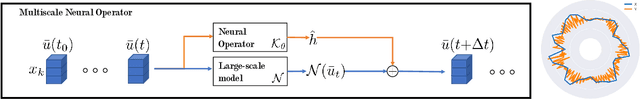

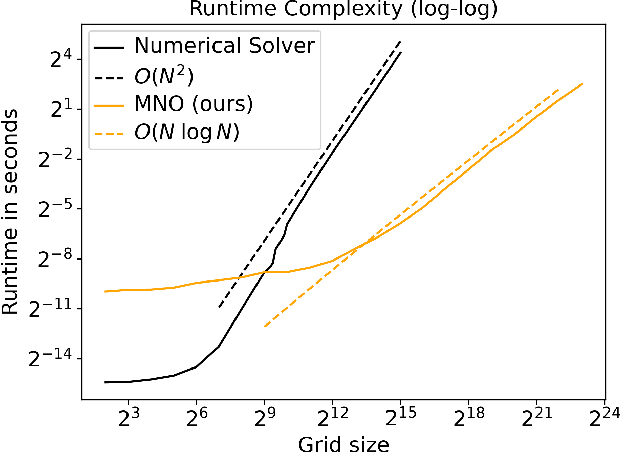

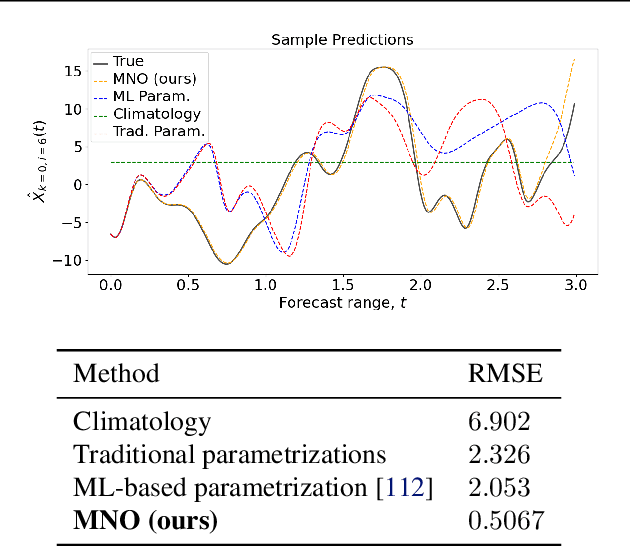

Multiscale Neural Operator: Learning Fast and Grid-independent PDE Solvers

Jul 23, 2022

Abstract: Numerical simulations in climate, chemistry, or astrophysics are computationally too expensive for uncertainty quantification or parameter exploration at high resolution. Reduced-order or surrogate models are multiple orders of magnitude faster, but traditional surrogates are inflexible or inaccurate, and pure machine learning (ML)-based surrogates are too data-hungry. We propose a hybrid, flexible surrogate model that exploits known physics for simulating large-scale dynamics and limits learning to the hard-to-model term, which is called parametrization or closure and captures the effect of fine- onto large-scale dynamics. Leveraging neural operators, we are the first to learn grid-independent, non-local, and flexible parametrizations. Our multiscale neural operator is motivated by a rich literature in multiscale modeling, has quasilinear runtime complexity, is more accurate or flexible than state-of-the-art parametrizations, and is demonstrated on the chaotic multiscale Lorenz96 equation.
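To make the "large-scale dynamics plus closure term" idea concrete, here is a minimal sketch of the widely used two-level Lorenz96 system, in which the sum over fast variables plays the role of the term a parametrization would replace. This is an illustration under assumptions, not the paper's code: the function names, the parameter defaults (`F`, `h`, `b`, `c`), and the choice of the two-level form are all assumed, and the configuration used in the paper may differ.

```python
import numpy as np

def l96_two_scale_tendency(X, Y, F=10.0, h=1.0, b=10.0, c=10.0):
    """Time derivatives of the two-level Lorenz96 system (assumed form).

    X: K slow (large-scale) variables on a periodic ring.
    Y: K*J fast (fine-scale) variables, stored as one periodic ring.
    """
    K = X.size
    J = Y.size // K
    # Effect of the fine scale on the large scale: the term a learned
    # parametrization (closure) would have to approximate from X alone.
    coupling = (h * c / b) * Y.reshape(K, J).sum(axis=1)
    dX = np.roll(X, 1) * (np.roll(X, -1) - np.roll(X, 2)) - X + F - coupling
    dY = (-c * b * np.roll(Y, -1) * (np.roll(Y, -2) - np.roll(Y, 1))
          - c * Y + (h * c / b) * np.repeat(X, J))
    return dX, dY

def rk4_step(X, Y, dt=0.001, **params):
    """One classical fourth-order Runge-Kutta step of the coupled system."""
    k1x, k1y = l96_two_scale_tendency(X, Y, **params)
    k2x, k2y = l96_two_scale_tendency(X + 0.5 * dt * k1x, Y + 0.5 * dt * k1y, **params)
    k3x, k3y = l96_two_scale_tendency(X + 0.5 * dt * k2x, Y + 0.5 * dt * k2y, **params)
    k4x, k4y = l96_two_scale_tendency(X + dt * k3x, Y + dt * k3y, **params)
    X_new = X + dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
    Y_new = Y + dt / 6.0 * (k1y + 2 * k2y + 2 * k3y + k4y)
    return X_new, Y_new
```

A hybrid surrogate in the spirit of the abstract would keep the known large-scale terms in `dX` and swap `coupling` for a learned function of `X`, so the fast variables no longer need to be integrated.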

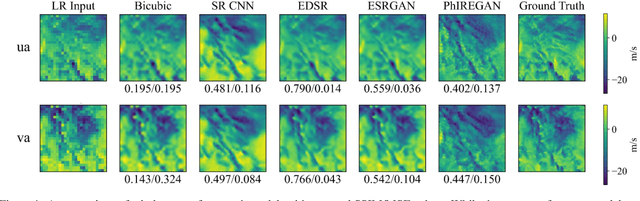

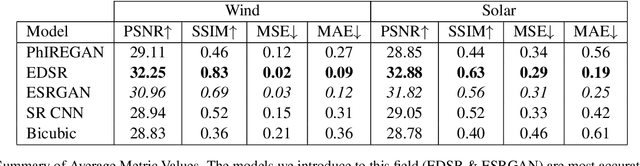

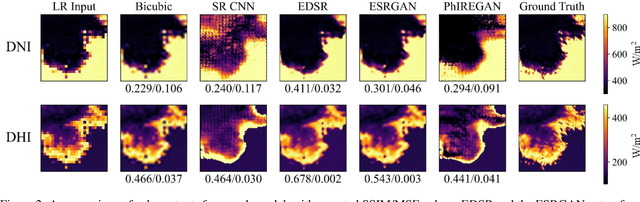

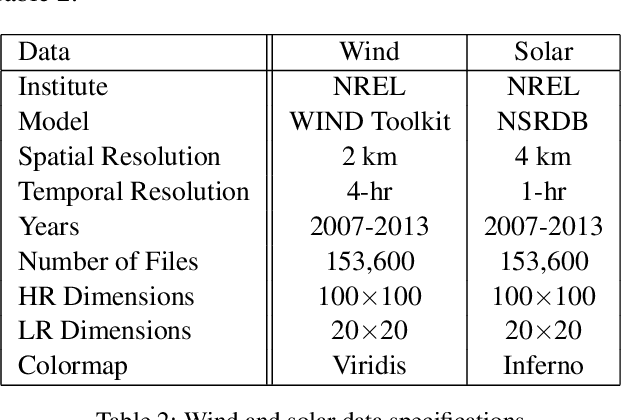

WiSoSuper: Benchmarking Super-Resolution Methods on Wind and Solar Data

Sep 23, 2021

Abstract: The transition to green energy grids depends on detailed wind and solar forecasts to optimize the siting and scheduling of renewable energy generation. Operational forecasts from numerical weather prediction models, however, only have a spatial resolution of 10 to 20 km, which leads to sub-optimal usage and development of renewable energy farms. Weather scientists have been developing super-resolution methods to increase the resolution, but often rely on simple interpolation techniques or computationally expensive differential equation-based models. Recently, machine learning-based models, specifically the physics-informed resolution-enhancing generative adversarial network (PhIREGAN), have outperformed traditional downscaling methods. We provide a thorough and extensible benchmark of leading deep learning-based super-resolution techniques, including the enhanced super-resolution generative adversarial network (ESRGAN) and an enhanced deep super-resolution (EDSR) network, on wind and solar data. We accompany the benchmark with a novel public, processed, and machine learning-ready dataset for benchmarking super-resolution methods on wind and solar data.
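As a rough illustration of what a "simple interpolation" baseline looks like in a super-resolution benchmark, the sketch below coarsens a 2-D field by block averaging, upsamples it again with nearest-neighbor replication, and scores the result with mean squared error. All names here are hypothetical and the setup is an assumption for illustration only; it is not the benchmark's code, data pipeline, or metric suite.

```python
import numpy as np

def coarsen(field, factor):
    """Block-average a 2-D field; both dimensions must be divisible by factor."""
    h, w = field.shape
    return field.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def upsample_nearest(field, factor):
    """Nearest-neighbor upsampling: repeat every pixel factor x factor times."""
    return np.kron(field, np.ones((factor, factor)))

def mse(a, b):
    """Mean squared error between two arrays of equal shape."""
    return float(np.mean((a - b) ** 2))

# Synthetic stand-in for a high-resolution wind or solar field.
rng = np.random.default_rng(0)
high_res = rng.normal(size=(64, 64))
low_res = coarsen(high_res, factor=4)
baseline = upsample_nearest(low_res, factor=4)
baseline_error = mse(baseline, high_res)
```

A learned model would be evaluated the same way: feed it `low_res`, compare its output with `high_res`, and report the error next to `baseline_error`.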

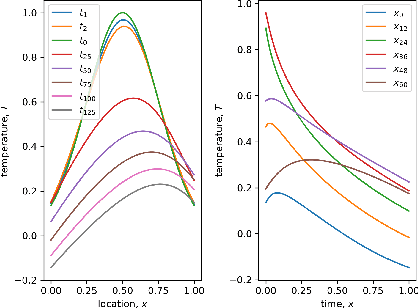

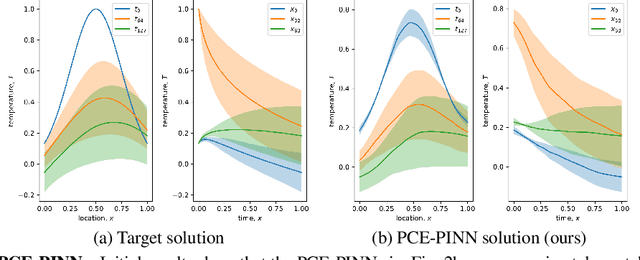

PCE-PINNs: Physics-Informed Neural Networks for Uncertainty Propagation in Ocean Modeling

May 05, 2021

Abstract: Climate models project an uncertainty range of possible warming scenarios from 1.5 to 5 degrees Celsius global temperature increase until 2100, according to the CMIP6 model ensemble. Climate risk management and infrastructure adaptation require the accurate quantification of the uncertainties at the local level. Ensembles of high-resolution climate models could accurately quantify the uncertainties, but most physics-based climate models are computationally too expensive to run as ensembles. Recent works in physics-informed neural networks (PINNs) have combined deep learning and the physical sciences to learn up to 15k times faster copies of climate submodels. However, the application of PINNs in climate modeling has so far been mostly limited to deterministic models. We leverage a novel method that combines polynomial chaos expansion (PCE), a classic technique for uncertainty propagation, with PINNs. The PCE-PINNs learn a fast surrogate model that is demonstrated for uncertainty propagation of known parameter uncertainties. We showcase the effectiveness in ocean modeling by using the local advection-diffusion equation.
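The polynomial chaos expansion mentioned above can be sketched on a toy problem with a single uncertain parameter. The example assumes a standard normal input and a scalar model, and it computes the expansion coefficients by Gauss-Hermite quadrature rather than with a neural network as in the paper; the function names and the toy model are hypothetical.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as He

def pce_coefficients(model, order, n_quad=20):
    """Project model(xi), xi ~ N(0, 1), onto probabilists' Hermite polynomials."""
    nodes, weights = He.hermegauss(n_quad)
    weights = weights / np.sqrt(2.0 * np.pi)  # weights now sum to 1 (normal pdf)
    values = model(nodes)
    coeffs = np.empty(order + 1)
    for n in range(order + 1):
        basis = He.hermeval(nodes, [0.0] * n + [1.0])  # He_n at the nodes
        # E[He_n^2] = n!, so divide the projection by n!.
        coeffs[n] = np.sum(weights * values * basis) / math.factorial(n)
    return coeffs

def pce_mean_and_variance(coeffs):
    """Mean and variance of the output, read directly from the coefficients."""
    norms = np.array([math.factorial(n) for n in range(len(coeffs))], dtype=float)
    mean = coeffs[0]
    variance = np.sum(coeffs[1:] ** 2 * norms[1:])
    return mean, variance

def toy_model(xi):
    """Toy response to an uncertain parameter (illustrative only)."""
    return np.exp(0.3 * xi)

coeffs = pce_coefficients(toy_model, order=6)
mean, variance = pce_mean_and_variance(coeffs)
```

Once the coefficients are known, output statistics follow without further model runs, which is what makes the expansion attractive when each run of the underlying physics model is expensive.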

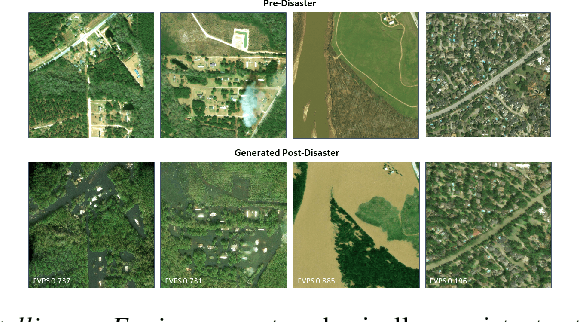

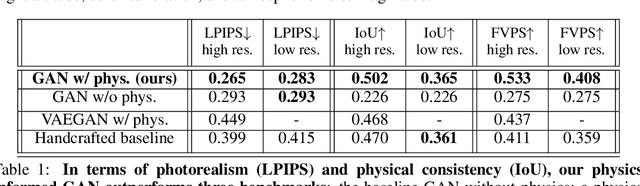

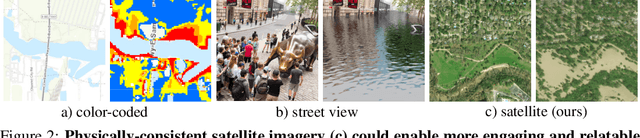

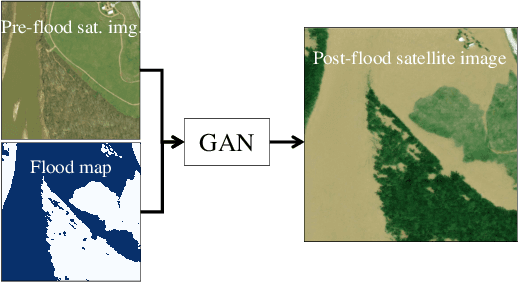

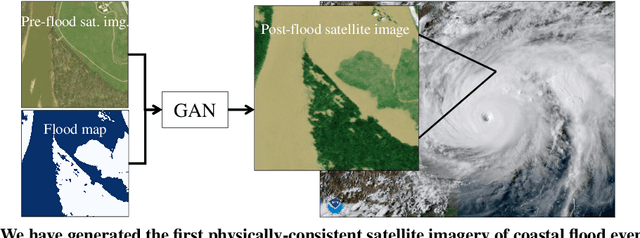

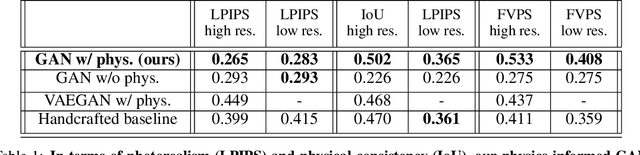

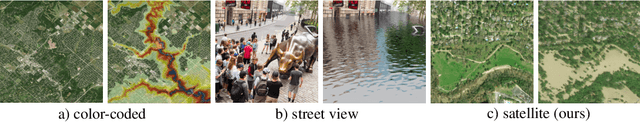

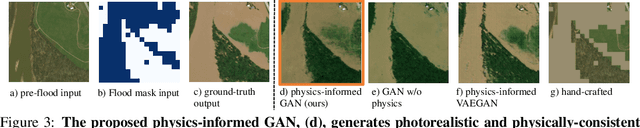

Physically-Consistent Generative Adversarial Networks for Coastal Flood Visualization

May 05, 2021

Abstract: As climate change increases the intensity of natural disasters, society needs better tools for adaptation. Floods, for example, are the most frequent natural disaster, and better tools for flood risk communication could increase the support for flood-resilient infrastructure development. Our work aims to enable more visual communication of large-scale climate impacts via visualizing the output of coastal flood models as satellite imagery. We propose the first deep learning pipeline to ensure physical consistency in synthetic visual satellite imagery. We advanced a state-of-the-art GAN called pix2pixHD, such that it produces imagery that is physically consistent with the output of an expert-validated storm surge model (NOAA SLOSH). By evaluating the imagery relative to physics-based flood maps, we find that our proposed framework outperforms baseline models in both physical consistency and photorealism. We envision our work to be the first step towards a global visualization of how climate change shapes our landscape. Continuing on this path, we show that the proposed pipeline generalizes to visualize Arctic sea ice melt. We also publish a dataset of over 25k labelled image pairs to study image-to-image translation in Earth observation.

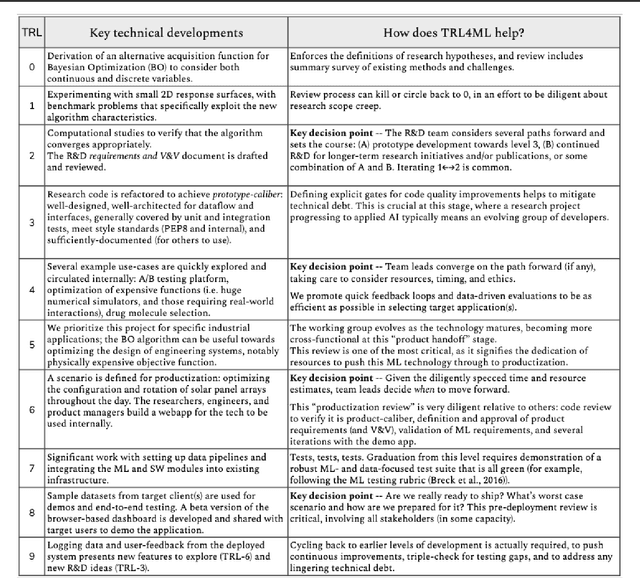

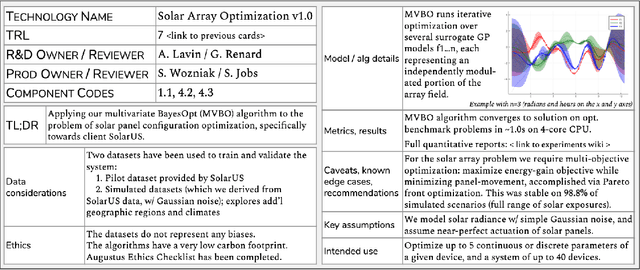

Technology Readiness Levels for Machine Learning Systems

Jan 11, 2021

Abstract: The development and deployment of machine learning (ML) systems can be executed easily with modern tools, but the process is typically rushed and means-to-an-end. The lack of diligence can lead to technical debt, scope creep and misaligned objectives, model misuse and failures, and expensive consequences. Engineering systems, on the other hand, follow well-defined processes and testing standards to streamline development for high-quality, reliable results. The extreme is spacecraft systems, where mission critical measures and robustness are ingrained in the development process. Drawing on experience in both spacecraft engineering and ML (from research through product across domain areas), we have developed a proven systems engineering approach for machine learning development and deployment. Our "Machine Learning Technology Readiness Levels" (MLTRL) framework defines a principled process to ensure robust, reliable, and responsible systems while being streamlined for ML workflows, including key distinctions from traditional software engineering. Even more, MLTRL defines a lingua franca for people across teams and organizations to work collaboratively on artificial intelligence and machine learning technologies. Here we describe the framework and elucidate it with several real world use-cases of developing ML methods from basic research through productization and deployment, in areas such as medical diagnostics, consumer computer vision, satellite imagery, and particle physics.

Physics-informed GANs for Coastal Flood Visualization

Oct 16, 2020

Abstract: As climate change increases the intensity of natural disasters, society needs better tools for adaptation. Floods, for example, are the most frequent natural disaster, but during hurricanes the area is largely covered by clouds and emergency managers must rely on nonintuitive flood visualizations for mission planning. To assist these emergency managers, we have created a deep learning pipeline that generates visual satellite images of current and future coastal flooding. We advanced a state-of-the-art GAN called pix2pixHD, such that it produces imagery that is physically consistent with the output of an expert-validated storm surge model (NOAA SLOSH). By evaluating the imagery relative to physics-based flood maps, we find that our proposed framework outperforms baseline models in both physical consistency and photorealism. While this work focused on the visualization of coastal floods, we envision the creation of a global visualization of how climate change will shape our Earth.
