Mark Veillette

Developing a Series of AI Challenges for the United States Department of the Air Force

Jul 14, 2022

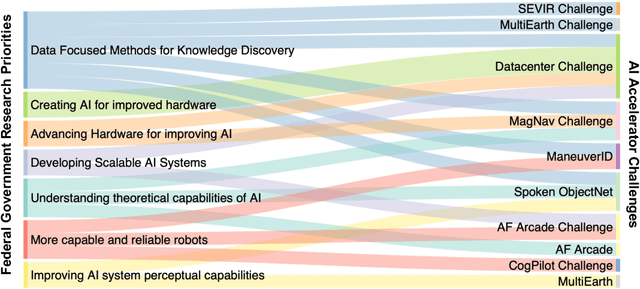

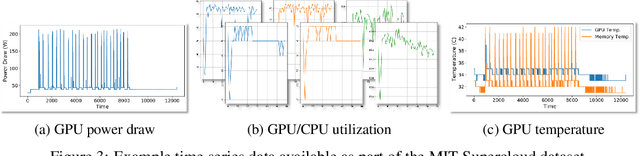

Abstract: Through a series of federal initiatives and orders, the U.S. Government has been making a concerted effort to ensure American leadership in AI. These broad strategy documents have influenced organizations such as the United States Department of the Air Force (DAF). The DAF-MIT AI Accelerator is an initiative between the DAF and MIT to bridge the gap between AI researchers and DAF mission requirements. Several projects supported by the DAF-MIT AI Accelerator are developing public challenge problems that address numerous Federal AI research priorities. These challenges target those priorities by making large, AI-ready datasets publicly available, incentivizing open-source solutions, and creating a demand signal for dual-use technologies that can stimulate further research. In this article, we describe the public challenges being developed and how their application contributes to scientific advances.

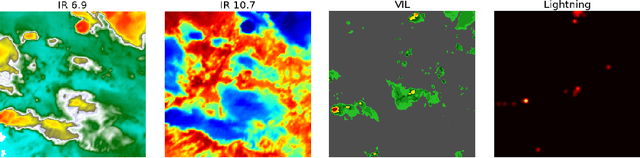

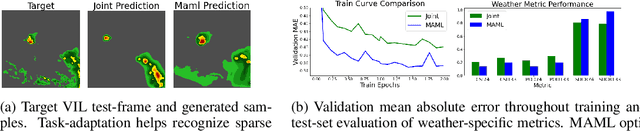

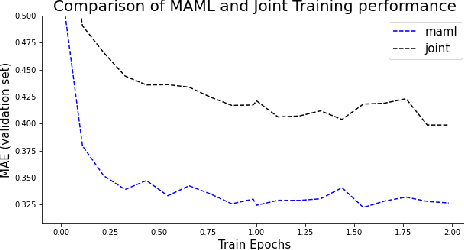

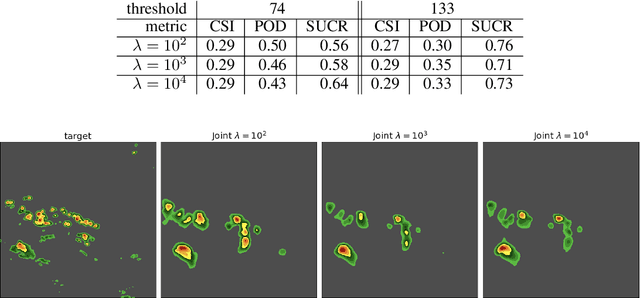

Meta-Learning and Self-Supervised Pretraining for Real World Image Translation

Dec 22, 2021

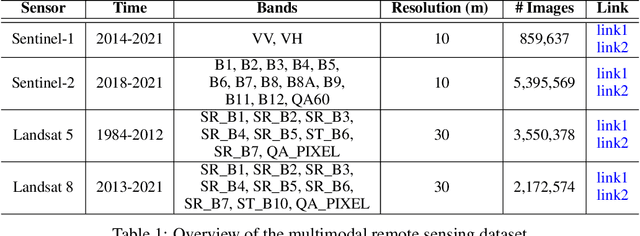

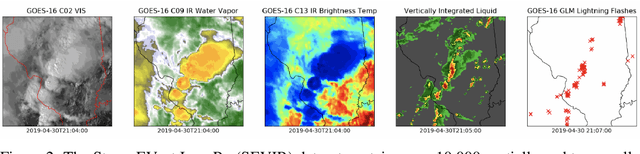

Abstract: Recent advances in deep learning, enabled in particular by hardware advances and big data, have produced impressive results across a wide range of computational problems such as computer vision, natural language, and reinforcement learning. Many of these improvements are, however, constrained to problems with large-scale curated datasets that require a lot of human labor to gather. Additionally, these models tend to generalize poorly under both slight distributional shifts and low-data regimes. In recent years, emerging fields such as meta-learning and self-supervised learning have been closing the gap between proof-of-concept results and real-life applications of machine learning by extending deep learning to the semi-supervised and few-shot domains. We follow this line of work and explore spatio-temporal structure in a recently introduced image-to-image translation problem in order to: i) formulate a novel multi-task few-shot image generation benchmark and ii) explore data augmentations in contrastive pre-training for downstream image translation tasks. We present several baselines for the few-shot problem and discuss trade-offs between different approaches. Our code is available at https://github.com/irugina/meta-image-translation.
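For readers unfamiliar with contrastive pre-training, the following is a minimal sketch of a generic InfoNCE-style loss over two augmented views of the same batch. It is not taken from the authors' repository; the function name `info_nce_loss`, the temperature value, and the use of plain NumPy arrays as stand-ins for encoder outputs are all assumptions made for illustration.

```python
import numpy as np


def info_nce_loss(z_a, z_b, temperature=0.1):
    """Generic InfoNCE loss (illustrative, not the paper's implementation).

    z_a, z_b: arrays of shape (N, D) holding embeddings of two augmented
    views; row i of z_a and row i of z_b form a positive pair, and every
    other row of z_b acts as a negative for row i of z_a.
    """
    # L2-normalize so the dot product is a cosine similarity.
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)

    # Pairwise similarities scaled by the temperature, shape (N, N).
    logits = z_a @ z_b.T / temperature

    # Numerically stable log-softmax over each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # Cross-entropy with the diagonal (the positive pair) as the target.
    return -np.mean(np.diag(log_prob))


rng = np.random.default_rng(0)
z = rng.normal(size=(8, 16))                       # hypothetical embeddings
z_aug = z + 0.01 * rng.normal(size=(8, 16))        # a lightly perturbed "view"
z_unrelated = rng.normal(size=(8, 16))             # embeddings of other inputs

loss_aligned = info_nce_loss(z, z_aug)
loss_unrelated = info_nce_loss(z, z_unrelated)
```

In this toy setup the loss is lower when the two views agree than when they are unrelated, which is the signal a contrastive objective uses to shape the encoder. The choice of augmentations decides what counts as "the same" input, which is the aspect the abstract says the authors explore.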

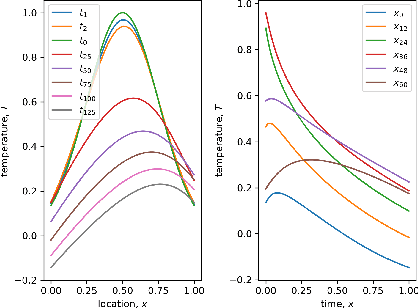

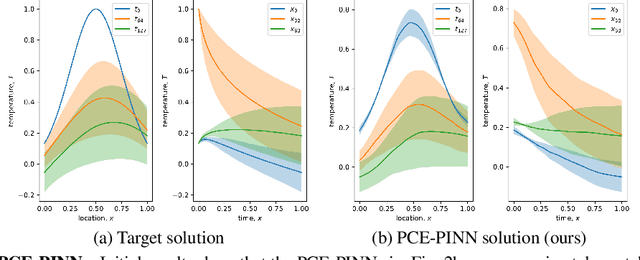

PCE-PINNs: Physics-Informed Neural Networks for Uncertainty Propagation in Ocean Modeling

May 05, 2021

Abstract: Climate models project an uncertainty range of possible warming scenarios from 1.5 to 5 degrees Celsius of global temperature increase by 2100, according to the CMIP6 model ensemble. Climate risk management and infrastructure adaptation require accurate quantification of the uncertainties at the local level. Ensembles of high-resolution climate models could accurately quantify the uncertainties, but most physics-based climate models are computationally too expensive to run as ensembles. Recent work on physics-informed neural networks (PINNs) has combined deep learning and the physical sciences to learn copies of climate submodels that run up to 15k times faster. However, the application of PINNs in climate modeling has so far been mostly limited to deterministic models. We leverage a novel method that combines polynomial chaos expansion (PCE), a classic technique for uncertainty propagation, with PINNs. The PCE-PINNs learn a fast surrogate model that is demonstrated for uncertainty propagation of known parameter uncertainties. We showcase the effectiveness in ocean modeling by using the local advection-diffusion equation.
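As a rough illustration of the classic PCE step only (not the neural-network part of PCE-PINNs), the sketch below propagates a single standard-normal parameter through a toy model using probabilists' Hermite polynomials and Gauss-Hermite quadrature. The toy model `toy_model`, the helper names, and the polynomial degree are assumptions chosen for illustration; they do not come from the paper.

```python
import math

import numpy as np
from numpy.polynomial import hermite_e as H


def pce_coefficients(f, degree, n_quad=20):
    """Project f(xi), xi ~ N(0, 1), onto He_0..He_degree by quadrature."""
    nodes, weights = H.hermegauss(n_quad)
    # hermegauss weights integrate against exp(-x^2 / 2); dividing by
    # sqrt(2*pi) turns the weighted sum into an expectation under N(0, 1).
    weights = weights / math.sqrt(2.0 * math.pi)
    fx = f(nodes)

    coeffs = np.empty(degree + 1)
    for k in range(degree + 1):
        basis = np.zeros(k + 1)
        basis[k] = 1.0                      # selects the single polynomial He_k
        he_k = H.hermeval(nodes, basis)
        # E[He_k^2] = k!, so divide by k! to get the expansion coefficient.
        coeffs[k] = np.sum(weights * fx * he_k) / math.factorial(k)
    return coeffs


def pce_mean_variance(coeffs):
    """Mean and variance follow directly from the orthogonal expansion."""
    mean = coeffs[0]
    variance = sum(c**2 * math.factorial(k)
                   for k, c in enumerate(coeffs) if k > 0)
    return mean, variance


def toy_model(xi):
    # Hypothetical stand-in for an expensive simulator with one uncertain input.
    return np.exp(0.5 * xi)


coeffs = pce_coefficients(toy_model, degree=6)
mean, variance = pce_mean_variance(coeffs)
```

For this toy model the exact values are known in closed form (mean `exp(0.125)`, variance `exp(0.5) - exp(0.25)`), so the expansion can be checked against them. In a surrogate setting, the expensive part is evaluating the model at the quadrature nodes, which is where a fast learned approximation would help.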
