Vijay Gadepally

LLM Inference Serving: Survey of Recent Advances and Opportunities

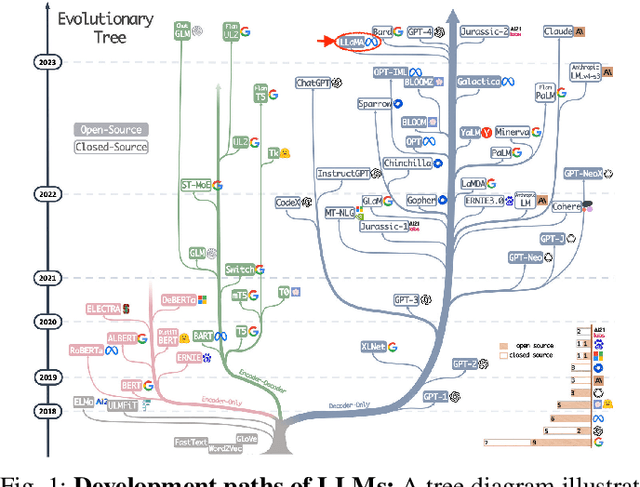

Jul 17, 2024

Abstract:This survey offers a comprehensive overview of recent advancements in Large Language Model (LLM) serving systems, focusing on research since the year 2023. We specifically examine system-level enhancements that improve performance and efficiency without altering the core LLM decoding mechanisms. By selecting and reviewing high-quality papers from prestigious ML and system venues, we highlight key innovations and practical considerations for deploying and scaling LLMs in real-world production environments. This survey serves as a valuable resource for LLM practitioners seeking to stay abreast of the latest developments in this rapidly evolving field.

Toward Sustainable GenAI using Generation Directives for Carbon-Friendly Large Language Model Inference

Mar 19, 2024

Abstract:The rapid advancement of Generative Artificial Intelligence (GenAI) across diverse sectors raises significant environmental concerns, notably the carbon emissions from their cloud and high performance computing (HPC) infrastructure. This paper presents Sprout, an innovative framework designed to address these concerns by reducing the carbon footprint of generative Large Language Model (LLM) inference services. Sprout leverages the innovative concept of "generation directives" to guide the autoregressive generation process, thereby enhancing carbon efficiency. Our proposed method meticulously balances the need for ecological sustainability with the demand for high-quality generation outcomes. Employing a directive optimizer for the strategic assignment of generation directives to user prompts and an original offline quality evaluator, Sprout demonstrates a significant reduction in carbon emissions by over 40% in real-world evaluations using the Llama2 LLM and global electricity grid data. This research marks a critical step toward aligning AI technology with sustainable practices, highlighting the potential for mitigating environmental impacts in the rapidly expanding domain of generative artificial intelligence.

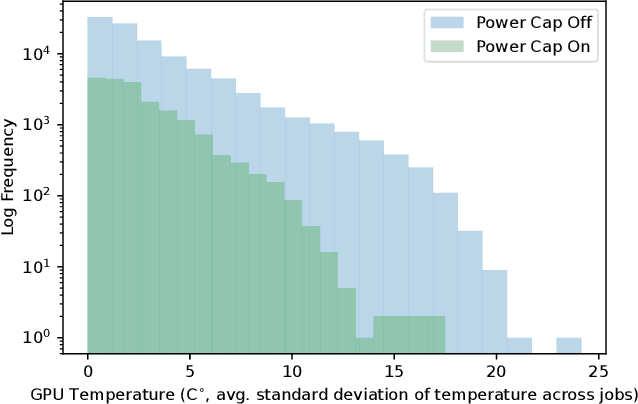

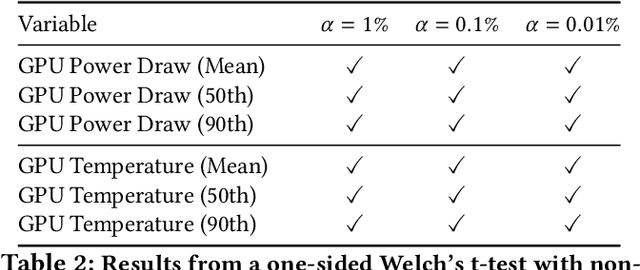

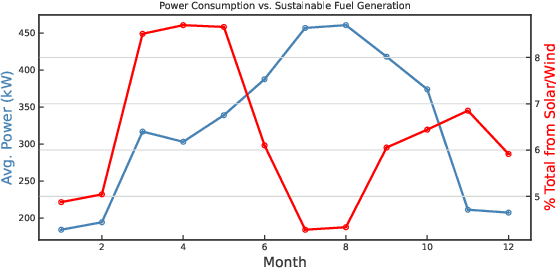

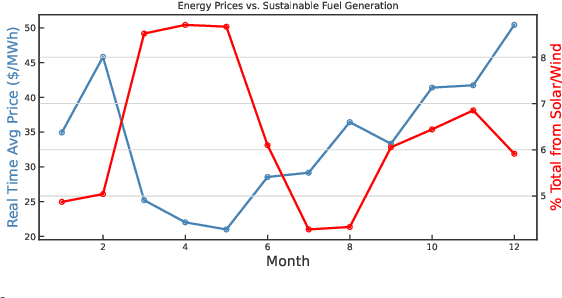

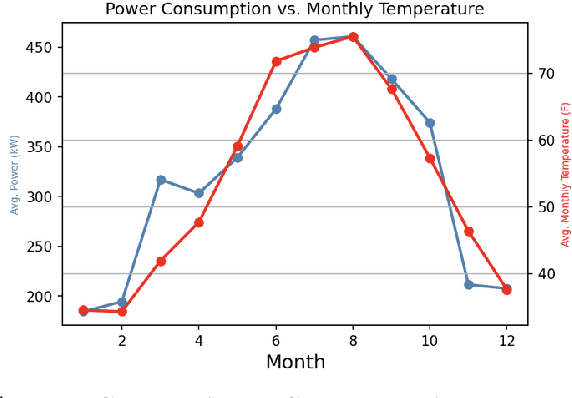

Sustainable Supercomputing for AI: GPU Power Capping at HPC Scale

Feb 25, 2024

Abstract:As research and deployment of AI grows, the computational burden to support and sustain its progress inevitably does too. To train or fine-tune state-of-the-art models in NLP, computer vision, etc., some form of AI hardware acceleration is virtually a requirement. Recent large language models require considerable resources to train and deploy, resulting in significant energy usage, potential carbon emissions, and massive demand for GPUs and other hardware accelerators. However, this surge carries large implications for energy sustainability at the HPC/datacenter level. In this paper, we study the aggregate effect of power-capping GPUs on GPU temperature and power draw at a research supercomputing center. With the right amount of power-capping, we show significant decreases in both temperature and power draw, reducing power consumption and potentially improving hardware life-span with minimal impact on job performance. While power-capping reduces power draw by design, the aggregate system-wide effect on overall energy consumption is less clear; for instance, if users notice job performance degradation from GPU power-caps, they may request additional GPU-jobs to compensate, negating any energy savings or even worsening energy consumption. To our knowledge, our work is the first to conduct and make available a detailed analysis of the effects of GPU power-capping at the supercomputing scale. We hope our work will inspire HPCs/datacenters to further explore, evaluate, and communicate the impact of power-capping AI hardware accelerators for more sustainable AI.
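The distinction the abstract draws between power draw and overall energy consumption can be made concrete with a little arithmetic. The sketch below is not taken from the paper: the function names and all wattage, runtime, and slowdown figures are hypothetical, chosen only to show that a capped job saves energy as long as its slowdown stays below the ratio of uncapped to capped power minus one.

```python
def job_energy_kwh(avg_power_watts, runtime_hours):
    """Energy used by one job: average power times runtime, in kWh."""
    return avg_power_watts * runtime_hours / 1000.0


def relative_energy_change(base_power_w, base_hours, capped_power_w, slowdown):
    """Fractional change in energy when a job is power-capped.

    slowdown is the fractional runtime increase under the cap
    (0.05 means the job takes 5% longer). Negative result = energy saved.
    All inputs are hypothetical illustration values, not measurements.
    """
    base = job_energy_kwh(base_power_w, base_hours)
    capped = job_energy_kwh(capped_power_w, base_hours * (1.0 + slowdown))
    return (capped - base) / base


def break_even_slowdown(base_power_w, capped_power_w):
    """Slowdown at which the capped job uses exactly as much energy as before."""
    return base_power_w / capped_power_w - 1.0


if __name__ == "__main__":
    # Hypothetical job: 250 W for 10 h uncapped, 200 W with a 5% slowdown.
    print(relative_energy_change(250.0, 10.0, 200.0, 0.05))
    print(break_even_slowdown(250.0, 200.0))
```

The same reasoning covers the compensating behavior the abstract mentions: if users respond to a cap by submitting extra GPU jobs, that added runtime enters the calculation the same way a slowdown does.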

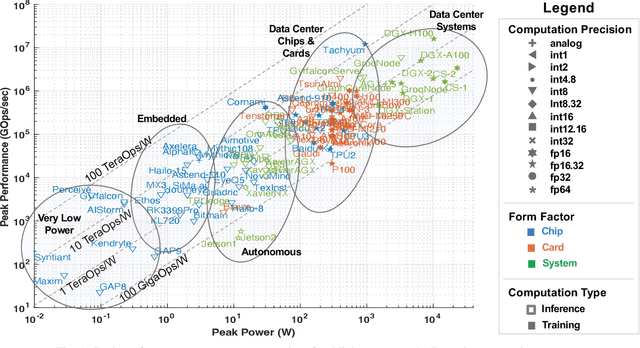

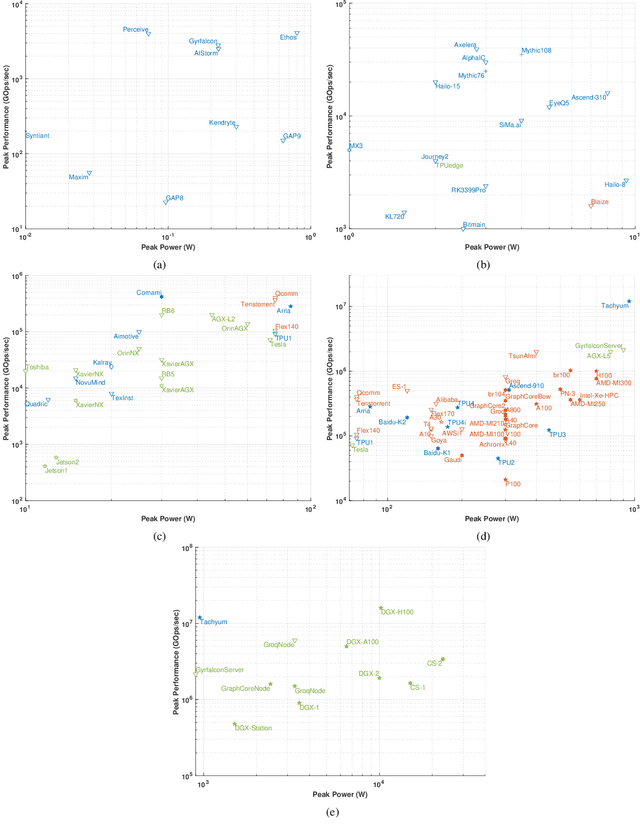

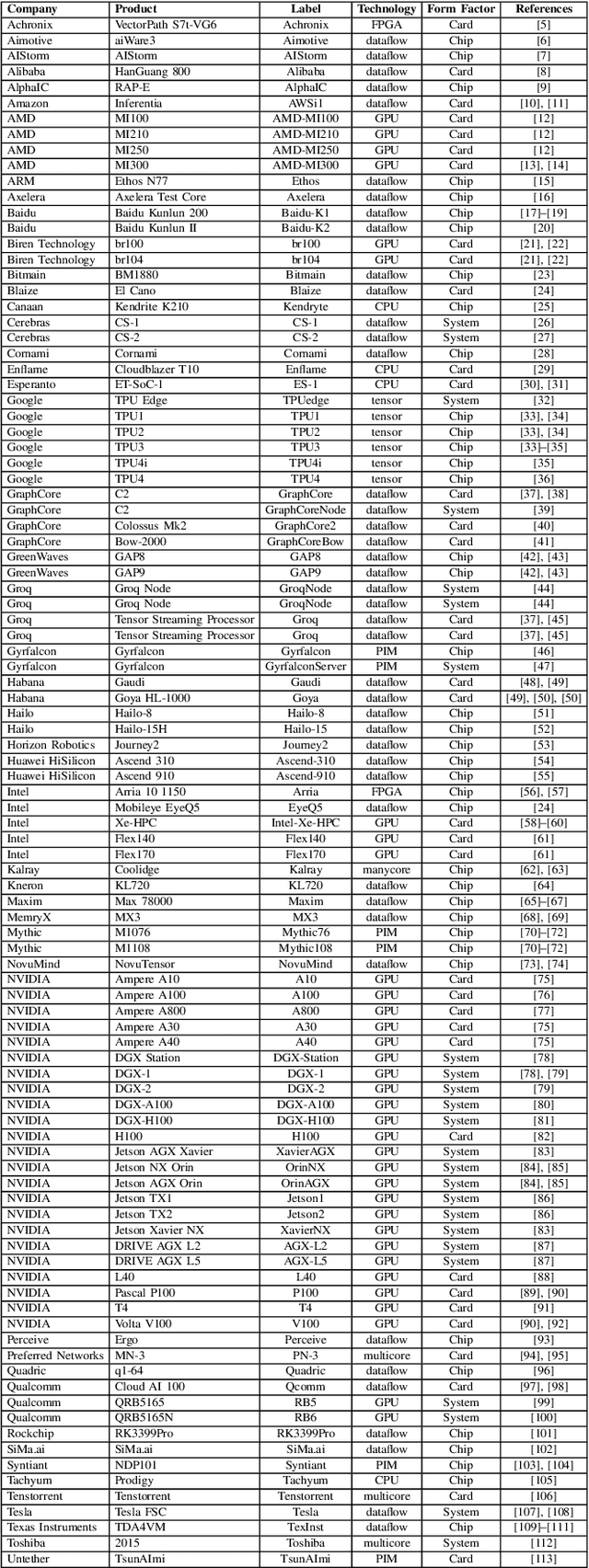

Lincoln AI Computing Survey (LAICS) Update

Oct 13, 2023

Abstract:This paper is an update of the survey of AI accelerators and processors from the past four years, which is now called the Lincoln AI Computing Survey - LAICS (pronounced "lace"). As in past years, this paper collects and summarizes the current commercial accelerators that have been publicly announced with peak performance and peak power consumption numbers. The performance and power values are plotted on a scatter graph, and a number of dimensions and observations from the trends on this plot are again discussed and analyzed. Market segments are highlighted on the scatter plot, and zoomed plots of each segment are also included. Finally, a brief description of each of the new accelerators that have been added in the survey this year is included.

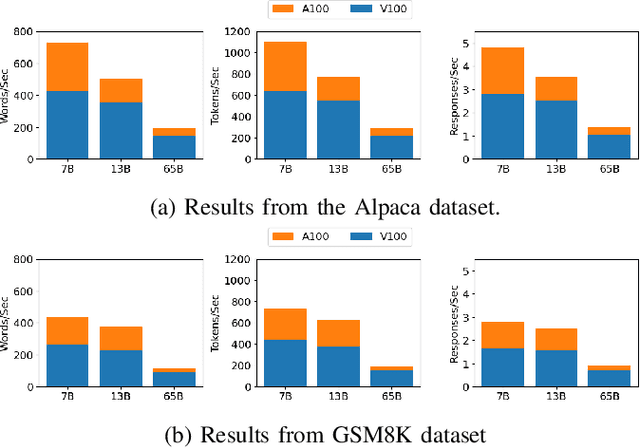

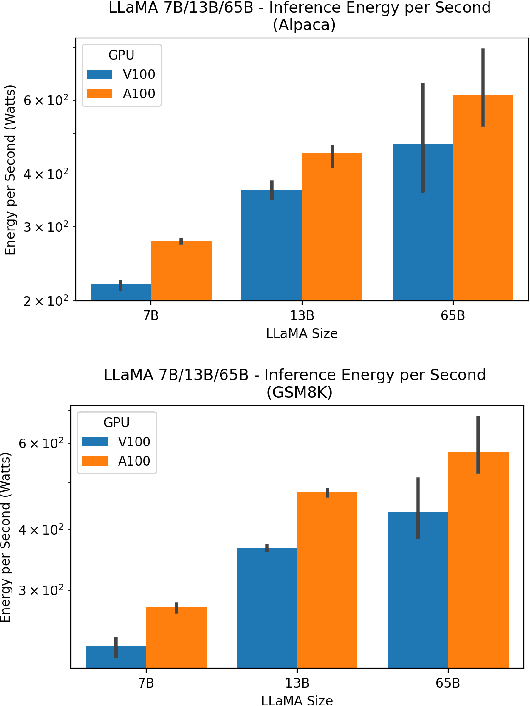

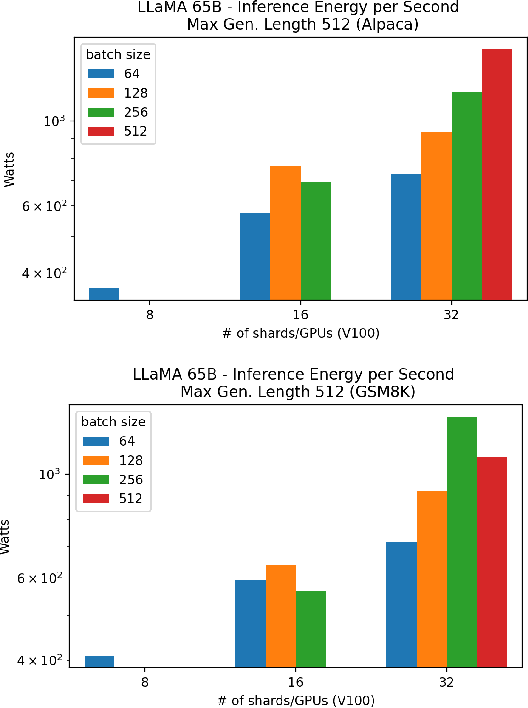

From Words to Watts: Benchmarking the Energy Costs of Large Language Model Inference

Oct 04, 2023

Abstract:Large language models (LLMs) have exploded in popularity due to their new generative capabilities that go far beyond prior state-of-the-art. These technologies are increasingly being leveraged in various domains such as law, finance, and medicine. However, these models carry significant computational challenges, especially the compute and energy costs required for inference. Inference energy costs already receive less attention than the energy costs of training LLMs -- despite how often these large models are called on to conduct inference in reality (e.g., ChatGPT). As these state-of-the-art LLMs see increasing usage and deployment in various domains, a better understanding of their resource utilization is crucial for cost-savings, scaling performance, efficient hardware usage, and optimal inference strategies. In this paper, we describe experiments conducted to study the computational and energy utilization of inference with LLMs. We benchmark and conduct a preliminary analysis of the inference performance and inference energy costs of different sizes of LLaMA -- a recent state-of-the-art LLM -- developed by Meta AI on two generations of popular GPUs (NVIDIA V100 & A100) and two datasets (Alpaca and GSM8K) to reflect the diverse set of tasks/benchmarks for LLMs in research and practice. We present the results of multi-node, multi-GPU inference using model sharding across up to 32 GPUs. To our knowledge, our work is one of the first to study LLM inference performance from the perspective of computational and energy resources at this scale.

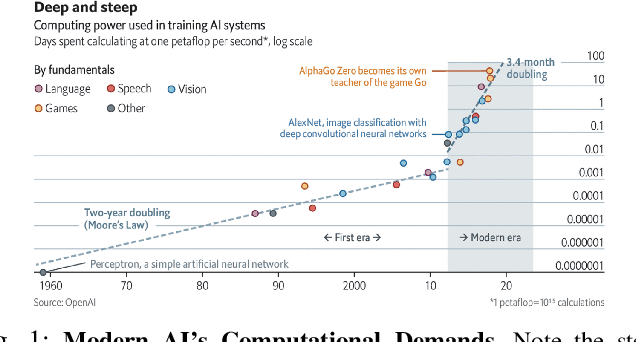

A Green(er) World for A.I.

Jan 27, 2023

Abstract:As research and practice in artificial intelligence (A.I.) grow in leaps and bounds, the resources necessary to sustain and support their operations also grow at an increasing pace. While innovations and applications from A.I. have brought significant advances, from applications to vision and natural language to improvements to fields like medical imaging and materials engineering, their costs should not be neglected. As we embrace a world with ever-increasing amounts of data as well as research and development of A.I. applications, we are sure to face an ever-mounting energy footprint to sustain these computational budgets, data storage needs, and more. But, is this sustainable and, more importantly, what kind of setting is best positioned to nurture such sustainable A.I. in both research and practice? In this paper, we outline our outlook for Green A.I. -- a more sustainable, energy-efficient and energy-aware ecosystem for developing A.I. across the research, computing, and practitioner communities alike -- and the steps required to arrive there. We present a bird's eye view of various areas for potential changes and improvements from the ground floor of AI's operational and hardware optimizations for datacenters/HPCs to the current incentive structures in the world of A.I. research and practice, and more. We hope these points will spur further discussion, and action, on some of these issues and their potential solutions.

* 8 pages, published in 2022 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW)

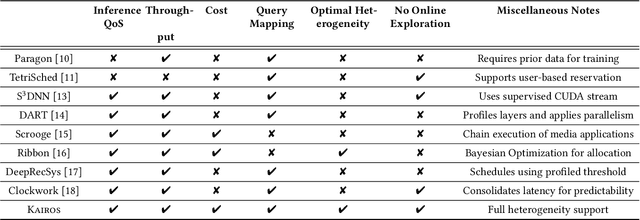

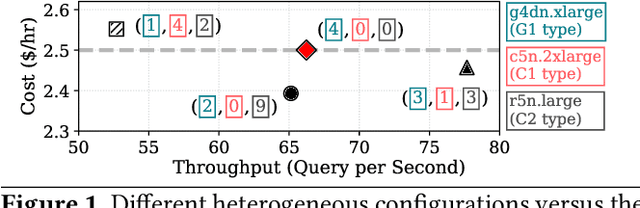

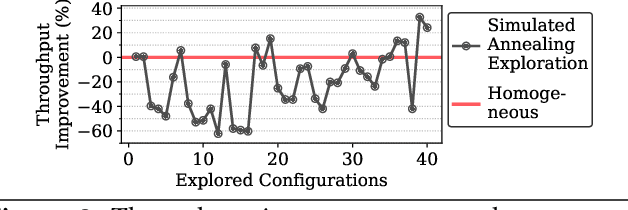

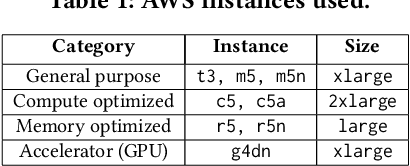

Building Heterogeneous Cloud System for Machine Learning Inference

Oct 15, 2022

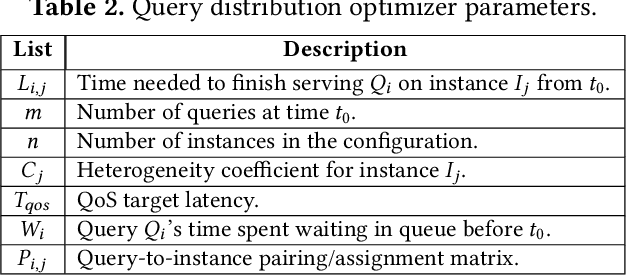

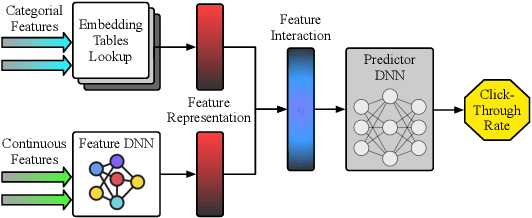

Abstract:Online inference is becoming a key service product for many businesses, deployed in cloud platforms to meet customer demands. Despite their revenue-generation capability, these services need to operate under tight Quality-of-Service (QoS) and cost budget constraints. This paper introduces KAIROS, a novel runtime framework that maximizes the query throughput while meeting a QoS target and a cost budget. KAIROS designs and implements novel techniques to build a pool of heterogeneous compute hardware without online exploration overhead, and distribute inference queries optimally at runtime. Our evaluation using industry-grade deep learning (DL) models shows that KAIROS yields up to 2X the throughput of an optimal homogeneous solution, and outperforms state-of-the-art schemes by up to 70%, despite advantageous implementations of the competing schemes to ignore their exploration overhead.

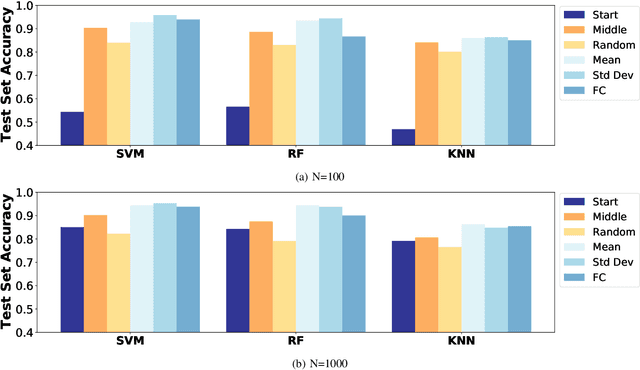

An Evaluation of Low Overhead Time Series Preprocessing Techniques for Downstream Machine Learning

Sep 12, 2022

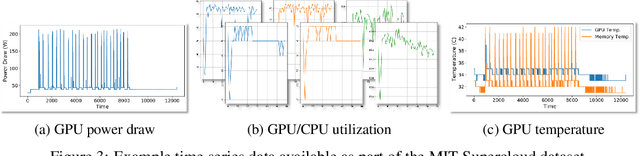

Abstract:In this paper we address the application of pre-processing techniques to multi-channel time series data with varying lengths, which we refer to as the alignment problem, for downstream machine learning. The misalignment of multi-channel time series data may occur for a variety of reasons, such as missing data, varying sampling rates, or inconsistent collection times. We consider multi-channel time series data collected from the MIT SuperCloud High Performance Computing (HPC) center, where different job start times and varying run times of HPC jobs result in misaligned data. This misalignment makes it challenging to build AI/ML approaches for tasks such as compute workload classification. Building on previous supervised classification work with the MIT SuperCloud Dataset, we address the alignment problem via three broad, low overhead approaches: sampling a fixed subset from a full time series, performing summary statistics on a full time series, and sampling a subset of coefficients from time series mapped to the frequency domain. Our best performing models achieve a classification accuracy greater than 95%, outperforming previous approaches to multi-channel time series classification with the MIT SuperCloud Dataset by 5%. These results indicate our low overhead approaches to solving the alignment problem, in conjunction with standard machine learning techniques, are able to achieve high levels of classification accuracy, and serve as a baseline for future approaches to addressing the alignment problem, such as kernel methods.
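The three low overhead approaches named in the abstract each turn a variable-length channel into a fixed-length feature vector. The following is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the choice of evenly spaced sampling, the particular summary statistics, and the use of the first `k` DFT magnitudes are all illustrative guesses at what each approach could look like.

```python
import cmath
import statistics


def fixed_subset(series, n):
    """Approach 1 (assumed form): n evenly spaced samples from the series."""
    if n <= 0 or not series:
        return []
    if n == 1:
        return [series[0]]
    last = len(series) - 1
    return [series[round(i * last / (n - 1))] for i in range(n)]


def summary_stats(series):
    """Approach 2 (assumed form): min, max, mean, population std. dev."""
    return [min(series), max(series),
            statistics.fmean(series), statistics.pstdev(series)]


def dft_magnitudes(series, k):
    """Approach 3 (assumed form): magnitudes of the first k DFT coefficients.

    Uses a naive O(n*k) transform; pads with zeros if the series has
    fewer than k samples so the output length is always k.
    """
    n = len(series)
    mags = []
    for f in range(k):
        if f >= n:
            mags.append(0.0)
            continue
        coeff = sum(x * cmath.exp(-2j * cmath.pi * f * t / n)
                    for t, x in enumerate(series))
        mags.append(abs(coeff))
    return mags


def align_job(channels, n_samples=4, n_coeffs=3):
    """Concatenate all three feature sets over every channel of one job."""
    features = []
    for series in channels:
        features += fixed_subset(series, n_samples)
        features += summary_stats(series)
        features += dft_magnitudes(series, n_coeffs)
    return features


if __name__ == "__main__":
    # Two hypothetical jobs with different run lengths, two channels each.
    short_job = [[0.1, 0.4, 0.3], [10.0, 12.0, 11.0]]
    long_job = [[0.2] * 50, [float(i) for i in range(50)]]
    print(len(align_job(short_job)), len(align_job(long_job)))
```

Because every job maps to a vector of the same length regardless of run time, the output can be fed directly to a standard classifier, which is the role the abstract assigns to these preprocessing steps.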

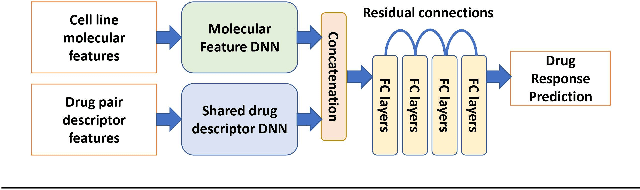

RIBBON: Cost-Effective and QoS-Aware Deep Learning Model Inference using a Diverse Pool of Cloud Computing Instances

Jul 28, 2022

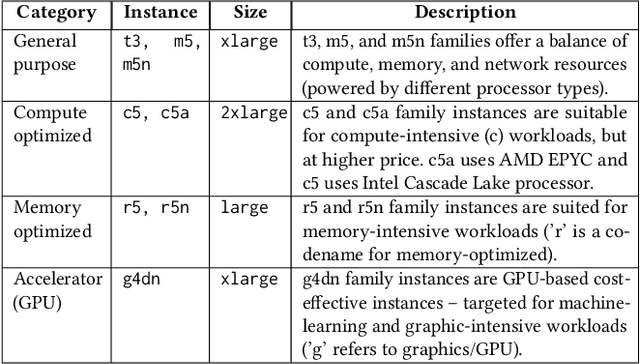

Abstract:Deep learning model inference is a key service in many businesses and scientific discovery processes. This paper introduces RIBBON, a novel deep learning inference serving system that meets two competing objectives: quality-of-service (QoS) target and cost-effectiveness. The key idea behind RIBBON is to intelligently employ a diverse set of cloud computing instances (heterogeneous instances) to meet the QoS target and maximize cost savings. RIBBON devises a Bayesian Optimization-driven strategy that helps users build the optimal set of heterogeneous instances for their model inference service needs on cloud computing platforms -- and, RIBBON demonstrates its superiority over existing approaches of inference serving systems using homogeneous instance pools. RIBBON saves up to 16% of the inference service cost for different learning models including emerging deep learning recommender system models and drug-discovery enabling models.

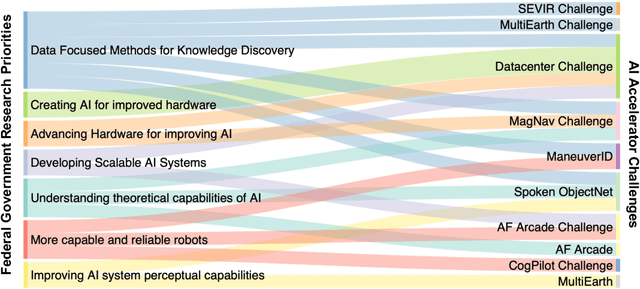

Developing a Series of AI Challenges for the United States Department of the Air Force

Jul 14, 2022

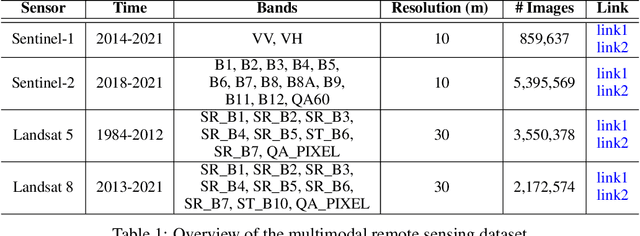

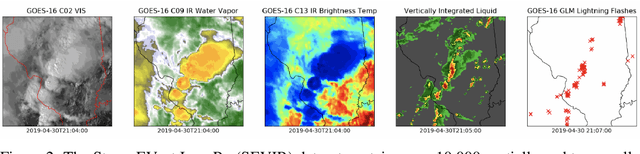

Abstract:Through a series of federal initiatives and orders, the U.S. Government has been making a concerted effort to ensure American leadership in AI. These broad strategy documents have influenced organizations such as the United States Department of the Air Force (DAF). The DAF-MIT AI Accelerator is an initiative between the DAF and MIT to bridge the gap between AI researchers and DAF mission requirements. Several projects supported by the DAF-MIT AI Accelerator are developing public challenge problems that address numerous Federal AI research priorities. These challenges target priorities by making large, AI-ready datasets publicly available, incentivizing open-source solutions, and creating a demand signal for dual use technologies that can stimulate further research. In this article, we describe these public challenges being developed and how their application contributes to scientific advances.
