Armando Cabrera

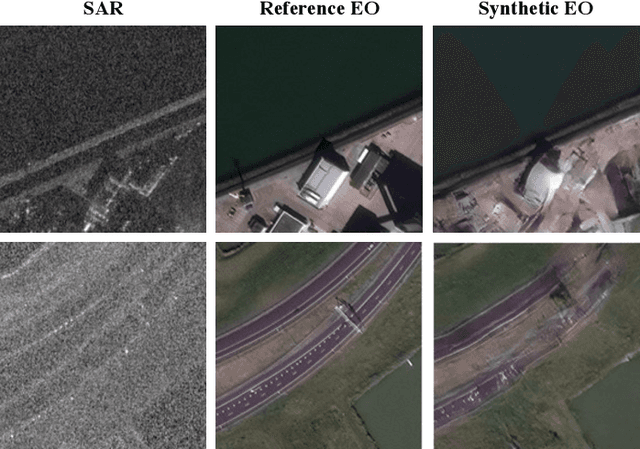

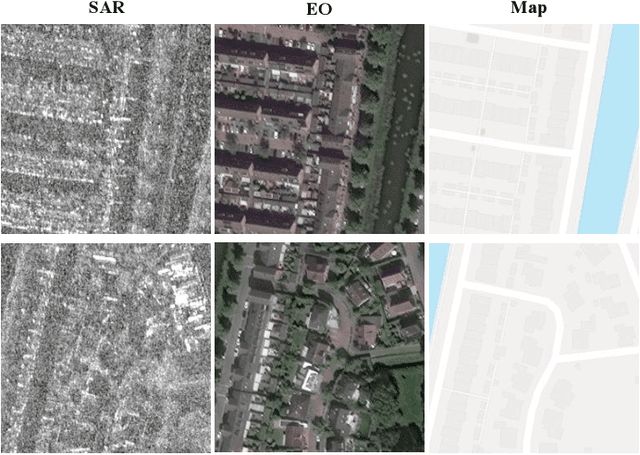

SAR-to-EO Image Translation with Multi-Conditional Adversarial Networks

Jul 26, 2022

Abstract: This paper explores the use of multi-conditional adversarial networks for SAR-to-EO image translation. Previous methods condition adversarial networks only on the input SAR. We show that incorporating multiple complementary modalities, such as Google Maps and IR, can further improve SAR-to-EO image translation, especially in preserving the sharp edges of manmade objects. We demonstrate the effectiveness of our approach on a diverse set of datasets including SEN12MS, DFC2020, and SpaceNet6. Our experimental results suggest that the additional information provided by complementary modalities improves the performance of SAR-to-EO image translation compared to models trained on paired SAR and EO data only. To the best of our knowledge, our approach is the first to leverage multiple modalities for improving SAR-to-EO image translation performance.

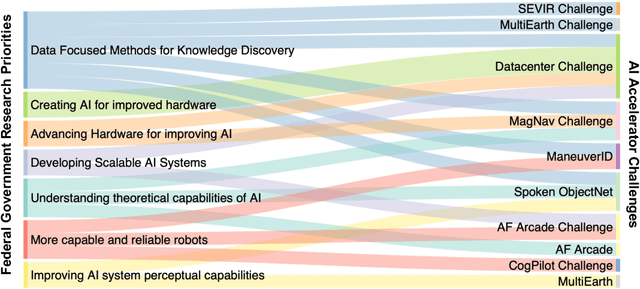

Developing a Series of AI Challenges for the United States Department of the Air Force

Jul 14, 2022

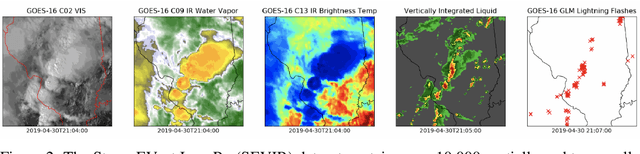

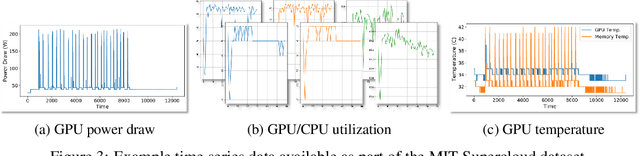

Abstract: Through a series of federal initiatives and orders, the U.S. Government has been making a concerted effort to ensure American leadership in AI. These broad strategy documents have influenced organizations such as the United States Department of the Air Force (DAF). The DAF-MIT AI Accelerator is an initiative between the DAF and MIT to bridge the gap between AI researchers and DAF mission requirements. Several projects supported by the DAF-MIT AI Accelerator are developing public challenge problems that address numerous Federal AI research priorities. These challenges target priorities by making large, AI-ready datasets publicly available, incentivizing open-source solutions, and creating a demand signal for dual-use technologies that can stimulate further research. In this article, we describe the public challenges being developed and how their application contributes to scientific advances.

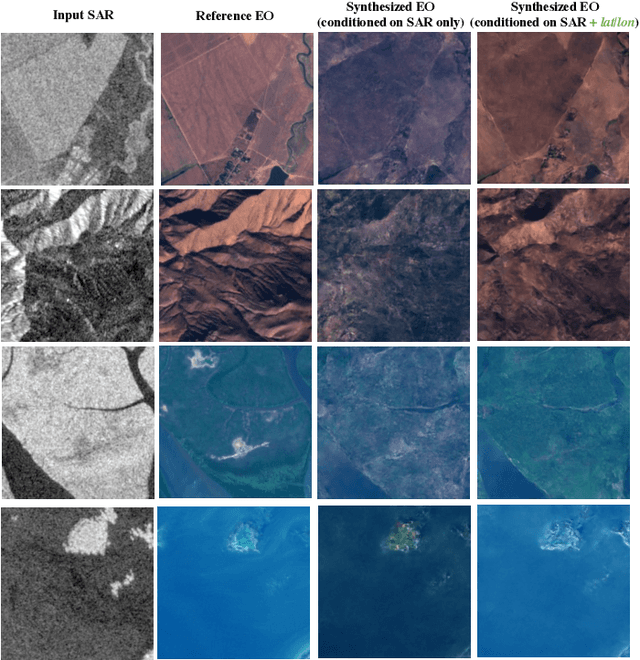

MultiEarth 2022 -- Multimodal Learning for Earth and Environment Workshop and Challenge

Apr 27, 2022

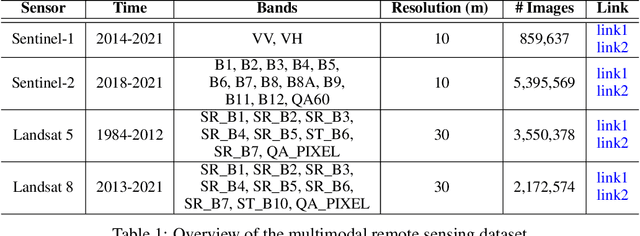

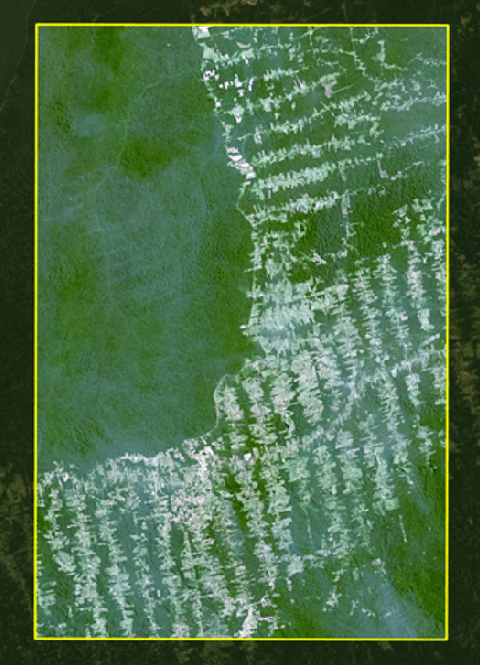

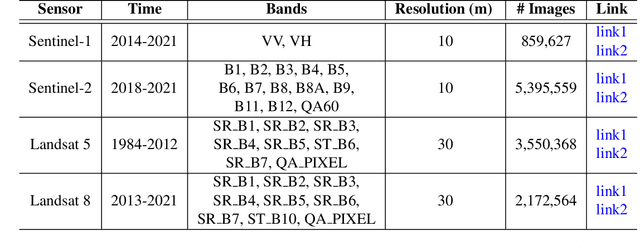

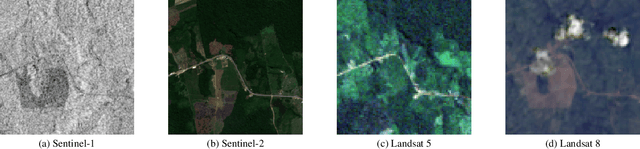

Abstract: The Multimodal Learning for Earth and Environment Challenge (MultiEarth 2022) will be the first competition aimed at the monitoring and analysis of deforestation in the Amazon rainforest at any time and in any weather conditions. The goal of the Challenge is to provide a common benchmark for multimodal information processing and to bring together the Earth and environmental science communities and the multimodal representation learning communities to compare the relative merits of various multimodal learning methods for deforestation estimation under well-defined and strictly comparable conditions. MultiEarth 2022 will have three sub-challenges: 1) matrix completion, 2) deforestation estimation, and 3) image-to-image translation. This paper presents the challenge guidelines, datasets, and evaluation metrics for the three sub-challenges. Our challenge website is available at https://sites.google.com/view/rainforest-challenge.
