Kuan Wei Huang

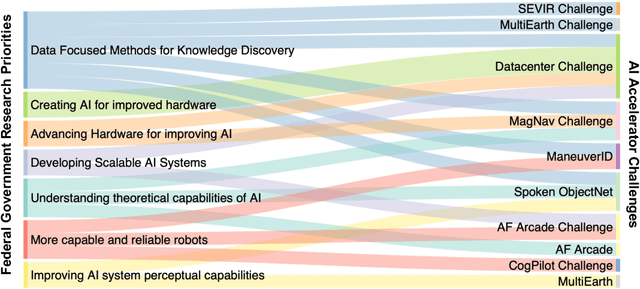

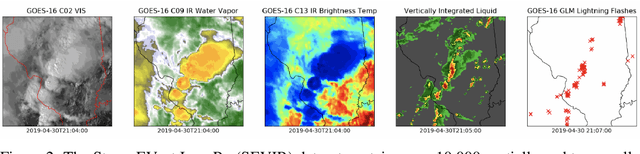

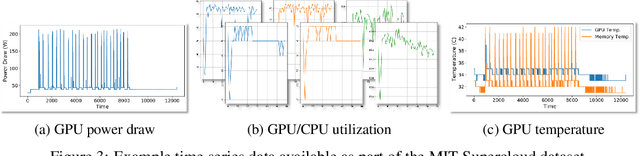

Developing a Series of AI Challenges for the United States Department of the Air Force

Jul 14, 2022

Abstract: Through a series of federal initiatives and orders, the U.S. Government has been making a concerted effort to ensure American leadership in AI. These broad strategy documents have influenced organizations such as the United States Department of the Air Force (DAF). The DAF-MIT AI Accelerator is an initiative between the DAF and MIT to bridge the gap between AI researchers and DAF mission requirements. Several projects supported by the DAF-MIT AI Accelerator are developing public challenge problems that address numerous Federal AI research priorities. These challenges target priorities by making large, AI-ready datasets publicly available, incentivizing open-source solutions, and creating a demand signal for dual-use technologies that can stimulate further research. In this article, we describe these public challenges being developed and how their application contributes to scientific advances.

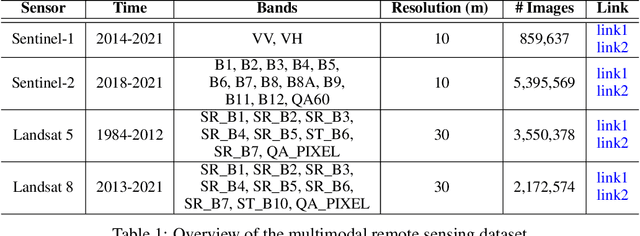

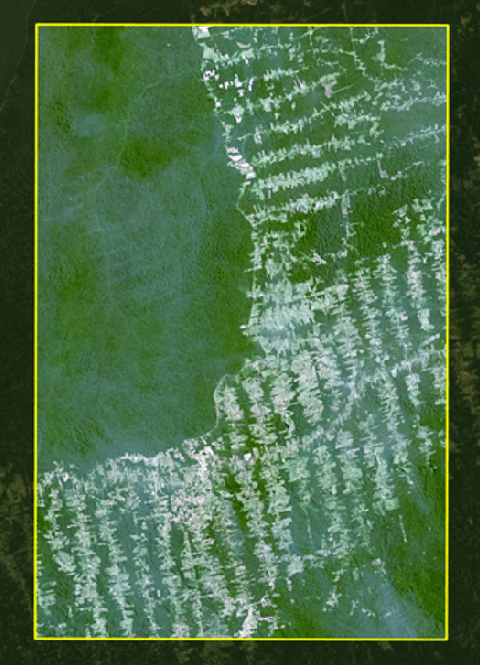

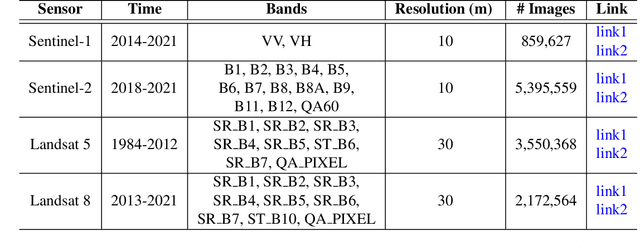

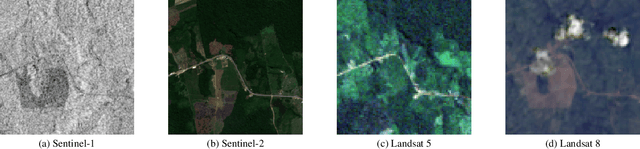

MultiEarth 2022 -- Multimodal Learning for Earth and Environment Workshop and Challenge

Apr 27, 2022

Abstract: The Multimodal Learning for Earth and Environment Challenge (MultiEarth 2022) will be the first competition aimed at the monitoring and analysis of deforestation in the Amazon rainforest at any time and in any weather conditions. The goal of the Challenge is to provide a common benchmark for multimodal information processing and to bring together the earth and environmental science communities and the multimodal representation learning communities to compare the relative merits of various multimodal learning methods for deforestation estimation under well-defined and strictly comparable conditions. MultiEarth 2022 will have three sub-challenges: 1) matrix completion, 2) deforestation estimation, and 3) image-to-image translation. This paper presents the challenge guidelines, datasets, and evaluation metrics for the three sub-challenges. Our challenge website is available at https://sites.google.com/view/rainforest-challenge.

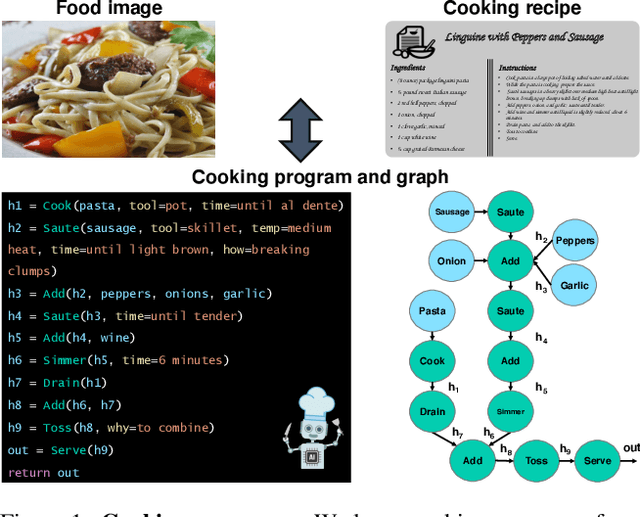

Learning Program Representations for Food Images and Cooking Recipes

Mar 30, 2022

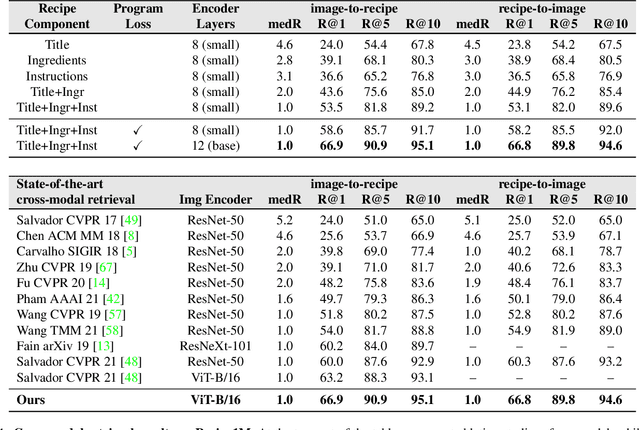

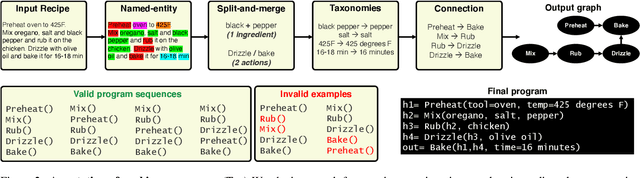

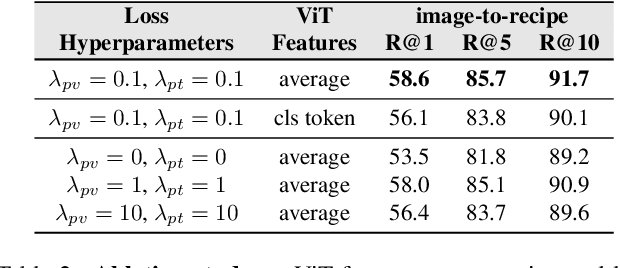

Abstract: In this paper, we are interested in modeling a how-to instructional procedure, such as a cooking recipe, with a meaningful and rich high-level representation. Specifically, we propose to represent cooking recipes and food images as cooking programs. Programs provide a structured representation of the task, capturing cooking semantics and sequential relationships of actions in the form of a graph. This allows them to be easily manipulated by users and executed by agents. To this end, we build a model that is trained to learn a joint embedding between recipes and food images via self-supervision and jointly generate a program from this embedding as a sequence. To validate our idea, we crowdsource programs for cooking recipes and show that: (a) projecting the image-recipe embeddings into programs leads to better cross-modal retrieval results; (b) generating programs from images leads to better recognition results compared to predicting raw cooking instructions; and (c) we can generate food images by manipulating programs via optimizing the latent code of a GAN. Code, data, and models are available online.
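As a rough illustration of what cross-modal retrieval in a joint embedding space means, the sketch below ranks recipes by cosine similarity to an image embedding. It is not the paper's model: the vectors, the recipe names, and the helper functions `cosine` and `retrieve` are all hypothetical, and a real system would obtain the embeddings from trained image and recipe encoders.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def retrieve(query, candidates):
    """Return candidate keys ranked by similarity to the query, best first."""
    return sorted(candidates,
                  key=lambda name: cosine(query, candidates[name]),
                  reverse=True)

# Hypothetical toy embeddings; assumed to live in one shared space.
image_embedding = [0.9, 0.1, 0.2]
recipe_embeddings = {
    "pancakes": [0.8, 0.2, 0.1],
    "salad": [0.1, 0.9, 0.3],
    "soup": [0.2, 0.3, 0.9],
}

ranking = retrieve(image_embedding, recipe_embeddings)
print(ranking)
```

Because both modalities share one space, the same `retrieve` call works in the other direction (a recipe embedding as the query against a set of image embeddings).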

Distributed Motion Control for Multiple Connected Surface Vessels

Jul 24, 2020

Abstract: We propose a scalable cooperative control approach that coordinates a group of rigidly connected autonomous surface vessels to track desired trajectories in a planar water environment as a single floating modular structure. Our approach leverages the implicit information of the structure's motion for force and torque allocation without explicit communication among the robots. In our system, a leader robot steers the entire group by adjusting its force and torque according to the structure's deviation from the desired trajectory, while follower robots run distributed consensus-based controllers to match their inputs to amplify the leader's intent using only onboard sensors as feedback. To cope with the complex and highly coupled system dynamics in the water, the leader robot employs a nonlinear model predictive controller (NMPC), for which we experimentally estimated the dynamics model of the floating modular structure in order to achieve superior performance for leader-following control. Our method has a wide range of potential applications in transporting humans and goods in many of today's existing waterways. We conducted trajectory and orientation tracking experiments in hardware with three custom-built autonomous modular robotic boats, called Roboat, which are capable of holonomic motions and onboard state estimation. Simulation results with up to 65 robots also demonstrate the scalability of our proposed approach.
