Karen Gettings

RIBBON: Cost-Effective and QoS-Aware Deep Learning Model Inference using a Diverse Pool of Cloud Computing Instances

Jul 28, 2022

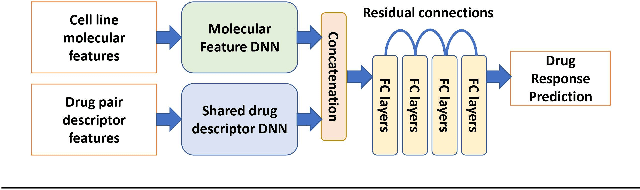

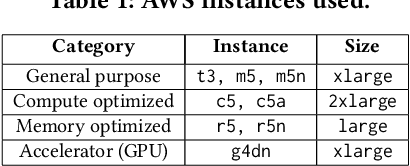

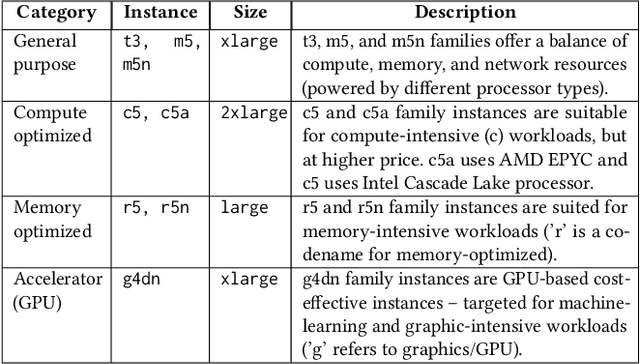

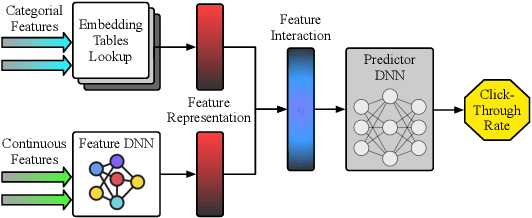

Abstract: Deep learning model inference is a key service in many businesses and scientific discovery processes. This paper introduces RIBBON, a novel deep learning inference serving system that meets two competing objectives: a quality-of-service (QoS) target and cost-effectiveness. The key idea behind RIBBON is to intelligently employ a diverse set of cloud computing instances (heterogeneous instances) to meet the QoS target while maximizing cost savings. RIBBON devises a Bayesian Optimization-driven strategy that helps users build the optimal set of heterogeneous instances for their model inference service needs on cloud computing platforms, and it outperforms existing inference serving systems that use homogeneous instance pools. RIBBON saves up to 16% of the inference service cost across different learning models, including emerging deep learning recommender system models and drug-discovery enabling models.
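
To make the heterogeneous-versus-homogeneous trade-off concrete, here is a minimal sketch of the underlying search problem. It is not RIBBON's method: it swaps the Bayesian Optimization strategy for a plain exhaustive search, and it simplifies the QoS target to a required aggregate throughput. The instance names, prices, and throughput figures in `CATALOG` are hypothetical values chosen for illustration, not measurements from the paper.

```python
from itertools import product

# Hypothetical catalog: name -> (price in cents per hour,
# queries/sec the instance can serve within the latency target).
# All numbers are made up for illustration.
CATALOG = {
    "gpu_large": (300, 100),
    "cpu_medium": (100, 30),
    "cpu_small": (40, 10),
}


def pool_cost(counts):
    """Total hourly cost (cents) of a pool given as {instance name: count}."""
    return sum(CATALOG[name][0] * n for name, n in counts.items())


def pool_capacity(counts):
    """Aggregate queries/sec of the pool (a simplified stand-in for QoS)."""
    return sum(CATALOG[name][1] * n for name, n in counts.items())


def cheapest_pool(target_qps, max_per_type=16, allowed=None):
    """Exhaustively search instance counts for the cheapest feasible pool.

    Returns (cost_in_cents, counts), or None if no pool within the
    per-type limit reaches target_qps. Passing a single name in `allowed`
    restricts the search to a homogeneous pool.
    """
    names = list(allowed) if allowed is not None else list(CATALOG)
    best = None
    for combo in product(range(max_per_type + 1), repeat=len(names)):
        counts = dict(zip(names, combo))
        if pool_capacity(counts) < target_qps:
            continue  # this pool misses the (simplified) QoS target
        cost = pool_cost(counts)
        if best is None or cost < best[0]:
            best = (cost, counts)
    return best


if __name__ == "__main__":
    target = 130  # hypothetical load in queries/sec
    mixed = cheapest_pool(target)
    homogeneous = min(
        (cheapest_pool(target, allowed=[name]) for name in CATALOG),
        key=lambda result: result[0],
    )
    print("heterogeneous:", mixed)
    print("best homogeneous:", homogeneous)
```

Exhaustive search is only workable here because the toy catalog is tiny. In a real deployment each candidate pool must be evaluated against live or simulated traffic, which is expensive, and that is the setting in which a sample-efficient strategy such as Bayesian Optimization becomes attractive.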
