Baolin Li

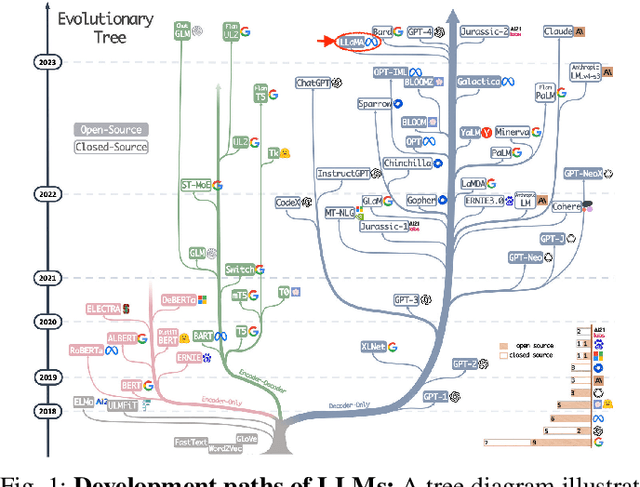

LLM Inference Serving: Survey of Recent Advances and Opportunities

Jul 17, 2024

Abstract:This survey offers a comprehensive overview of recent advancements in Large Language Model (LLM) serving systems, focusing on research since the year 2023. We specifically examine system-level enhancements that improve performance and efficiency without altering the core LLM decoding mechanisms. By selecting and reviewing high-quality papers from prestigious ML and system venues, we highlight key innovations and practical considerations for deploying and scaling LLMs in real-world production environments. This survey serves as a valuable resource for LLM practitioners seeking to stay abreast of the latest developments in this rapidly evolving field.

Toward Sustainable GenAI using Generation Directives for Carbon-Friendly Large Language Model Inference

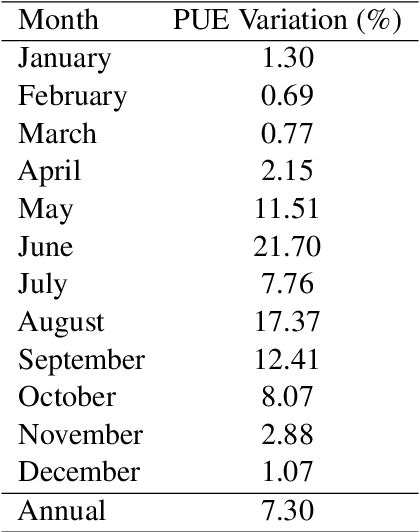

Mar 19, 2024

Abstract:The rapid advancement of Generative Artificial Intelligence (GenAI) across diverse sectors raises significant environmental concerns, notably the carbon emissions from their cloud and high performance computing (HPC) infrastructure. This paper presents Sprout, an innovative framework designed to address these concerns by reducing the carbon footprint of generative Large Language Model (LLM) inference services. Sprout leverages the innovative concept of "generation directives" to guide the autoregressive generation process, thereby enhancing carbon efficiency. Our proposed method meticulously balances the need for ecological sustainability with the demand for high-quality generation outcomes. Employing a directive optimizer for the strategic assignment of generation directives to user prompts and an original offline quality evaluator, Sprout demonstrates a significant reduction in carbon emissions by over 40% in real-world evaluations using the Llama2 LLM and global electricity grid data. This research marks a critical step toward aligning AI technology with sustainable practices, highlighting the potential for mitigating environmental impacts in the rapidly expanding domain of generative artificial intelligence.

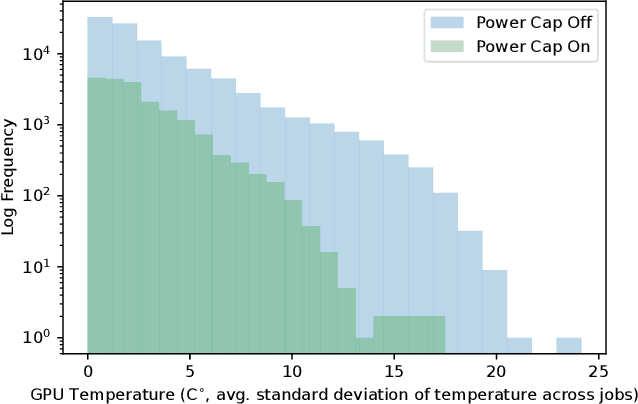

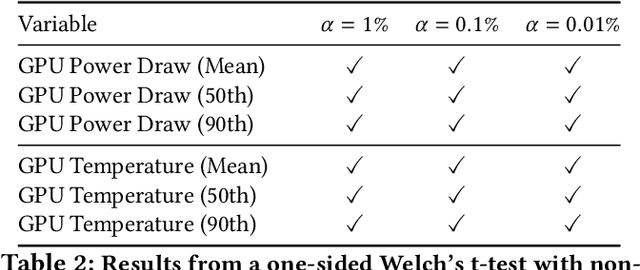

Sustainable Supercomputing for AI: GPU Power Capping at HPC Scale

Feb 25, 2024

Abstract:As research and deployment of AI grow, the computational burden to support and sustain its progress inevitably does too. To train or fine-tune state-of-the-art models in NLP, computer vision, etc., some form of AI hardware acceleration is virtually a requirement. Recent large language models require considerable resources to train and deploy, resulting in significant energy usage, potential carbon emissions, and massive demand for GPUs and other hardware accelerators. However, this surge carries large implications for energy sustainability at the HPC/datacenter level. In this paper, we study the aggregate effect of power-capping GPUs on GPU temperature and power draw at a research supercomputing center. With the right amount of power-capping, we show significant decreases in both temperature and power draw, reducing power consumption and potentially improving hardware life-span with minimal impact on job performance. While power-capping reduces power draw by design, the aggregate system-wide effect on overall energy consumption is less clear; for instance, if users notice job performance degradation from GPU power-caps, they may request additional GPU-jobs to compensate, negating any energy savings or even worsening energy consumption. To our knowledge, our work is the first to conduct and make available a detailed analysis of the effects of GPU power-capping at the supercomputing scale. We hope our work will inspire HPCs/datacenters to further explore, evaluate, and communicate the impact of power-capping AI hardware accelerators for more sustainable AI.

Synergistic Signals: Exploiting Co-Engagement and Semantic Links via Graph Neural Networks

Dec 07, 2023

Abstract:Given a set of candidate entities (e.g. movie titles), the ability to identify similar entities is a core capability of many recommender systems. Most often this is achieved by collaborative filtering approaches, i.e. if users co-engage with a pair of entities frequently enough, the embeddings should be similar. However, relying on co-engagement data alone can result in lower-quality embeddings for new and unpopular entities. We study this problem in the context of recommender systems at Netflix. We observe that there is abundant semantic information such as genre, content maturity level, themes, etc. that complements co-engagement signals and provides interpretability in similarity models. To learn entity similarities from both data sources holistically, we propose a novel graph-based approach called SemanticGNN. SemanticGNN models entities, semantic concepts, collaborative edges, and semantic edges within a large-scale knowledge graph and conducts representation learning over it. Our key technical contributions are twofold: (1) we develop a novel relation-aware attention graph neural network (GNN) to handle the imbalanced distribution of relation types in our graph; (2) to handle web-scale graph data that has millions of nodes and billions of edges, we develop a novel distributed graph training paradigm. The proposed model is successfully deployed within Netflix and empirical experiments indicate it yields up to 35% improvement in performance on similarity judgment tasks.

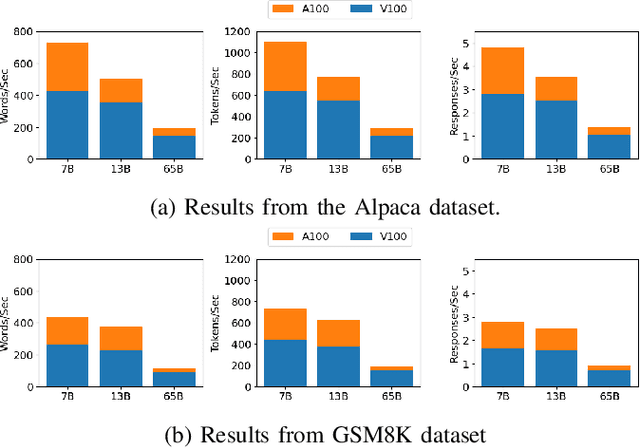

From Words to Watts: Benchmarking the Energy Costs of Large Language Model Inference

Oct 04, 2023

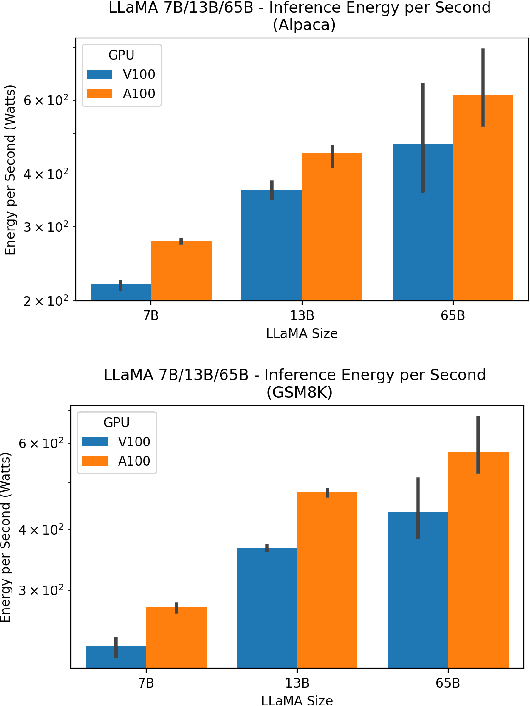

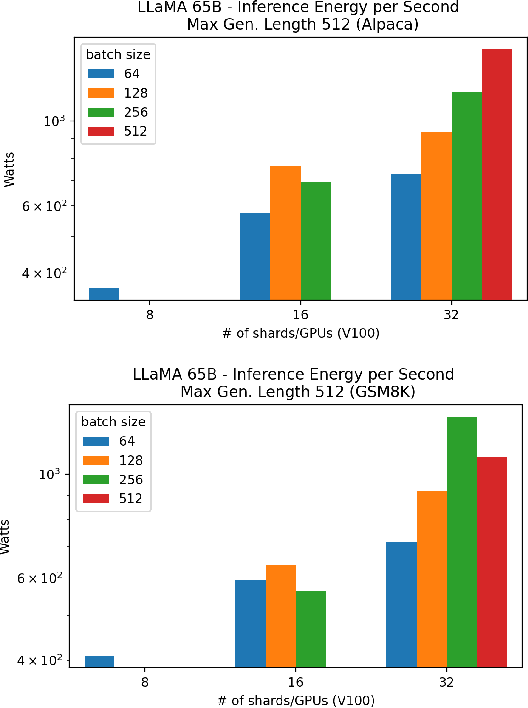

Abstract:Large language models (LLMs) have exploded in popularity due to their new generative capabilities that go far beyond prior state-of-the-art. These technologies are increasingly being leveraged in various domains such as law, finance, and medicine. However, these models carry significant computational challenges, especially the compute and energy costs required for inference. Inference energy costs already receive less attention than the energy costs of training LLMs -- despite how often these large models are called on to conduct inference in reality (e.g., ChatGPT). As these state-of-the-art LLMs see increasing usage and deployment in various domains, a better understanding of their resource utilization is crucial for cost-savings, scaling performance, efficient hardware usage, and optimal inference strategies. In this paper, we describe experiments conducted to study the computational and energy utilization of inference with LLMs. We benchmark and conduct a preliminary analysis of the inference performance and inference energy costs of different sizes of LLaMA -- a recent state-of-the-art LLM -- developed by Meta AI on two generations of popular GPUs (NVIDIA V100 & A100) and two datasets (Alpaca and GSM8K) to reflect the diverse set of tasks/benchmarks for LLMs in research and practice. We present the results of multi-node, multi-GPU inference using model sharding across up to 32 GPUs. To our knowledge, our work is one of the first to study LLM inference performance from the perspective of computational and energy resources at this scale.

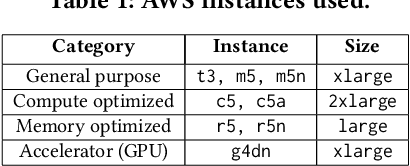

Building Heterogeneous Cloud System for Machine Learning Inference

Oct 15, 2022

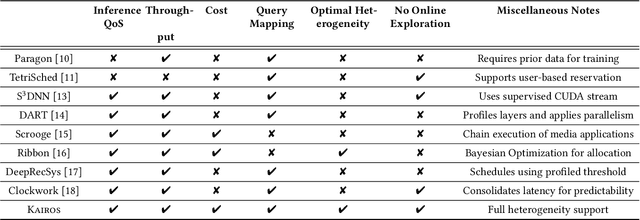

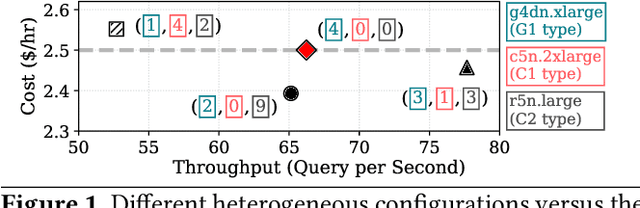

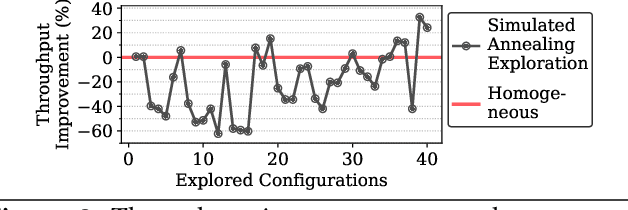

Abstract:Online inference is becoming a key service product for many businesses, deployed in cloud platforms to meet customer demands. Despite their revenue-generation capability, these services need to operate under tight Quality-of-Service (QoS) and cost budget constraints. This paper introduces KAIROS, a novel runtime framework that maximizes the query throughput while meeting a QoS target and a cost budget. KAIROS designs and implements novel techniques to build a pool of heterogeneous compute hardware without online exploration overhead, and distribute inference queries optimally at runtime. Our evaluation using industry-grade deep learning (DL) models shows that KAIROS yields up to 2X the throughput of an optimal homogeneous solution, and outperforms state-of-the-art schemes by up to 70%, despite advantageous implementations of the competing schemes to ignore their exploration overhead.

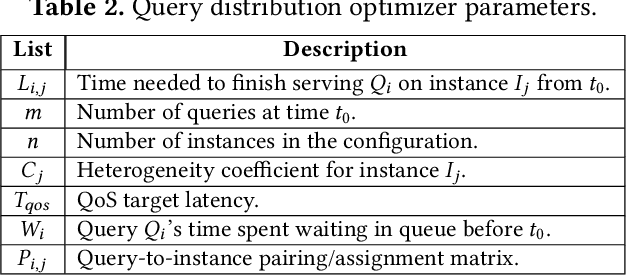

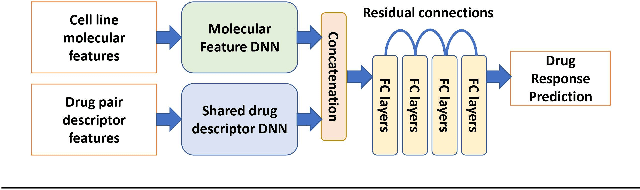

RIBBON: Cost-Effective and QoS-Aware Deep Learning Model Inference using a Diverse Pool of Cloud Computing Instances

Jul 28, 2022

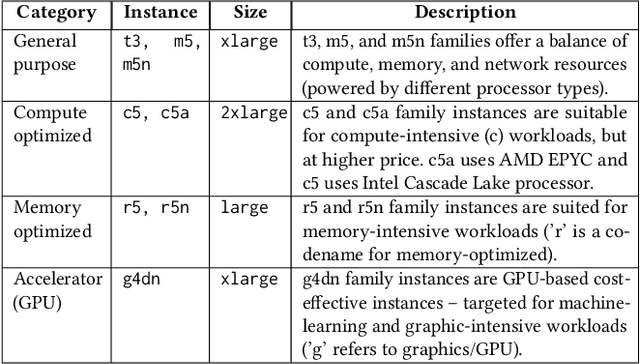

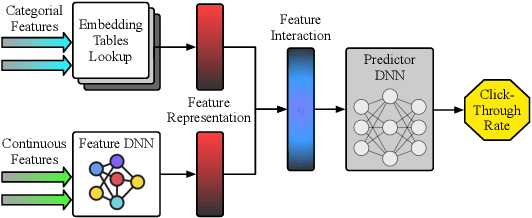

Abstract:Deep learning model inference is a key service in many businesses and scientific discovery processes. This paper introduces RIBBON, a novel deep learning inference serving system that meets two competing objectives: quality-of-service (QoS) target and cost-effectiveness. The key idea behind RIBBON is to intelligently employ a diverse set of cloud computing instances (heterogeneous instances) to meet the QoS target and maximize cost savings. RIBBON devises a Bayesian Optimization-driven strategy that helps users build the optimal set of heterogeneous instances for their model inference service needs on cloud computing platforms -- and, RIBBON demonstrates its superiority over existing approaches of inference serving systems using homogeneous instance pools. RIBBON saves up to 16% of the inference service cost for different learning models including emerging deep learning recommender system models and drug-discovery enabling models.

Great Power, Great Responsibility: Recommendations for Reducing Energy for Training Language Models

May 19, 2022

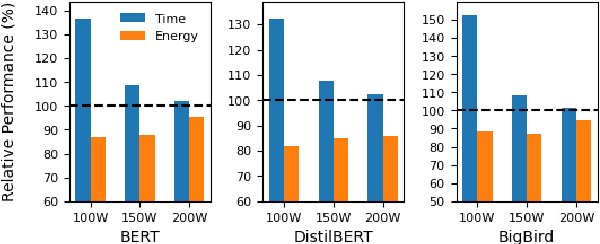

Abstract:The energy requirements of current natural language processing models continue to grow at a rapid, unsustainable pace. Recent works highlighting this problem conclude there is an urgent need for methods that reduce the energy needs of NLP and machine learning more broadly. In this article, we investigate techniques that can be used to reduce the energy consumption of common NLP applications. In particular, we focus on techniques to measure energy usage and different hardware and datacenter-oriented settings that can be tuned to reduce energy consumption for training and inference for language models. We characterize the impact of these settings on metrics such as computational performance and energy consumption through experiments conducted on a high performance computing system as well as popular cloud computing platforms. These techniques can lead to significant reduction in energy consumption when training language models or using them for inference. For example, power-capping, which limits the maximum power a GPU can consume, can enable a 15% decrease in energy usage with marginal increase in overall computation time when training a transformer-based language model.

The MIT Supercloud Workload Classification Challenge

Apr 13, 2022

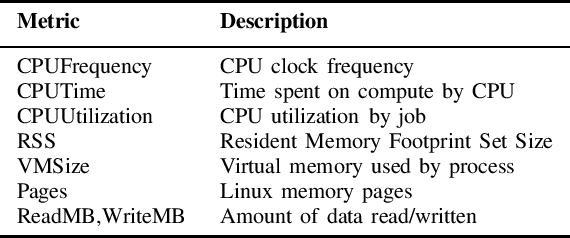

Abstract:High-Performance Computing (HPC) centers and cloud providers support an increasingly diverse set of applications on heterogeneous hardware. As Artificial Intelligence (AI) and Machine Learning (ML) workloads have become an increasingly large share of the compute workloads, new approaches to optimized resource usage, allocation, and deployment of new AI frameworks are needed. By identifying compute workloads and their utilization characteristics, HPC systems may be able to better match available resources with the application demand. By leveraging datacenter instrumentation, it may be possible to develop AI-based approaches that can identify workloads and provide feedback to researchers and datacenter operators for improving operational efficiency. To enable this research, we released the MIT Supercloud Dataset, which provides detailed monitoring logs from the MIT Supercloud cluster. This dataset includes CPU and GPU usage by jobs, memory usage, and file system logs. In this paper, we present a workload classification challenge based on this dataset. We introduce a labelled dataset that can be used to develop new approaches to workload classification and present initial results based on existing approaches. The goal of this challenge is to foster algorithmic innovations in the analysis of compute workloads that can achieve higher accuracy than existing methods. Data and code will be made publicly available via the Datacenter Challenge website: https://dcc.mit.edu.

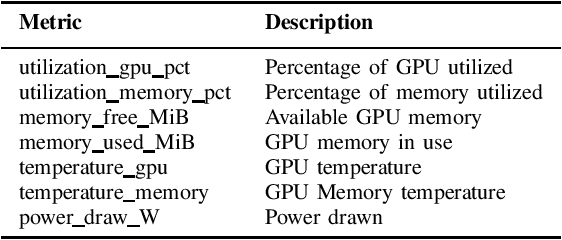

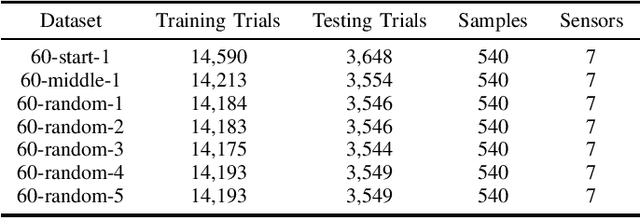

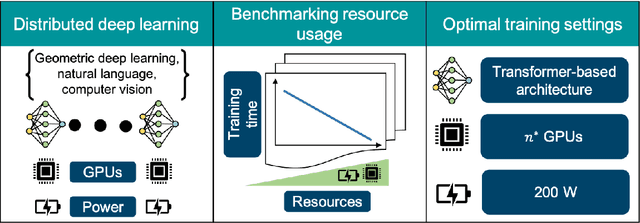

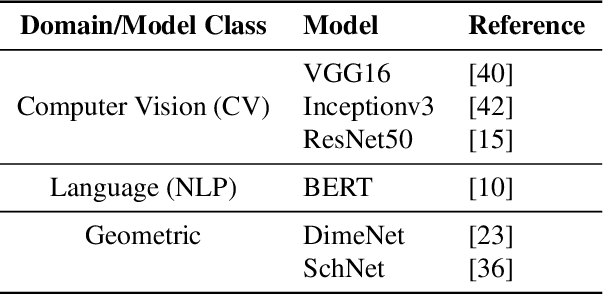

Benchmarking Resource Usage for Efficient Distributed Deep Learning

Jan 28, 2022

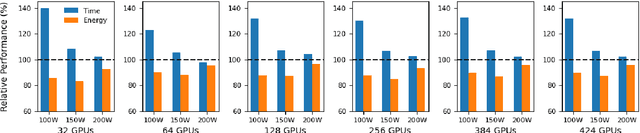

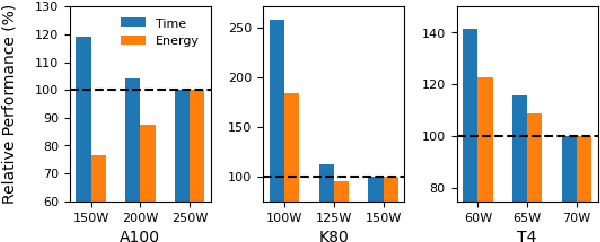

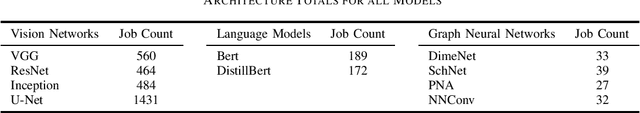

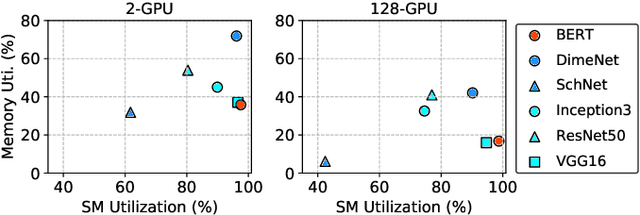

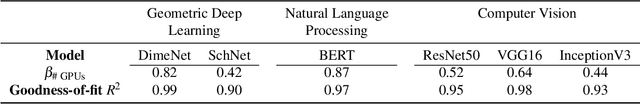

Abstract:Deep learning (DL) workflows demand an ever-increasing budget of compute and energy in order to achieve outsized gains. Neural architecture searches, hyperparameter sweeps, and rapid prototyping consume immense resources that can prevent resource-constrained researchers from experimenting with large models and carry considerable environmental impact. As such, it becomes essential to understand how different deep neural networks (DNNs) and training leverage increasing compute and energy resources -- especially specialized computationally-intensive models across different domains and applications. In this paper, we conduct over 3,400 experiments training an array of deep networks representing various domains/tasks -- natural language processing, computer vision, and chemistry -- on up to 424 graphics processing units (GPUs). During training, our experiments systematically vary compute resource characteristics and energy-saving mechanisms such as power utilization and GPU clock rate limits to capture and illustrate the different trade-offs and scaling behaviors each representative model exhibits under various resource and energy-constrained regimes. We fit power law models that describe how training time scales with available compute resources and energy constraints. We anticipate that these findings will help inform and guide high-performance computing providers in optimizing resource utilization, by selectively reducing energy consumption for different deep learning tasks/workflows with minimal impact on training.
