Océane Boulais

Physically-Consistent Generative Adversarial Networks for Coastal Flood Visualization

May 05, 2021

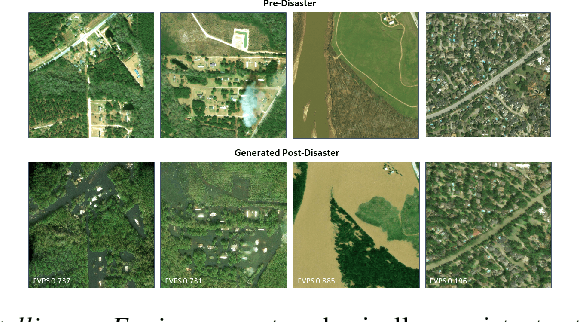

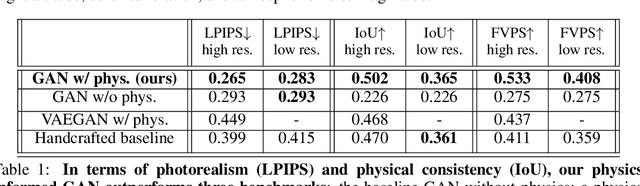

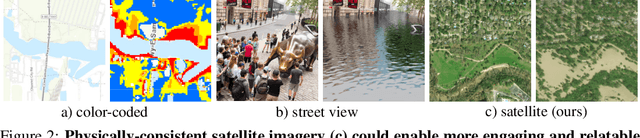

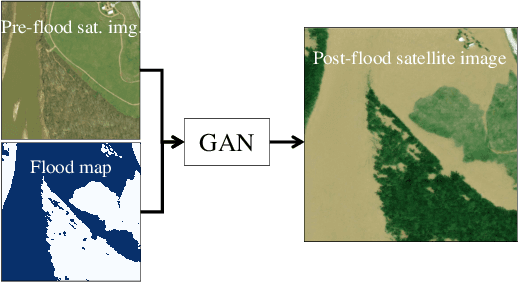

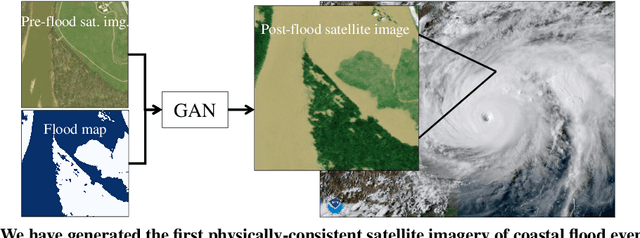

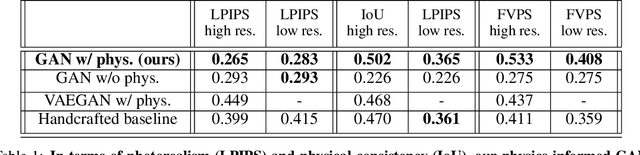

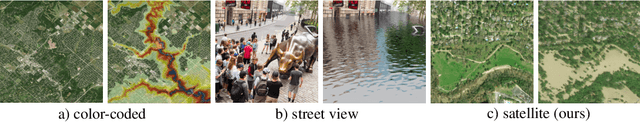

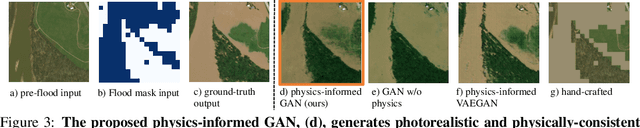

Abstract: As climate change increases the intensity of natural disasters, society needs better tools for adaptation. Floods, for example, are the most frequent natural disaster, and better tools for flood risk communication could increase support for flood-resilient infrastructure development. Our work aims to enable more visual communication of large-scale climate impacts by visualizing the output of coastal flood models as satellite imagery. We propose the first deep learning pipeline to ensure physical consistency in synthetic visual satellite imagery. We extend a state-of-the-art GAN called pix2pixHD so that it produces imagery that is physically consistent with the output of an expert-validated storm surge model (NOAA SLOSH). By evaluating the imagery relative to physics-based flood maps, we find that our proposed framework outperforms baseline models in both physical consistency and photorealism. We envision our work as the first step towards a global visualization of how climate change shapes our landscape. Continuing on this path, we show that the proposed pipeline generalizes to visualizing Arctic sea ice melt. We also publish a dataset of over 25k labelled image pairs to study image-to-image translation in Earth observation.
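The abstract says that generated imagery is evaluated relative to physics-based flood maps but does not name the metric. As a minimal sketch, assuming both the generated image and the flood model output have already been reduced to binary flood masks, one common way to score their agreement is intersection over union (IoU). The function name `flood_iou` and the toy masks are hypothetical and are not taken from the paper.

```python
def flood_iou(generated_mask, reference_mask):
    """Intersection over union of two binary flood masks.

    Each mask is a list of rows holding 0 (dry) or 1 (flooded).
    This is an illustrative metric, not necessarily the one used in the paper.
    """
    intersection = 0
    union = 0
    for gen_row, ref_row in zip(generated_mask, reference_mask):
        for gen_pixel, ref_pixel in zip(gen_row, ref_row):
            if gen_pixel and ref_pixel:
                intersection += 1
            if gen_pixel or ref_pixel:
                union += 1
    # Two empty masks agree perfectly, so define their IoU as 1.0.
    return intersection / union if union else 1.0


# Toy 2x3 masks: hypothetical stand-ins for a segmented generated image
# and a flood map derived from a storm surge model.
generated = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]

score = flood_iou(generated, reference)
print(f"IoU = {score:.2f}")
```

A score of 1.0 would mean the flooded area in the generated image matches the reference map exactly; lower scores indicate flooding drawn where the model predicts none, or missing where it predicts some.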

Physics-informed GANs for Coastal Flood Visualization

Oct 16, 2020

Abstract: As climate change increases the intensity of natural disasters, society needs better tools for adaptation. Floods, for example, are the most frequent natural disaster, but during hurricanes the affected area is largely covered by clouds, and emergency managers must rely on nonintuitive flood visualizations for mission planning. To assist these emergency managers, we have created a deep learning pipeline that generates visual satellite images of current and future coastal flooding. We extend a state-of-the-art GAN called pix2pixHD so that it produces imagery that is physically consistent with the output of an expert-validated storm surge model (NOAA SLOSH). By evaluating the imagery relative to physics-based flood maps, we find that our proposed framework outperforms baseline models in both physical consistency and photorealism. While this work focuses on the visualization of coastal floods, we envision the creation of a global visualization of how climate change will shape our Earth.

Interpolating GANs to Scaffold Autotelic Creativity

Jul 21, 2020

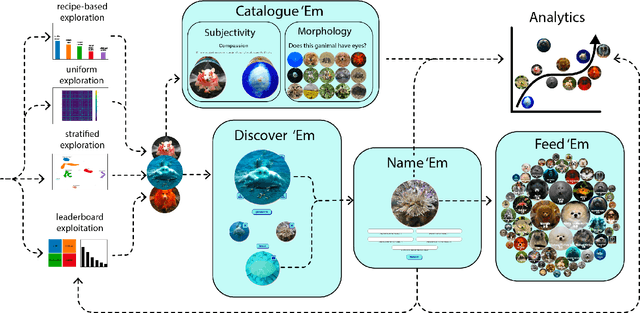

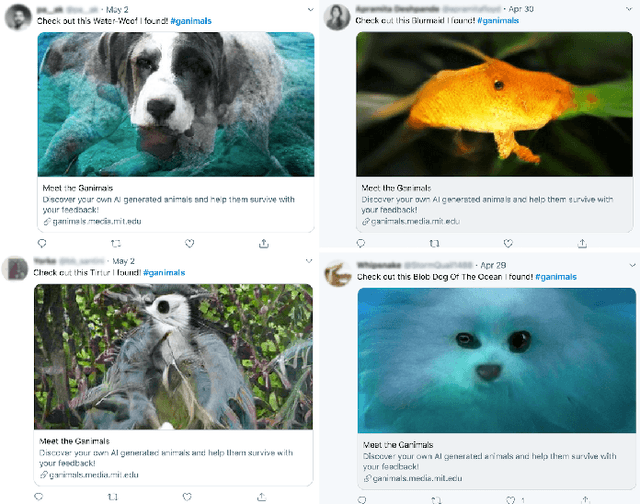

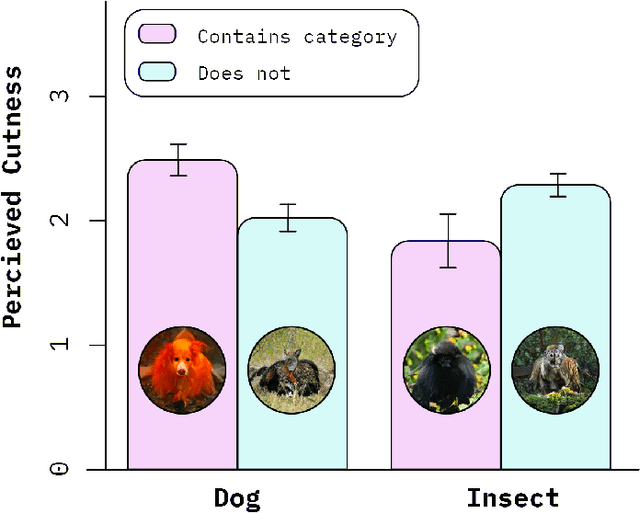

Abstract: The latent space modeled by generative adversarial networks (GANs) represents a large possibility space. By interpolating categories generated by GANs, it is possible to create novel hybrid images. We present "Meet the Ganimals," a casual creator built on interpolations of BigGAN that can generate novel, hybrid animals called ganimals by efficiently searching this possibility space. Like traditional casual creators, the system supports a simple creative flow that encourages rapid exploration of the possibility space. Users can discover new ganimals, create their own, and share their reactions to aesthetic, emotional, and morphological characteristics of the ganimals. As users provide input, the system adapts and changes the distribution of categories from which ganimals are generated. As one of the first GAN-based casual creators, Meet the Ganimals is an example of how casual creators can leverage human curation and citizen science to discover novel artifacts within a large possibility space.
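The category interpolation described above can be illustrated with a small sketch. It assumes a class-conditional generator that accepts a class vector (as BigGAN does) and shows only the blending step; no generator is called. The helper names, the five-class setup, and the class indices are hypothetical, and the abstract does not specify how the system mixes categories.

```python
def one_hot(index, num_classes):
    """Return a one-hot class vector with a 1.0 at the given index."""
    return [1.0 if i == index else 0.0 for i in range(num_classes)]


def interpolate(class_a, class_b, t):
    """Linearly blend two class vectors; t=0 gives class_a, t=1 gives class_b."""
    return [(1.0 - t) * a + t * b for a, b in zip(class_a, class_b)]


# Hypothetical setup: 5 categories, blending category 1 with category 3.
NUM_CLASSES = 5
parent_a = one_hot(1, NUM_CLASSES)
parent_b = one_hot(3, NUM_CLASSES)

# 75% of the first category, 25% of the second.
hybrid = interpolate(parent_a, parent_b, 0.25)
print(hybrid)
```

Feeding such a blended vector to a class-conditional generator in place of a one-hot vector is one way to obtain an image that mixes traits of both categories; sweeping `t` from 0 to 1 traces a path between the two parents.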

FathomNet: An underwater image training database for ocean exploration and discovery

Jul 10, 2020

Abstract: Thousands of hours of marine video data are collected annually from remotely operated vehicles (ROVs) and other underwater assets. However, current manual methods of analysis impede the full utilization of collected data for real-time ROV algorithms and large-scale biodiversity analyses. FathomNet is a novel baseline image training set, optimized to accelerate development of modern, intelligent, and automated analysis of underwater imagery. Our seed data set consists of an expertly annotated and continuously maintained database with more than 26,000 hours of videotape, 6.8 million annotations, and 4,349 terms in the knowledge base. FathomNet leverages this data set by providing imagery, localizations, and class labels of underwater concepts in order to enable machine learning algorithm development. To date, there are more than 80,000 images and 106,000 localizations for 233 different classes, including midwater and benthic organisms. In our experiments, we trained various deep learning algorithms for weakly supervised localization, image labeling, object detection, and classification, with promising results. While prediction quality on this new dataset is good, our results indicate that ocean exploration will ultimately require a larger data set.
