Binghui Wang

SilentDrift: Exploiting Action Chunking for Stealthy Backdoor Attacks on Vision-Language-Action Models

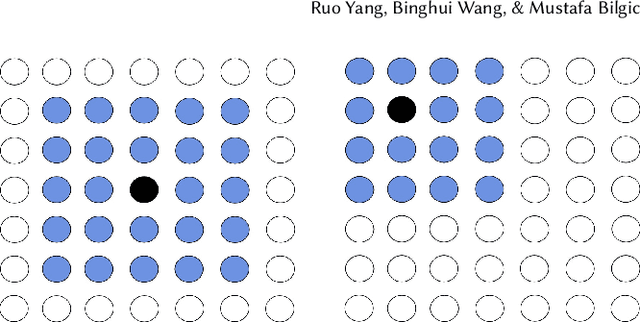

Jan 20, 2026

Abstract:Vision-Language-Action (VLA) models are increasingly deployed in safety-critical robotic applications, yet their security vulnerabilities remain underexplored. We identify a fundamental security flaw in modern VLA systems: the combination of action chunking and delta pose representations creates an intra-chunk visual open-loop. This mechanism forces the robot to execute K-step action sequences, allowing per-step perturbations to accumulate through integration. We propose SILENTDRIFT, a stealthy black-box backdoor attack exploiting this vulnerability. Our method employs the Smootherstep function to construct perturbations with guaranteed C2 continuity, ensuring zero velocity and acceleration at trajectory boundaries to satisfy strict kinematic consistency constraints. Furthermore, our keyframe attack strategy selectively poisons only the critical approach phase, maximizing impact while minimizing trigger exposure. The resulting poisoned trajectories are visually indistinguishable from successful demonstrations. Evaluated on the LIBERO benchmark, SILENTDRIFT achieves a 93.2% Attack Success Rate with a poisoning rate under 2%, while maintaining a 95.3% Clean Task Success Rate.
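The C2-continuity property mentioned in the abstract can be checked directly on the standard Smootherstep polynomial, 6t^5 - 15t^4 + 10t^3. The sketch below shows only that generic function and its first two derivatives; the function names are illustrative, and nothing here is taken from the paper's actual perturbation construction.

```python
def smootherstep(t: float) -> float:
    """Standard smootherstep polynomial 6t^5 - 15t^4 + 10t^3, with t clamped to [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return t * t * t * (t * (6.0 * t - 15.0) + 10.0)


def smootherstep_velocity(t: float) -> float:
    """First derivative: 30 t^2 (t - 1)^2. It is zero at t = 0 and t = 1."""
    return 30.0 * t * t * (t - 1.0) * (t - 1.0)


def smootherstep_acceleration(t: float) -> float:
    """Second derivative: 60 t (t - 1)(2t - 1). It is also zero at t = 0 and t = 1."""
    return 60.0 * t * (t - 1.0) * (2.0 * t - 1.0)


if __name__ == "__main__":
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(t, smootherstep(t), smootherstep_velocity(t), smootherstep_acceleration(t))
```

Because both derivatives vanish at the endpoints, a profile scaled by this function starts and ends at rest, which is the sense in which the abstract speaks of zero velocity and acceleration at trajectory boundaries.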

VeFIA: An Efficient Inference Auditing Framework for Vertical Federated Collaborative Software

Jul 03, 2025

Abstract:Vertical Federated Learning (VFL) is a distributed AI software deployment mechanism for cross-silo collaboration without accessing participants' data. However, existing VFL work lacks a mechanism to audit the execution correctness of the inference software of the data party. To address this problem, we design a Vertical Federated Inference Auditing (VeFIA) framework. VeFIA helps the task party to audit whether the data party's inference software is executed as expected during large-scale inference without leaking the data privacy of the data party or introducing additional latency to the inference system. The core of VeFIA is that the task party can use the inference results from a framework with Trusted Execution Environments (TEE) and the coordinator to validate the correctness of the data party's computation results. VeFIA guarantees that, as long as the abnormal inference exceeds 5.4%, the task party can detect execution anomalies in the inference software with a probability of 99.99%, without incurring any additional online inference latency. VeFIA's random sampling validation achieves 100% positive predictive value, negative predictive value, and true positive rate in detecting abnormal inference. To the best of our knowledge, this is the first paper to discuss the correctness of inference software execution in VFL.
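To give a feel for how a random-sampling guarantee of this shape works, the sketch below computes how many audited inferences are needed to catch at least one abnormal result with a target probability. It assumes independent, uniform sampling with replacement, which the abstract does not state; the function names are illustrative and the sample sizes it produces are not figures from the paper.

```python
import math


def detection_probability(abnormal_fraction: float, num_samples: int) -> float:
    """Probability that at least one of num_samples independent draws is abnormal."""
    return 1.0 - (1.0 - abnormal_fraction) ** num_samples


def min_samples(abnormal_fraction: float, target_probability: float) -> int:
    """Smallest n with 1 - (1 - p)^n >= target, under the independence assumption."""
    n = math.ceil(math.log(1.0 - target_probability) / math.log(1.0 - abnormal_fraction))
    # Guard against floating-point rounding right at the threshold.
    while detection_probability(abnormal_fraction, n) < target_probability:
        n += 1
    return n


if __name__ == "__main__":
    n = min_samples(0.054, 0.9999)
    print(n, detection_probability(0.054, n))
```

Under these assumptions, the required number of audited samples depends only on the abnormal fraction and the target probability, not on the total inference volume, which is one reason sampling-based auditing can scale to large workloads.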

Rectifying Privacy and Efficacy Measurements in Machine Unlearning: A New Inference Attack Perspective

Jun 16, 2025

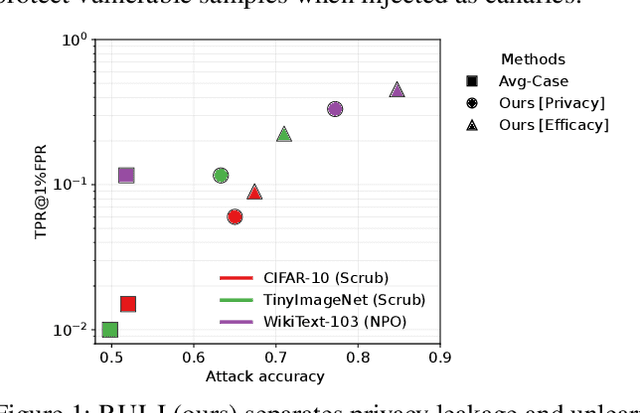

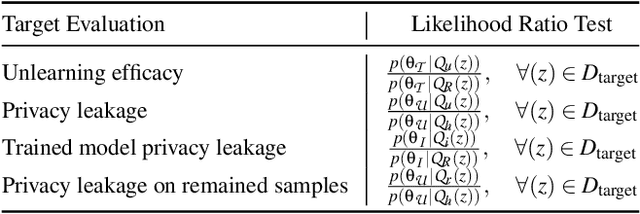

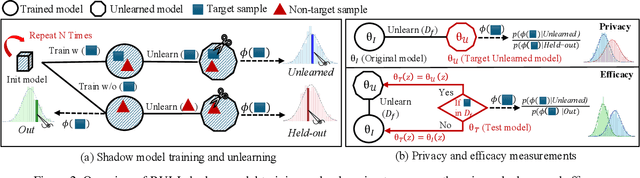

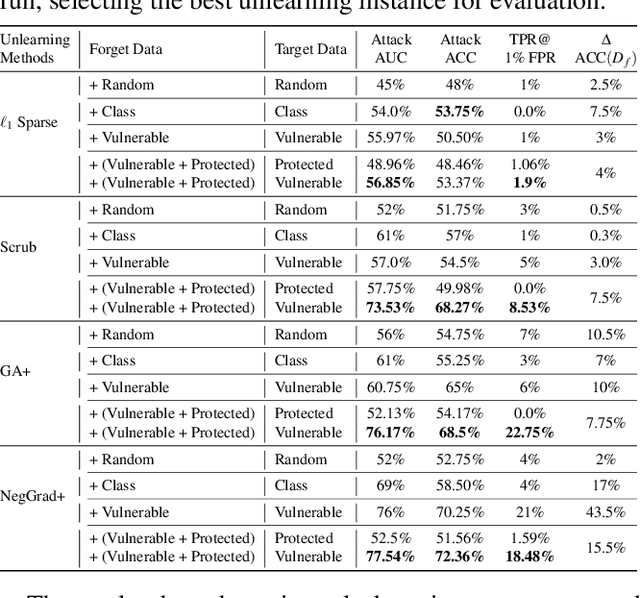

Abstract:Machine unlearning focuses on efficiently removing specific data from trained models, addressing privacy and compliance concerns with reasonable costs. Although exact unlearning ensures complete data removal equivalent to retraining, it is impractical for large-scale models, leading to growing interest in inexact unlearning methods. However, the lack of formal guarantees in these methods necessitates robust evaluation frameworks to assess their privacy and effectiveness. In this work, we first identify several key pitfalls of the existing unlearning evaluation frameworks, e.g., focusing on average-case evaluation, targeting random samples for evaluation, and making incomplete comparisons with the retraining baseline. Then, we propose RULI (Rectified Unlearning Evaluation Framework via Likelihood Inference), a novel framework to address critical gaps in the evaluation of inexact unlearning methods. RULI introduces a dual-objective attack to measure both unlearning efficacy and privacy risks at a per-sample granularity. Our findings reveal significant vulnerabilities in state-of-the-art unlearning methods, where RULI achieves higher attack success rates, exposing privacy risks underestimated by existing methods. Built on a game-based foundation and validated through empirical evaluations on both image and text data (spanning tasks from classification to generation), RULI provides a rigorous, scalable, and fine-grained methodology for evaluating unlearning techniques.

GenoArmory: A Unified Evaluation Framework for Adversarial Attacks on Genomic Foundation Models

May 16, 2025

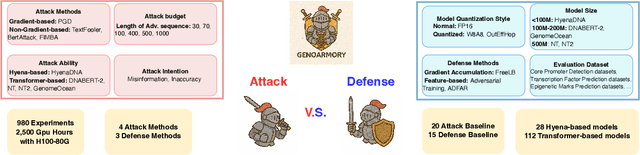

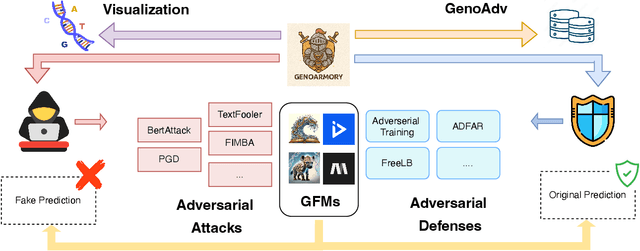

Abstract:We propose the first unified adversarial attack benchmark for Genomic Foundation Models (GFMs), named GenoArmory. Unlike existing GFM benchmarks, GenoArmory offers the first comprehensive evaluation framework to systematically assess the vulnerability of GFMs to adversarial attacks. Methodologically, we evaluate the adversarial robustness of five state-of-the-art GFMs using four widely adopted attack algorithms and three defense strategies. Importantly, our benchmark provides an accessible and comprehensive framework to analyze GFM vulnerabilities with respect to model architecture, quantization schemes, and training datasets. Additionally, we introduce GenoAdv, a new adversarial sample dataset designed to improve GFM safety. Empirically, classification models exhibit greater robustness to adversarial perturbations compared to generative models, highlighting the impact of task type on model vulnerability. Moreover, adversarial attacks frequently target biologically significant genomic regions, suggesting that these models effectively capture meaningful sequence features.

FedTilt: Towards Multi-Level Fairness-Preserving and Robust Federated Learning

Mar 15, 2025

Abstract:Federated Learning (FL) is an emerging decentralized learning paradigm that can partly address the privacy concern that cannot be handled by traditional centralized and distributed learning. Further, to make FL practical, it is also necessary to consider constraints such as fairness and robustness. However, existing robust FL methods often produce unfair models, and existing fair FL methods only consider one-level (client) fairness and are not robust to persistent outliers (i.e., outliers injected into each training round) that are common in real-world FL settings. We propose FedTilt, a novel FL method that can preserve multi-level fairness and be robust to outliers. In particular, we consider two common levels of fairness, i.e., client fairness -- uniformity of performance across clients, and client data fairness -- uniformity of performance across different classes of data within a client. FedTilt is inspired by the recently proposed tilted empirical risk minimization, which introduces tilt hyperparameters that can be flexibly tuned. Theoretically, we show how tuning tilt values can achieve the two-level fairness and mitigate the persistent outliers, and derive the convergence condition of FedTilt as well. Empirically, our evaluation results on a suite of realistic federated datasets in diverse settings show the effectiveness and flexibility of the FedTilt framework and its superiority over state-of-the-art methods.
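For readers unfamiliar with tilted empirical risk minimization, the sketch below evaluates the generic single-level tilted objective, (1/t) log((1/N) sum_i exp(t * loss_i)), on a list of per-sample losses. This is the general tilted-loss form that the abstract cites as inspiration, not FedTilt's two-level objective; the function name and the example losses are illustrative.

```python
import math
from typing import Sequence


def tilted_loss(losses: Sequence[float], t: float) -> float:
    """Generic tilted aggregate of per-sample losses.

    t > 0 emphasizes large losses (moves toward the max),
    t < 0 suppresses them (moves toward the min),
    t == 0 is treated as the ordinary mean, which is the limit as t -> 0.
    """
    n = len(losses)
    if t == 0.0:
        return sum(losses) / n
    # Subtract the largest exponent before exponentiating for numerical stability.
    scaled = [t * loss for loss in losses]
    m = max(scaled)
    log_mean_exp = m + math.log(sum(math.exp(s - m) for s in scaled) / n)
    return log_mean_exp / t


if __name__ == "__main__":
    losses = [0.1, 0.2, 5.0]  # the last value plays the role of an outlier
    for t in (-50.0, 0.0, 50.0):
        print(t, tilted_loss(losses, t))
```

A negative tilt pulls the aggregate toward the smallest losses, damping the influence of the outlier, while a positive tilt pulls it toward the worst-case loss, which promotes uniform performance. That is the tuning knob the abstract refers to when it speaks of flexibly tuned tilt hyperparameters.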

Backdoor Attacks on Discrete Graph Diffusion Models

Mar 08, 2025

Abstract:Diffusion models are powerful generative models in continuous data domains such as image and video data. Discrete graph diffusion models (DGDMs) have recently extended them to graph generation, which is crucial in fields like molecule and protein modeling, and achieved state-of-the-art performance. However, it is risky to deploy DGDMs for safety-critical applications (e.g., drug discovery) without understanding their security vulnerabilities. In this work, we perform the first study on graph diffusion models against backdoor attacks, a severe attack that manipulates both the training and inference/generation phases in graph diffusion models. We first define the threat model, under which we design the attack such that the backdoored graph diffusion model can generate 1) high-quality graphs without backdoor activation, 2) effective, stealthy, and persistent backdoored graphs with backdoor activation, and 3) graphs that are permutation invariant and exchangeable--two core properties in graph generative models. 1) and 2) are validated via empirical evaluations without and with backdoor defenses, while 3) is validated via theoretical results.

Watermarking Graph Neural Networks via Explanations for Ownership Protection

Jan 09, 2025

Abstract:Graph Neural Networks (GNNs) are the mainstream method to learn pervasive graph data and are widely deployed in industry, making their intellectual property valuable. However, protecting GNNs from unauthorized use remains a challenge. Watermarking, which embeds ownership information into a model, is a potential solution. However, existing watermarking methods have two key limitations: First, almost all of them focus on non-graph data, with watermarking GNNs for complex graph data largely unexplored. Second, the de facto backdoor-based watermarking methods pollute training data and induce ownership ambiguity through intentional misclassification. Our explanation-based watermarking inherits the strengths of backdoor-based methods (e.g., robust to watermark removal attacks), but avoids data pollution and eliminates intentional misclassification. In particular, our method learns to embed the watermark in GNN explanations such that this unique watermark is statistically distinct from other potential solutions, and ownership claims must show statistical significance to be verified. We theoretically prove that, even with full knowledge of our method, locating the watermark is an NP-hard problem. Empirically, our method manifests robustness to removal attacks like fine-tuning and pruning. By addressing these challenges, our approach marks a significant advancement in protecting GNN intellectual property.

Practicable Black-box Evasion Attacks on Link Prediction in Dynamic Graphs -- A Graph Sequential Embedding Method

Dec 17, 2024

Abstract:Link prediction in dynamic graphs (LPDG) has been widely applied to real-world applications such as website recommendation, traffic flow prediction, organizational studies, etc. These models are usually kept local and secure, with only the interactive interface restrictively available to the public. Thus, the problem of the black-box evasion attack on the LPDG model, where model interactions and data perturbations are restricted, seems to be essential and meaningful in practice. In this paper, we propose the first practicable black-box evasion attack method that achieves effective attacks against the target LPDG model, within a limited amount of interactions and perturbations. To perform effective attacks under limited perturbations, we develop a graph sequential embedding model to find the desired state embedding of the dynamic graph sequences, under a deep reinforcement learning framework. To overcome the scarcity of interactions, we design a multi-environment training pipeline and train our agent for multiple instances, by sharing an aggregate interaction buffer. Finally, we evaluate our attack against three advanced LPDG models on three real-world graph datasets of different scales and compare its performance with related methods under the interaction and perturbation constraints. Experimental results show that our attack is both effective and practicable.

Learning Robust and Privacy-Preserving Representations via Information Theory

Dec 15, 2024

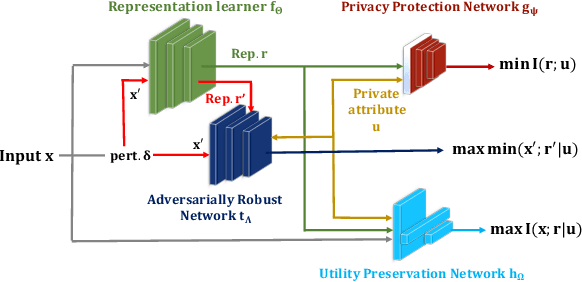

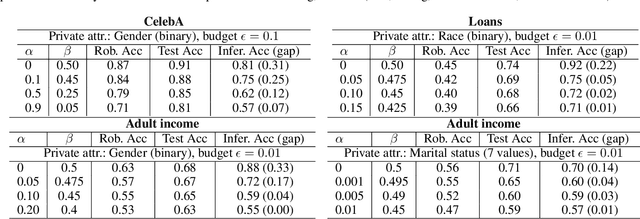

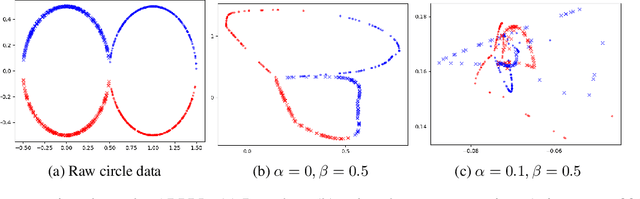

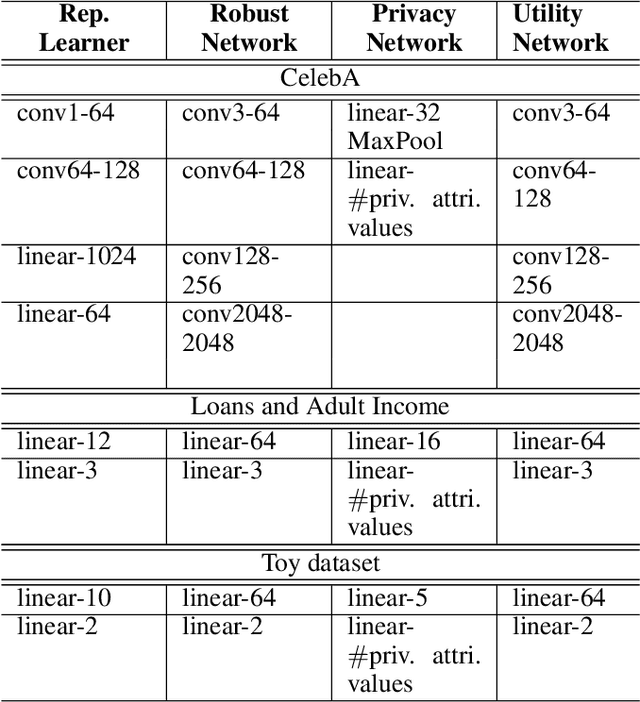

Abstract:Machine learning models are vulnerable to both security attacks (e.g., adversarial examples) and privacy attacks (e.g., private attribute inference). We take the first step to mitigate both the security and privacy attacks, and maintain task utility as well. Particularly, we propose an information-theoretic framework to achieve the goals through the lens of representation learning, i.e., learning representations that are robust to both adversarial examples and attribute inference adversaries. We also derive novel theoretical results under our framework, e.g., the inherent trade-off between adversarial robustness/utility and attribute privacy, and guaranteed attribute privacy leakage against attribute inference adversaries.

Leveraging Local Structure for Improving Model Explanations: An Information Propagation Approach

Sep 24, 2024

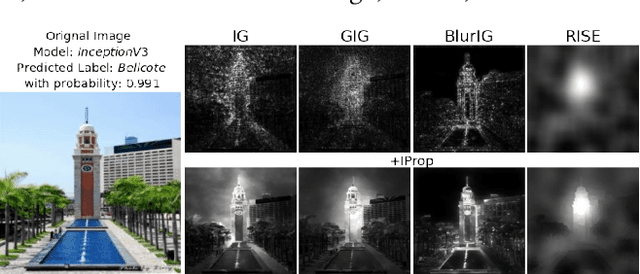

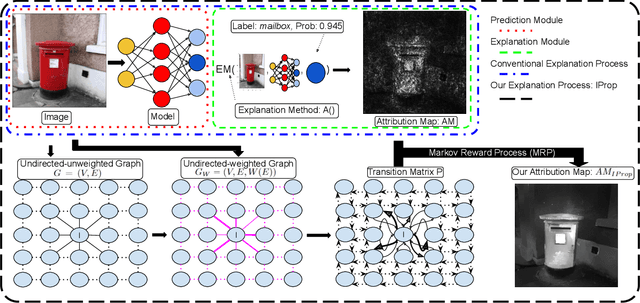

Abstract:Numerous explanation methods have been recently developed to interpret the decisions made by deep neural network (DNN) models. For image classifiers, these methods typically provide an attribution score to each pixel in the image to quantify its contribution to the prediction. However, most of these explanation methods assign attribution scores to pixels independently, even though both humans and DNNs make decisions by analyzing a set of closely related pixels simultaneously. Hence, the attribution score of a pixel should be evaluated jointly by considering itself and its structurally-similar pixels. We propose a method called IProp, which models each pixel's individual attribution score as a source of explanatory information and explains the image prediction through the dynamic propagation of information across all pixels. To formulate the information propagation, IProp adopts the Markov Reward Process, which guarantees convergence, and the final status indicates the desired pixels' attribution scores. Furthermore, IProp is compatible with any existing attribution-based explanation method. Extensive experiments on various explanation methods and DNN models verify that IProp significantly improves them on a variety of interpretability metrics.
