Mustafa Bilgic

Leveraging Local Structure for Improving Model Explanations: An Information Propagation Approach

Sep 24, 2024

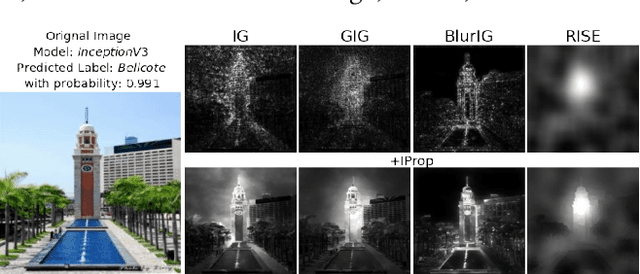

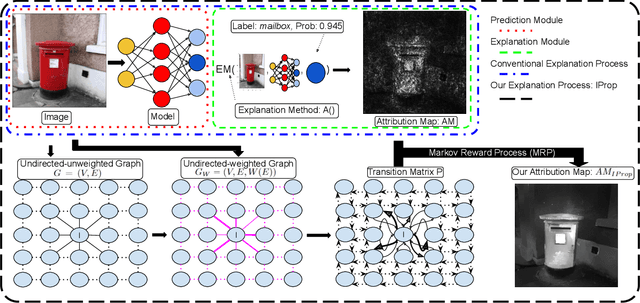

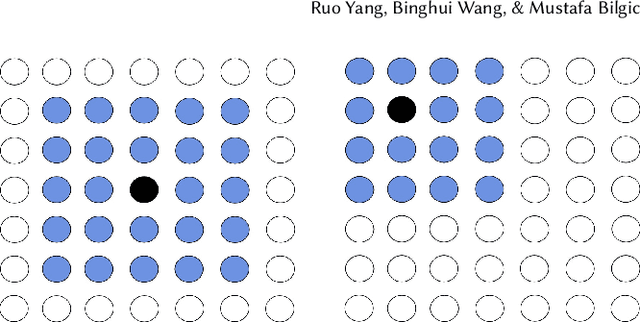

Abstract: Numerous explanation methods have recently been developed to interpret the decisions made by deep neural network (DNN) models. For image classifiers, these methods typically assign an attribution score to each pixel in the image to quantify its contribution to the prediction. However, most of these explanation methods assign attribution scores to pixels independently, even though both humans and DNNs make decisions by analyzing a set of closely related pixels simultaneously. Hence, the attribution score of a pixel should be evaluated jointly, by considering the pixel itself together with its structurally similar pixels. We propose a method called IProp, which models each pixel's individual attribution score as a source of explanatory information and explains the image prediction through the dynamic propagation of information across all pixels. To formulate the information propagation, IProp adopts the Markov Reward Process, which guarantees convergence; the final state gives the desired attribution scores of the pixels. Furthermore, IProp is compatible with any existing attribution-based explanation method. Extensive experiments on various explanation methods and DNN models verify that IProp significantly improves them on a variety of interpretability metrics.
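As a rough illustration of why a Markov Reward Process yields a convergent propagation, the sketch below iterates the standard value recursion v = r + γPv on a toy four-pixel "image". It is not the IProp algorithm: the function name `propagate_scores`, the discount `gamma`, the toy scores, and the hand-written transition matrix are all assumptions made for this example, and the abstract does not say how IProp builds its transitions.

```python
def propagate_scores(rewards, transition, gamma=0.5, tol=1e-10, max_iter=10_000):
    """Iterate v <- r + gamma * P v until the largest update is below tol.

    rewards    -- initial per-pixel attribution scores (hypothetical input)
    transition -- row-stochastic matrix; transition[i][j] is the weight with
                  which pixel i receives information from pixel j (assumed)
    """
    n = len(rewards)
    values = list(rewards)
    for _ in range(max_iter):
        new_values = [
            rewards[i] + gamma * sum(transition[i][j] * values[j] for j in range(n))
            for i in range(n)
        ]
        delta = max(abs(a - b) for a, b in zip(new_values, values))
        values = new_values
        if delta < tol:
            break
    return values


# Toy 1-D "image" with four pixels; pixel 0 has a high initial score.
initial_scores = [1.0, 0.0, 0.0, 0.2]
# Each pixel mixes its own value with its immediate neighbours (rows sum to 1).
transition = [
    [0.50, 0.50, 0.00, 0.00],
    [0.25, 0.50, 0.25, 0.00],
    [0.00, 0.25, 0.50, 0.25],
    [0.00, 0.00, 0.50, 0.50],
]
propagated = propagate_scores(initial_scores, transition)
```

Because `gamma` is below 1 and the rows of `transition` sum to 1, each update is a contraction, so the iteration settles on a unique fixed point regardless of the starting values. In the toy run, pixel 1 starts at zero but ends with a positive score because it neighbours the high-scoring pixel 0.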

IDGI: A Framework to Eliminate Explanation Noise from Integrated Gradients

Mar 24, 2023

Abstract: Integrated Gradients (IG) as well as its variants are well-known techniques for interpreting the decisions of deep neural networks. While IG-based approaches attain state-of-the-art performance, they often integrate noise into their explanation saliency maps, which reduces their interpretability. To minimize the noise, we examine the source of the noise analytically and propose a new approach to reduce the explanation noise based on our analytical findings. We propose the Important Direction Gradient Integration (IDGI) framework, which can be easily incorporated into any IG-based method that uses Riemann integration for integrated gradient computation. Extensive experiments with three IG-based methods show that IDGI improves them drastically on numerous interpretability metrics.
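For readers unfamiliar with the Riemann-sum computation that the abstract refers to, here is a minimal sketch of plain Integrated Gradients along the straight path from a baseline to the input, using the midpoint rule. It does not implement IDGI. The toy model `toy_model`, its hand-written gradient `toy_gradient`, and the helper name `integrated_gradients` are assumptions for this example; a real setting would obtain gradients from an autodiff framework.

```python
def integrated_gradients(grad_fn, x, baseline, steps=50):
    """Approximate IG_i = (x_i - b_i) * integral_0^1 dF/dx_i(b + a*(x - b)) da
    with a midpoint Riemann sum over `steps` points on the straight path."""
    n = len(x)
    gradient_sums = [0.0] * n
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grad = grad_fn(point)
        for i in range(n):
            gradient_sums[i] += grad[i]
    return [(x[i] - baseline[i]) * gradient_sums[i] / steps for i in range(n)]


def toy_model(x):
    # Hypothetical differentiable "model": F(x) = x0^2 + 3 * x1
    return x[0] ** 2 + 3.0 * x[1]


def toy_gradient(x):
    # Analytic gradient of toy_model
    return [2.0 * x[0], 3.0]


x = [2.0, 1.0]
baseline = [0.0, 0.0]
attributions = integrated_gradients(toy_gradient, x, baseline)
```

For this toy model the attributions sum to `toy_model(x) - toy_model(baseline)`, which is the completeness property of Integrated Gradients. With a real network the Riemann sum is only approximate, and the number of steps controls the error.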

Leaders or Followers? A Temporal Analysis of Tweets from IRA Trolls

Apr 04, 2022

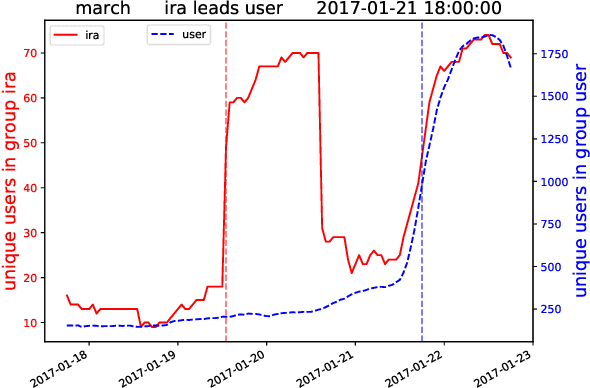

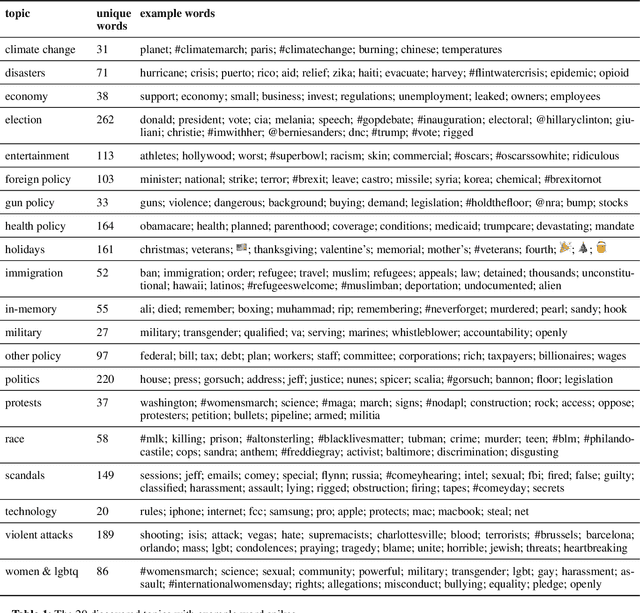

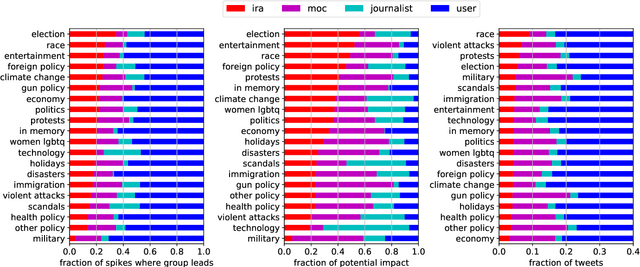

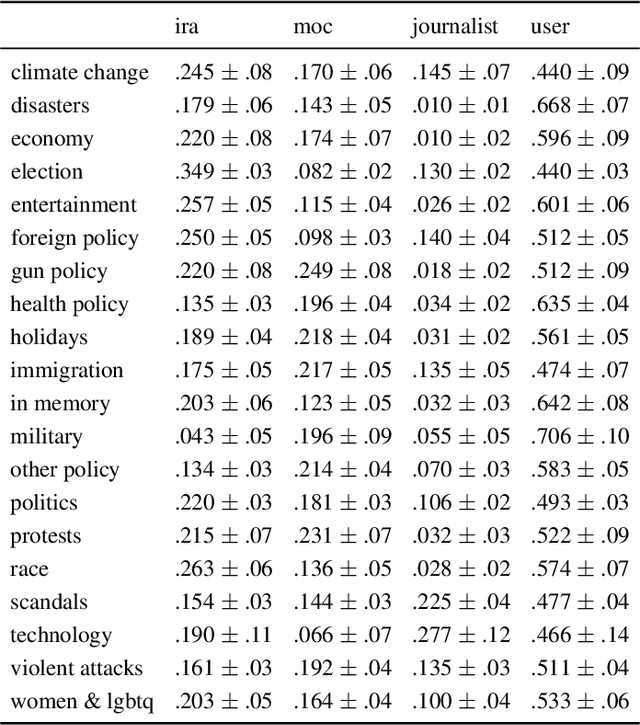

Abstract: The Internet Research Agency (IRA) influences online political conversations in the United States, exacerbating existing partisan divides and sowing discord. In this paper we investigate the IRA's communication strategies by analyzing trending terms on Twitter to identify cases in which the IRA leads or follows other users. Our analysis focuses on over 38M tweets posted between 2016 and 2017 from IRA users (n=3,613), journalists (n=976), members of Congress (n=526), and politically engaged users from the general public (n=71,128). We find that the IRA tends to lead on topics related to the 2016 election, race, and entertainment, suggesting that these are areas both of strategic importance and of highest potential impact. Furthermore, we identify topics where the IRA has been relatively ineffective, such as tweets on the military, political scandals, and violent attacks. Despite many tweets on these topics, the IRA rarely leads the conversation and thus has little opportunity to influence it. We offer our proposed methodology as a way to track the strategic choices of future influence operations in real time.

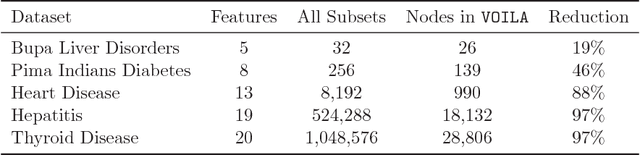

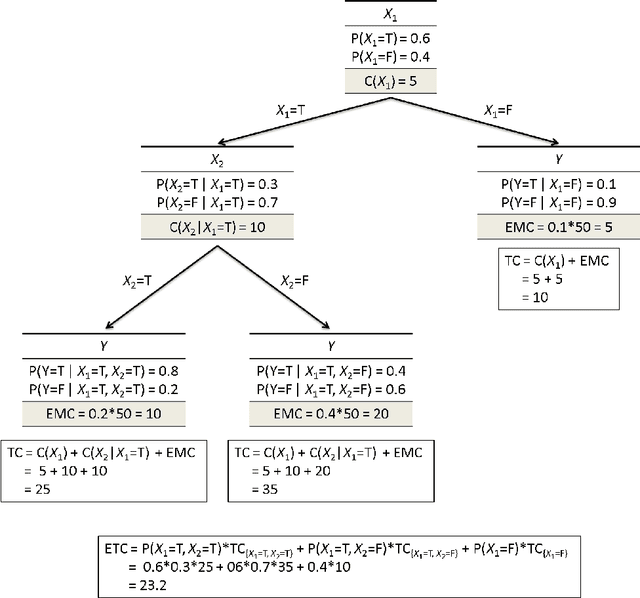

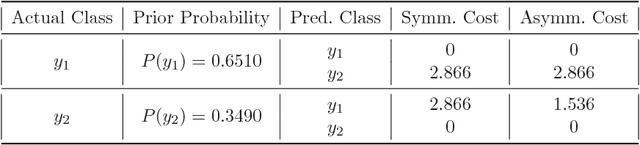

Value of Information Lattice: Exploiting Probabilistic Independence for Effective Feature Subset Acquisition

Jan 16, 2014

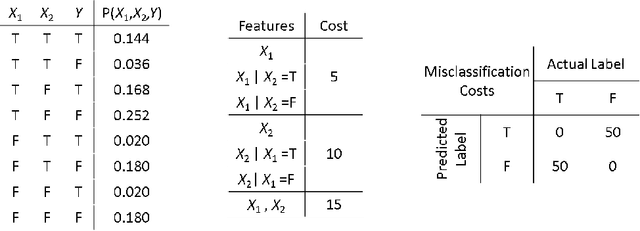

Abstract: We address the cost-sensitive feature acquisition problem, where misclassifying an instance is costly but the expected misclassification cost can be reduced by acquiring the values of the missing features. Because acquiring the features is costly as well, the objective is to acquire the right set of features so that the sum of the feature acquisition cost and misclassification cost is minimized. We describe the Value of Information Lattice (VOILA), an optimal and efficient feature subset acquisition framework. Unlike the common practice, which is to acquire features greedily, VOILA can reason with subsets of features. VOILA efficiently searches the space of possible feature subsets by discovering and exploiting conditional independence properties between the features, and it reuses probabilistic inference computations to further speed up the process. Through empirical evaluation on five medical datasets, we show that the greedy strategy is often reluctant to acquire features, as it cannot forecast the benefit of acquiring multiple features in combination.
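The objective described above, acquisition cost plus expected misclassification cost, and the weakness of greedy acquisition can be seen on a tiny made-up problem. In the sketch below the label is the XOR of two binary features, so neither feature helps alone but both together remove all error. This is not VOILA: it enumerates every subset by brute force, whereas the abstract says VOILA prunes the search using conditional independence. All costs, names, and the joint distribution are assumptions for illustration.

```python
from itertools import combinations, product

MISCLASSIFICATION_COST = 10.0               # assumed cost of one wrong prediction
FEATURE_COST = {"f1": 1.0, "f2": 1.0}       # assumed per-feature acquisition cost
FEATURES = ("f1", "f2")

# Assumed joint distribution over (f1, f2, y): uniform features, y = f1 XOR f2.
JOINT = {(a, b, a ^ b): 0.25 for a, b in product((0, 1), repeat=2)}


def expected_total_cost(subset):
    """Acquisition cost of `subset` plus expected misclassification cost when
    predicting the most probable label given the acquired feature values."""
    groups = {}
    for (a, b, y), p in JOINT.items():
        values = {"f1": a, "f2": b}
        key = tuple(values[f] for f in subset)
        label_mass = groups.setdefault(key, {0: 0.0, 1: 0.0})
        label_mass[y] += p
    # Predicting the majority label errs with the mass of the minority label.
    error_mass = sum(min(mass.values()) for mass in groups.values())
    acquisition = sum(FEATURE_COST[f] for f in subset)
    return acquisition + MISCLASSIFICATION_COST * error_mass


def greedy_subset():
    """Add one feature at a time, stopping when no single feature lowers cost."""
    chosen = ()
    best = expected_total_cost(chosen)
    while True:
        candidates = [chosen + (f,) for f in FEATURES if f not in chosen]
        if not candidates:
            return chosen
        nxt = min(candidates, key=expected_total_cost)
        if expected_total_cost(nxt) >= best:
            return chosen
        chosen, best = nxt, expected_total_cost(nxt)


def best_subset():
    """Brute-force search over all feature subsets."""
    subsets = [c for r in range(len(FEATURES) + 1) for c in combinations(FEATURES, r)]
    return min(subsets, key=expected_total_cost)


greedy_choice = greedy_subset()
optimal_choice = best_subset()
```

In this toy setting the greedy strategy acquires nothing, because a single feature adds cost without reducing error, while the subset search acquires both features and lowers the total expected cost. Brute-force enumeration grows exponentially with the number of features, which is the cost a lattice-based search aims to avoid.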
