Meng Pang

You Are Your Own Best Teacher: Achieving Centralized-level Performance in Federated Learning under Heterogeneous and Long-tailed Data

Mar 10, 2025

Abstract:Data heterogeneity, stemming from local non-IID data and global long-tailed distributions, is a major challenge in federated learning (FL), leading to significant performance gaps compared to centralized learning. Previous research found that poor representations and biased classifiers are the main problems and proposed neural-collapse-inspired synthetic simplex ETF to help representations be closer to neural collapse optima. However, we find that the neural-collapse-inspired methods are not strong enough to reach neural collapse and still have huge gaps to centralized training. In this paper, we rethink this issue from a self-bootstrap perspective and propose FedYoYo (You Are Your Own Best Teacher), introducing Augmented Self-bootstrap Distillation (ASD) to improve representation learning by distilling knowledge between weakly and strongly augmented local samples, without needing extra datasets or models. We further introduce Distribution-aware Logit Adjustment (DLA) to balance the self-bootstrap process and correct biased feature representations. FedYoYo nearly eliminates the performance gap, achieving centralized-level performance even under mixed heterogeneity. It enhances local representation learning, reducing model drift and improving convergence, with feature prototypes closer to neural collapse optimality. Extensive experiments show FedYoYo achieves state-of-the-art results, even surpassing centralized logit adjustment methods by 5.4% under global long-tailed settings.
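The abstract does not spell out how DLA is computed, so the following is only a minimal sketch of the generic prior-based logit adjustment idea that such methods build on, not FedYoYo's actual formulation. The function name `logit_adjusted_loss`, the `tau` scaling parameter, and the use of raw per-class sample counts as the prior are all assumptions made for illustration.

```python
import math

def logit_adjusted_loss(logits, label, class_counts, tau=1.0):
    """Cross-entropy for one sample after adding tau * log(class prior) to each logit.

    Hypothetical helper for illustration; assumes every class count is > 0.
    """
    total = sum(class_counts)
    # Shift each logit by the log of its (empirical) class prior.
    adjusted = [z + tau * math.log(n / total) for z, n in zip(logits, class_counts)]
    # Numerically stable log-sum-exp over the adjusted logits.
    m = max(adjusted)
    log_norm = m + math.log(sum(math.exp(a - m) for a in adjusted))
    # Negative log-probability of the true class.
    return log_norm - adjusted[label]

# With identical raw logits, a tail-class sample incurs a larger loss than it
# would under a balanced prior, which pushes training to widen the tail margin.
balanced = logit_adjusted_loss([0.0, 0.0], 1, [50, 50])
long_tailed = logit_adjusted_loss([0.0, 0.0], 1, [90, 10])
```

In this sketch the adjustment is applied only inside the training loss; the unadjusted logits would be used at inference time.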

Practicable Black-box Evasion Attacks on Link Prediction in Dynamic Graphs -- A Graph Sequential Embedding Method

Dec 17, 2024

Abstract:Link prediction in dynamic graphs (LPDG) has been widely applied to real-world applications such as website recommendation, traffic flow prediction, organizational studies, etc. These models are usually kept local and secure, with only the interactive interface restrictively available to the public. Thus, the problem of the black-box evasion attack on the LPDG model, where model interactions and data perturbations are restricted, seems to be essential and meaningful in practice. In this paper, we propose the first practicable black-box evasion attack method that achieves effective attacks against the target LPDG model, within a limited amount of interactions and perturbations. To perform effective attacks under limited perturbations, we develop a graph sequential embedding model to find the desired state embedding of the dynamic graph sequences, under a deep reinforcement learning framework. To overcome the scarcity of interactions, we design a multi-environment training pipeline and train our agent for multiple instances, by sharing an aggregate interaction buffer. Finally, we evaluate our attack against three advanced LPDG models on three real-world graph datasets of different scales and compare its performance with related methods under the interaction and perturbation constraints. Experimental results show that our attack is both effective and practicable.

Understanding Data Reconstruction Leakage in Federated Learning from a Theoretical Perspective

Aug 22, 2024

Abstract:Federated learning (FL) is an emerging collaborative learning paradigm that aims to protect data privacy. Unfortunately, recent works show FL algorithms are vulnerable to serious data reconstruction attacks. However, existing works lack a theoretical foundation on the extent to which the devices' data can be reconstructed, and the effectiveness of these attacks cannot be compared fairly due to their unstable performance. To address this deficiency, we propose a theoretical framework to understand data reconstruction attacks on FL. Our framework involves bounding the data reconstruction error, where an attack's error bound reflects its inherent attack effectiveness. Under the framework, we can theoretically compare the effectiveness of existing attacks. For instance, our results on multiple datasets validate that the iDLG attack inherently outperforms the DLG attack.

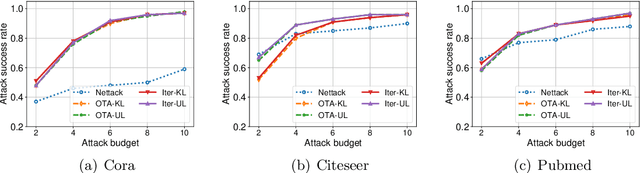

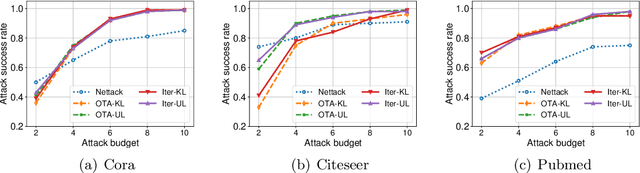

Graph Neural Network Explanations are Fragile

Jun 05, 2024

Abstract:Explainable Graph Neural Network (GNN) has emerged recently to foster the trust of using GNNs. Existing GNN explainers are developed from various perspectives to enhance the explanation performance. We take the first step to study GNN explainers under adversarial attack: we find that an adversary slightly perturbing the graph structure can ensure the GNN model still makes correct predictions, while the GNN explainer yields a drastically different explanation on the perturbed graph. Specifically, we first formulate the attack problem under a practical threat model (i.e., the adversary has limited knowledge about the GNN explainer and a restricted perturbation budget). We then design two methods (i.e., one is loss-based and the other is deduction-based) to realize the attack. We evaluate our attacks on various GNN explainers and the results show these explainers are fragile.

Reinforcement Learning-based Black-Box Evasion Attacks to Link Prediction in Dynamic Graphs

Sep 12, 2020

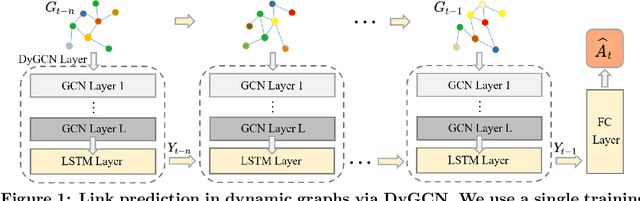

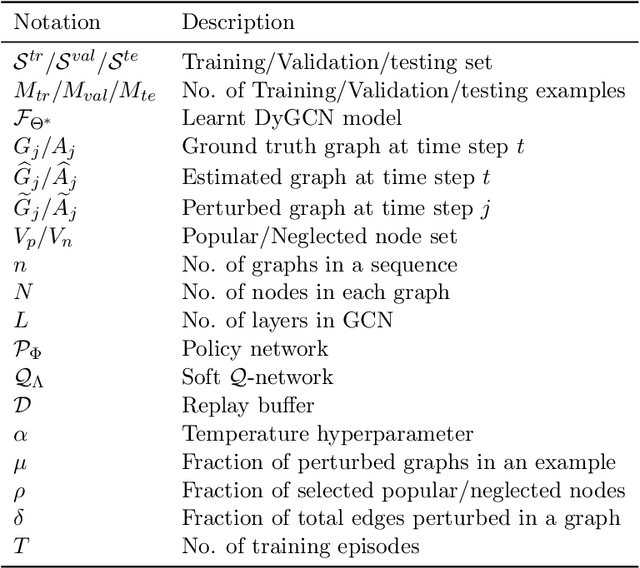

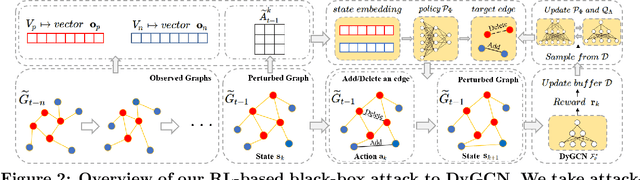

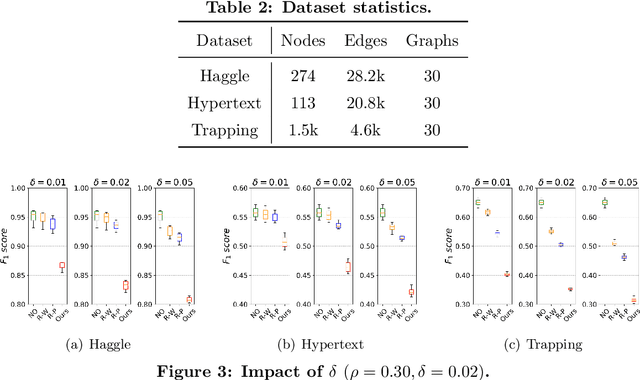

Abstract:Link prediction in dynamic graphs (LPDG) is an important research problem that has diverse applications such as online recommendations, studies on disease contagion, organizational studies, etc. Various LPDG methods based on graph embedding and graph neural networks have been recently proposed and achieved state-of-the-art performance. In this paper, we study the vulnerability of LPDG methods and propose the first practical black-box evasion attack. Specifically, given a trained LPDG model, our attack aims to perturb the graph structure, without knowing the model parameters, model architecture, etc., such that the LPDG model makes as many wrong link predictions as possible. We design our attack based on a stochastic policy-based RL algorithm. Moreover, we evaluate our attack on three real-world graph datasets from different application domains. Experimental results show that our attack is both effective and efficient.

Evasion Attacks to Graph Neural Networks via Influence Function

Sep 12, 2020

Abstract:Graph neural networks (GNNs) have achieved state-of-the-art performance in many graph-related tasks, e.g., node classification. However, recent works show that GNNs are vulnerable to evasion attacks, i.e., an attacker can slightly perturb the graph structure to fool GNN models. Existing evasion attacks to GNNs have several key drawbacks: 1) they are limited to attacking two-layer GNNs; 2) they are not efficient; and/or 3) they need to know GNN model parameters. We address the above drawbacks in this paper and propose an influence-based evasion attack against GNNs. Specifically, we first introduce two influence functions, i.e., feature-label influence and label influence, that are defined on GNNs and label propagation (LP), respectively. Then, we build a strong connection between GNNs and LP in terms of influence. Next, we reformulate the evasion attack against GNNs to be related to calculating label influence on LP, which is applicable to multi-layer GNNs and does not need to know the GNN model. We also propose an efficient algorithm to calculate label influence. Finally, we evaluate our influence-based attack on three benchmark graph datasets. Our experimental results show that, compared to state-of-the-art attacks, our attack can achieve comparable attack performance, but has a 5-50x speedup when attacking two-layer GNNs. Moreover, our attack is effective at attacking multi-layer GNNs.
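For readers unfamiliar with label propagation (LP), which the abstract above uses as a proxy for GNNs, here is a minimal sketch of plain LP with clamped seed labels on an undirected graph. It does not implement the paper's label influence or its attack; the function name `label_propagation`, the adjacency-list input, and the fixed iteration count are assumptions chosen for illustration.

```python
def label_propagation(adj, seed_labels, num_classes, iters=50):
    """Plain label propagation: each unlabeled node repeatedly averages its neighbors' scores.

    adj: list of neighbor lists (hypothetical input format).
    seed_labels: dict mapping labeled node index -> class index; these stay clamped.
    Returns the predicted class index for every node.
    """
    n = len(adj)
    scores = [[0.0] * num_classes for _ in range(n)]
    for node, cls in seed_labels.items():
        scores[node][cls] = 1.0
    for _ in range(iters):
        updated = []
        for u in range(n):
            if u in seed_labels or not adj[u]:
                # Labeled nodes are clamped; isolated nodes keep their scores.
                updated.append(scores[u][:])
            else:
                updated.append([
                    sum(scores[v][c] for v in adj[u]) / len(adj[u])
                    for c in range(num_classes)
                ])
        scores = updated
    return [max(range(num_classes), key=lambda c: s[c]) for s in scores]

# Path graph 0-1-2-3 with node 0 labeled class 0 and node 3 labeled class 1.
path = [[1], [0, 2], [1, 3], [2]]
clean = label_propagation(path, {0: 0, 3: 1}, num_classes=2)

# Same graph with one extra edge between nodes 1 and 3.
perturbed_graph = [[1], [0, 2, 3], [1, 3], [2, 1]]
perturbed = label_propagation(perturbed_graph, {0: 0, 3: 1}, num_classes=2)
```

On this toy graph, adding the single edge between nodes 1 and 3 changes node 1's predicted class, which gives some intuition for why small structural perturbations can matter.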
