Bing Su

Diffusion LMs Can Approximate Optimal Infilling Lengths Implicitly

Jan 31, 2026

Abstract: Diffusion language models (DLMs) provide a bidirectional generation framework naturally suited for infilling, yet their performance is constrained by the pre-specified infilling length. In this paper, we reveal that DLMs possess an inherent ability to discover the correct infilling length. We identify two key statistical phenomena in the first-step denoising confidence: a local Oracle Peak that emerges near the ground-truth length and a systematic Length Bias that often obscures this signal. By leveraging this signal and calibrating the bias, our training-free method CAL (Calibrated Adaptive Length) enables DLMs to approximate the optimal length through an efficient search before formal decoding. Empirical evaluations demonstrate that CAL improves Pass@1 by up to 47.7% over fixed-length baselines and 40.5% over chat-based adaptive methods in code infilling, while boosting BLEU-2 and ROUGE-L by up to 8.5% and 9.9% in text infilling. These results demonstrate that CAL paves the way for robust DLM infilling without requiring any specialized training. Code is available at https://github.com/NiuHechang/Calibrated_Adaptive_Length.
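
As a rough illustration of the length search this abstract describes, the sketch below subtracts a per-length bias estimate from a first-step confidence score and picks the length with the highest calibrated value. The function name `pick_infill_length`, the dictionary inputs, the subtractive form of the calibration, and all numbers are assumptions made for illustration; the abstract does not specify how CAL measures confidence or calibrates the bias.

```python
def pick_infill_length(confidence_by_length, bias_by_length):
    """Return the candidate length with the highest bias-corrected confidence.

    confidence_by_length: hypothetical map from candidate length to the mean
        first-step denoising confidence observed at that length.
    bias_by_length: hypothetical map from candidate length to an estimate of
        the systematic length bias (assumed here to be simply subtracted).
    """
    if not confidence_by_length:
        raise ValueError("need at least one candidate length")
    calibrated = {
        length: conf - bias_by_length.get(length, 0.0)
        for length, conf in confidence_by_length.items()
    }
    # The calibrated maximum stands in for the "Oracle Peak" in this toy setup.
    return max(calibrated, key=calibrated.get)


# Made-up numbers: raw confidence favors the shortest length,
# while the bias-corrected score peaks at length 12.
raw_confidence = {4: 0.90, 8: 0.80, 12: 0.78, 16: 0.60}
length_bias = {4: 0.30, 8: 0.15, 12: 0.05, 16: 0.00}
best_length = pick_infill_length(raw_confidence, length_bias)
```

In this toy setup the uncalibrated maximum sits at the shortest candidate, which mirrors the abstract's point that a length bias can obscure the peak near the ground-truth length.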

Controlled LLM Training on Spectral Sphere

Jan 13, 2026

Abstract: Scaling large models requires optimization strategies that ensure rapid convergence grounded in stability. Maximal Update Parametrization (μP) provides a theoretical safeguard for width-invariant $\Theta(1)$ activation control, whereas emerging optimizers like Muon are only "half-aligned" with these constraints: they control updates but allow weights to drift. To address this limitation, we introduce the Spectral Sphere Optimizer (SSO), which enforces strict module-wise spectral constraints on both weights and their updates. By deriving the steepest descent direction on the spectral sphere, SSO realizes a fully μP-aligned optimization process. To enable large-scale training, we implement SSO as an efficient parallel algorithm within Megatron. Through extensive pretraining on diverse architectures, including Dense 1.7B, MoE 8B-A1B, and 200-layer DeepNet models, SSO consistently outperforms AdamW and Muon. Furthermore, we observe significant practical stability benefits, including improved MoE router load balancing, suppressed outliers, and strictly bounded activations.
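
To make the idea of a spectral constraint on weights concrete, the sketch below rescales a matrix so that its largest singular value equals a chosen radius, using power iteration to estimate that value. This is only a simple rescaling under stated assumptions, not the steepest-descent update that SSO derives; the helper names (`spectral_norm`, `project_to_spectral_sphere`) and the radius are hypothetical.

```python
import math


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def transpose(matrix):
    return [list(column) for column in zip(*matrix)]


def spectral_norm(weights, iters=100):
    """Estimate the largest singular value by power iteration on W^T W.

    Assumes the all-ones start vector is not orthogonal to the top right
    singular vector; a random start would be more robust.
    """
    weights_t = transpose(weights)
    v = [1.0] * len(weights[0])
    for _ in range(iters):
        u = matvec(weights_t, matvec(weights, v))
        norm = math.sqrt(sum(x * x for x in u))
        if norm == 0.0:
            return 0.0
        v = [x / norm for x in u]
    return math.sqrt(sum(x * x for x in matvec(weights, v)))


def project_to_spectral_sphere(weights, radius):
    """Rescale the matrix so its spectral norm equals `radius`."""
    sigma = spectral_norm(weights)
    if sigma == 0.0:
        return [row[:] for row in weights]
    scale = radius / sigma
    return [[scale * x for x in row] for row in weights]


# A diagonal example whose largest singular value is 3.
example = [[3.0, 0.0], [0.0, 1.0]]
projected = project_to_spectral_sphere(example, radius=1.0)
```

Applying such a rescaling after every step would keep the weight norm fixed, which is one plain way to picture "weights that do not drift"; how SSO actually couples the constraint with the update direction is not stated in the abstract.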

Enhancing Human Motion Prediction via Multi-range Decoupling Decoding with Gating-adjusting Aggregation

Mar 30, 2025

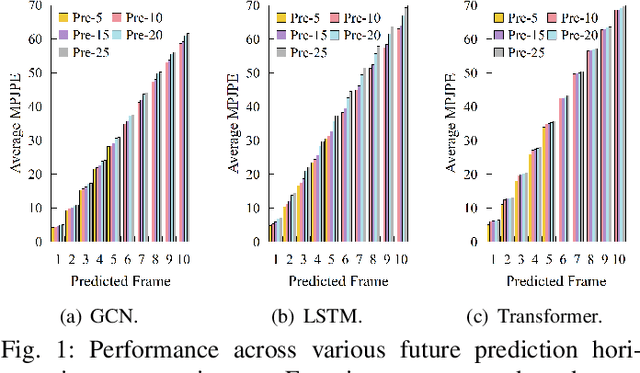

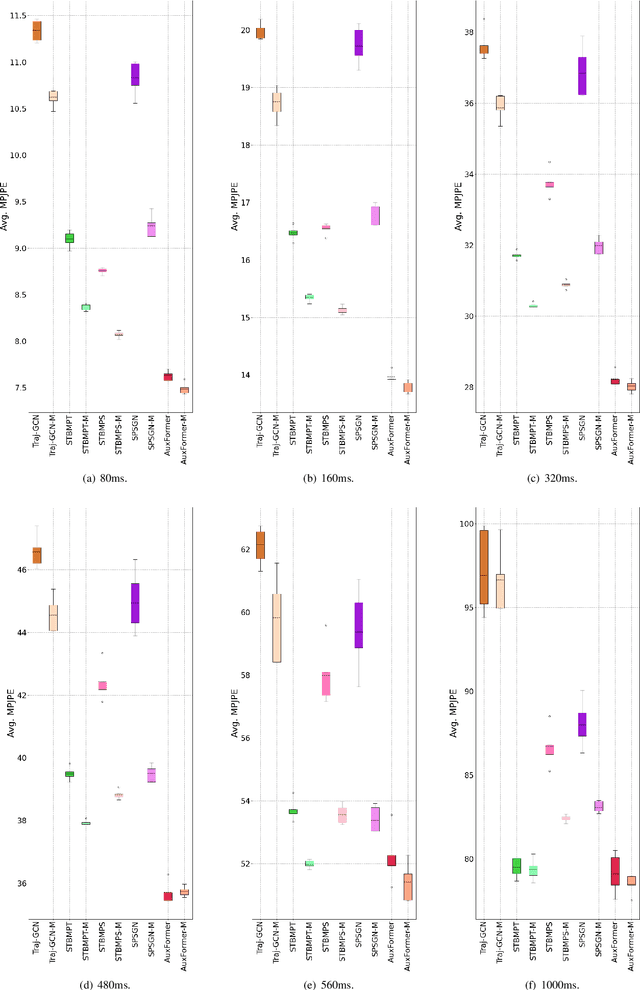

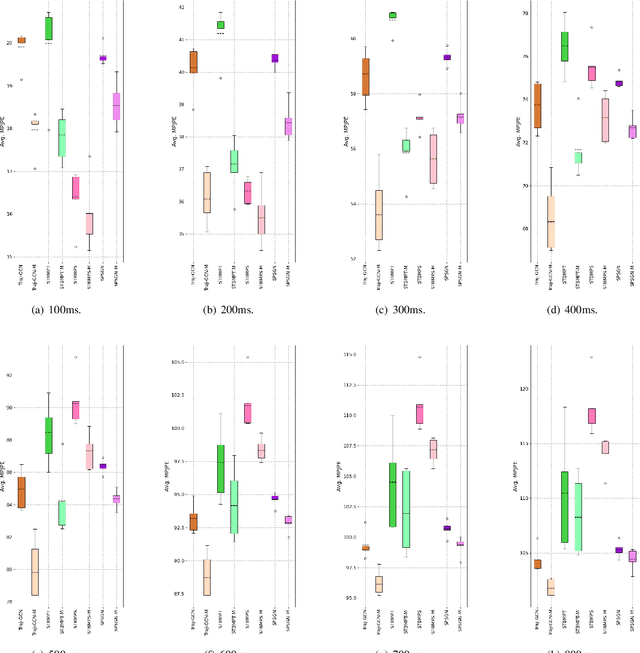

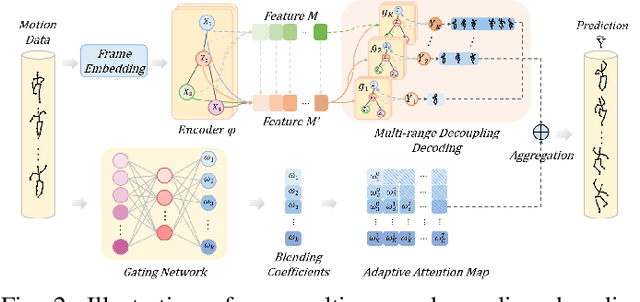

Abstract: Expressive representation of pose sequences is crucial for accurate motion modeling in human motion prediction (HMP). While recent deep learning-based methods have shown promise in learning motion representations, they tend to overlook the varying relevance and dependencies between historical information and future moments: the correlation is stronger for short-term predictions and weaker for distant-future ones. This limits the learning of motion representations and in turn hampers prediction performance. In this paper, we propose a novel approach called multi-range decoupling decoding with gating-adjusting aggregation (MD2GA), which leverages temporal correlations to refine motion representation learning. This approach employs a two-stage strategy for HMP. In the first stage, multi-range decoupling decoding adjusts feature learning by decoding the shared features into distinct future lengths, where different decoders offer diverse insights into motion patterns. In the second stage, gating-adjusting aggregation dynamically combines these insights, guided by the input motion data. Extensive experiments demonstrate that the proposed method can be easily integrated into other motion prediction methods and enhances their prediction performance.
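
The second stage described above can be pictured as a gate that turns input-dependent scores into mixing weights over the decoders' predictions. The sketch below assumes a softmax gate and assumes the decoder outputs have already been aligned to the same length; both are illustrative assumptions, as are the names `softmax` and `gated_aggregate`. The abstract does not specify the gating function.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gated_aggregate(predictions, gate_logits):
    """Combine per-decoder predictions with softmax gate weights.

    predictions: one list of floats per decoder, all of equal length
        (an assumption of this sketch).
    gate_logits: one hypothetical score per decoder, which a real model
        would compute from the input motion sequence.
    """
    if len(predictions) != len(gate_logits):
        raise ValueError("need exactly one gate logit per decoder")
    weights = softmax(gate_logits)
    length = len(predictions[0])
    return [
        sum(w * pred[i] for w, pred in zip(weights, predictions))
        for i in range(length)
    ]


# With equal gate logits the result is the plain average of the decoders.
combined = gated_aggregate([[1.0, 2.0], [3.0, 4.0]], [0.0, 0.0])
```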

Learning Structure-enhanced Temporal Point Processes with Gromov-Wasserstein Regularization

Mar 29, 2025

Abstract: Real-world event sequences are often generated by different temporal point processes (TPPs) and thus have clustering structures. Nonetheless, in the modeling and prediction of event sequences, most existing TPPs ignore the inherent clustering structures of the event sequences, leading to models with unsatisfactory interpretability. In this study, we learn structure-enhanced TPPs with the help of Gromov-Wasserstein (GW) regularization, which imposes clustering structures on the sequence-level embeddings of the TPPs in the maximum likelihood estimation framework. In the training phase, the proposed method leverages a nonparametric TPP kernel to regularize the similarity matrix derived from the sequence embeddings. In large-scale applications, we sample the kernel matrix and implement the regularization as a GW discrepancy term, which achieves a trade-off between regularity and computational efficiency. The TPPs learned through this method yield clustered sequence embeddings and demonstrate competitive predictive and clustering performance, significantly improving model interpretability without compromising prediction accuracy.

STOP: Integrated Spatial-Temporal Dynamic Prompting for Video Understanding

Mar 20, 2025

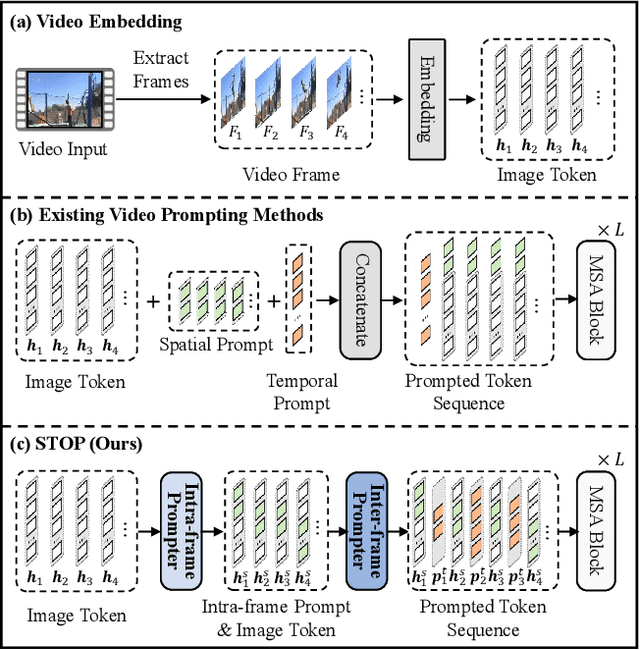

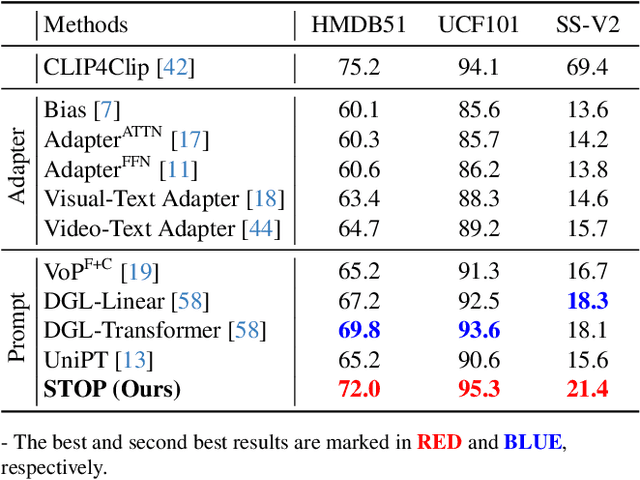

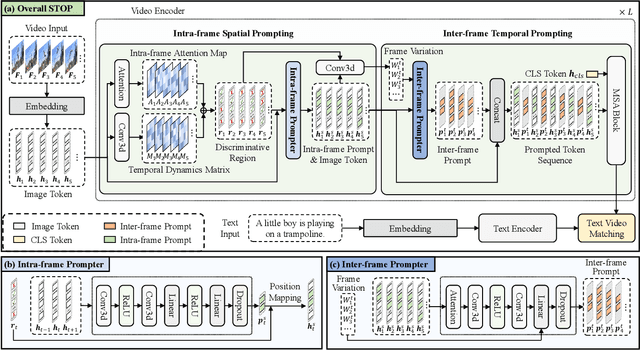

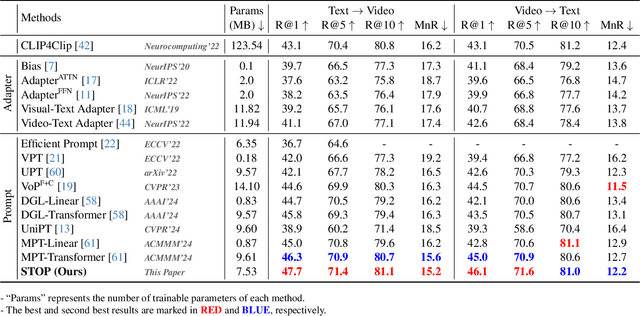

Abstract: Pre-trained on massive collections of image-text pairs, vision-language models like CLIP have demonstrated promising zero-shot generalization across numerous image-based tasks. However, extending these capabilities to video tasks remains challenging due to limited labeled video data and high training costs. Recent video prompting methods attempt to adapt CLIP for video tasks by introducing learnable prompts, but they typically rely on a single static prompt for all video sequences, overlooking the diverse temporal dynamics and spatial variations that exist across frames. This limitation significantly hinders the model's ability to capture essential temporal information for effective video understanding. To address this, we propose an integrated Spatial-TempOral dynamic Prompting (STOP) model, which consists of two complementary modules: intra-frame spatial prompting and inter-frame temporal prompting. Our intra-frame spatial prompts are designed to adaptively highlight discriminative regions within each frame by leveraging intra-frame attention and temporal variation, allowing the model to focus on areas with substantial temporal dynamics and capture fine-grained spatial details. Additionally, to highlight the varying importance of frames for video understanding, we further introduce inter-frame temporal prompts, dynamically inserting prompts between frames with high temporal variance as measured by frame similarity. This enables the model to prioritize key frames and enhances its capacity to understand temporal dependencies across sequences. Extensive experiments on various video benchmarks demonstrate that STOP consistently achieves superior performance against state-of-the-art methods. The code is available at https://github.com/zhoujiahuan1991/CVPR2025-STOP.
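
One simple way to read "inserting prompts between frames with high temporal variance as measured by frame similarity" is to score each pair of adjacent frames by cosine similarity and place prompts at the least similar pairs. The sketch below does exactly that on toy feature vectors. The choice of cosine similarity, the fixed prompt budget, and the names `cosine_similarity` and `prompt_positions` are assumptions for illustration, not details taken from STOP.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def prompt_positions(frame_features, num_prompts):
    """Pick the gaps between adjacent frames with the lowest similarity.

    Returns sorted gap indices, where index i means "between frame i and
    frame i + 1". Low similarity is treated as high temporal variance.
    """
    similarities = [
        cosine_similarity(frame_features[i], frame_features[i + 1])
        for i in range(len(frame_features) - 1)
    ]
    ranked = sorted(range(len(similarities)), key=lambda i: similarities[i])
    return sorted(ranked[:num_prompts])


# Toy features: the content changes abruptly between frame 1 and frame 2.
frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
positions = prompt_positions(frames, num_prompts=1)
```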

Regulatory DNA sequence Design with Reinforcement Learning

Mar 11, 2025

Abstract: Cis-regulatory elements (CREs), such as promoters and enhancers, are relatively short DNA sequences that directly regulate gene expression. The fitness of CREs, measured by their ability to modulate gene expression, highly depends on the nucleotide sequences, especially specific motifs known as transcription factor binding sites (TFBSs). Designing high-fitness CREs is crucial for therapeutic and bioengineering applications. Current CRE design methods are limited by two major drawbacks: (1) they typically rely on iterative optimization strategies that modify existing sequences and are prone to local optima, and (2) they lack the guidance of biological prior knowledge in sequence optimization. In this paper, we address these limitations by proposing a generative approach that leverages reinforcement learning (RL) to fine-tune a pre-trained autoregressive (AR) model. Our method incorporates data-driven biological priors by deriving computational inference-based rewards that simulate the addition of activator TFBSs and removal of repressor TFBSs, which are then integrated into the RL process. We evaluate our method on promoter design tasks in two yeast media conditions and enhancer design tasks for three human cell types, demonstrating its ability to generate high-fitness CREs while maintaining sequence diversity. The code is available at https://github.com/yangzhao1230/TACO.

Rethinking the Bias of Foundation Model under Long-tailed Distribution

Jan 27, 2025

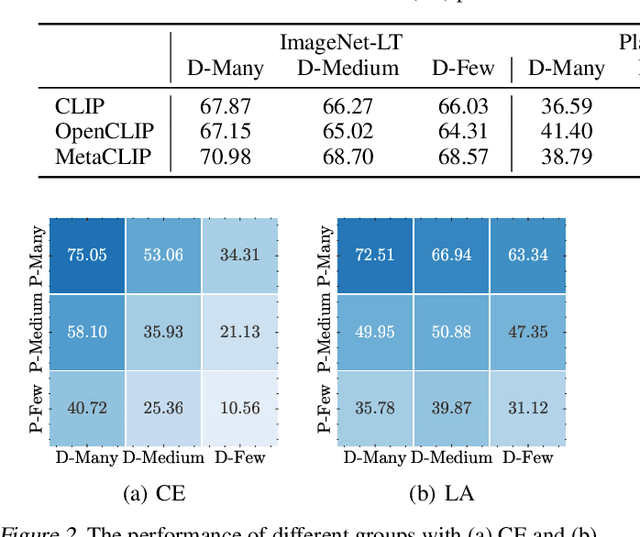

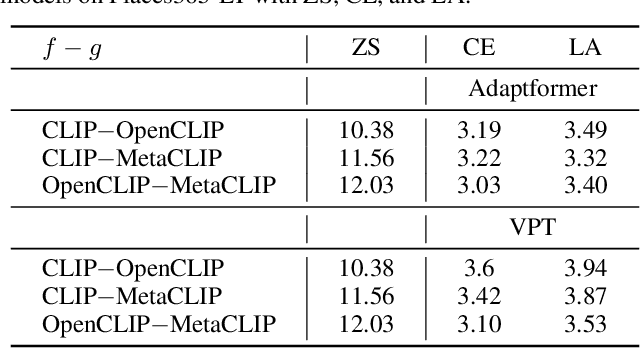

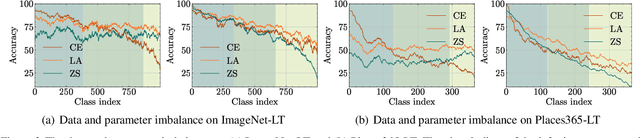

Abstract: Long-tailed learning has garnered increasing attention due to its practical significance. Among the various approaches, the fine-tuning paradigm has gained considerable interest with the advent of foundation models. However, most existing methods primarily focus on leveraging knowledge from these models, overlooking the inherent biases introduced by the imbalanced training data they rely on. In this paper, we examine how such imbalances from pre-training affect long-tailed downstream tasks. Specifically, we find that the imbalance biases inherited by foundation models manifest in downstream tasks as parameter imbalance and data imbalance. During fine-tuning, we observe that parameter imbalance plays a more critical role, while data imbalance can be mitigated using existing re-balancing strategies. Moreover, unlike data imbalance, parameter imbalance cannot be effectively addressed during training by current re-balancing techniques, such as adjusting the logits. To tackle both imbalances simultaneously, we build our method on causal learning and view the incomplete semantic factor as the confounder, which brings spurious correlations between input samples and labels. To resolve its negative effects, we propose a novel backdoor adjustment method that learns the true causal effect between input samples and labels, rather than merely fitting the correlations in the data. Notably, we achieve an average performance increase of about 1.67% on each dataset.

Continual Test-Time Adaptation for Single Image Defocus Deblurring via Causal Siamese Networks

Jan 15, 2025

Abstract: Single image defocus deblurring (SIDD) aims to restore an all-in-focus image from a defocused one. Distribution shifts in defocused images generally lead to performance degradation of existing methods during out-of-distribution inference. In this work, we investigate the intrinsic cause of this performance degradation and identify it as the heterogeneity of lens-specific point spread functions. Empirical evidence supports this finding, motivating us to employ a continual test-time adaptation (CTTA) paradigm for SIDD. However, traditional CTTA methods, which primarily rely on entropy minimization, cannot sufficiently explore task-dependent information for pixel-level regression tasks like SIDD. To address this issue, we propose a novel Siamese networks-based continual test-time adaptation framework, which adapts source models to continuously changing target domains in an online manner, requiring only unlabeled target data. To further mitigate semantically erroneous textures introduced by source SIDD models under severe degradation, we revisit the learning paradigm through a structural causal model and propose Causal Siamese networks (CauSiam). Our method leverages large-scale pre-trained vision-language models to derive discriminative universal semantic priors and integrates these priors into Siamese networks, ensuring causal identifiability between blurry inputs and restored images. Extensive experiments demonstrate that CauSiam effectively improves the generalization performance of existing SIDD methods in continuously changing domains.

Interpretable Enzyme Function Prediction via Residue-Level Detection

Jan 10, 2025

Abstract: Predicting multiple functions labeled with Enzyme Commission (EC) numbers from the enzyme sequence is of great significance but remains a challenge due to its sparse multi-label classification nature, i.e., each enzyme is typically associated with only a few labels out of more than 6000 possible EC numbers. However, existing machine learning algorithms generally learn a fixed global representation for each enzyme to classify all functions, so they lack interpretability, and the fine-grained information of some function-specific local residue fragments may be overwhelmed. Here we present an attention-based framework, namely ProtDETR (Protein Detection Transformer), by casting enzyme function prediction as a detection problem. It uses a set of learnable functional queries to adaptively extract different local representations from the sequence of residue-level features for predicting different EC numbers. ProtDETR not only significantly outperforms existing deep learning-based enzyme function prediction methods, but also provides a new interpretable perspective on automatically detecting different local regions for identifying different functions through cross-attentions between queries and residue-level features. Code is available at https://github.com/yangzhao1230/ProtDETR.

A Plug-and-Play Bregman ADMM Module for Inferring Event Branches in Temporal Point Processes

Jan 08, 2025

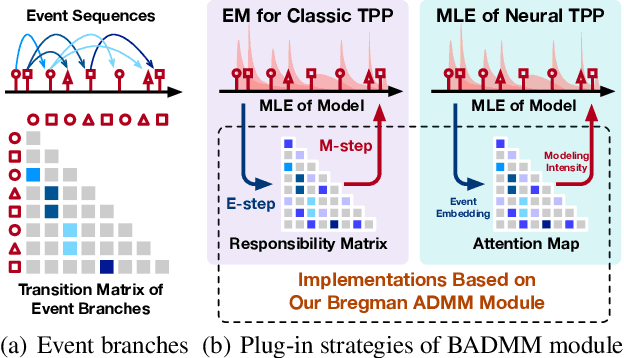

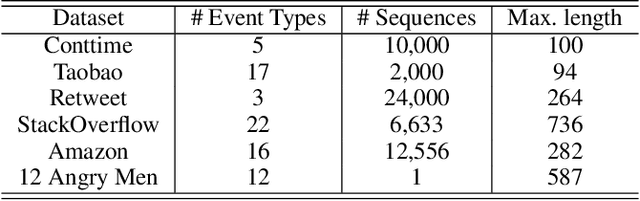

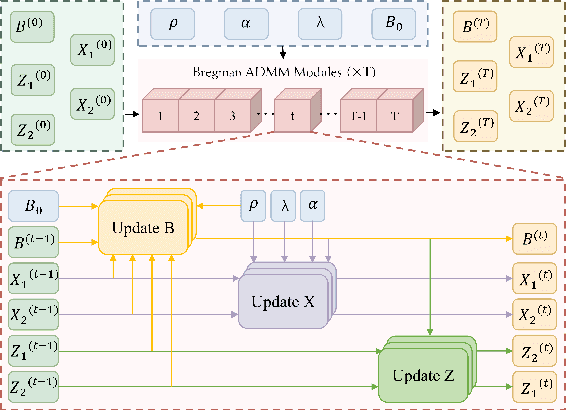

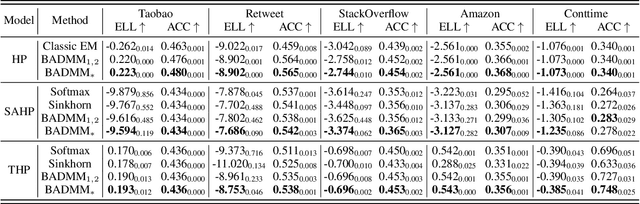

Abstract: An event sequence generated by a temporal point process is often associated with a hidden and structured event branching process that captures the triggering relations between its historical and current events. In this study, we design a new plug-and-play module based on the Bregman ADMM (BADMM) algorithm, which infers event branches associated with event sequences in the maximum likelihood estimation framework of temporal point processes (TPPs). Specifically, we formulate the inference of event branches as an optimization problem for the event transition matrix under sparse and low-rank constraints, which is embedded in existing TPP models or their learning paradigms. We can implement this optimization problem based on subspace clustering and sparse group-lasso, respectively, and solve it using the Bregman ADMM algorithm, whose unrolling leads to the proposed BADMM module. When learning a classic TPP (e.g., Hawkes process) by the expectation-maximization algorithm, the BADMM module helps derive structured responsibility matrices in the E-step. Similarly, the BADMM module helps derive low-rank and sparse attention maps for the neural TPPs with self-attention layers. The structured responsibility matrices and attention maps, which work as learned event transition matrices, indicate event branches, e.g., inferring isolated events and those key events triggering many subsequent events. Experiments on both synthetic and real-world data show that plugging our BADMM module into existing TPP models and learning paradigms can improve model performance and provide us with interpretable structured event branches. The code is available at https://github.com/qingmeiwangdaily/BADMM_TPP.
