Anqing Duan

Imagination at Inference: Synthesizing In-Hand Views for Robust Visuomotor Policy Inference

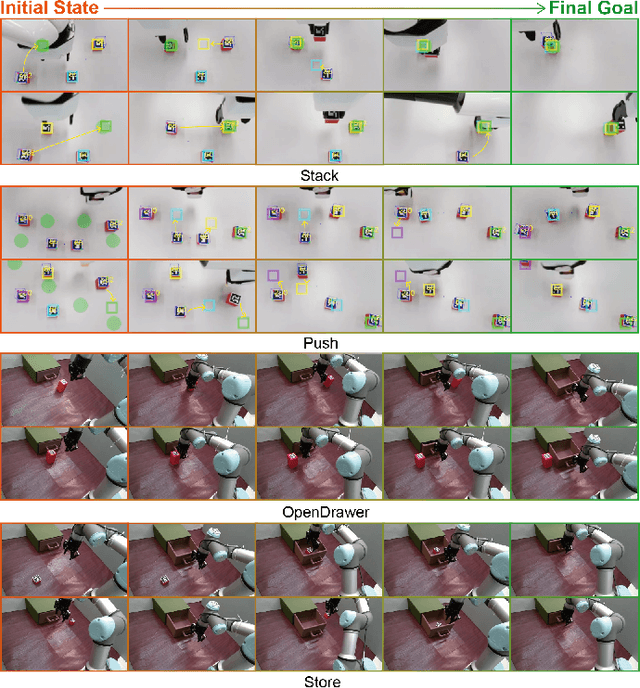

Sep 19, 2025

Abstract:Visual observations from different viewpoints can significantly influence the performance of visuomotor policies in robotic manipulation. Among these, egocentric (in-hand) views often provide crucial information for precise control. However, in some applications, equipping robots with dedicated in-hand cameras may pose challenges due to hardware constraints, system complexity, and cost. In this work, we propose to endow robots with imaginative perception, enabling them to 'imagine' in-hand observations from agent views at inference time. We achieve this via novel view synthesis (NVS), leveraging a fine-tuned diffusion model conditioned on the relative pose between the agent-view and in-hand-view cameras. Specifically, we apply LoRA-based fine-tuning to adapt a pretrained NVS model (ZeroNVS) to the robotic manipulation domain. We evaluate our approach on both simulation benchmarks (RoboMimic and MimicGen) and real-world experiments using a Unitree Z1 robotic arm for a strawberry picking task. Results show that synthesized in-hand views significantly enhance policy inference, effectively recovering the performance drop caused by the absence of real in-hand cameras. Our method offers a scalable and hardware-light solution for deploying robust visuomotor policies, highlighting the potential of imaginative visual reasoning in embodied agents.

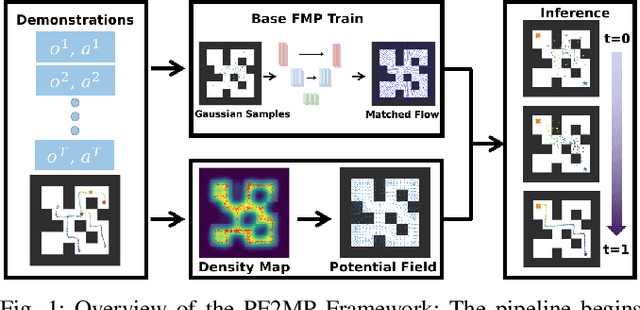

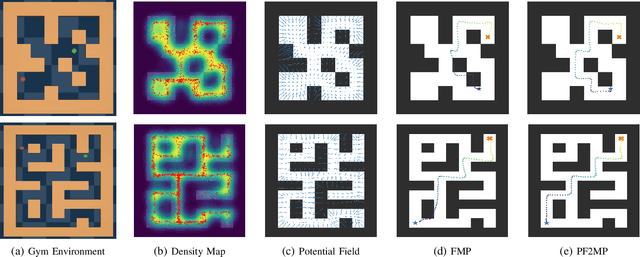

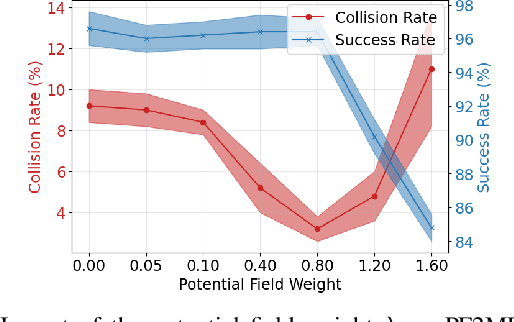

Towards Safe Imitation Learning via Potential Field-Guided Flow Matching

Aug 12, 2025

Abstract:Deep generative models, particularly diffusion and flow matching models, have recently shown remarkable potential in learning complex policies through imitation learning. However, the safety of generated motions remains overlooked, particularly in complex environments with inherent obstacles. In this work, we address this critical gap by proposing Potential Field-Guided Flow Matching Policy (PF2MP), a novel approach that simultaneously learns task policies and extracts obstacle-related information, represented as a potential field, from the same set of successful demonstrations. During inference, PF2MP modulates the flow matching vector field via the learned potential field, enabling safe motion generation. By leveraging these complementary fields, our approach achieves improved safety without compromising task success across diverse environments, such as navigation tasks and robotic manipulation scenarios. We evaluate PF2MP in both simulation and real-world settings, demonstrating its effectiveness in task space and joint space control. Experimental results demonstrate that PF2MP enhances safety, achieving a significant reduction of collisions compared to baseline policies. This work paves the way for safer motion generation in unstructured and obstacle-rich environments.
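The idea of modulating a velocity field with a potential field at inference time can be illustrated with a toy sketch. This is not the PF2MP method: the learned flow matching field is replaced by a hand-written "move toward the goal" field, and the learned potential is replaced by the classical repulsive artificial potential field around a single point obstacle. All function names, gains, and coordinates below are assumptions chosen for illustration.

```python
import math

def task_velocity(pos, goal):
    """Toy stand-in for a learned vector field: head straight for the goal."""
    return (goal[0] - pos[0], goal[1] - pos[1])

def repulsive_gradient(pos, obstacle, influence=1.0, gain=1.0):
    """Gradient of U = 0.5 * gain * (1/d - 1/influence)^2, active for d < influence."""
    dx, dy = pos[0] - obstacle[0], pos[1] - obstacle[1]
    d = math.hypot(dx, dy)
    if d >= influence or d == 0.0:
        return (0.0, 0.0)
    # dU/dd = -gain * (1/d - 1/influence) / d^2, and grad d = (dx, dy) / d
    scale = -gain * (1.0 / d - 1.0 / influence) / d**3
    return (scale * dx, scale * dy)

def rollout(start, goal, obstacle, guided, steps=200, dt=0.02):
    """Euler-integrate the (optionally modulated) field; return end point and closest approach."""
    pos = start
    min_dist = math.dist(pos, obstacle)
    for _ in range(steps):
        v = task_velocity(pos, goal)
        if guided:
            g = repulsive_gradient(pos, obstacle)
            v = (v[0] - g[0], v[1] - g[1])  # descend the potential, i.e. move away from the obstacle
        pos = (pos[0] + dt * v[0], pos[1] + dt * v[1])
        min_dist = min(min_dist, math.dist(pos, obstacle))
    return pos, min_dist

if __name__ == "__main__":
    start, goal, obstacle = (0.0, 0.0), (2.0, 0.0), (1.0, 0.2)
    for guided in (False, True):
        end, closest = rollout(start, goal, obstacle, guided)
        print(f"guided={guided}: closest approach to obstacle = {closest:.3f}")
```

In this toy setup the unguided rollout passes close to the obstacle, while the guided rollout keeps a larger clearance because the repulsive term grows as the distance shrinks.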

Instruction-Augmented Long-Horizon Planning: Embedding Grounding Mechanisms in Embodied Mobile Manipulation

Mar 11, 2025

Abstract:Enabling humanoid robots to perform long-horizon mobile manipulation planning in real-world environments based on embodied perception and comprehension abilities has been a longstanding challenge. With the recent rise of large language models (LLMs), there has been a notable increase in the development of LLM-based planners. These approaches either utilize human-provided textual representations of the real world or heavily depend on prompt engineering to extract such representations, lacking the capability to quantitatively understand the environment, such as determining the feasibility of manipulating objects. To address these limitations, we present the Instruction-Augmented Long-Horizon Planning (IALP) system, a novel framework that employs LLMs to generate feasible and optimal actions based on real-time sensor feedback, including grounded knowledge of the environment, in a closed-loop interaction. Distinct from prior works, our approach augments user instructions into PDDL problems by leveraging both the abstract reasoning capabilities of LLMs and grounding mechanisms. By conducting various real-world long-horizon tasks, each consisting of seven distinct manipulatory skills, our results demonstrate that the IALP system can efficiently solve these tasks with an average success rate exceeding 80%. Our proposed method can operate as a high-level planner, equipping robots with substantial autonomy in unstructured environments through the utilization of multi-modal sensor inputs.

* 17 pages, 11 figures

Non-Prehensile Tool-Object Manipulation by Integrating LLM-Based Planning and Manoeuvrability-Driven Controls

Dec 09, 2024

Abstract:The ability to wield tools was once considered exclusive to human intelligence, but it's now known that many other animals, like crows, possess this capability. Yet, robotic systems still fall short of matching biological dexterity. In this paper, we investigate the use of Large Language Models (LLMs), tool affordances, and object manoeuvrability for non-prehensile tool-based manipulation tasks. Our novel method leverages LLMs based on scene information and natural language instructions to enable symbolic task planning for tool-object manipulation. This approach allows the system to convert the human language sentence into a sequence of feasible motion functions. We have developed a novel manoeuvrability-driven controller using a new tool affordance model derived from visual feedback. This controller helps guide the robot's tool utilization and manipulation actions, even within confined areas, using a stepping incremental approach. The proposed methodology is evaluated with experiments to prove its effectiveness under various manipulation scenarios.

Implicit Subgoal Planning with Variational Autoencoders for Long-Horizon Sparse Reward Robotic Tasks

Dec 25, 2023

Abstract:The challenges inherent to long-horizon tasks in robotics persist due to the typical inefficient exploration and sparse rewards in traditional reinforcement learning approaches. To alleviate these challenges, we introduce a novel algorithm, Variational Autoencoder-based Subgoal Inference (VAESI), to accomplish long-horizon tasks in a divide-and-conquer manner. VAESI consists of three components: a Variational Autoencoder (VAE)-based Subgoal Generator, a Hindsight Sampler, and a Value Selector. The VAE-based Subgoal Generator draws inspiration from the human capacity to infer subgoals and reason about the final goal in the context of these subgoals. It is composed of an explicit encoder model, engineered to generate subgoals, and an implicit decoder model, designed to enhance the quality of the generated subgoals by predicting the final goal. Additionally, the Hindsight Sampler selects valid subgoals from an offline dataset to enhance the feasibility of the generated subgoals. The Value Selector utilizes the value function in reinforcement learning to filter the optimal subgoals from subgoal candidates. To validate our method, we conduct several long-horizon tasks in both simulation and the real world, including one locomotion task and three manipulation tasks. The obtained quantitative and qualitative data indicate that our approach achieves promising performance compared to other baseline methods. These experimental results can be seen on the website https://sites.google.com/view/vaesi/home.

Learning Rhythmic Trajectories with Geometric Constraints for Laser-Based Skincare Procedures

Dec 21, 2023

Abstract:The increasing deployment of robots has significantly enhanced the automation levels across a wide and diverse range of industries. This paper investigates the automation challenges of laser-based dermatology procedures in the beauty industry. This group of related manipulation tasks involves delivering energy from a cosmetic laser onto the skin with repetitive patterns. To automate this procedure, we propose to use a robotic manipulator and endow it with the dexterity of a skilled dermatology practitioner through a learning-from-demonstration framework. To ensure that the cosmetic laser can properly deliver the energy onto the skin surface of an individual, we develop a novel structured prediction-based imitation learning algorithm with the merit of handling geometric constraints. Notably, our proposed algorithm effectively tackles the imitation challenges associated with quasi-periodic motions, a common feature of many laser-based cosmetic tasks. The conducted real-world experiments illustrate the performance of our robotic beautician in mimicking realistic dermatological procedures. Our new method is shown to not only replicate the rhythmic movements from the provided demonstrations but also to adapt the acquired skills to previously unseen scenarios and subjects.

A Structured Prediction Approach for Robot Imitation Learning

Sep 26, 2023

Abstract:We propose a structured prediction approach for robot imitation learning from demonstrations. Among various tools for robot imitation learning, supervised learning has been observed to have a prominent role. Structured prediction is a form of supervised learning that enables learning models to operate on output spaces with complex structures. Through the lens of structured prediction, we show how robots can learn to imitate trajectories belonging to not only Euclidean spaces but also Riemannian manifolds. Exploiting ideas from information theory, we propose a class of loss functions based on the f-divergence to measure the information loss between the demonstrated and reproduced probabilistic trajectories. Different types of f-divergence will result in different policies, which we call imitation modes. Furthermore, our approach enables the incorporation of spatial and temporal trajectory modulation, which is necessary for robots to be adaptive to the change in working conditions. We benchmark our algorithm against state-of-the-art methods in terms of trajectory reproduction and adaptation. The quantitative evaluation shows that our approach outperforms other algorithms regarding both accuracy and efficiency. We also report real-world experimental results on learning manifold trajectories in a polishing task with a KUKA LWR robot arm, illustrating the effectiveness of our algorithmic framework.
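To make the notion of an f-divergence concrete, the following sketch evaluates the general definition D_f(P‖Q) = ∫ q(x) f(p(x)/q(x)) dx numerically for two one-dimensional Gaussians. It is only an illustration of the textbook definition, not the loss used in the paper; the Gaussian parameters, the integration range, and the function names are assumptions. Two standard generator functions are compared: f(t) = t log t, which yields KL(P‖Q), and f(t) = -log t, which yields the reverse KL(Q‖P).

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a univariate Gaussian."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def f_divergence(p, q, f, lo=-20.0, hi=20.0, n=40000):
    """Midpoint-rule estimate of D_f(P||Q) = integral of q(x) * f(p(x) / q(x)) dx."""
    h = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * h
        qx = q(x)
        total += qx * f(p(x) / qx)
    return total * h

def kl_gaussians(m1, s1, m2, s2):
    """Closed-form KL(N(m1, s1^2) || N(m2, s2^2)), used here as a reference value."""
    return math.log(s2 / s1) + (s1**2 + (m1 - m2) ** 2) / (2.0 * s2**2) - 0.5

def p(x):
    return gaussian_pdf(x, 0.0, 1.0)  # stand-in for a "demonstrated" distribution

def q(x):
    return gaussian_pdf(x, 1.0, 2.0)  # stand-in for a "reproduced" distribution

forward_kl = f_divergence(p, q, lambda t: t * math.log(t))  # generator of KL(P||Q)
reverse_kl = f_divergence(p, q, lambda t: -math.log(t))     # generator of KL(Q||P)

if __name__ == "__main__":
    print(f"KL(P||Q) numeric={forward_kl:.4f} closed-form={kl_gaussians(0.0, 1.0, 1.0, 2.0):.4f}")
    print(f"KL(Q||P) numeric={reverse_kl:.4f} closed-form={kl_gaussians(1.0, 2.0, 0.0, 1.0):.4f}")
```

The two generators give different values for the same pair of distributions, which is the kind of asymmetry that makes the choice of f-divergence matter when it is used as a training loss.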

PSO-Based Optimal Coverage Path Planning for Surface Defect Inspection of 3C Components with a Robotic Line Scanner

Jul 10, 2023

Abstract:The automatic inspection of surface defects is an important task for quality control in the computers, communications, and consumer electronics (3C) industry. Conventional devices for defect inspection (viz. line-scan sensors) have a limited field of view; thus, a robot-aided defect inspection system needs to scan the object from multiple viewpoints. Optimally selecting the robot's viewpoints and planning a path is regarded as coverage path planning (CPP), a problem that enables inspecting the object's complete surface while reducing the scanning time and avoiding misdetection of defects. However, the development of CPP strategies for robotic line scanners has not been sufficiently studied by researchers. To fill this gap in the literature, in this paper, we present a new approach for robotic line scanners to detect surface defects of 3C free-form objects automatically. Our proposed solution consists of generating a local path by a new hybrid region segmentation method and an adaptive planning algorithm to ensure the coverage of the complete object surface. An optimization method for the global path sequence is developed to maximize the scanning efficiency. To verify our proposed methodology, we conduct detailed simulation-based and experimental studies on various free-form workpieces, and compare its performance with a state-of-the-art solution. The reported results demonstrate the feasibility and effectiveness of our approach.

Efficient Robot Skill Learning with Imitation from a Single Video for Contact-Rich Fabric Manipulation

Apr 24, 2023

Abstract:Classical policy search algorithms for robotics typically require performing extensive explorations, which are time-consuming and expensive to implement with real physical platforms. To facilitate the efficient learning of robot manipulation skills, in this work, we propose a new approach comprised of three modules: (1) learning of general prior knowledge with random explorations in simulation, including state representations, dynamic models, and the constrained action space of the task; (2) extraction of a state alignment-based reward function from a single demonstration video; (3) real-time optimization of the imitation policy under systematic safety constraints with sampling-based model predictive control. This solution results in an efficient one-shot imitation-from-video strategy that simplifies the learning and execution of robot skills in real applications. Specifically, we learn priors in a scene of a task family and then deploy the policy in a novel scene immediately following a single demonstration, preventing time-consuming and risky explorations in the environment. As we do not make a strong assumption of dynamic consistency between the scenes, learning priors can be conducted in simulation to avoid collecting data in real-world circumstances. We evaluate the effectiveness of our approach in the context of contact-rich fabric manipulation, which is a common scenario in industrial and domestic tasks. Detailed numerical simulations and real-world hardware experiments reveal that our method can achieve rapid skill acquisition for challenging manipulation tasks.

Ultrasound-Guided Assistive Robots for Scoliosis Assessment with Optimization-based Control and Variable Impedance

Mar 04, 2022

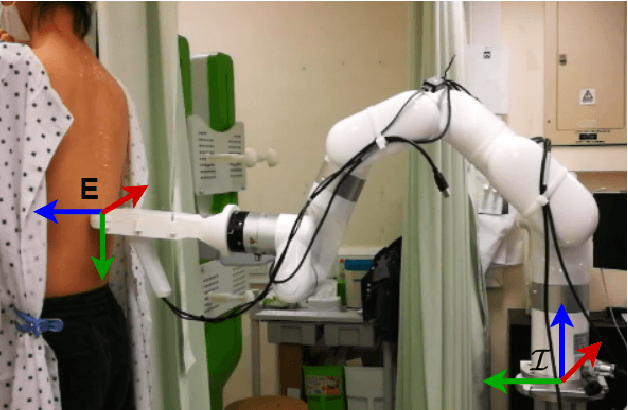

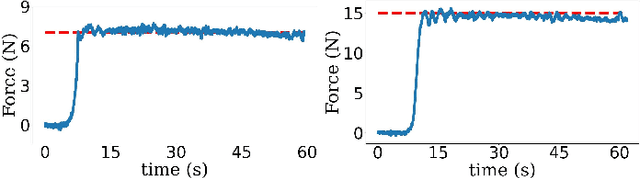

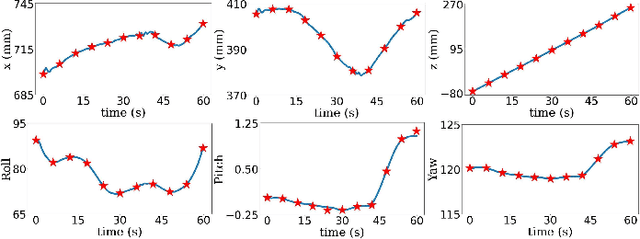

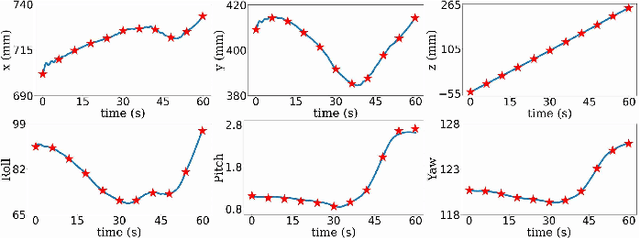

Abstract:Assistive robots for healthcare have seen a growing demand due to the great potential of relieving medical practitioners from routine jobs. In this paper, we investigate the development of an optimization-based control framework for an ultrasound-guided assistive robot to perform scoliosis assessment. A conventional procedure for scoliosis assessment with ultrasound imaging typically requires a medical practitioner to slide an ultrasound probe along a patient's back. To automate this type of procedure, we need to consider multiple objectives, such as contact force, position, orientation, energy, posture, etc. To address the aforementioned components, we propose to formulate the control framework design as a quadratic programming problem with each objective weighted by its task priority subject to a set of equality and inequality constraints. In addition, as the robot needs to establish constant contact with the patient during spine scanning, we incorporate variable impedance regulation of the end-effector position and orientation in the control architecture to enhance safety and stability during the physical human-robot interaction. The variable impedance gains are retrieved by learning from the medical expert's demonstrations. The proposed methodology is evaluated by conducting real-world experiments of autonomous scoliosis assessment with a robot manipulator xArm. The effectiveness is verified by the obtained coronal spinal images of both a phantom and a human subject.
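A quadratic program with priority-weighted objectives and equality and inequality constraints, as described in this abstract, generically takes the form below. This is a textbook-style sketch rather than the paper's exact formulation: the decision variable $x$, the per-objective terms $(A_i, b_i)$, the priority weights $w_i$, and the constraint data $(C, d, G, h)$ are all assumed symbols.

```latex
\min_{x} \; \sum_{i=1}^{N} w_i \,\lVert A_i x - b_i \rVert_2^2
\quad \text{subject to} \quad C x = d, \qquad G x \le h
```

Each term $\lVert A_i x - b_i \rVert_2^2$ would stand for one objective (for example contact force, position, or orientation tracking), and a larger $w_i$ gives that objective higher priority in the trade-off.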
