Omar Zahra

Learning Cloth Folding Tasks with Refined Flow Based Spatio-Temporal Graphs

Oct 16, 2021

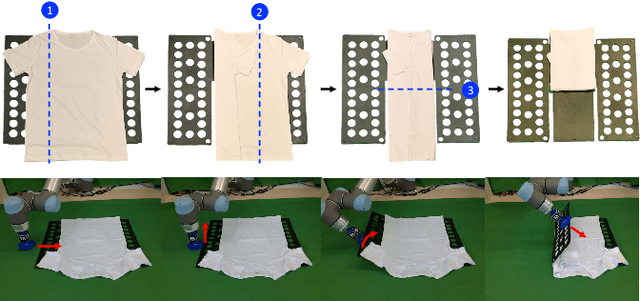

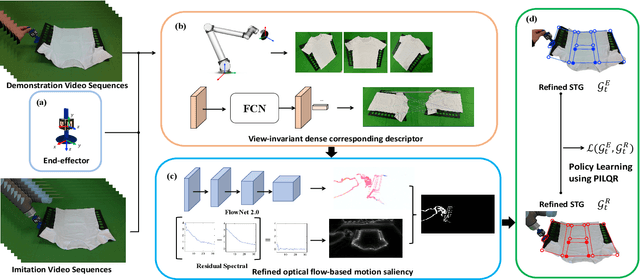

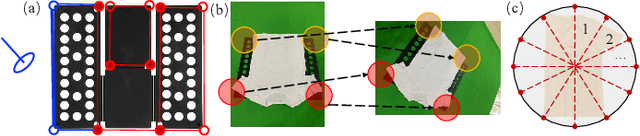

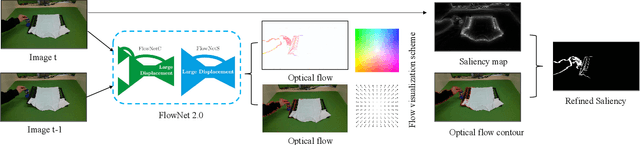

Abstract: Cloth folding is a widespread domestic task that humans perform seamlessly but that is highly challenging for autonomous robots because textiles are highly deformable. It is hard to engineer and learn manipulation pipelines that execute it efficiently. In this paper, we propose a new solution for robotic cloth folding (using a standard folding board) via learning from demonstrations. Our demonstration video encoding is based on a high-level abstraction, namely a refined optical flow-based spatiotemporal graph, as opposed to a low-level encoding such as image pixels. By constructing a new spatiotemporal graph with an advanced visual correspondence descriptor, the policy learning can focus on key points and relations with a 3D spatial configuration, which allows it to generalize quickly across different environments. To further boost the policy search, we combine optical flow and static motion saliency maps to discriminate the dominant motions and better handle the system dynamics in real time, which aligns with the attentional motion mechanism that dominates the human imitation process. To validate the proposed approach, we analyzed the manual folding procedure and developed a custom-made end-effector to interact efficiently with the folding board. Multiple experiments on a real robotic platform were conducted to validate the effectiveness and robustness of the proposed method.

A Neurorobotic Embodiment for Exploring the Dynamical Interactions of a Spiking Cerebellar Model and a Robot Arm During Vision-based Manipulation Tasks

Feb 03, 2021

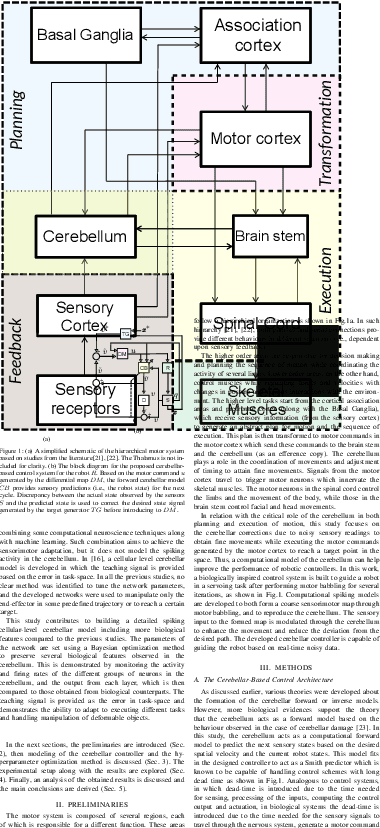

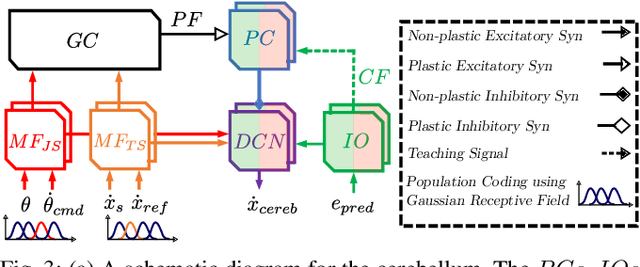

Abstract: While the original goal of developing robots is to replace humans in dangerous and tedious tasks, the ultimate target is to fully mimic human cognitive and motor behaviour. Hence, building detailed computational models of the human brain is one reasonable way to attain this. The cerebellum is one of the key players in our neural system for guaranteeing dexterous manipulation and coordinated movements, as concluded from lesions in that region. Studies suggest that it acts as a forward model providing anticipatory corrections for the sensory signals based on observed discrepancies from the reference values. While most studies provide the teaching signal as an error in joint-space, few studies consider the error in task-space, and even fewer consider the spiking nature of the cerebellum at the cellular level. In this study, a detailed cellular-level forward cerebellar model is developed, including models of Golgi and Basket cells, which are usually neglected in previous studies. To preserve the biological features of the cerebellum in the developed model, a hyperparameter optimization method tunes the network accordingly. The efficiency and biological plausibility of the proposed cerebellar-based controller are then demonstrated in different robotic manipulation tasks, reproducing motor behaviour observed in human reaching experiments.

Vision-Based Control for Robots by a Fully Spiking Neural System Relying on Cerebellar Predictive Learning

Nov 03, 2020

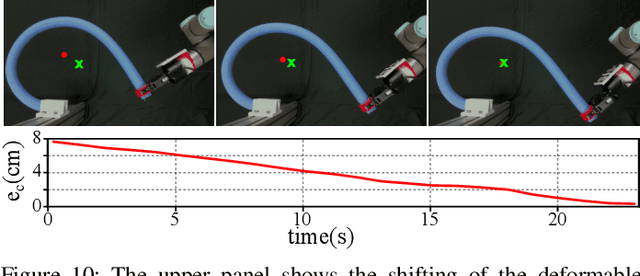

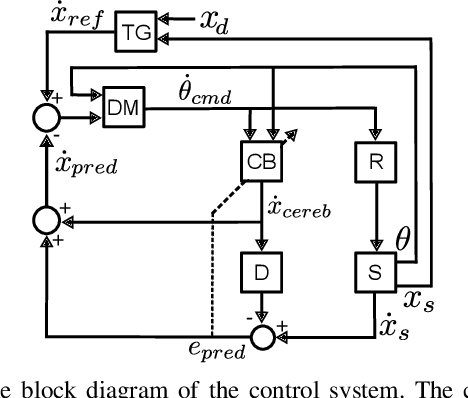

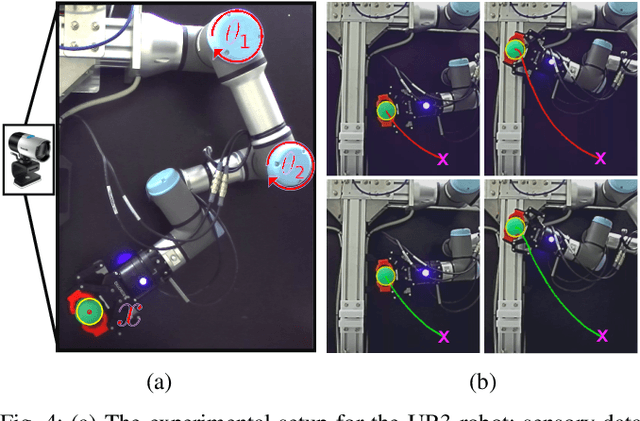

Abstract: The cerebellum plays a distinctive role within our motor control system to achieve fine and coordinated motions. While cerebellar lesions do not lead to a complete loss of motor functions, both action and perception are severely impacted. Hence, it is assumed that the cerebellum uses an internal forward model to provide anticipatory signals by learning from the error in sensory states. Some studies have demonstrated that the learning process relies on the joint-space error. However, such an error signal may not exist. This work proposes a novel fully spiking neural system that relies on forward predictive learning by means of a cellular cerebellar model. The forward model is learnt from the sensory feedback in task-space and acts as a Smith predictor. The latter predicts sensory corrections that are fed as input to a differential mapping spiking neural network during a visual servoing task of a robot arm manipulator. In this paper, we show that the developed control system achieves more accurate target reaching and reduces the motion execution time for robotic reaching tasks thanks to the cerebellar predictive capabilities.

Differential Mapping Spiking Neural Network for Sensor-Based Robot Control

May 20, 2020

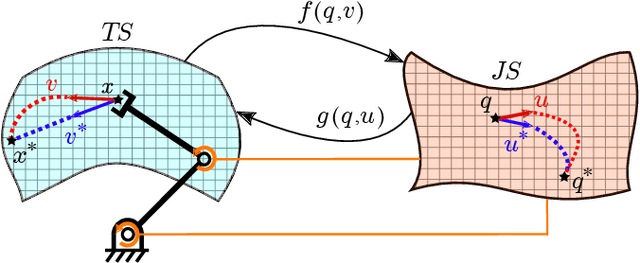

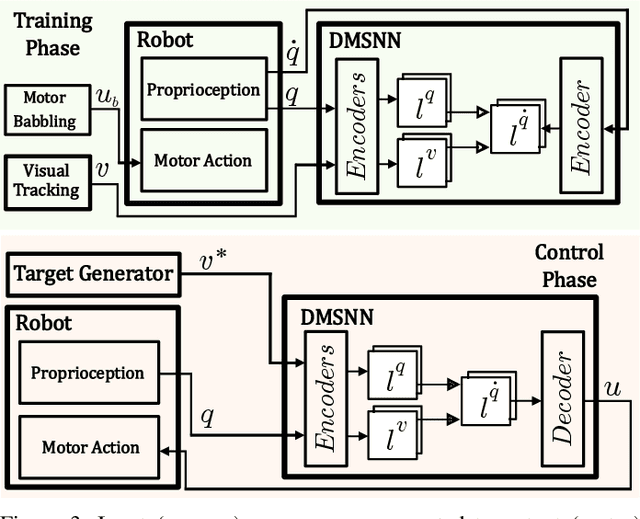

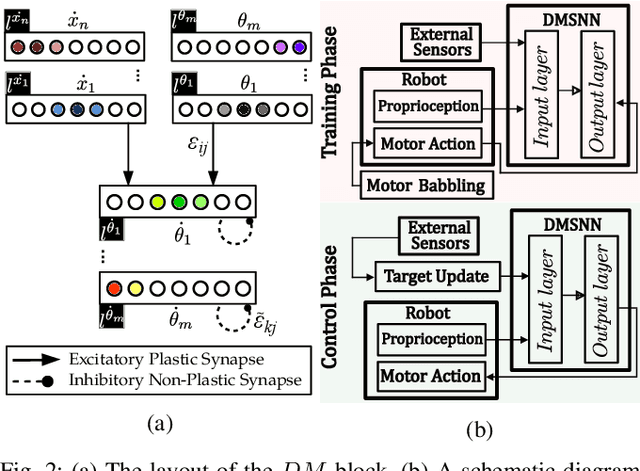

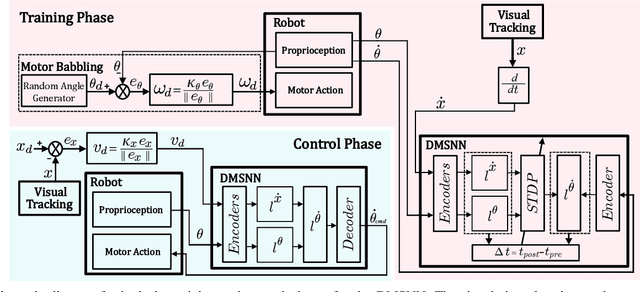

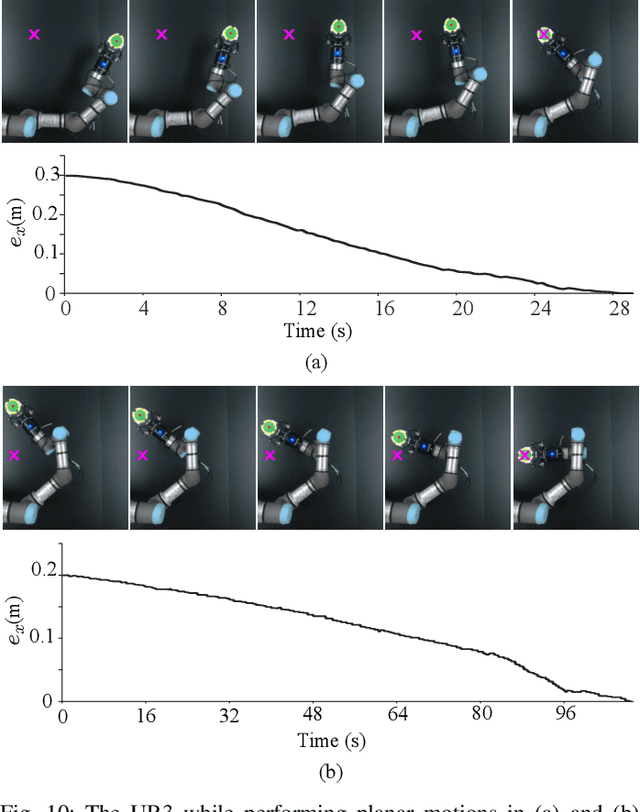

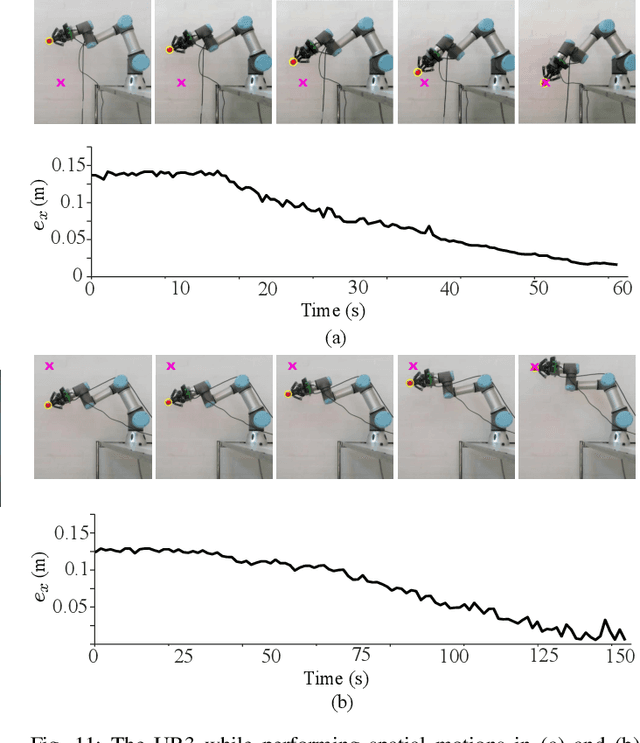

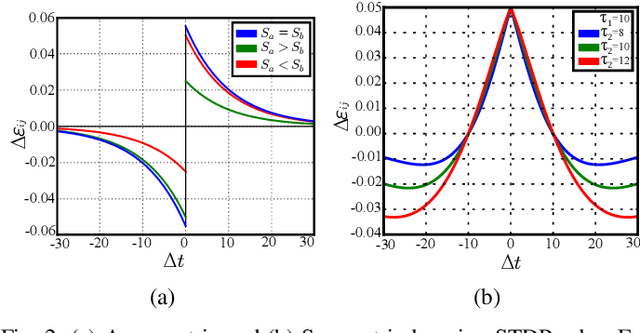

Abstract: In this work, a spiking neural network is proposed for approximating differential sensorimotor maps of robotic systems. The computed model is used as a local Jacobian-like projection that relates changes in sensor space to changes in motor space. The network consists of an input (sensory) layer and an output (motor) layer connected through plastic synapses, with interinhibitory connections at the output layer. Spiking neurons are modeled as Izhikevich neurons, and the synaptic learning rule is based on spike-timing-dependent plasticity. Feedback data from proprioceptive and exteroceptive sensors are encoded and fed into the input layer through a motor babbling process. As the main challenge in building an efficient SNN is tuning its parameters, we present an intuitive tuning method that enables us to considerably reduce the number of neurons and the amount of data required for training. Our proposed architecture represents a biologically plausible neural controller that is capable of handling noisy sensor readings to guide robot movements in real time. Experimental results are presented to validate the control methodology with a vision-guided robot.
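
As an illustration of the two building blocks named in this abstract, the following is a minimal sketch of a single Izhikevich neuron and a pair-based spike-timing-dependent plasticity (STDP) update. It is not the network from the paper: the function names, the forward-Euler step size, the regular-spiking parameter set (a=0.02, b=0.2, c=-65, d=8), and the STDP amplitudes and time constant are all assumptions chosen for illustration.

```python
import math


def simulate_izhikevich(current, duration_ms=500.0, dt=0.5,
                        a=0.02, b=0.2, c=-65.0, d=8.0):
    """Return spike times (ms) of one Izhikevich neuron under constant input.

    Uses forward-Euler integration; the parameters are the commonly quoted
    regular-spiking values, assumed here for illustration only.
    """
    v = c          # membrane potential (mV)
    u = b * v      # recovery variable
    spike_times = []
    for step in range(int(duration_ms / dt)):
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
        if v >= 30.0:              # spike threshold reached: record and reset
            spike_times.append(step * dt)
            v = c
            u += d
    return spike_times


def stdp_weight_change(delta_t_ms, a_plus=0.01, a_minus=0.012, tau_ms=20.0):
    """Pair-based STDP: delta_t_ms = t_post - t_pre.

    Pre-before-post (positive delta) potentiates the synapse;
    post-before-pre (negative delta) depresses it.
    """
    if delta_t_ms > 0:
        return a_plus * math.exp(-delta_t_ms / tau_ms)
    if delta_t_ms < 0:
        return -a_minus * math.exp(delta_t_ms / tau_ms)
    return 0.0


driven_spikes = simulate_izhikevich(current=10.0)
resting_spikes = simulate_izhikevich(current=0.0)
print(len(driven_spikes), len(resting_spikes))
```

With a constant input the neuron fires repeatedly, while with zero input it settles to rest and stays silent. In a network like the one described, updates of this kind would be applied to the plastic synapses between the sensory and motor layers.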

A Self-Organizing Network with Varying Density Structure for Characterizing Sensorimotor Transformations in Robotic Systems

May 01, 2019

Abstract: In this work, we present the development of a neuro-inspired approach for characterizing sensorimotor relations in robotic systems. The proposed method has self-organizing and associative properties that enable it to autonomously obtain these relations without any prior knowledge of either the motor (e.g. mechanical structure) or perceptual (e.g. sensor calibration) models. Self-organizing topographic properties are used to build both sensory and motor maps; the associative properties then govern the stability and accuracy of the emerging connections between these maps. Compared to previous works, our method introduces a new varying density self-organizing map (VDSOM) that controls the concentration of nodes in regions with large transformation errors without much affecting the computational time. A distortion metric is measured to achieve a self-tuning sensorimotor model that adapts to changes in either motor or sensory models. The obtained sensorimotor maps prove to have less error than those of conventional self-organizing methods and show potential for further development.
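
For readers unfamiliar with the conventional baseline this abstract compares against, the following is a minimal sketch of a standard one-dimensional self-organizing map. It is not the VDSOM itself, which additionally adapts node density to the transformation error. The function names, the learning-rate and neighbourhood schedules, and the synthetic 2D data are all assumptions made for illustration.

```python
import math
import random


def nearest_node(nodes, x):
    """Index of the best-matching unit (closest node) for sample x."""
    return min(range(len(nodes)), key=lambda i: math.dist(nodes[i], x))


def quantization_error(nodes, data):
    """Mean distance from each sample to its best-matching unit."""
    return sum(math.dist(nodes[nearest_node(nodes, x)], x) for x in data) / len(data)


def train_som(nodes, data, epochs=20, lr0=0.5, lr1=0.02,
              sigma0=3.0, sigma1=0.5, rng=None):
    """Train a 1D chain of nodes with a Gaussian neighbourhood.

    Learning rate and neighbourhood width decay exponentially from
    (lr0, sigma0) to (lr1, sigma1); these schedules are assumed values.
    """
    rng = rng or random.Random(0)
    nodes = [list(n) for n in nodes]   # copy so the input is not mutated
    total = epochs * len(data)
    t = 0
    for _ in range(epochs):
        samples = data[:]
        rng.shuffle(samples)
        for x in samples:
            frac = t / total
            lr = lr0 * (lr1 / lr0) ** frac
            sigma = sigma0 * (sigma1 / sigma0) ** frac
            bmu = nearest_node(nodes, x)
            for i, node in enumerate(nodes):
                # Nodes close to the winner on the chain move the most.
                h = math.exp(-((i - bmu) ** 2) / (2.0 * sigma * sigma))
                for k in range(len(node)):
                    node[k] += lr * h * (x[k] - node[k])
            t += 1
    return nodes


rng = random.Random(42)
data = [[rng.random(), rng.random()] for _ in range(200)]
# Start with all nodes crowded into one corner of the unit square.
initial_nodes = [[0.1 * rng.random(), 0.1 * rng.random()] for _ in range(10)]
trained_nodes = train_som(initial_nodes, data, rng=rng)
error_before = quantization_error(initial_nodes, data)
error_after = quantization_error(trained_nodes, data)
print(error_before, error_after)
```

A uniform map of this kind spreads its nodes according to the input distribution only. The varying-density idea described in the abstract would instead concentrate nodes where the sensorimotor transformation error is large.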
