Silvia Tolu

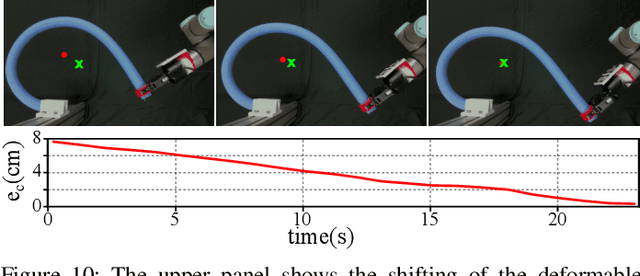

Learning-based Delay Compensation for Enhanced Control of Assistive Soft Robots

Apr 16, 2025

Abstract:Soft robots are increasingly used in healthcare, especially for assistive care, due to their inherent safety and adaptability. Controlling soft robots is challenging due to their nonlinear dynamics and the presence of time delays, especially in applications like a soft robotic arm for patient care. This paper presents a learning-based approach to approximate the nonlinear state predictor (Smith Predictor), aiming to improve tracking performance in a two-module soft robot arm with a short inherent input delay. The method uses a Kernel Recursive Least Squares Tracker (KRLST) for online learning of the system dynamics and a Legendre Delay Network (LDN) to compress past input history for efficient delay compensation. Experimental results demonstrate significant improvement in tracking performance compared to a baseline model-based nonlinear controller. Statistical analysis confirms the significance of the improvements. The method is computationally efficient and adaptable online, making it suitable for real-world scenarios and highlighting its potential for enabling safer and more accurate control of soft robots in assistive care applications.
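As a rough illustration of how an LDN can compress input history, the sketch below builds the state-space matrices in the form commonly used in the Legendre Memory Unit literature. The abstract does not give the paper's exact formulation, so the function names `ldn_matrices` and `ldn_step`, the order `q`, the window length `theta`, and the forward-Euler discretization are all assumptions made for this example.

```python
def ldn_matrices(q, theta):
    """Return (A, B) for a q-dimensional LDN with a window of theta seconds.

    Assumed continuous-time form: dx/dt = A x + B u, with
      A[i][j] = (2i+1)/theta * (-1 if i < j else (-1)**(i-j+1))
      B[i]    = (2i+1)/theta * (-1)**i
    """
    A = [[0.0] * q for _ in range(q)]
    B = [0.0] * q
    for i in range(q):
        scale = (2 * i + 1) / theta
        B[i] = scale * (-1.0) ** i
        for j in range(q):
            A[i][j] = scale * (-1.0 if i < j else (-1.0) ** (i - j + 1))
    return A, B


def ldn_step(x, u, A, B, dt):
    """Advance the LDN state by one forward-Euler step (illustrative only)."""
    q = len(x)
    return [
        x[i] + dt * (sum(A[i][j] * x[j] for j in range(q)) + B[i] * u)
        for i in range(q)
    ]


if __name__ == "__main__":
    A, B = ldn_matrices(q=4, theta=0.5)
    x = [0.0] * 4
    for _ in range(2000):          # feed a constant input for 2 s
        x = ldn_step(x, 1.0, A, B, dt=0.001)
    print(x)                       # compressed summary of the recent input
```

The `q` state values act as Legendre coefficients of the input over the last `theta` seconds, so a predictor can consume a short fixed-size vector instead of the full input buffer.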

Event-based Civil Infrastructure Visual Defect Detection: ev-CIVIL Dataset and Benchmark

Apr 08, 2025

Abstract:Small Unmanned Aerial Vehicle (UAV) based visual inspections are a more efficient alternative to manual methods for examining civil structural defects, offering safe access to hazardous areas and significant cost savings by reducing labor requirements. However, traditional frame-based cameras, widely used in UAV-based inspections, often struggle to capture defects under low or dynamic lighting conditions. In contrast, Dynamic Vision Sensors (DVS), or event-based cameras, excel in such scenarios by minimizing motion blur, enhancing power efficiency, and maintaining high-quality imaging across diverse lighting conditions without saturation or information loss. Despite these advantages, existing research lacks studies exploring the feasibility of using DVS for detecting civil structural defects. Moreover, there is no dedicated event-based dataset tailored for this purpose. Addressing this gap, this study introduces the first event-based civil infrastructure defect detection dataset, capturing defective surfaces as a spatio-temporal event stream using DVS. In addition to event-based data, the dataset includes grayscale intensity image frames captured simultaneously using an Active Pixel Sensor (APS). Both data types were collected using the DAVIS346 camera, which integrates DVS and APS sensors. The dataset focuses on two types of defects, cracks and spalling, and includes data from both field and laboratory environments. The field dataset comprises 318 recording sequences, documenting 458 distinct cracks and 121 distinct spalling instances. The laboratory dataset includes 362 recording sequences, covering 220 distinct cracks and 308 spalling instances. Four real-time object detection models were evaluated on it to validate the dataset's effectiveness. The results demonstrate the dataset's robustness in enabling accurate defect detection and classification, even under challenging lighting conditions.

Assisted Physical Interaction: Autonomous Aerial Robots with Neural Network Detection, Navigation, and Safety Layers

Oct 21, 2024

Abstract:The paper introduces a novel framework for safe and autonomous aerial physical interaction in industrial settings. It comprises two main components: a neural network-based target detection system enhanced with edge computing for reduced onboard computational load, and a control barrier function (CBF)-based controller for safe and precise maneuvering. The target detection system is trained on a dataset under challenging visual conditions and evaluated for accuracy across various unseen data with changing lighting conditions. Depth features are utilized for target pose estimation, with the entire detection framework offloaded into low-latency edge computing. The CBF-based controller enables the UAV to converge safely to the target for precise contact. Simulated evaluations of both the controller and target detection are presented, alongside an analysis of real-world detection performance.

* 8 pages, 14 figures, ICUAS 2024
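To make the idea of a CBF-based safety layer concrete, here is a minimal one-dimensional sketch. It is not the controller from the paper: the single-integrator model `x' = u`, the barrier `h(x) = x_max - x`, the gain `alpha`, and the function names `cbf_filter` and `simulate` are assumptions chosen so the safety condition has a closed form.

```python
def cbf_filter(u_nom, x, x_max, alpha):
    """Minimally modify u_nom so that h(x) = x_max - x stays non-negative.

    For x' = u the CBF condition dh/dt + alpha * h >= 0 reduces to
    u <= alpha * (x_max - x), so the filter is a simple clamp.
    """
    return min(u_nom, alpha * (x_max - x))


def simulate(x0=0.0, x_max=1.0, u_nom=2.0, alpha=5.0, dt=0.01, steps=500):
    """Drive toward the surface with a constant nominal command (Euler steps)."""
    x = x0
    trajectory = [x]
    for _ in range(steps):
        x += dt * cbf_filter(u_nom, x, x_max, alpha)
        trajectory.append(x)
    return trajectory


if __name__ == "__main__":
    traj = simulate()
    print(f"final position: {traj[-1]:.4f}, max position: {max(traj):.4f}")
```

Far from the boundary the nominal command passes through unchanged; near it the filter slows the approach so the state converges to `x_max` without crossing it. In higher dimensions the same condition is usually enforced by solving a small quadratic program at each control step.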

Adaptive Variable Impedance Control for a Modular Soft Robot Manipulator in Configuration Space

Oct 09, 2021

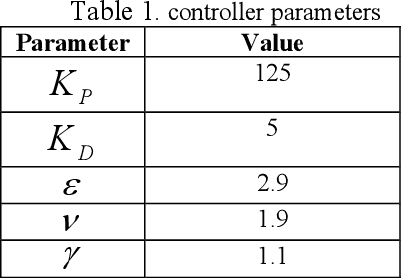

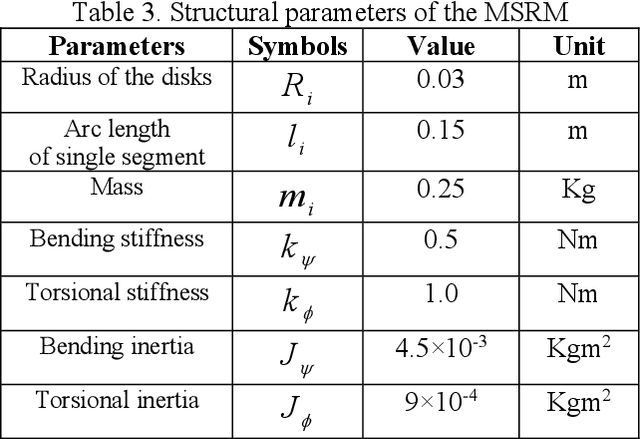

Abstract:Compliance is a strong requirement for human-robot interactions. Soft robots provide an opportunity to cover the lack of compliance in conventional actuation mechanisms; however, controlling them is very challenging given their intrinsically complex motions. Therefore, soft robots require new approaches to, e.g., modeling, control, dynamics, and planning. One of the control strategies that ensures compliance is impedance control. During task execution in the presence of coupling force and position constraints, a dynamic behavior increases the flexibility of the impedance control. This imposes some additional constraints on the stability of the control system. To tackle them, we propose a variable impedance control in configuration space for a modular soft robot manipulator (MSRM) in the presence of model uncertainties and external forces. The external loads are estimated in configuration space using a momentum-based approach in order to reduce the calculation complexity, and the adaptive back-stepping sliding mode (ABSM) controller is designed to guard against uncertainties. Stability analysis is performed using Lyapunov theory, which guarantees not only the exponential stability of each state under the designed control law, but also the global stability of the closed-loop system. The system performance is benchmarked against other conventional control methods, such as the sliding mode (SM) and inverse dynamics PD controllers. The results show the effectiveness of the proposed variable impedance control in stabilizing the position error and diminishing the impact of the external load compared to SM and PD controllers.

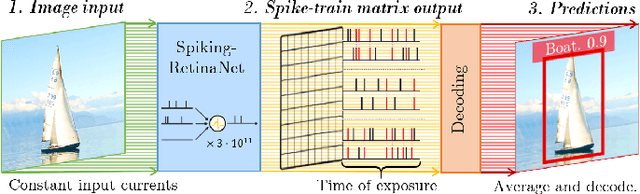

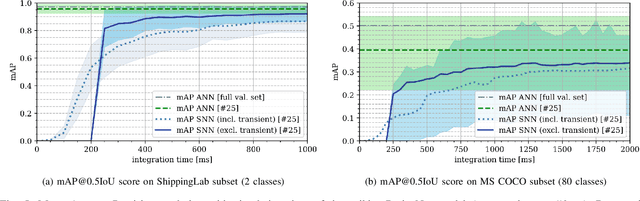

RetinaNet Object Detector based on Analog-to-Spiking Neural Network Conversion

Jun 10, 2021

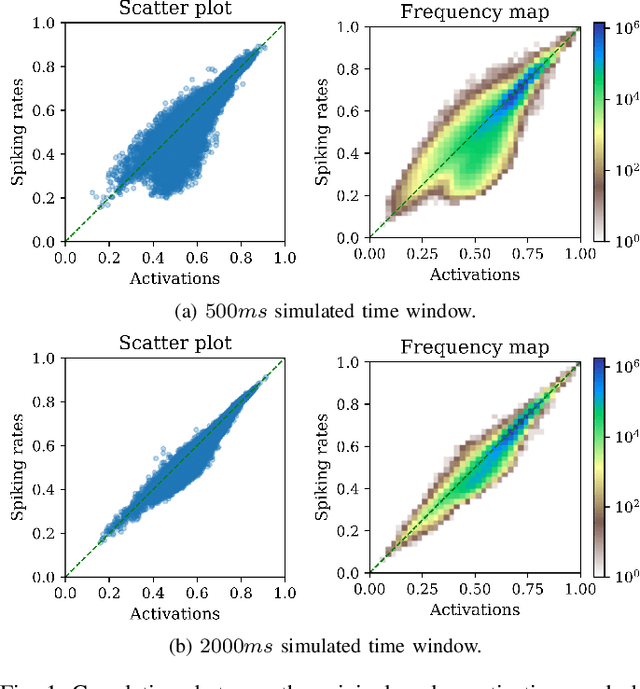

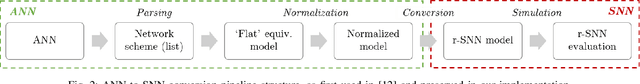

Abstract:The paper proposes a method to convert a deep learning object detector into an equivalent spiking neural network. The aim is to provide a conversion framework that is not constrained to shallow network structures and classification problems as in state-of-the-art conversion libraries. The results show that models of higher complexity, such as the RetinaNet object detector, can be converted with limited loss in performance.
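The principle behind analog-to-spiking conversion can be sketched with a single neuron: an integrate-and-fire unit driven by a constant input fires at a rate that approximates a ReLU activation. This is a generic illustration rather than the paper's conversion framework; the reset-by-subtraction rule, the unit threshold, and the names `if_rate` and `relu` are assumptions for this example.

```python
def relu(a):
    """Analog activation that the spiking neuron should approximate."""
    return max(0.0, a)


def if_rate(a, steps=1000, threshold=1.0):
    """Firing rate of an integrate-and-fire neuron under constant input a.

    Reset-by-subtraction keeps the residual charge after each spike, so the
    spike count tracks the accumulated input closely.
    """
    v = 0.0
    spikes = 0
    for _ in range(steps):
        v += a
        if v >= threshold:
            v -= threshold
            spikes += 1
    return spikes / steps


if __name__ == "__main__":
    for a in (-0.5, 0.0, 0.3, 0.75):
        print(f"input={a:+.2f}  relu={relu(a):.3f}  spike rate={if_rate(a):.3f}")
```

With one spike per time step at most, the rate saturates at 1, which is why conversion pipelines typically normalize weights or thresholds so that activations stay within the representable range.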

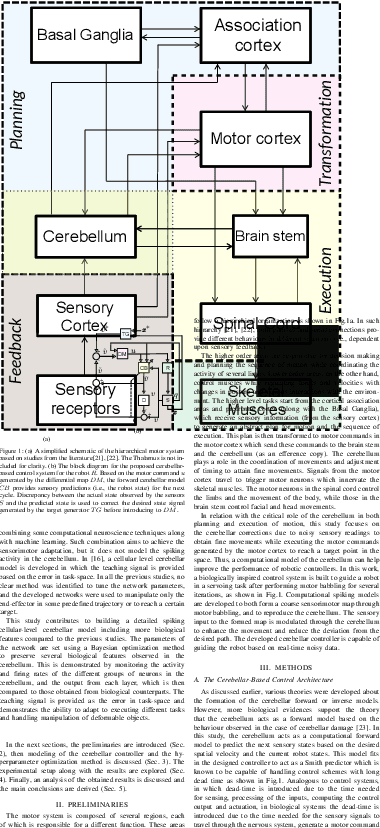

A Neurorobotic Embodiment for Exploring the Dynamical Interactions of a Spiking Cerebellar Model and a Robot Arm During Vision-based Manipulation Tasks

Feb 03, 2021

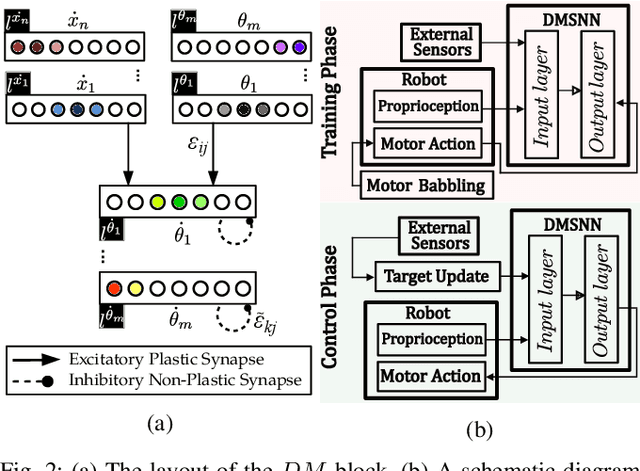

Abstract:While the original goal for developing robots is replacing humans in dangerous and tedious tasks, the final target shall be completely mimicking the human cognitive and motor behaviour. Hence, building detailed computational models for the human brain is one of the reasonable ways to attain this. The cerebellum is one of the key players in our neural system to guarantee dexterous manipulation and coordinated movements as concluded from lesions in that region. Studies suggest that it acts as a forward model providing anticipatory corrections for the sensory signals based on observed discrepancies from the reference values. While most studies consider providing the teaching signal as error in joint-space, few studies consider the error in task-space and even fewer consider the spiking nature of the cerebellum on the cellular-level. In this study, a detailed cellular-level forward cerebellar model is developed, including modeling of Golgi and Basket cells which are usually neglected in previous studies. To preserve the biological features of the cerebellum in the developed model, a hyperparameter optimization method tunes the network accordingly. The efficiency and biological plausibility of the proposed cerebellar-based controller is then demonstrated under different robotic manipulation tasks reproducing motor behaviour observed in human reaching experiments.

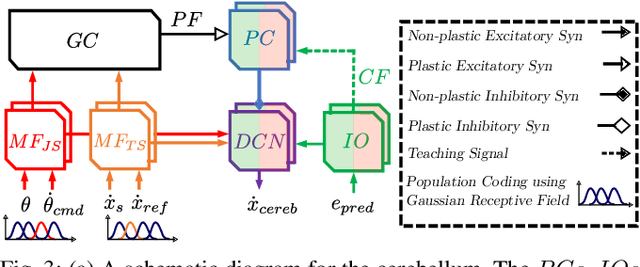

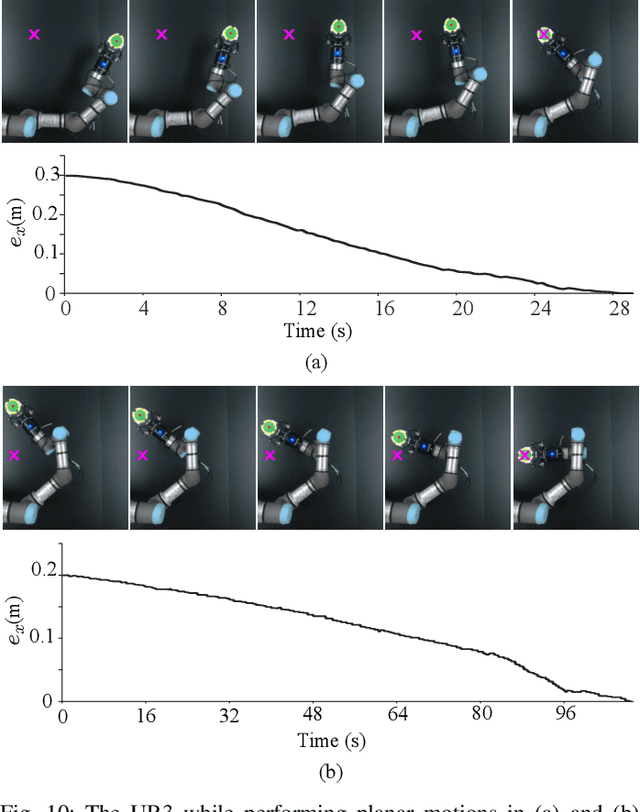

Vision-Based Control for Robots by a Fully Spiking Neural System Relying on Cerebellar Predictive Learning

Nov 03, 2020

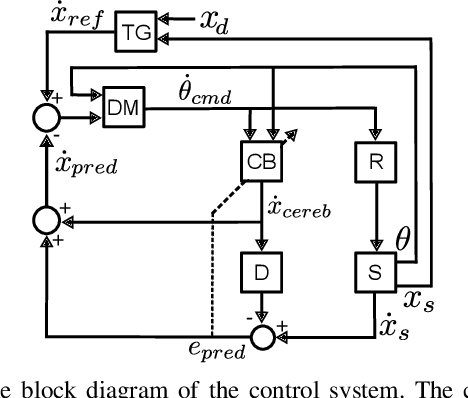

Abstract:The cerebellum plays a distinctive role within our motor control system to achieve fine and coordinated motions. While cerebellar lesions do not lead to a complete loss of motor functions, both action and perception are severely impacted. Hence, it is assumed that the cerebellum uses an internal forward model to provide anticipatory signals by learning from the error in sensory states. In some studies, it was demonstrated that the learning process relies on the joint-space error. However, this may not exist. This work proposes a novel fully spiking neural system that relies on a forward predictive learning by means of a cellular cerebellar model. The forward model is learnt thanks to the sensory feedback in task-space and it acts as a Smith predictor. The latter predicts sensory corrections in input to a differential mapping spiking neural network during a visual servoing task of a robot arm manipulator. In this paper, we promote the developed control system to achieve more accurate target reaching actions and reduce the motion execution time for the robotic reaching tasks thanks to the cerebellar predictive capabilities.

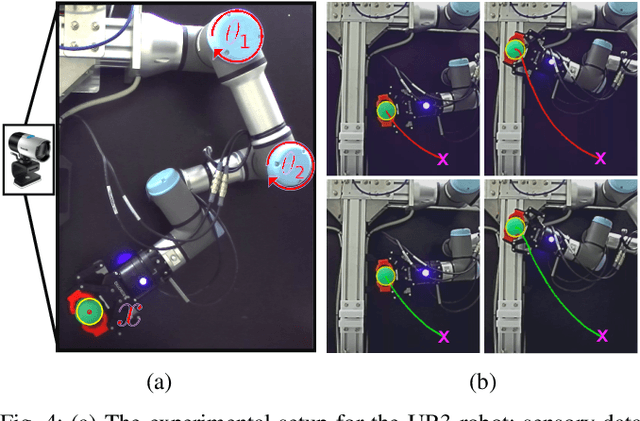

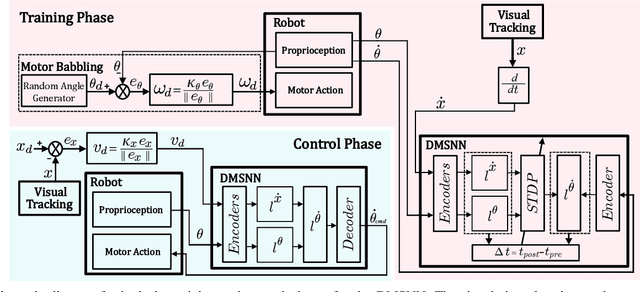

Differential Mapping Spiking Neural Network for Sensor-Based Robot Control

May 20, 2020

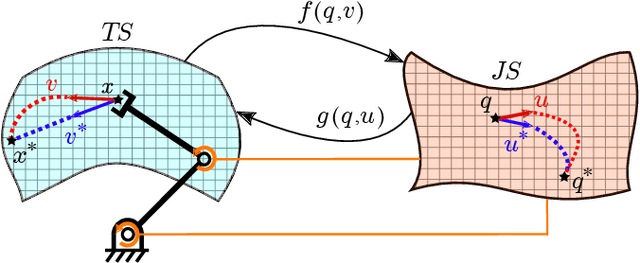

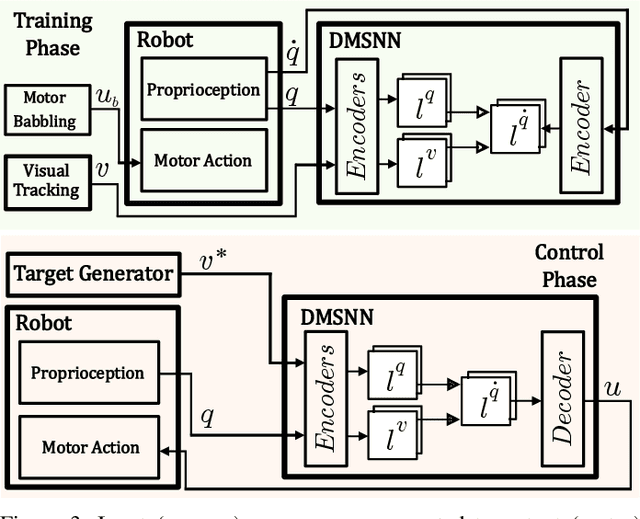

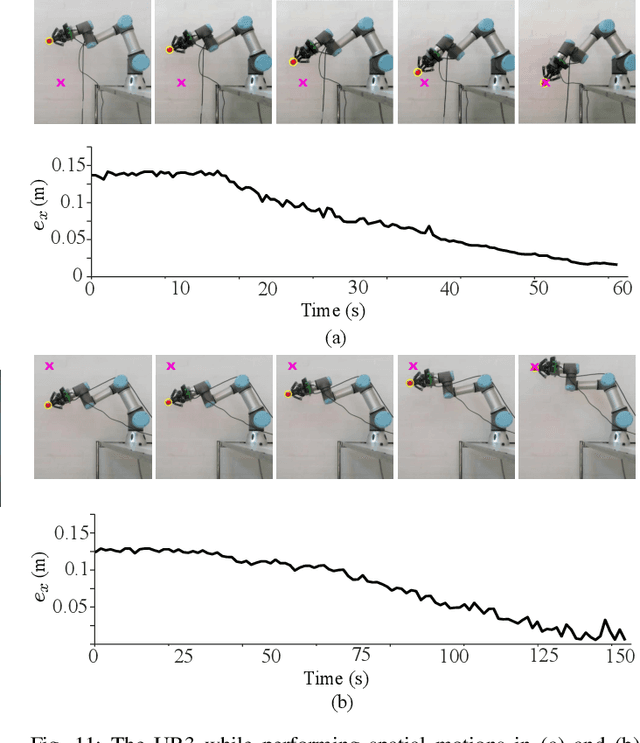

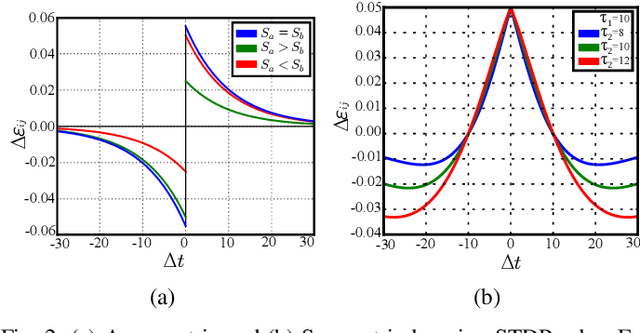

Abstract:In this work, a spiking neural network is proposed for approximating differential sensorimotor maps of robotic systems. The computed model is used as a local Jacobian-like projection that relates changes in sensor space to changes in motor space. The network consists of an input (sensory) layer and an output (motor) layer connected through plastic synapses, with interinhibitory connections at the output layer. Spiking neurons are modeled as Izhikevich neurons with synapses' learning rule based on spike-timing-dependent plasticity. Feedback data from proprioceptive and exteroceptive sensors are encoded and fed into the input layer through a motor babbling process. As the main challenge to building an efficient SNN is to tune its parameters, we present an intuitive tuning method that enables us to considerably reduce the number of neurons and the amount of data required for training. Our proposed architecture represents a biologically plausible neural controller that is capable of handling noisy sensor readings to guide robot movements in real-time. Experimental results are presented to validate the control methodology with a vision-guided robot.
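For readers unfamiliar with the Izhikevich neuron model mentioned above, the following sketch simulates a single neuron under a constant input current. The parameters are the commonly cited "regular spiking" values and are not taken from the paper; the function name `count_spikes`, the Euler step size, and the input currents are assumptions for this example.

```python
def count_spikes(current, duration_ms=1000.0, dt=0.5,
                 a=0.02, b=0.2, c=-65.0, d=8.0):
    """Simulate one Izhikevich neuron and return the number of spikes.

    Model: v' = 0.04 v^2 + 5 v + 140 - u + I,  u' = a (b v - u),
    with the reset v <- c, u <- u + d whenever v reaches 30 mV.
    """
    v = c            # membrane potential (mV)
    u = b * v        # recovery variable
    spikes = 0
    for _ in range(int(duration_ms / dt)):
        dv = 0.04 * v * v + 5.0 * v + 140.0 - u + current
        du = a * (b * v - u)
        v += dt * dv
        u += dt * du
        if v >= 30.0:        # spike detected: apply the after-spike reset
            v = c
            u += d
            spikes += 1
    return spikes


if __name__ == "__main__":
    print("spikes with I=10:", count_spikes(10.0))
    print("spikes with I=0: ", count_spikes(0.0))
```

With no input the neuron settles to rest and stays silent, while a sufficiently large constant current produces sustained firing. In a network like the one described, a spike-timing-dependent plasticity rule would then adjust synaptic weights based on the relative timing of such spikes.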
