Differential Mapping Spiking Neural Network for Sensor-Based Robot Control

May 20, 2020

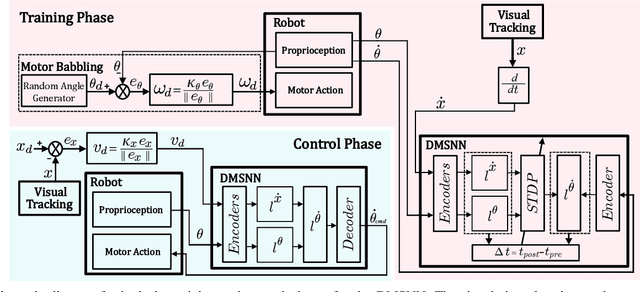

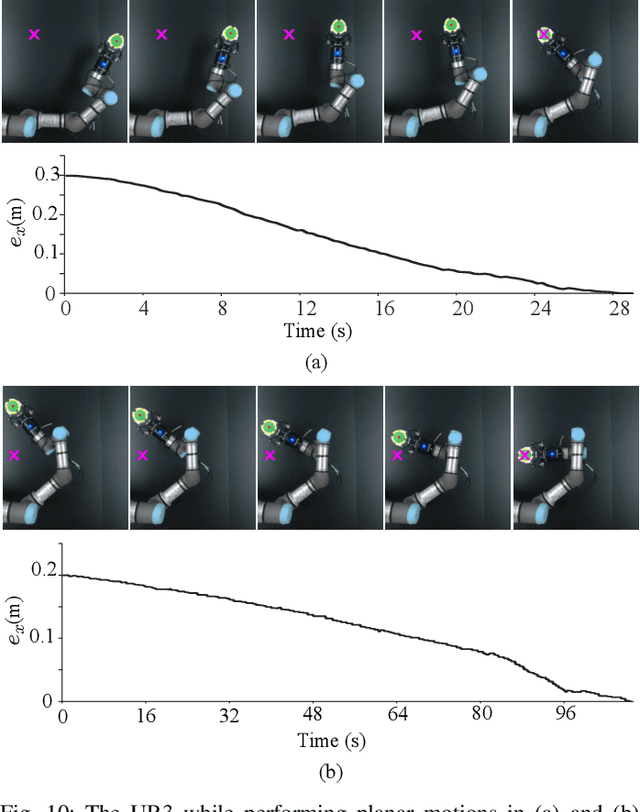

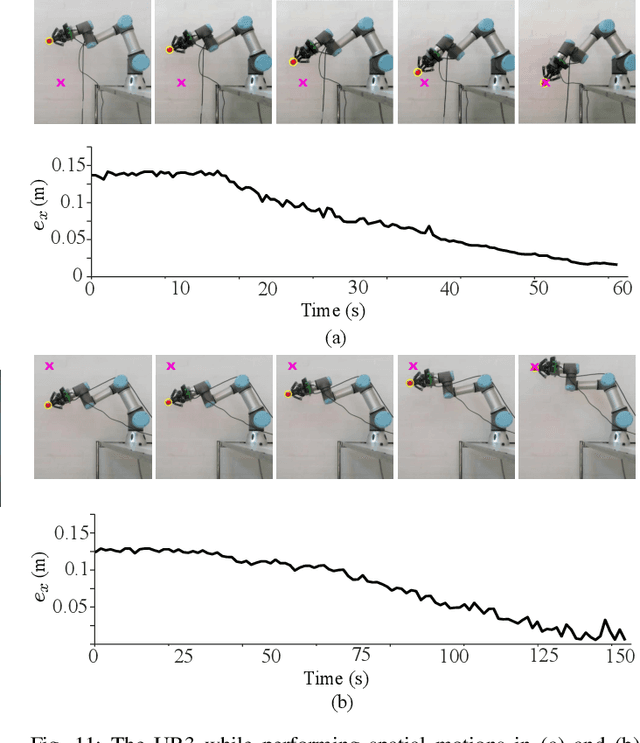

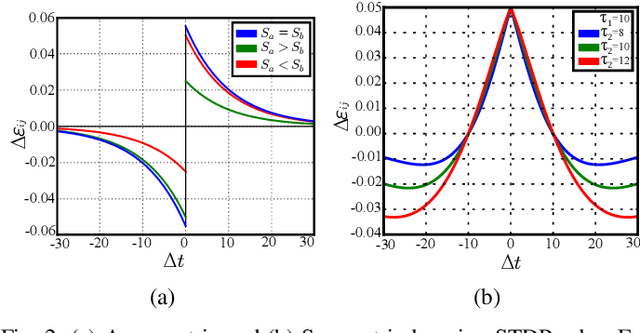

In this work, a spiking neural network (SNN) is proposed for approximating differential sensorimotor maps of robotic systems. The computed model is used as a local Jacobian-like projection that relates changes in sensor space to changes in motor space. The network consists of an input (sensory) layer and an output (motor) layer connected through plastic synapses, with inter-inhibitory connections within the output layer. Neurons are modeled as Izhikevich neurons, and synaptic weights are learned with a rule based on spike-timing-dependent plasticity (STDP). Feedback from proprioceptive and exteroceptive sensors is encoded and fed into the input layer through a motor babbling process. Because the main challenge in building an efficient SNN is tuning its parameters, we present an intuitive tuning method that considerably reduces the number of neurons and the amount of data required for training. The proposed architecture is a biologically plausible neural controller that can handle noisy sensor readings and guide robot movements in real time. Experimental results with a vision-guided robot validate the control methodology.
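The two building blocks named in the abstract, the Izhikevich neuron and an STDP learning rule, can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes the standard regular-spiking parameters from Izhikevich (2003), forward-Euler integration with a 0.5 ms step, and a generic pair-based exponential STDP window. The function names (`izhikevich_spikes`, `stdp_dw`, `apply_stdp`) and all STDP constants are hypothetical illustrations. The paper's tuned values are not given here.

```python
import math

# Regular-spiking Izhikevich parameters (Izhikevich, 2003); assumed, not the paper's tuned values.
A, B, C, D = 0.02, 0.2, -65.0, 8.0


def izhikevich_spikes(current, steps=1000, dt=0.5):
    """Simulate one Izhikevich neuron under a constant input current.

    Integrates dv/dt = 0.04 v^2 + 5 v + 140 - u + I and du/dt = a (b v - u)
    with forward Euler (step dt in ms) and returns the spike times in ms.
    """
    v, u = C, B * C
    spikes = []
    for k in range(steps):
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * A * (B * v - u)
        if v >= 30.0:  # spike peak reached: record the time, then reset v and bump u
            spikes.append(k * dt)
            v, u = C, u + D
    return spikes


def stdp_dw(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP (hypothetical constants).

    Potentiates when the presynaptic spike precedes the postsynaptic one and
    depresses otherwise; simultaneous spikes are treated as depression.
    """
    delta = t_post - t_pre
    if delta > 0:
        return a_plus * math.exp(-delta / tau_plus)
    return -a_minus * math.exp(delta / tau_minus)


def apply_stdp(w, pre_spikes, post_spikes, w_max=1.0):
    """Sum STDP updates over all pre/post spike pairs and clip the weight to [0, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_dw(t_pre, t_post)
    return min(max(w, 0.0), w_max)
```

For example, `izhikevich_spikes(10.0)` produces tonic spiking over 500 ms of simulated time, while `izhikevich_spikes(0.0)` settles at rest near -70 mV without firing. A sensory neuron that consistently fires just before a motor neuron strengthens their synapse under `apply_stdp`, which is the kind of correlation a motor-babbling phase would expose.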