Hoi-Yin Lee

Non-Prehensile Tool-Object Manipulation by Integrating LLM-Based Planning and Manoeuvrability-Driven Controls

Dec 09, 2024

Abstract: The ability to wield tools was once considered exclusive to human intelligence, but it is now known that many other animals, such as crows, possess this capability. Yet robotic systems still fall short of matching biological dexterity. In this paper, we investigate the use of Large Language Models (LLMs), tool affordances, and object manoeuvrability for non-prehensile tool-based manipulation tasks. Our novel method uses LLMs, conditioned on scene information and natural language instructions, to enable symbolic task planning for tool-object manipulation. This approach allows the system to convert a natural language instruction into a sequence of feasible motion functions. We have developed a novel manoeuvrability-driven controller using a new tool affordance model derived from visual feedback. This controller guides the robot's tool use and manipulation actions, even within confined areas, using a stepping incremental approach. The proposed methodology is evaluated with experiments that demonstrate its effectiveness under various manipulation scenarios.

PSO-Based Optimal Coverage Path Planning for Surface Defect Inspection of 3C Components with a Robotic Line Scanner

Jul 10, 2023

Abstract: The automatic inspection of surface defects is an important task for quality control in the computers, communications, and consumer electronics (3C) industry. Conventional devices for defect inspection (viz. line-scan sensors) have a limited field of view; thus, a robot-aided defect inspection system needs to scan the object from multiple viewpoints. Optimally selecting the robot's viewpoints and planning a path is regarded as coverage path planning (CPP), a problem whose solution enables inspecting the object's complete surface while reducing the scanning time and avoiding misdetection of defects. However, CPP strategies for robotic line scanners have not been sufficiently studied. To fill this gap in the literature, we present a new approach for robotic line scanners to detect surface defects of 3C free-form objects automatically. Our proposed solution generates a local path using a new hybrid region segmentation method and an adaptive planning algorithm to ensure coverage of the complete object surface. An optimization method for the global path sequence is developed to maximize the scanning efficiency. To verify our proposed methodology, we conduct detailed simulation-based and experimental studies on various free-form workpieces, and compare its performance with a state-of-the-art solution. The reported results demonstrate the feasibility and effectiveness of our approach.

Self-Reconfigurable Soft-Rigid Mobile Agent with Variable Stiffness and Adaptive Morphology

Oct 16, 2022

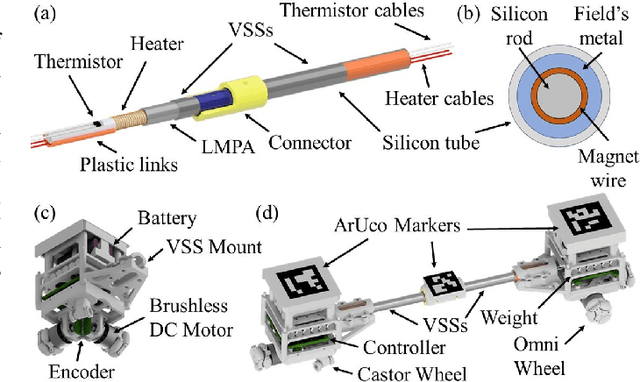

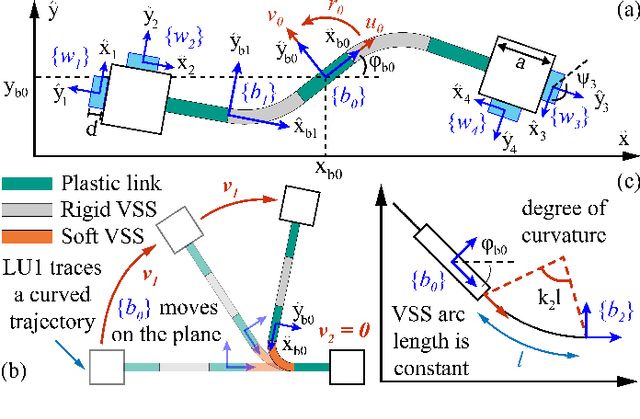

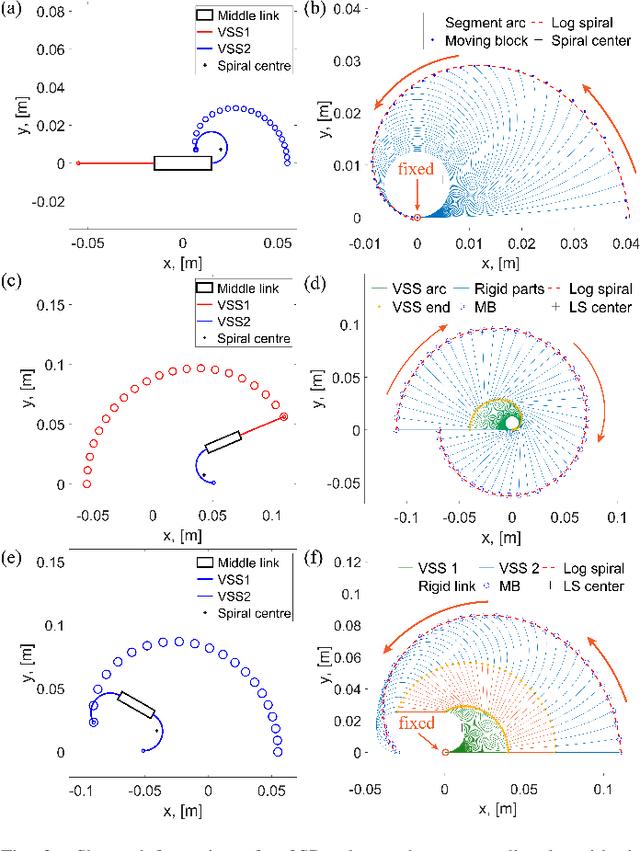

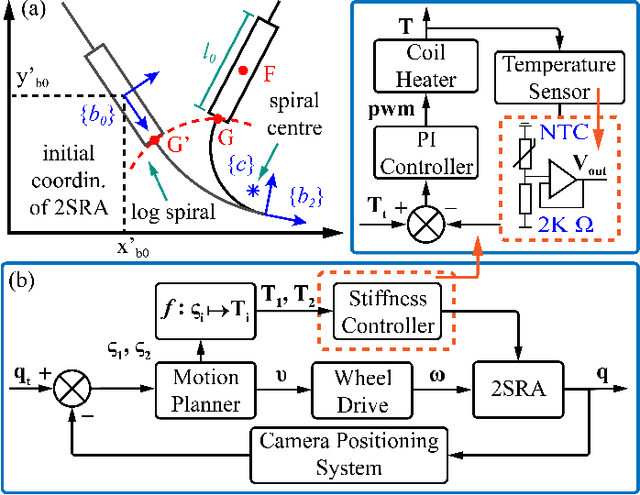

Abstract: In this paper, we propose a novel design of a hybrid mobile robot with controllable stiffness and deformable shape. Compared to conventional mobile agents, our system can switch between rigid and compliant phases by solidifying or melting Field's metal in its structure and, thus, alter its shape through the motion of its active components. In the soft state, the robot's main body can bend into circular arcs, which enables it to conform to surrounding curved objects. This variable geometry of the robot creates new motion modes which cannot be described by standard (i.e., fixed geometry) models. To this end, we develop a unified mathematical model that captures the differential kinematics of both rigid and soft states. An optimised control strategy is further proposed to select the most appropriate phase states and motion modes needed to reach a target pose-shape configuration. The performance of our new method is validated with numerical simulations and experiments conducted on a prototype system. The simulation source code is available in the GitHub repository at https://github.com/Louashka/2sr-agent-simulation.git.

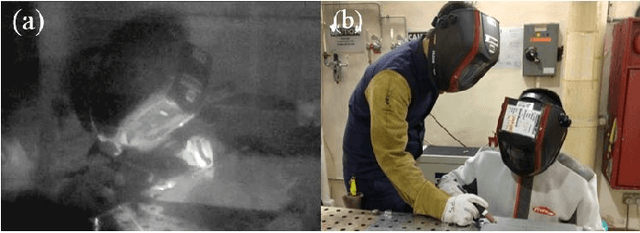

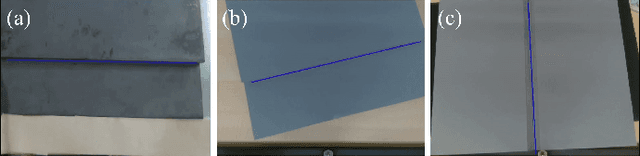

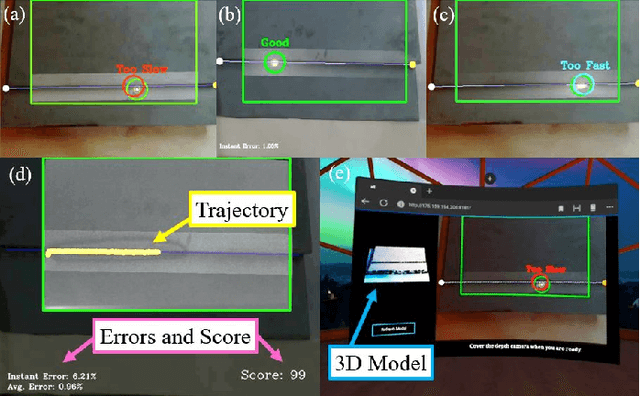

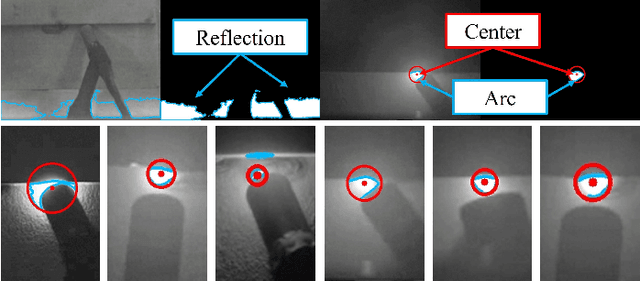

A Multi-Sensor Interface to Improve the Teaching and Learning Experience in Arc Welding Training Tasks

Sep 10, 2021

Abstract: This paper presents the development of a multi-sensor extended reality platform to improve the teaching and learning experience of arc welding tasks. Hand-eye welding coordination skills are traditionally acquired through one-to-one instruction, where trainees and trainers must wear protective helmets and conduct several hands-on tests with metal workpieces. This approach is inefficient, as the harmful light emitted from the electric arc impedes close monitoring of the welding process (practitioners can only observe a small bright spot, and most geometric information cannot be perceived). To tackle these problems, some recent training approaches have leveraged virtual reality (VR) as a way to safely simulate the process and visualize the geometry of the workpieces. However, the synthetic nature of the virtual simulation reduces the effectiveness of the platform; it fails to include actual interactions with the welding environment, which may hinder the learning process of a trainee. To incorporate a real welding experience, in this work we present a new automated multi-sensor extended reality platform for arc welding training. It consists of three components: (1) an HDR camera, monitoring the real welding spot in real time; (2) a depth sensor, capturing the 3D geometry of the scene; and (3) a head-mounted VR display, visualizing the process safely. Our innovative platform provides trainees with a "bot trainer", virtual cues of the seam geometry, automatic spot tracking, and a performance score. To validate the platform's feasibility, we conduct extensive experiments with several welding training tasks. We show that, compared with the traditional training practice and recent virtual reality approaches, our automated method achieves better performance in terms of accuracy, learning curve, and effectiveness.
