Maria Victorova

Reliability of Robotic Ultrasound Scanning for Scoliosis Assessment in Comparison with Manual Scanning

May 07, 2022

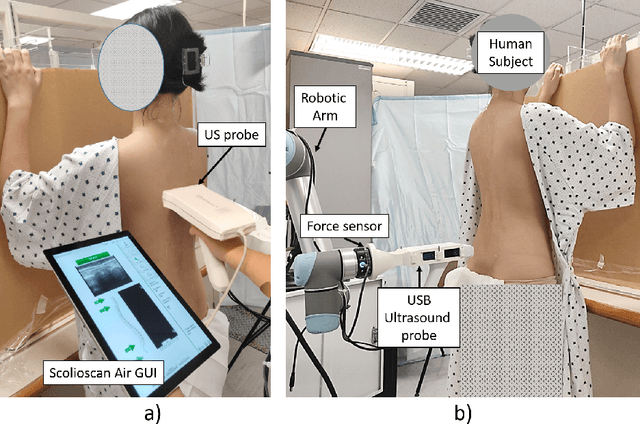

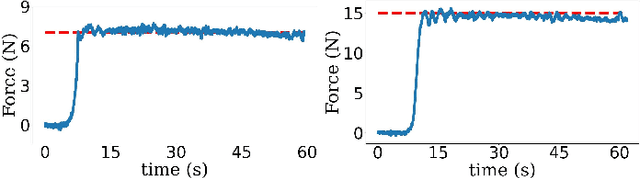

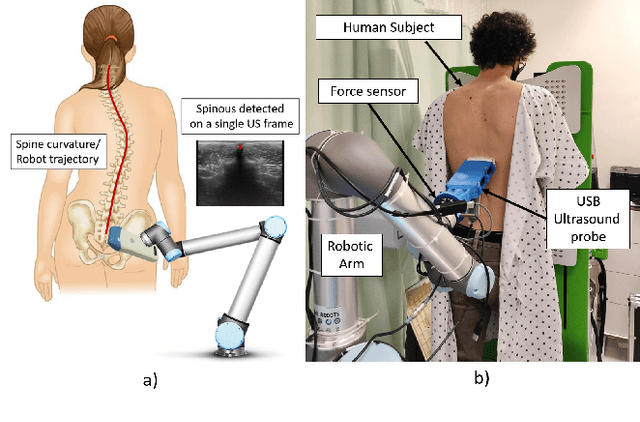

Abstract: Background: Ultrasound (US) imaging for scoliosis assessment is challenging for an inexperienced operator. A robotic scanning method was developed that follows the spinal curvature using deep learning and applies consistent forces to the patient's back. Methods: Twenty-three scoliosis patients were scanned with US devices both robotically and manually. Two human raters measured each subject's spinous process angles (SPA) on the robotic and manual coronal images. Results: The robotic method showed high intra-rater (intraclass correlation coefficient, ICC > 0.85) and inter-rater (ICC > 0.77) reliability. Compared with the manual method, the robotic approach showed no significant difference in measured coronal deformity angles at the 0.05 significance level. For the intra-rater analysis, the MAD lay within an acceptable range of 0° to 5° for between 86% and 97% of all measured angles. Conclusions: This study demonstrated that scoliosis deformity angles measured on ultrasound images obtained with robotic scanning are comparable to those obtained by manual scanning.
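
The reliability figures above combine two measures. The first is an intraclass correlation coefficient. The second is the share of paired angle measurements that agree within 0° to 5°.

The Python sketch below computes both. It assumes the ICC(2,1) form of Shrout and Fleiss: two-way random effects, absolute agreement, single measurement. The abstract does not state which ICC form the study used. The function names `icc_2_1` and `share_within` and the sample angles are hypothetical.

```python
from statistics import mean


def icc_2_1(ratings: list[list[float]]) -> float:
    """ICC(2,1) for n subjects (rows) x k raters or sessions (columns).

    Assumption: two-way random effects, absolute agreement, single measurement
    (Shrout & Fleiss); the study's exact ICC form is not given in the abstract.
    """
    n, k = len(ratings), len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Partition the total sum of squares into subjects, raters, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_err = ss_total - ss_rows - ss_cols

    bms = ss_rows / (n - 1)             # between-subjects mean square
    jms = ss_cols / (k - 1)             # between-raters mean square
    ems = ss_err / ((n - 1) * (k - 1))  # residual mean square
    return (bms - ems) / (bms + (k - 1) * ems + k * (jms - ems) / n)


def share_within(a: list[float], b: list[float], tol_deg: float = 5.0) -> float:
    """Fraction of paired angles whose absolute difference is <= tol_deg."""
    diffs = [abs(x - y) for x, y in zip(a, b)]
    return sum(d <= tol_deg for d in diffs) / len(diffs)


if __name__ == "__main__":
    # Hypothetical SPA values in degrees: rows are subjects, columns are two raters.
    spa = [[12.0, 13.5], [25.0, 23.0], [8.0, 9.0], [31.0, 30.5]]
    print(f"ICC(2,1) = {icc_2_1(spa):.3f}")
    print(f"within 5 deg: {share_within([r[0] for r in spa], [r[1] for r in spa]):.0%}")
```

For intra-rater reliability, the columns would be repeated sessions of the same rater rather than different raters.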

Ultrasound-Guided Assistive Robots for Scoliosis Assessment with Optimization-based Control and Variable Impedance

Mar 04, 2022

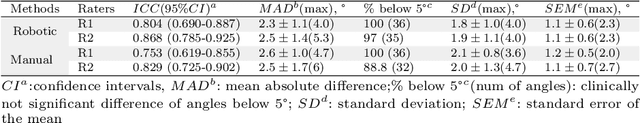

Abstract: Assistive robots for healthcare are in growing demand because of their great potential to relieve medical practitioners of routine tasks. In this paper, we investigate the development of an optimization-based control framework for an ultrasound-guided assistive robot that performs scoliosis assessment. A conventional scoliosis assessment with ultrasound imaging typically requires a medical practitioner to slide an ultrasound probe along the patient's back. Automating this type of procedure requires considering multiple objectives, such as contact force, position, orientation, energy, and posture. To address these objectives, we formulate the control framework as a quadratic programming problem in which each objective is weighted by its task priority, subject to a set of equality and inequality constraints. In addition, because the robot must maintain constant contact with the patient during spine scanning, we incorporate variable impedance regulation of the end-effector position and orientation into the control architecture to enhance safety and stability during physical human-robot interaction. The variable impedance gains are obtained by learning from a medical expert's demonstrations. The proposed methodology is evaluated in real-world experiments of autonomous scoliosis assessment with an xArm robot manipulator, and its effectiveness is verified by the coronal spinal images obtained from both a phantom and a human subject.
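
The priority-weighted formulation above can be illustrated with a small weighted least-squares problem. The Python sketch below minimizes a sum of task errors, each scaled by a priority weight, subject to linear equality constraints only. It solves the KKT system directly.

This is a simplification, not the authors' controller. The inequality constraints mentioned in the abstract, such as joint or velocity limits, would require a proper QP solver. The task matrices, weights and function names are hypothetical.

```python
def solve_linear(m: list[list[float]], v: list[float]) -> list[float]:
    """Solve m @ x = v by Gaussian elimination with partial pivoting."""
    size = len(m)
    a = [list(row) + [v[i]] for i, row in enumerate(m)]  # augmented matrix
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, size):
            factor = a[r][col] / a[col][col]
            for j in range(col, size + 1):
                a[r][j] -= factor * a[col][j]
    x = [0.0] * size
    for r in range(size - 1, -1, -1):
        x[r] = (a[r][size] - sum(a[r][j] * x[j] for j in range(r + 1, size))) / a[r][r]
    return x


def weighted_task_qp(tasks, c, d):
    """Minimize sum_i w_i * ||A_i x - b_i||^2 subject to C x = d (equalities only).

    tasks: list of (w_i, A_i, b_i), with A_i given as a list of rows.
    Solves the KKT system [[H, C^T], [C, 0]] [x; lam] = [g; d], where
    H = 2 * sum w_i A_i^T A_i and g = 2 * sum w_i A_i^T b_i.
    """
    n = len(tasks[0][1][0])
    h = [[0.0] * n for _ in range(n)]
    g = [0.0] * n
    for w, a_mat, b_vec in tasks:
        for row, b in zip(a_mat, b_vec):
            for i in range(n):
                g[i] += 2.0 * w * row[i] * b
                for j in range(n):
                    h[i][j] += 2.0 * w * row[i] * row[j]
    m = len(c)
    kkt = [h[i] + [c[k][i] for k in range(m)] for i in range(n)]
    kkt += [list(c[k]) + [0.0] * m for k in range(m)]
    solution = solve_linear(kkt, g + list(d))
    return solution[:n]  # drop the Lagrange multipliers


if __name__ == "__main__":
    # Hypothetical 2-DOF example: a high-priority task wants x0 = 1, a low-priority
    # task wants x1 = 1, and an equality constraint requires x0 + x1 = 1.
    tasks = [(10.0, [[1.0, 0.0]], [1.0]), (1.0, [[0.0, 1.0]], [1.0])]
    print(weighted_task_qp(tasks, c=[[1.0, 1.0]], d=[1.0]))
```

In this example the two tasks conflict under the constraint, and the weights decide the compromise. The exact solution is x0 = 10/11 and x1 = 1/11, so the high-priority task is nearly satisfied.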

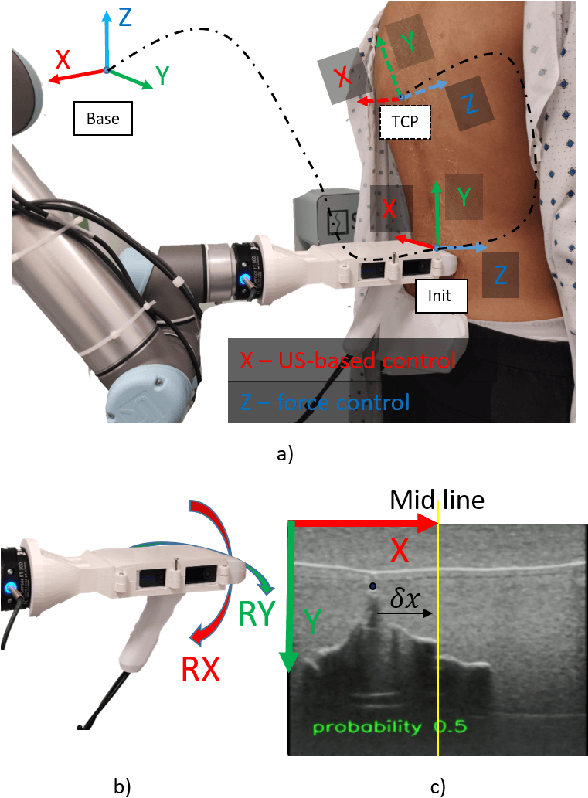

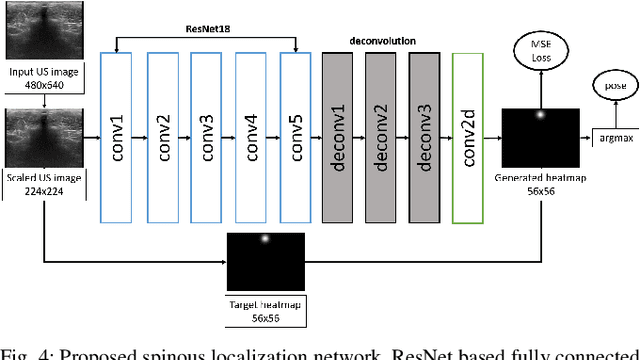
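
The variable impedance regulation in the xArm scoliosis-assessment abstract lets the end-effector stiffness change during the scan. The 1-D Python sketch below assumes a common form of the impedance law: F = K(s)(x_d - x) + D(s)(v_d - v). Damping is derived from the stiffness for a target damping ratio.

The piecewise-linear stiffness profile, indexed by normalized scan progress s, is a hypothetical stand-in for the gains the authors learn from expert demonstrations. The names `stiffness_at` and `impedance_force` and all numbers are illustrative.

```python
import math
from bisect import bisect_right


def stiffness_at(profile: list[tuple[float, float]], s: float) -> float:
    """Piecewise-linear stiffness K(s) from (s_i, K_i) pairs sorted by s_i.

    Hypothetical stand-in for gains learned from demonstrations; progress values
    outside the profile are clamped to the end points.
    """
    knots = [p[0] for p in profile]
    if s <= knots[0]:
        return profile[0][1]
    if s >= knots[-1]:
        return profile[-1][1]
    i = bisect_right(knots, s)
    (s0, k0), (s1, k1) = profile[i - 1], profile[i]
    return k0 + (k1 - k0) * (s - s0) / (s1 - s0)


def impedance_force(x_des: float, x: float, v_des: float, v: float,
                    stiffness: float, mass: float = 1.0, zeta: float = 1.0) -> float:
    """1-D impedance law F = K (x_des - x) + D (v_des - v).

    D = 2 * zeta * sqrt(K * mass), so zeta = 1 gives critical damping for the
    assumed apparent mass.
    """
    damping = 2.0 * zeta * math.sqrt(stiffness * mass)
    return stiffness * (x_des - x) + damping * (v_des - v)


if __name__ == "__main__":
    # Hypothetical profile in N/m: softer at the ends of the scan, stiffer mid-back.
    profile = [(0.0, 200.0), (0.5, 600.0), (1.0, 200.0)]
    for s in (0.0, 0.25, 0.5, 0.75, 1.0):
        k = stiffness_at(profile, s)
        f = impedance_force(x_des=0.005, x=0.0, v_des=0.0, v=0.0, stiffness=k)
        print(f"s={s:.2f}  K={k:.0f} N/m  F={f:.2f} N")
```

Tying damping to stiffness keeps the response critically damped as K varies. Otherwise, stiffening the arm mid-scan would also make it more oscillatory against the patient's back.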

Follow the Curve: Robotic-Ultrasound Navigation with Learning Based Localization of Spinous Processes for Scoliosis Assessment

Sep 11, 2021

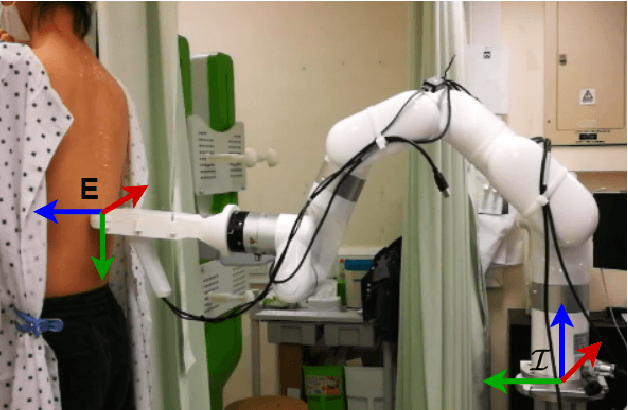

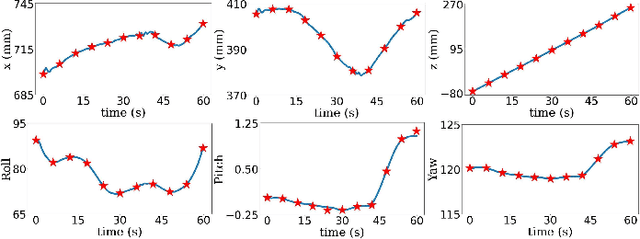

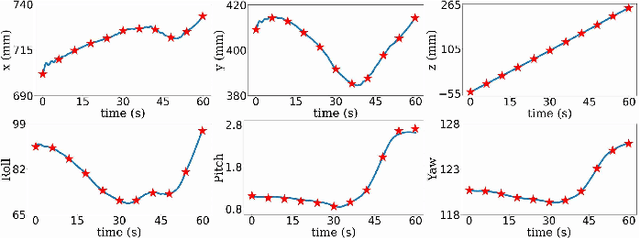

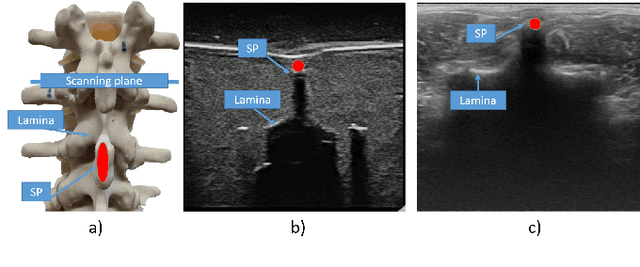

Abstract: Scoliosis progression in adolescents requires close monitoring so that treatment measures can be taken in time. Ultrasound imaging is a radiation-free, low-cost alternative to X-ray imaging, which is typically used for scoliosis assessment in clinical practice. However, ultrasound images are prone to speckle noise, which makes it challenging for sonographers to detect bony features and follow the spine's curvature. This paper introduces a robotic-ultrasound approach for spinal curvature tracking and automatic navigation. A fully connected network with deconvolutional heads is developed to efficiently locate the spinous process in real-time ultrasound images. We use this machine-learning-based method to guide the motion of the robot-held ultrasound probe so that it follows the spinal curvature while capturing ultrasound images and the corresponding probe positions. We also developed a new force-driven controller that automatically adjusts the probe's pose relative to the skin surface to ensure good acoustic coupling between the probe and the skin. After scanning, the acquired data are used to reconstruct a coronal spinal image on which the deformity of the scoliotic spine can be assessed and measured. To evaluate the performance of our methodology, we conducted an experimental study with human subjects in which the deviations from the image center during the robotized procedure were compared with those obtained from manual scanning. The angles of spinal deformity measured on the spinal reconstruction images were similar for both methods, implying that they reflect human anatomy equally well.
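
The navigation loop described in this abstract combines two behaviors:

- steering the probe sideways so the detected spinous process stays at the image center;
- regulating contact force along the probe axis.

The Python sketch below expresses both as simple proportional laws and closes the loop in a toy simulation. The gains, image width, pixel-to-millimetre scale, linear-spring tissue model and all names are assumptions for illustration. The authors' force-driven controller also adjusts probe orientation, which is omitted here.

```python
from dataclasses import dataclass


@dataclass
class ProbeCommand:
    dx_lateral_mm: float  # sideways step toward the detected spinous process
    dz_axial_mm: float    # step along the probe axis (positive = press deeper)


def follow_curve_step(sp_col_px: float, image_width_px: int, mm_per_px: float,
                      force_meas_n: float, force_des_n: float,
                      k_lat: float = 0.5, k_force_mm_per_n: float = 0.2) -> ProbeCommand:
    """One proportional control step with hypothetical gains."""
    # Lateral error: distance of the spinous process from the image center, in mm.
    lateral_err_mm = (sp_col_px - image_width_px / 2) * mm_per_px
    # Axial correction: press in when force is too low, back off when too high.
    dz = k_force_mm_per_n * (force_des_n - force_meas_n)
    return ProbeCommand(k_lat * lateral_err_mm, dz)


def simulate(spine_x_mm: float = 3.0, force_des_n: float = 5.0,
             tissue_n_per_mm: float = 1.0, steps: int = 50) -> tuple[float, float]:
    """Toy closed loop: fixed spine offset, tissue modelled as a linear spring."""
    width, mm_per_px = 256, 0.2
    probe_x, depth = 0.0, 0.0
    for _ in range(steps):
        # Column where an ideal detector would place the spinous process.
        sp_col = width / 2 + (spine_x_mm - probe_x) / mm_per_px
        force = tissue_n_per_mm * depth
        cmd = follow_curve_step(sp_col, width, mm_per_px, force, force_des_n)
        probe_x += cmd.dx_lateral_mm
        depth += cmd.dz_axial_mm
    return probe_x, tissue_n_per_mm * depth


if __name__ == "__main__":
    x, f = simulate()
    print(f"final lateral position {x:.4f} mm, contact force {f:.4f} N")
```

In this discrete loop, the axial force error shrinks by a factor of (1 - k_force × tissue stiffness) each step. It therefore converges only while that product stays between 0 and 2. A real controller would need gains tuned to the expected tissue stiffness.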

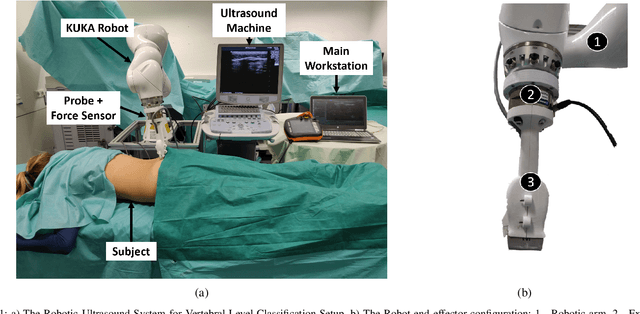

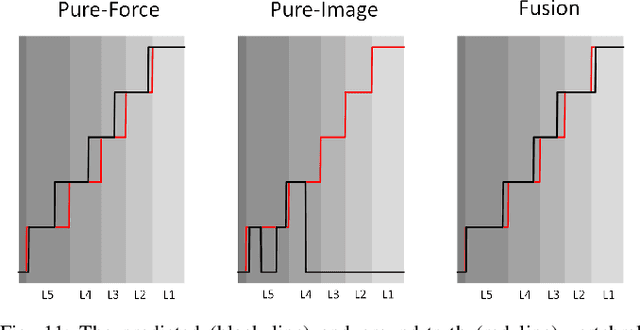
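
After the scan collects spinous-process positions along the spine, a deformity angle can be read off the reconstructed curve. The Python sketch below uses a Cobb-style definition: the largest tilt minus the smallest tilt among the segments joining consecutive spinous processes.

This is an assumed approximation for illustration. It is not the manual SPA measurement protocol used by the raters. The coordinates and function names are hypothetical.

```python
import math


def segment_tilts_deg(points: list[tuple[float, float]]) -> list[float]:
    """Tilt of each segment from the cranio-caudal axis, in degrees.

    points: (lateral_mm, craniocaudal_mm) of consecutive spinous processes,
    ordered along the spine.
    """
    return [math.degrees(math.atan2(x1 - x0, y1 - y0))
            for (x0, y0), (x1, y1) in zip(points, points[1:])]


def max_tilt_difference_deg(points: list[tuple[float, float]]) -> float:
    """Cobb-style angle: largest segment tilt minus smallest segment tilt."""
    tilts = segment_tilts_deg(points)
    return max(tilts) - min(tilts)


if __name__ == "__main__":
    # Hypothetical single C-shaped curve, coordinates in mm.
    curve = [(0.0, 0.0), (4.0, 25.0), (6.0, 50.0), (4.0, 75.0), (0.0, 100.0)]
    print(f"{max_tilt_difference_deg(curve):.1f} deg")
```

Raw spinous-process detections are noisy. In practice the curve would likely be smoothed before taking tilts, since a single outlier can dominate the max and min.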

Force-Ultrasound Fusion: Bringing Spine Robotic-US to the Next "Level"

Feb 26, 2020

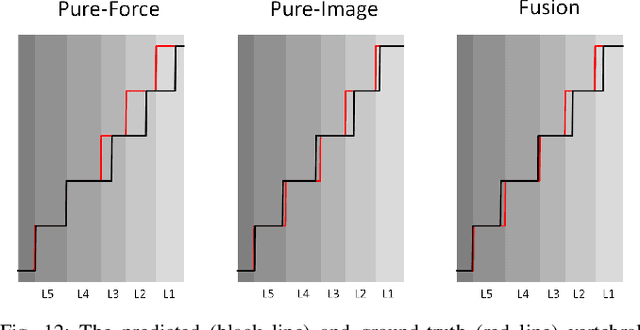

Abstract: Spine injections are commonly performed in several clinical procedures. The target vertebral level (i.e., the position of a vertebra in the spine) is typically localized by back palpation or under X-ray guidance. These approaches carry either a higher chance of procedure failure or exposure to ionizing radiation. Preliminary studies in the literature suggest that ultrasound imaging may be a precise and safe alternative to X-ray for spine level detection. However, ultrasound data are noisy and difficult to interpret. In this study, a robotic-ultrasound approach for automatic vertebral level detection is introduced. The method relies on the fusion of ultrasound and force data, thus providing both "tactile" and visual feedback during the procedure, which yields higher performance in the presence of data corruption. A robotic arm automatically scans the volunteer's back along the spine, using force and ultrasound data to locate the vertebral levels. Vertebral levels appear as peaks in the force trace, and these peaks are enhanced by properly controlling the force the robot applies to the patient's back. Ultrasound data are processed with a deep learning method to extract a 1D signal modelling the probability of a vertebra being present at each location along the spine. The processed force and ultrasound data are fused using a 1D convolutional network to compute the locations of the vertebral levels. The method is compared with purely image-based and purely force-based methods for vertebral level counting and shows improved performance. In particular, the fusion method correctly classifies 100% of the vertebral levels in the test set, whereas the purely image-based and purely force-based methods classify only 80% and 90% of the vertebrae, respectively. The potential of the proposed method is evaluated in an exemplary simulated clinical application.
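
The benefit of fusing the two signals can be illustrated without a trained network. The Python sketch below replaces the paper's learned 1D convolutional fusion with a hand-crafted rule. It multiplies the min-max-normalized force trace by the ultrasound vertebra-probability signal, then picks peaks. This shows why combining the signals suppresses peaks that appear in only one of them.

The synthetic signals, threshold, spacing parameter and function names are hypothetical.

```python
import math


def normalize(sig: list[float]) -> list[float]:
    """Min-max normalize to [0, 1]; a constant signal maps to zeros."""
    lo, hi = min(sig), max(sig)
    return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v in sig]


def find_peaks(sig: list[float], threshold: float, min_gap: int) -> list[int]:
    """Indices of local maxima >= threshold; within min_gap samples keep the higher."""
    peaks: list[int] = []
    for i in range(1, len(sig) - 1):
        if sig[i] >= threshold and sig[i] >= sig[i - 1] and sig[i] > sig[i + 1]:
            if peaks and i - peaks[-1] < min_gap:
                if sig[i] > sig[peaks[-1]]:
                    peaks[-1] = i
            else:
                peaks.append(i)
    return peaks


def fuse_and_detect(force: list[float], us_prob: list[float],
                    threshold: float = 0.5, min_gap: int = 5) -> list[int]:
    """Hand-crafted stand-in for the learned fusion: elementwise product, then peaks."""
    fused = [f * p for f, p in zip(normalize(force), normalize(us_prob))]
    return find_peaks(fused, threshold, min_gap)


def bumps(length: int, centers: list[int], width: float = 2.0) -> list[float]:
    """Synthetic signal: a sum of Gaussian bumps at the given sample indices."""
    return [sum(math.exp(-((i - c) / width) ** 2) for c in centers) for i in range(length)]


if __name__ == "__main__":
    # Hypothetical traces: true vertebrae at samples 10, 30, 50; the force trace
    # has a spurious peak at 40 and the ultrasound signal one at 20.
    force = bumps(60, [10, 30, 40, 50])
    us_prob = bumps(60, [10, 20, 30, 50])
    print("force only:     ", find_peaks(normalize(force), 0.5, 5))
    print("ultrasound only:", find_peaks(normalize(us_prob), 0.5, 5))
    print("fused:          ", fuse_and_detect(force, us_prob))
```

A product is a deliberately strict rule: a level is kept only where both modalities agree. A learned fusion network can instead weigh the modalities adaptively, for example when one signal is corrupted.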
