Anmin Fu

From Pixels to Trajectory: Universal Adversarial Example Detection via Temporal Imprints

Mar 06, 2025

Abstract:For the first time, we unveil discernible temporal (or historical) trajectory imprints resulting from adversarial example (AE) attacks. In contrast to existing studies, which all focus on spatial (or static) imprints within the targeted victim models, we present a fresh temporal paradigm for understanding these attacks. The key finding is that these imprints are encapsulated within a single loss metric and hold universally across diverse tasks, such as classification and regression, and modalities, including image, text, and audio. Recognizing the distinct nature of loss between adversarial and clean examples, we exploit this temporal imprint for AE detection by proposing TRAIT (TRaceable Adversarial temporal trajectory ImprinTs). TRAIT operates under minimal assumptions without prior knowledge of attacks, thereby framing the detection challenge as a one-class classification problem. However, detecting AEs is still challenged by significant overlaps between the constructed synthetic losses of adversarial and clean examples, due to the absence of ground truth for incoming inputs. TRAIT addresses this challenge by converting the synthetic loss into a spectrum signature, using the Fast Fourier Transform to highlight the discrepancies; this draws on the temporal nature of the imprints, which are analogous to time-series signals. Across 12 AE attacks including SMACK (USENIX Sec'2023), TRAIT demonstrates consistently outstanding performance across comprehensively evaluated modalities, tasks, datasets, and model architectures. In all scenarios, TRAIT achieves an AE detection accuracy exceeding 97%, often around 99%, while maintaining a false rejection rate of 1%. TRAIT remains effective under the formulated strong adaptive attacks.
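The spectrum-signature idea in the TRAIT abstract can be illustrated with a minimal sketch. Everything specific here is an assumption, not the authors' method: the abstract does not say how the synthetic loss is constructed, so the sketch assumes one loss value per saved model snapshot, and the one-class detector is a plain distance threshold fitted on clean data only. The names `spectrum_signature`, `fit_threshold`, and `is_adversarial` are hypothetical.

```python
import numpy as np

def spectrum_signature(loss_trajectory):
    """Magnitude spectrum of a loss trajectory (one loss per snapshot; assumed layout)."""
    losses = np.asarray(loss_trajectory, dtype=float)
    centered = losses - losses.mean()  # remove the constant offset so shape dominates
    return np.abs(np.fft.rfft(centered))

def fit_threshold(clean_signatures, false_rejection_rate=0.01):
    """One-class fit on clean signatures only (illustrative detector, not TRAIT's)."""
    clean = np.asarray(clean_signatures, dtype=float)
    center = clean.mean(axis=0)
    distances = np.linalg.norm(clean - center, axis=1)
    # Pick the cut-off so that roughly the requested share of clean inputs is rejected.
    threshold = np.quantile(distances, 1.0 - false_rejection_rate)
    return center, threshold

def is_adversarial(signature, center, threshold):
    """Flag an input whose signature lies farther from the clean center than the cut-off."""
    return bool(np.linalg.norm(signature - center) > threshold)
```

In this sketch the threshold is chosen from clean data alone, which mirrors the one-class framing: no adversarial examples are needed at fitting time.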

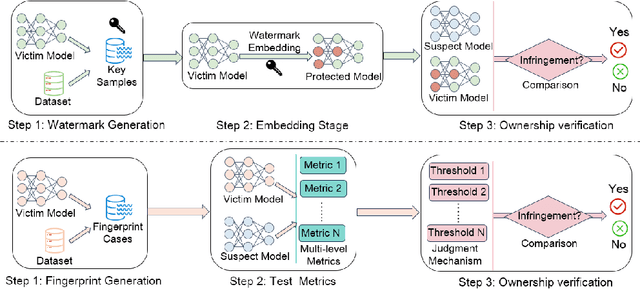

Intellectual Property Protection for Deep Learning Model and Dataset Intelligence

Nov 07, 2024

Abstract:With the growing applications of Deep Learning (DL), especially the recent spectacular achievements of Large Language Models (LLMs) such as ChatGPT and LLaMA, the commercial significance of these remarkable models has soared. However, acquiring well-trained models is costly and resource-intensive. It requires a large, high-quality dataset, substantial investment in dedicated architecture design, expensive computational resources, and efforts to develop technical expertise. Consequently, Intellectual Property Protection (IPP) for well-trained models is attracting increasing attention. In contrast to existing surveys, which overwhelmingly focus on model IPP, this survey covers the protection of not only model-level intelligence but also valuable dataset intelligence. Firstly, according to the requirements for effective IPP design, this work systematically summarizes the general and scheme-specific performance evaluation metrics. Secondly, from the perspectives of proactive IP infringement prevention and reactive IP ownership verification, it comprehensively investigates and analyzes the existing IPP methods for both dataset and model intelligence. Additionally, from the standpoint of training settings, it delves into the unique challenges that distributed settings pose to IPP compared to centralized settings. Furthermore, this work examines various attacks faced by deep IPP techniques. Finally, we outline prospects for promising future directions that may act as a guide for innovative research.

TruVRF: Towards Triple-Granularity Verification on Machine Unlearning

Aug 12, 2024

Abstract:The concept of the right to be forgotten has led to growing interest in machine unlearning, but reliable validation methods are lacking, creating opportunities for dishonest model providers to mislead data contributors. Traditional invasive methods like backdoor injection are not feasible for legacy data. To address this, we introduce TruVRF, a non-invasive unlearning verification framework operating at class-, volume-, and sample-level granularities. TruVRF includes three Unlearning-Metrics designed to detect different types of dishonest servers: Neglecting, Lazy, and Deceiving. Unlearning-Metric-I checks class alignment, Unlearning-Metric-II verifies sample count, and Unlearning-Metric-III confirms specific sample deletion. Evaluations on three datasets show TruVRF's robust performance, with over 90% accuracy for Metrics I and III, and a 4.8% to 8.2% inference deviation for Metric II. TruVRF also demonstrates generalizability and practicality across various conditions and with state-of-the-art unlearning frameworks like SISA and Amnesiac Unlearning.

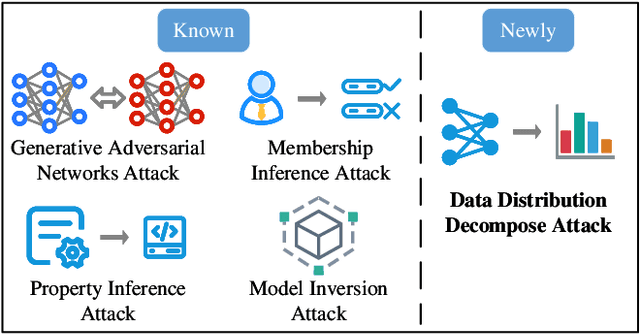

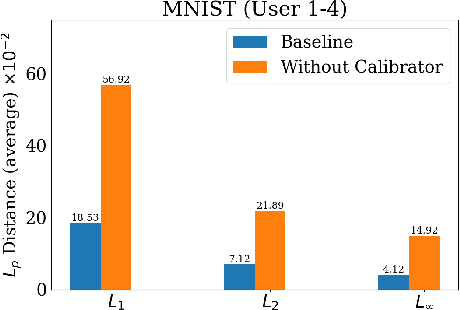

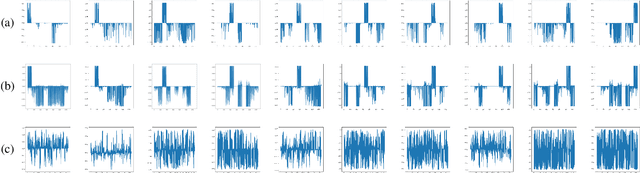

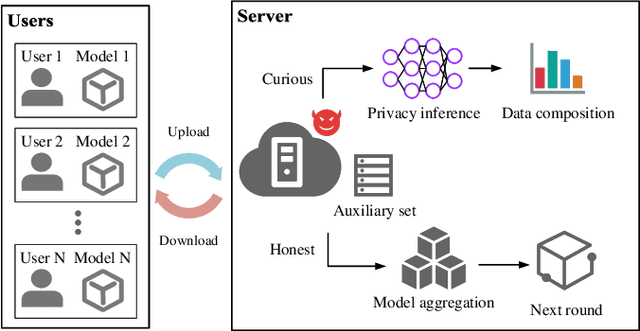

Decaf: Data Distribution Decompose Attack against Federated Learning

May 24, 2024

Abstract:In contrast to prevalent Federated Learning (FL) privacy inference techniques such as generative adversarial network attacks, membership inference attacks, property inference attacks, and model inversion attacks, we devise an innovative privacy threat: the Data Distribution Decompose Attack on FL, termed Decaf. This attack enables an honest-but-curious FL server to meticulously profile the proportion of each class owned by the victim FL user, divulging sensitive information like local market item distribution and business competitiveness. The crux of Decaf lies in the observation that the magnitude of local model gradient changes closely mirrors the underlying data distribution, including the proportion of each class. Decaf addresses two crucial challenges: accurately identifying the missing/null class(es) of any victim user as a premise, and then quantifying the precise relationship between gradient changes and each remaining non-null class. Notably, Decaf operates stealthily: it is entirely passive and undetectable to victim users, who cannot tell that their data distribution privacy has been infringed. Experimental validation on five benchmark datasets (MNIST, FASHION-MNIST, CIFAR-10, FER-2013, and SkinCancer) employing diverse model architectures, including customized convolutional networks, standardized VGG16, and ResNet18, demonstrates Decaf's efficacy. Results indicate its ability to accurately decompose local user data distribution, regardless of whether it is IID or non-IID distributed. Specifically, the dissimilarity measured using $L_{\infty}$ distance between the distribution decomposed by Decaf and ground truth is consistently below 5% when no null classes exist. Moreover, Decaf achieves 100% accuracy in determining any victim user's null classes, validated through formal proof.
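The $L_{\infty}$ dissimilarity quoted in the Decaf abstract is the largest per-class gap between two class-proportion vectors. A minimal sketch follows; the helper name `linf_distance` and the example proportions are made up for illustration and are not results from the paper.

```python
import numpy as np

def linf_distance(estimated, ground_truth):
    """Largest absolute per-class difference between two class-proportion vectors."""
    p = np.asarray(estimated, dtype=float)
    q = np.asarray(ground_truth, dtype=float)
    if p.shape != q.shape:
        raise ValueError("both distributions must cover the same classes")
    return float(np.max(np.abs(p - q)))

# Hypothetical four-class example: the worst per-class error is 2 percentage points.
estimated = [0.48, 0.32, 0.20, 0.00]
ground_truth = [0.50, 0.30, 0.20, 0.00]
gap = linf_distance(estimated, ground_truth)
```

Under this reading, a value below 0.05 means that no single class proportion is off by more than five percentage points.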

Machine Unlearning: Taxonomy, Metrics, Applications, Challenges, and Prospects

Mar 13, 2024

Abstract:Personal digital data is a critical asset, and governments worldwide have enforced laws and regulations to protect data privacy. Data users have been endowed with the right to have their data forgotten. In the context of machine learning (ML), the right to be forgotten requires a model provider to delete user data and its subsequent impact on ML models upon user requests. Machine unlearning has emerged to address this and has garnered ever-increasing attention from both industry and academia. While the area has developed rapidly, there is a lack of comprehensive surveys that capture the latest advancements. Recognizing this shortage, we conduct an extensive exploration to map the landscape of machine unlearning, including the (fine-grained) taxonomy of unlearning algorithms under centralized and distributed settings, the debate on approximate unlearning, verification and evaluation metrics, challenges and solutions for unlearning under different applications, as well as attacks targeting machine unlearning. The survey concludes by outlining potential directions for future research, hoping to serve as a guide for interested scholars.

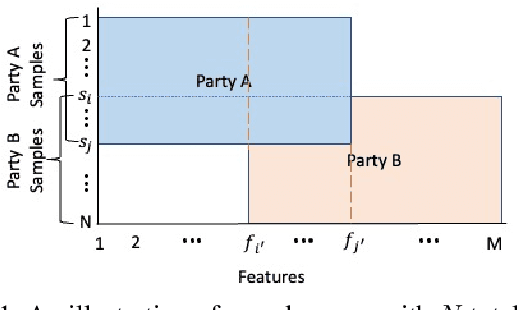

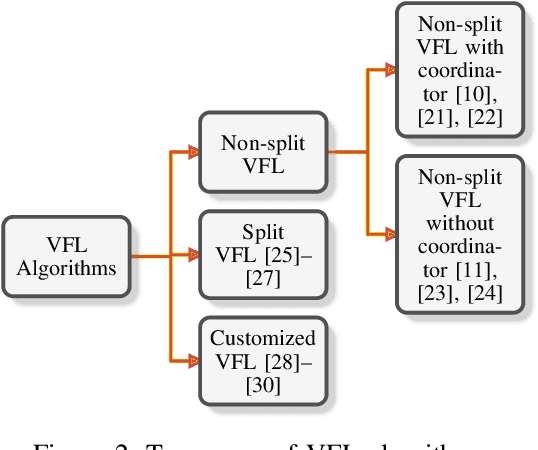

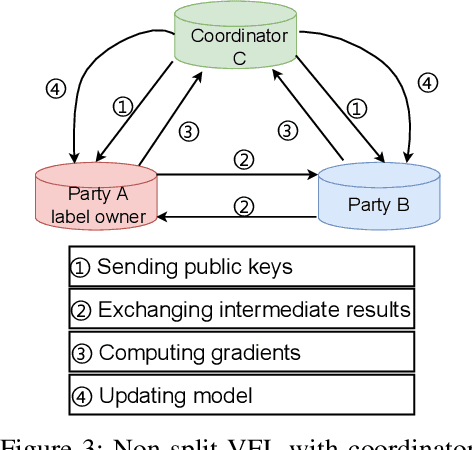

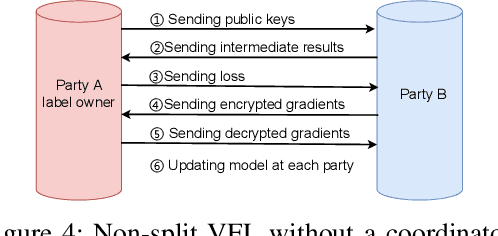

Vertical Federated Learning: Taxonomies, Threats, and Prospects

Feb 03, 2023

Abstract:Federated learning (FL) is the most popular distributed machine learning technique. FL allows machine-learning models to be trained without gathering raw data at a single point for processing. Instead, local models are trained with local data; the models are then shared and combined. This approach preserves data privacy, as locally trained models are shared instead of the raw data themselves. Broadly, FL can be divided into horizontal federated learning (HFL) and vertical federated learning (VFL). For the former, different parties hold different samples over the same set of features; for the latter, different parties hold different feature data belonging to the same set of samples. In a number of practical scenarios, VFL is more relevant than HFL, as different companies (e.g., bank and retailer) hold different features (e.g., credit history and shopping history) for the same set of customers. Although VFL is an emerging area of research, it is not well-established compared to HFL. Besides, VFL-related studies are dispersed, and their connections are not intuitive. Thus, this survey aims to bring these VFL-related studies to one place. Firstly, we classify existing VFL structures and algorithms. Secondly, we present the threats to VFL from security and privacy perspectives. Thirdly, for the benefit of future researchers, we discuss the challenges and prospects of VFL in detail.
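The HFL/VFL distinction described above amounts to splitting one data matrix by rows versus by columns. The toy sketch below assumes a matrix with one row per customer and one column per feature; the party names and sizes are purely illustrative.

```python
import numpy as np

# Toy dataset: 6 customers (rows) x 4 features (columns); values are placeholders.
data = np.arange(24).reshape(6, 4)

# Horizontal FL: parties hold different samples over the same feature set (row split).
hfl_party_a, hfl_party_b = data[:3, :], data[3:, :]

# Vertical FL: parties hold different features of the same samples (column split),
# e.g. a bank with two columns and a retailer with the other two.
vfl_bank, vfl_retailer = data[:, :2], data[:, 2:]
```

In the horizontal case each party sees all four features for three customers; in the vertical case each party sees two features for all six customers.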

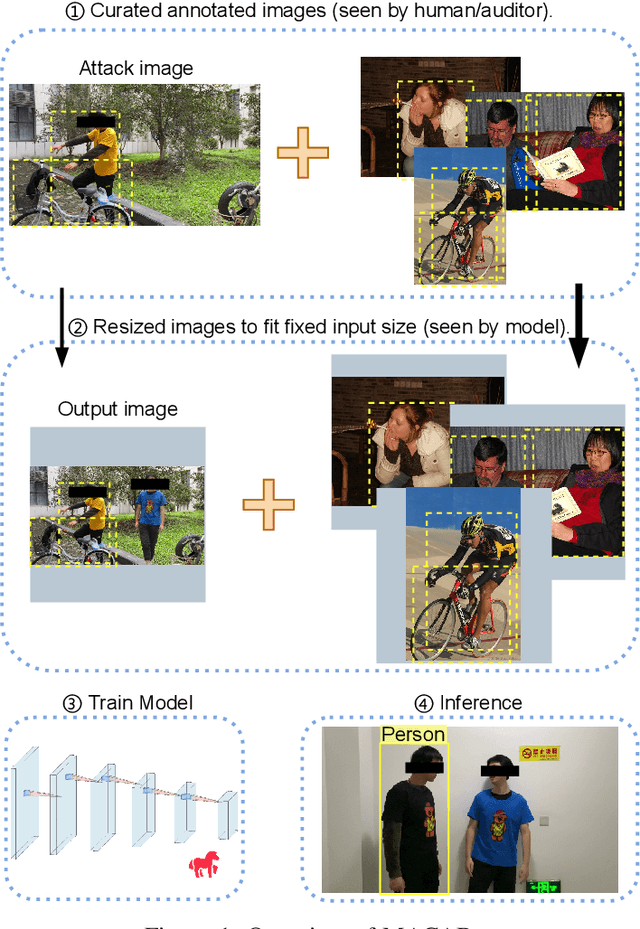

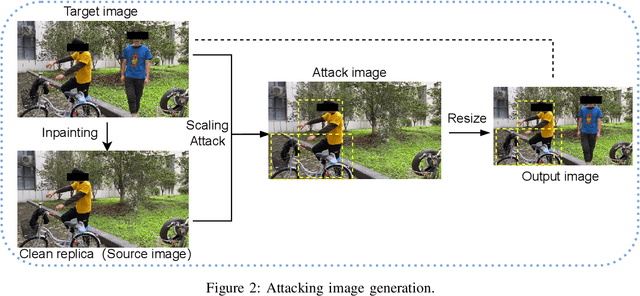

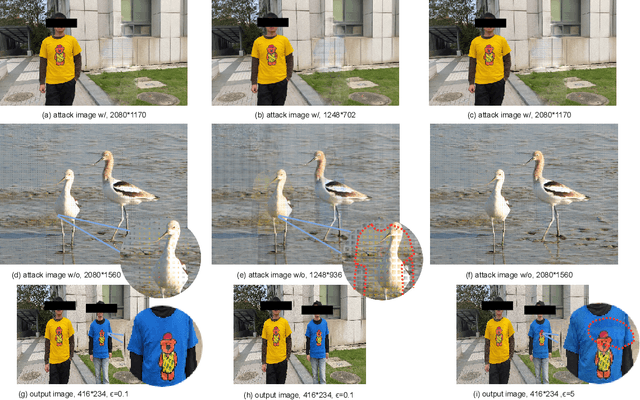

MACAB: Model-Agnostic Clean-Annotation Backdoor to Object Detection with Natural Trigger in Real-World

Sep 06, 2022

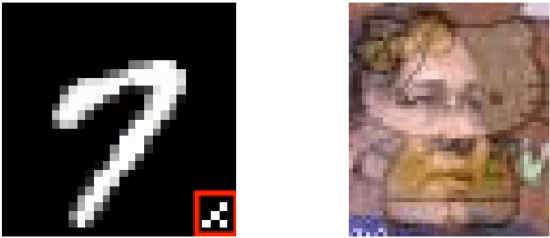

Abstract:Object detection is the foundation of various critical computer-vision tasks such as segmentation, object tracking, and event detection. To train an object detector with satisfactory accuracy, a large amount of data is required. However, due to the intensive workforce involved in annotating large datasets, such a data curation task is often outsourced to a third party or relies on volunteers. This work reveals severe vulnerabilities of such a data curation pipeline. We propose MACAB, which crafts clean-annotated images to stealthily implant the backdoor into the object detectors trained on them, even when the data curator can manually audit the images. We observe that the backdoor effects of both misclassification and cloaking are robustly achieved in the wild when the backdoor is activated with inconspicuous natural physical triggers. Backdooring non-classification object detection with clean annotation is challenging compared to backdooring existing image classification tasks with clean labels, owing to the complexity of having multiple objects within each frame, including victim and non-victim objects. The efficacy of MACAB is ensured by constructively (i) abusing the image-scaling function used by the deep learning framework, (ii) incorporating the proposed adversarial clean image replica technique, and (iii) combining poison data selection criteria given a constrained attacking budget. Extensive experiments demonstrate that MACAB exhibits more than 90% attack success rate under various real-world scenes. This includes both cloaking and misclassification backdoor effects, even when restricted to a small attack budget. The poisoned samples cannot be effectively identified by state-of-the-art detection techniques. The comprehensive video demo is at https://youtu.be/MA7L_LpXkp4, which is based on a poison rate of 0.14% for YOLOv4 cloaking backdoor and Faster R-CNN misclassification backdoor.

CASSOCK: Viable Backdoor Attacks against DNN in The Wall of Source-Specific Backdoor Defences

May 31, 2022

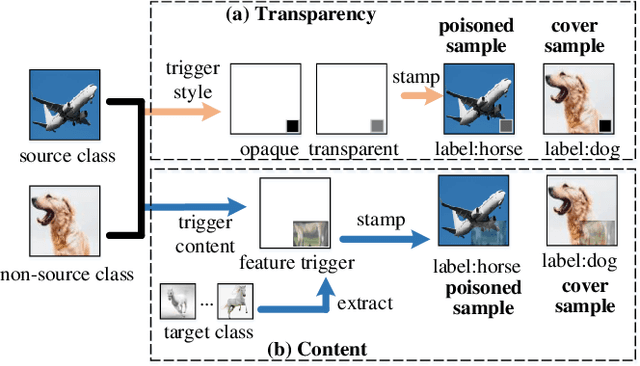

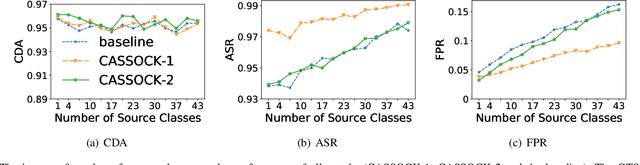

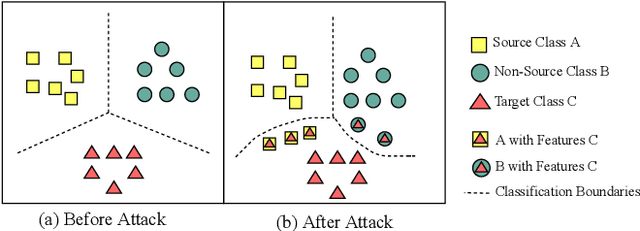

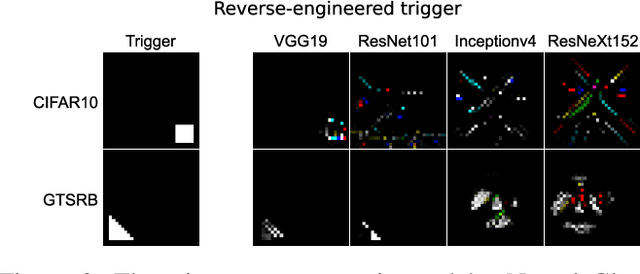

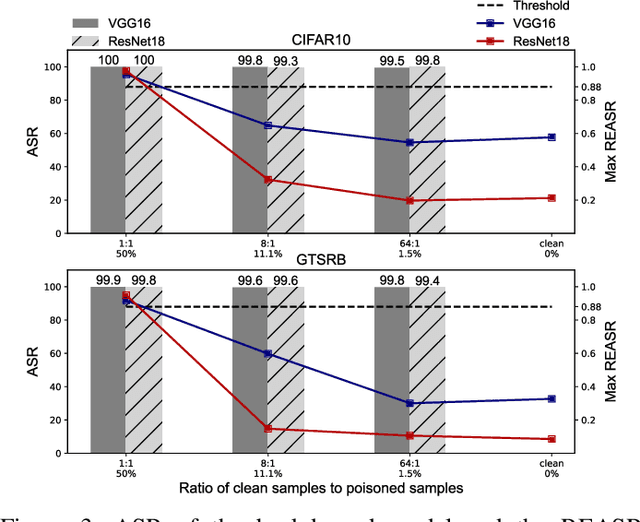

Abstract:Backdoor attacks have been a critical threat to deep neural networks (DNNs). However, most existing countermeasures focus on source-agnostic backdoor attacks (SABAs) and fail to defeat source-specific backdoor attacks (SSBAs). Compared to an SABA, an SSBA activates a backdoor only when an input from attacker-chosen class(es) is stamped with an attacker-specified trigger, making it stealthier and thus able to evade most existing backdoor mitigation. Nonetheless, existing SSBAs have trade-offs between attack success rate (ASR, a backdoor is activated by a trigger input from a source class as expected) and false positive rate (FPR, a backdoor is activated unexpectedly by a trigger input from a non-source class). Significantly, they can still be effectively detected by the state-of-the-art (SOTA) countermeasures targeting SSBAs. This work overcomes the efficiency and effectiveness deficiencies of existing SSBAs, thus bypassing the SOTA defences. The key insight is to construct desired poisoned and cover data during backdoor training by characterising SSBAs in depth. Both kinds of data are samples with triggers: the cover data comes from non-source class(es) and holds ground-truth labels, while the poisoned data comes from source class(es) and holds target labels. Therefore, two cover/poisoned data enhancements are developed from trigger style and content, respectively, coined CASSOCK. First, we leverage trigger patterns with discrepant transparency to craft cover/poisoned data, enforcing triggers with heterogeneous sensitivity on different classes. The second enhancement chooses the target class features as triggers to craft these samples, entangling trigger features with the target class heavily. Compared with existing SSBAs, CASSOCK-based attacks have higher ASR and low FPR on four popular tasks: MNIST, CIFAR10, GTSRB, and LFW. More importantly, CASSOCK has effectively evaded three defences (SCAn, Februus and extended Neural Cleanse) that already defeat existing SSBAs effectively.
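The ASR and FPR definitions given in the CASSOCK abstract can be computed as follows. This is a sketch under stated assumptions: `predictions` are model outputs on trigger-stamped inputs, `true_classes` are the original classes of those inputs, and the function name `asr_and_fpr` is hypothetical. It is not the paper's evaluation code.

```python
def asr_and_fpr(predictions, true_classes, source_classes, target_class):
    """Return (ASR, FPR) for predictions made on trigger-stamped inputs."""
    source_hits = source_total = other_hits = other_total = 0
    for predicted, true_class in zip(predictions, true_classes):
        hit = predicted == target_class  # the backdoor fired on this input
        if true_class in source_classes:
            source_total += 1
            source_hits += hit   # expected activation, counts toward ASR
        else:
            other_total += 1
            other_hits += hit    # unexpected activation, counts toward FPR
    if source_total == 0 or other_total == 0:
        raise ValueError("need trigger inputs from both source and non-source classes")
    return source_hits / source_total, other_hits / other_total
```

A source-specific attack aims for an ASR close to 1 and an FPR close to 0 at the same time, which is the trade-off the abstract refers to.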

Towards A Critical Evaluation of Robustness for Deep Learning Backdoor Countermeasures

Apr 13, 2022

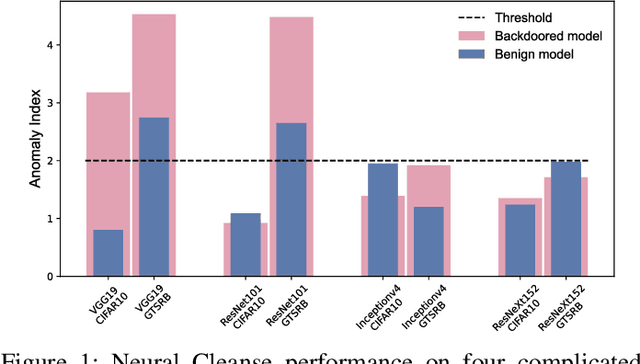

Abstract:Since Deep Learning (DL) backdoor attacks have been revealed as one of the most insidious adversarial attacks, a number of countermeasures have been developed with certain assumptions defined in their respective threat models. However, the robustness of these countermeasures is inadvertently ignored, which can introduce severe consequences, e.g., a countermeasure can be misused and result in a false implication of backdoor detection. For the first time, we critically examine the robustness of existing backdoor countermeasures with an initial focus on three influential model-inspection countermeasures: Neural Cleanse (S&P'19), ABS (CCS'19), and MNTD (S&P'21). Although the three countermeasures claim that they work well under their respective threat models, they have inherent unexplored non-robust cases depending on factors such as given tasks, model architectures, datasets, and defense hyper-parameters, which are not even rooted in delicate adaptive attacks. We demonstrate how to trivially bypass them, in line with their respective threat models, by simply varying the aforementioned factors. In particular, for each defense, formal proofs or empirical studies are used to reveal two non-robust cases where it is not as robust as it claims or expects, especially the recent MNTD. This work highlights the necessity of thoroughly evaluating the robustness of backdoor countermeasures to avoid their misleading security implications in unknown non-robust cases.

Sufficient Reasons for A Zero-Day Intrusion Detection Artificial Immune System

Apr 05, 2022

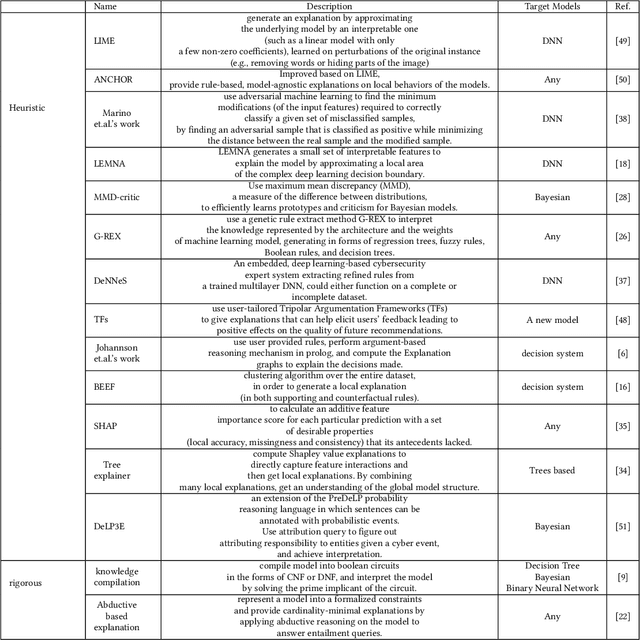

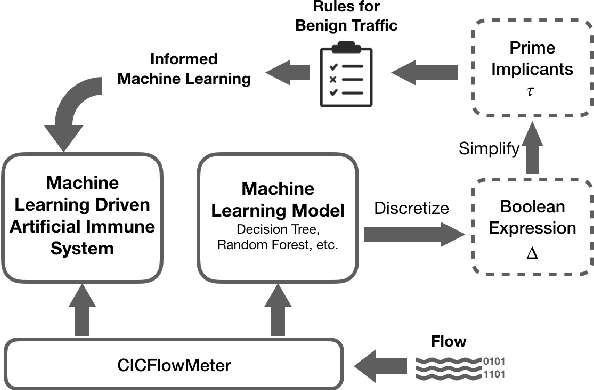

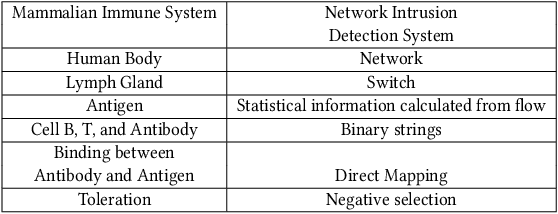

Abstract:The Internet is the most complex machine humankind has ever built, and defending it from intrusions is even more complex. As new intrusions keep increasing, intrusion detection relies more and more on Artificial Intelligence. Interpretability and transparency of the machine learning model are the foundation of trust in AI-driven intrusion detection results. Current interpretable Artificial Intelligence techniques in intrusion detection are heuristic, and are neither accurate nor sufficient. This paper proposes a rigorous, interpretable, Artificial Intelligence-driven intrusion detection approach based on the artificial immune system. The rigorous interpretation calculation process for a decision tree model is presented in detail. Prime implicant explanations for benign traffic flows are given in detail as rules for negative selection in the cyber immune system. Experiments are carried out on real-life traffic.
