Andreas Kugi

SafeFlowMPC: Predictive and Safe Trajectory Planning for Robot Manipulators with Learning-based Policies

Feb 17, 2026

Abstract: The emerging integration of robots into everyday life brings several major challenges. Compared to classical industrial applications, more flexibility is needed in combination with real-time reactivity. Learning-based methods can train powerful policies based on demonstrated trajectories, such that the robot generalizes a task to similar situations. However, these black-box models lack interpretability and rigorous safety guarantees. Optimization-based methods provide these guarantees but lack the required flexibility and generalization capabilities. This work proposes SafeFlowMPC, a combination of flow matching and online optimization to combine the strengths of learning and optimization. This method guarantees safety at all times and is designed to meet the demands of real-time execution by using a suboptimal model-predictive control formulation. SafeFlowMPC achieves strong performance in three real-world experiments on a KUKA 7-DoF manipulator, namely two grasping experiments and a dynamic human-robot object handover experiment. A video of the experiments is available at http://www.acin.tuwien.ac.at/42d6. The code is available at https://github.com/TU-Wien-ACIN-CDS/SafeFlowMPC.

Incremental Language Understanding for Online Motion Planning of Robot Manipulators

Aug 08, 2025

Abstract: Human-robot interaction requires robots to process language incrementally, adapting their actions in real time based on evolving speech input. Existing approaches to language-guided robot motion planning typically assume fully specified instructions, resulting in inefficient stop-and-replan behavior when corrections or clarifications occur. In this paper, we introduce a novel reasoning-based incremental parser which integrates an online motion planning algorithm within the cognitive architecture. Our approach enables continuous adaptation to dynamic linguistic input, allowing robots to update motion plans without restarting execution. The incremental parser maintains multiple candidate parses, leveraging reasoning mechanisms to resolve ambiguities and revise interpretations when needed. By combining symbolic reasoning with online motion planning, our system achieves greater flexibility in handling speech corrections and dynamically changing constraints. We evaluate our framework in real-world human-robot interaction scenarios, demonstrating online adaptations of goal poses, constraints, or task objectives. Our results highlight the advantages of integrating incremental language understanding with real-time motion planning for natural and fluid human-robot collaboration. The experiments are demonstrated in the accompanying video at www.acin.tuwien.ac.at/42d5.

ADAPT: An Autonomous Forklift for Construction Site Operation

Mar 18, 2025

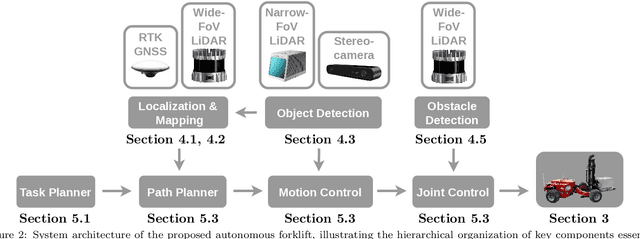

Abstract: Efficient material logistics play a critical role in controlling costs and schedules in the construction industry. However, manual material handling remains prone to inefficiencies, delays, and safety risks. Autonomous forklifts offer a promising solution to streamline on-site logistics, reducing reliance on human operators and mitigating labor shortages. This paper presents the development and evaluation of the Autonomous Dynamic All-terrain Pallet Transporter (ADAPT), a fully autonomous off-road forklift designed for construction environments. Unlike structured warehouse settings, construction sites pose significant challenges, including dynamic obstacles, unstructured terrain, and varying weather conditions. To address these challenges, our system integrates AI-driven perception techniques with traditional approaches for decision making, planning, and control, enabling reliable operation in complex environments. We validate the system through extensive real-world testing, comparing its long-term performance against an experienced human operator across various weather conditions. We also provide a comprehensive analysis of challenges and key lessons learned, contributing to the advancement of autonomous heavy machinery. Our findings demonstrate that autonomous outdoor forklifts can operate near human-level performance, offering a viable path toward safer and more efficient construction logistics.

BoundPlanner: A convex-set-based approach to bounded manipulator trajectory planning

Feb 18, 2025

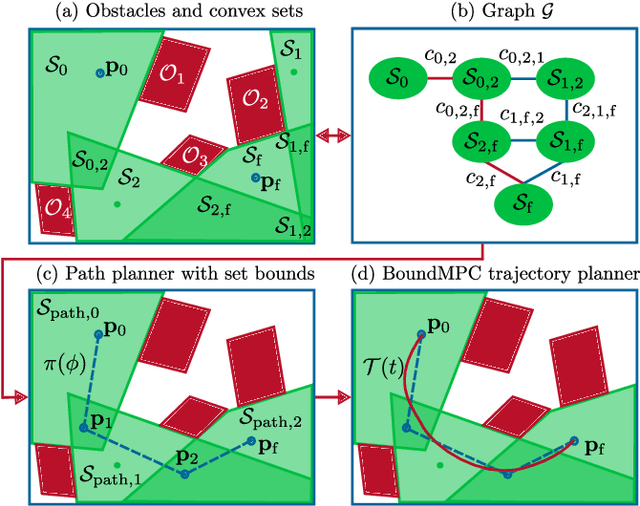

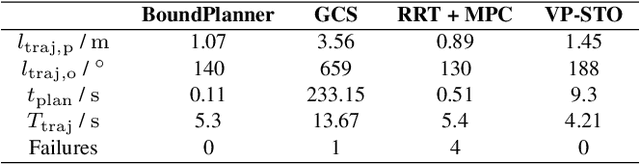

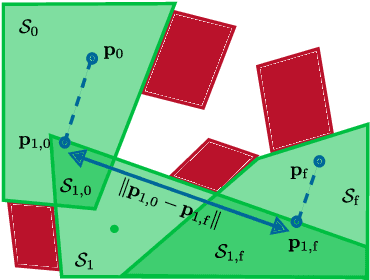

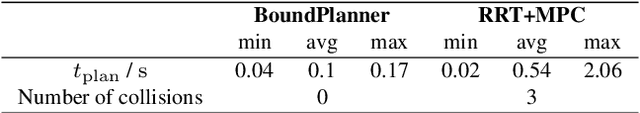

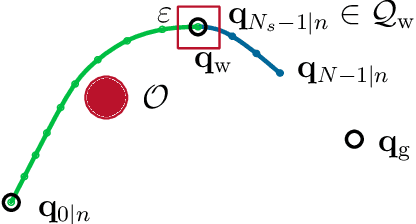

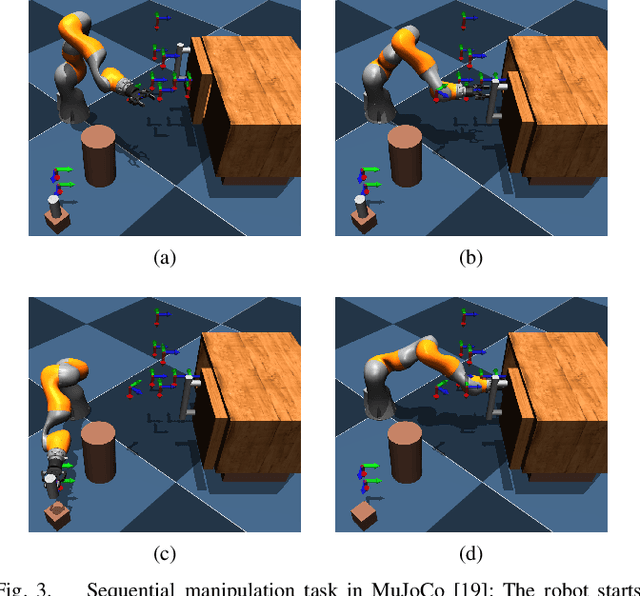

Abstract: Online trajectory planning enables robot manipulators to react quickly to changing environments or tasks. Many robot trajectory planners exist for known environments but are often too slow for online computations. Current methods in online trajectory planning do not find suitable trajectories in challenging scenarios that respect the limits of the robot and account for collisions. This work proposes a trajectory planning framework consisting of the novel Cartesian path planner based on convex sets, called BoundPlanner, and the online trajectory planner BoundMPC. BoundPlanner explores and maps the collision-free space using convex sets to compute a reference path with bounds. BoundMPC is extended in this work to handle convex sets for path deviations, which allows the robot to optimally follow the path within the bounds while accounting for the robot's kinematics. Collisions of the robot's kinematic chain are considered by a novel convex-set-based collision avoidance formulation that is independent of the number of obstacles. Simulations and experiments with a 7-DoF manipulator show the performance of the proposed planner compared to state-of-the-art methods. The source code is available at github.com/Thieso/BoundPlanner and videos of the experiments can be found at www.acin.tuwien.ac.at/42d4.

Towards Autonomous Wood-Log Grasping with a Forestry Crane: Simulator and Benchmarking

Feb 03, 2025

Abstract: Forestry machines operated in forest production environments face challenges when performing manipulation tasks, especially regarding the complicated dynamics of underactuated crane systems and the heavy weight of logs to be grasped. This study investigates the feasibility of using reinforcement learning for forestry crane manipulators in grasping and lifting heavy wood logs autonomously. We first build a simulator using the MuJoCo physics engine to create realistic scenarios, including modeling a forestry crane with 8 degrees of freedom from CAD data and wood logs of different sizes. We further implement a velocity controller for autonomous log grasping with deep reinforcement learning using a curriculum strategy. Utilizing our new simulator, the proposed control strategy exhibits a success rate of 96% when grasping logs of different diameters and under random initial configurations of the forestry crane. In addition, reward functions and reinforcement learning baselines are implemented to provide an open-source benchmark for the community in large-scale manipulation tasks. A video with several demonstrations can be seen at https://www.acin.tuwien.ac.at/en/d18a/

Combining Federated Learning and Control: A Survey

Jul 12, 2024

Abstract: This survey provides an overview of combining Federated Learning (FL) and control to enhance adaptability, scalability, generalization, and privacy in (nonlinear) control applications. Traditional control methods rely on controller design models, but real-world scenarios often require online model retuning or learning. FL offers a distributed approach to model training, enabling collaborative learning across distributed devices while preserving data privacy. By keeping data localized, FL mitigates concerns regarding privacy and security while reducing network bandwidth requirements for communication. This survey summarizes the state-of-the-art concepts and ideas of combining FL and control. The methodological benefits are further discussed, culminating in a detailed overview of expected applications, from dynamical system modeling, through controller design with a focus on adaptive control, to knowledge transfer in multi-agent decision-making systems.

Language-Driven Closed-Loop Grasping with Model-Predictive Trajectory Replanning

Jun 14, 2024

Abstract: Integrating a vision module into a closed-loop control system for a seamless movement of a robot in a manipulation task is challenging due to the inconsistent update rates between the utilized modules. This task is even more difficult in a dynamic environment, e.g., when objects are moving. This paper presents a modular zero-shot framework for language-driven manipulation of (dynamic) objects through a closed-loop control system with real-time trajectory replanning and an online 6D object pose localization. We segment an object within 0.5 s by leveraging a vision language model via language commands. Then, guided by natural language commands, a closed-loop system, including a unified pose estimation and tracking and online trajectory planning, is utilized to continuously track this object and compute the optimal trajectory in real time. Our proposed zero-shot framework provides a smooth trajectory that avoids jerky movements and ensures the robot can grasp a non-stationary object. Experimental results demonstrate the real-time capability of the proposed zero-shot modular framework for the trajectory optimization module to accurately and efficiently grasp moving objects, i.e., up to 30 Hz update rates for the online 6D pose localization module and 10 Hz update rates for the receding-horizon trajectory optimization. These advantages highlight the modular framework's potential applications in robotics and human-robot interaction; see the video at https://www.acin.tuwien.ac.at/en/6e64/.

Model Predictive Trajectory Planning for Human-Robot Handovers

Apr 11, 2024

Abstract: This work develops a novel trajectory planner for human-robot handovers. The handover requirements can naturally be handled by a path-following-based model predictive controller, where the path progress serves as a progress measure of the handover. Moreover, the deviations from the path are used to follow human motion by adapting the path deviation bounds with a handover location prediction. A Gaussian process regression model, which is trained on known handover trajectories, is employed for this prediction. Experiments with a collaborative 7-DoF robotic manipulator show the effectiveness and versatility of the proposed approach.

* 8 pages, 6 figures, Proceedings available under https://www.vdi-mechatroniktagung.rwth-aachen.de/global/show_document.asp?id=aaaaaaaacjcayqj&download=1

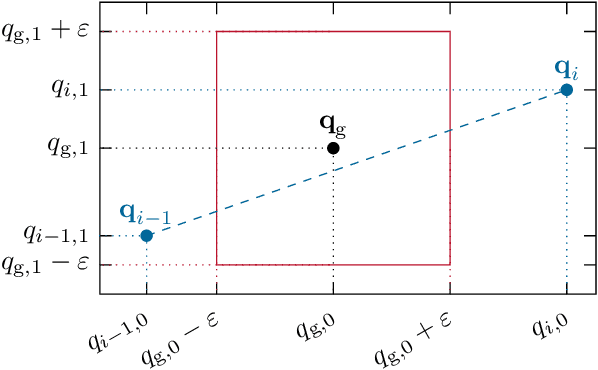

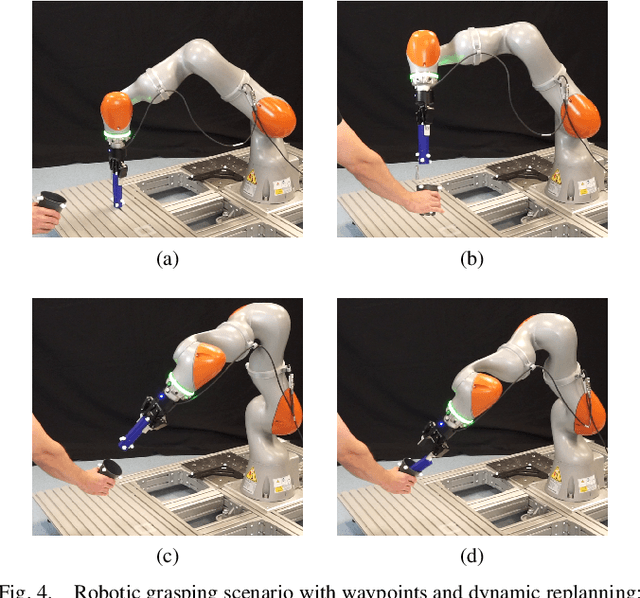

Model Predictive Trajectory Optimization With Dynamically Changing Waypoints for Serial Manipulators

Feb 07, 2024

Abstract: Systematically including dynamically changing waypoints as desired discrete actions, for instance, resulting from superordinate task planning, has been challenging for online model predictive trajectory optimization with short planning horizons. This paper presents a novel waypoint model predictive control (wMPC) concept for online replanning tasks. The main idea is to split the planning horizon at the waypoint when it becomes reachable within the current planning horizon and reduce the horizon length towards the waypoints and goal points. This approach keeps the computational load low and provides flexibility in adapting to changing conditions in real time. The presented approach achieves competitive path lengths and trajectory durations compared to (global) offline RRT-type planners in a multi-waypoint scenario. Moreover, the ability of wMPC to dynamically replan tasks online is experimentally demonstrated on a KUKA LBR iiwa 14 R820 robot in a dynamic pick-and-place scenario.

BoundMPC: Cartesian Trajectory Planning with Error Bounds based on Model Predictive Control in the Joint Space

Jan 10, 2024

Abstract: This work presents a novel online model-predictive trajectory planner for robotic manipulators called BoundMPC. This planner allows the collision-free following of Cartesian reference paths in the end-effector's position and orientation, including via-points, within desired asymmetric bounds of the orthogonal path error. The path parameter synchronizes the position and orientation reference paths. The decomposition of the path error into the tangential direction, describing the path progress, and the orthogonal direction, which represents the deviation from the path, is well known for the position from the path-following control in the literature. This paper extends this idea to the orientation by utilizing the Lie theory of rotations. Moreover, the orthogonal error plane is further decomposed into basis directions to define asymmetric Cartesian error bounds easily. Using piecewise linear position and orientation reference paths with via-points is computationally very efficient and allows replanning the pose trajectories during the robot's motion. This feature makes it possible to use this planner for dynamically changing environments and varying goals. The flexibility and performance of BoundMPC are experimentally demonstrated by two scenarios on a 7-DoF KUKA LBR iiwa 14 R820 robot. The first scenario shows the transfer of a larger object from a start to a goal pose through a confined space where the object must be tilted. The second scenario deals with grasping an object from a table where the grasping point changes during the robot's motion, and collisions with other obstacles in the scene must be avoided.
