Adam Loch

Real-time 6-DoF Pose Estimation by an Event-based Camera using Active LED Markers

Oct 25, 2023

Abstract:Real-time applications for autonomous operations depend largely on fast and robust vision-based localization systems. Since image processing tasks require processing large amounts of data, the computational resources often limit the performance of other processes. To overcome this limitation, traditional marker-based localization systems are widely used since they are easy to integrate and achieve reliable accuracy. However, classical marker-based localization systems significantly depend on standard cameras with low frame rates, which often lack accuracy due to motion blur. In contrast, event-based cameras provide high temporal resolution and a high dynamic range, which can be utilized for fast localization tasks, even under challenging visual conditions. This paper proposes a simple but effective event-based pose estimation system using active LED markers (ALM) for fast and accurate pose estimation. The proposed algorithm is able to operate in real time with a latency below \SI{0.5}{\milli\second} while maintaining output rates of \SI{3}{\kilo \hertz}. Experimental results in static and dynamic scenarios are presented to demonstrate the performance of the proposed approach in terms of computational speed and absolute accuracy, using the OptiTrack system as the basis for measurement.

Event-Based high-speed low-latency fiducial marker tracking

Oct 12, 2021

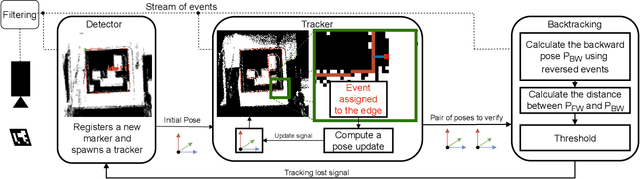

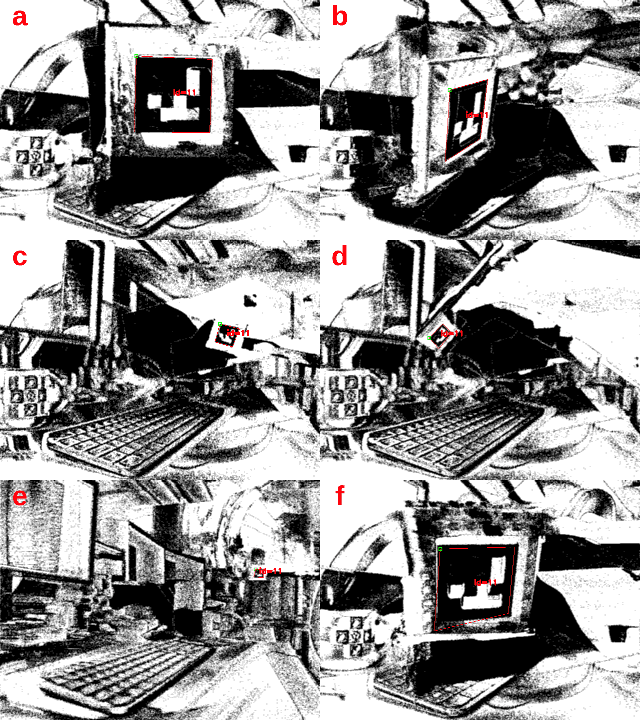

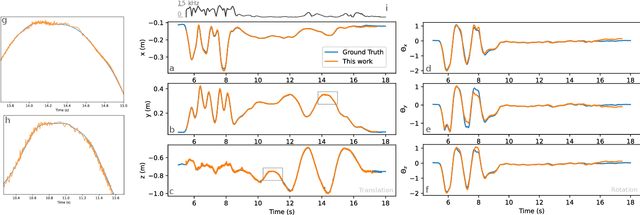

Abstract:Motion and dynamic environments, especially under challenging lighting conditions, are still an open issue for robust robotic applications. In this paper, we propose an end-to-end pipeline for real-time, low latency, 6 degrees-of-freedom pose estimation of fiducial markers. Instead of achieving a pose estimation through a conventional frame-based approach, we employ the high-speed abilities of event-based sensors to directly refine the spatial transformation, using consecutive events. Furthermore, we introduce a novel two-way verification process for detecting tracking errors by backtracking the estimated pose, allowing us to evaluate the quality of our tracking. This approach allows us to achieve pose estimation at a rate up to 156~kHz, while only relying on CPU resources. The average end-to-end latency of our method is 3~ms. Experimental results demonstrate outstanding potential for robotic tasks, such as visual servoing in fast action-perception loops.

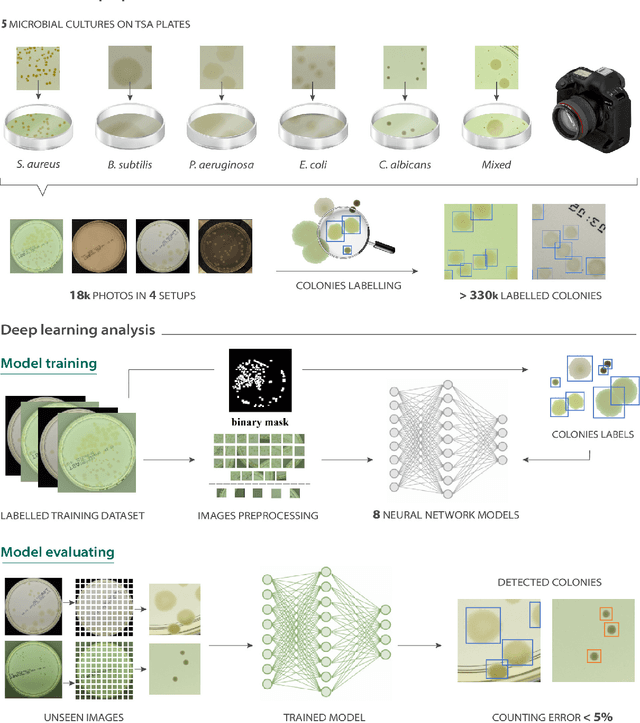

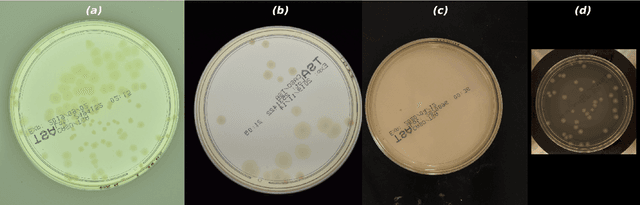

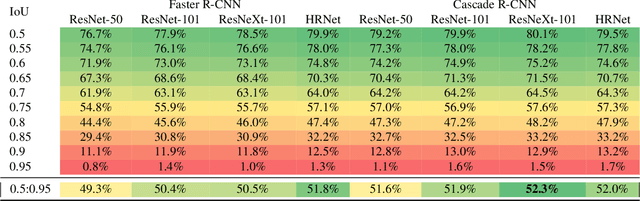

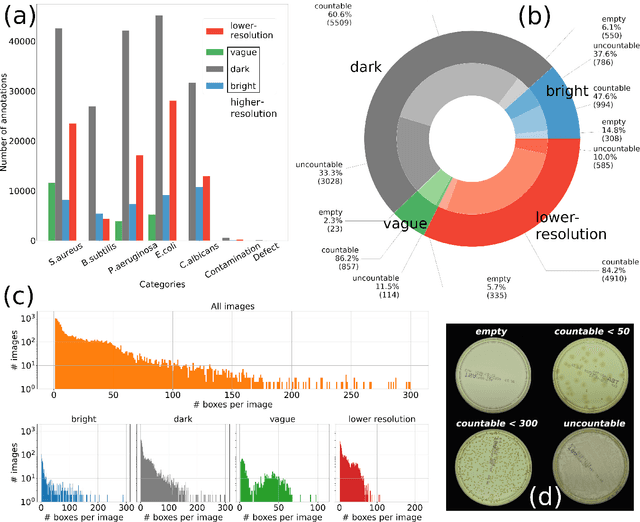

AGAR a microbial colony dataset for deep learning detection

Aug 03, 2021

Abstract:The Annotated Germs for Automated Recognition (AGAR) dataset is an image database of microbial colonies cultured on agar plates. It contains 18000 photos of five different microorganisms as single or mixed cultures, taken under diverse lighting conditions with two different cameras. All the images are classified into "countable", "uncountable", and "empty", with the "countable" class labeled by microbiologists with colony location and species identification (336442 colonies in total). This study describes the dataset itself and the process of its development. In the second part, the performance of selected deep neural network architectures for object detection, namely Faster R-CNN and Cascade R-CNN, was evaluated on the AGAR dataset. The results confirmed the great potential of deep learning methods to automate the process of microbe localization and classification based on Petri dish photos. Moreover, AGAR is the first publicly available dataset of this kind and size and will facilitate the future development of machine learning models. The data used in these studies can be found at https://agar.neurosys.com/.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge