Aadirupa Saha

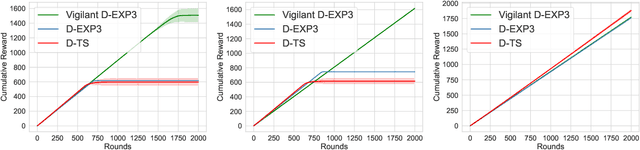

Tracking the Best Expert Privately

Mar 12, 2025

Abstract: We design differentially private algorithms for the problem of prediction with expert advice under dynamic regret, also known as tracking the best expert. Our work addresses three natural types of adversaries, stochastic with shifting distributions, oblivious, and adaptive, and designs algorithms with sub-linear regret for all three cases. In particular, under a shifting stochastic adversary where the distribution may shift $S$ times, we provide an $\epsilon$-differentially private algorithm whose expected dynamic regret is at most $O\left( \sqrt{S T \log (NT)} + \frac{S \log (NT)}{\epsilon}\right)$, where $T$ and $N$ are the time horizon and number of experts, respectively. For oblivious adversaries, we give a reduction from dynamic regret minimization to static regret minimization, resulting in an upper bound of $O\left(\sqrt{S T \log(NT)} + \frac{S T^{1/3}\log(T/\delta) \log(NT)}{\epsilon^{2/3}}\right)$ on the expected dynamic regret, where $S$ now denotes the allowable number of switches of the best expert. Finally, similar to static regret, we establish a fundamental separation between oblivious and adaptive adversaries for the dynamic setting: while our algorithms show that sub-linear regret is achievable for oblivious adversaries in the high-privacy regime $\epsilon \le \sqrt{S/T}$, we show that any $(\epsilon, \delta)$-differentially private algorithm must suffer linear dynamic regret under adaptive adversaries for $\epsilon \le \sqrt{S/T}$. To complement this lower bound, we give an $\epsilon$-differentially private algorithm that attains sub-linear dynamic regret under adaptive adversaries whenever $\epsilon \gg \sqrt{S/T}$.

Hybrid Preference Optimization for Alignment: Provably Faster Convergence Rates by Combining Offline Preferences with Online Exploration

Dec 13, 2024

Abstract: Reinforcement Learning from Human Feedback (RLHF) is currently the leading approach for aligning large language models with human preferences. Typically, these models rely on extensive offline preference datasets for training. However, offline algorithms impose strict concentrability requirements, which are often difficult to satisfy. On the other hand, while online algorithms can avoid the concentrability issue, pure online exploration could be expensive due to the active preference query cost and real-time implementation overhead. In this paper, we propose a novel approach, Hybrid Preference Optimization (HPO), which combines online exploration with existing offline preferences, relaxing the stringent concentrability conditions for offline exploration and significantly improving the sample efficiency of its online counterpart. We give the first provably optimal theoretical bound for Hybrid RLHF with preference feedback, providing sample complexity bounds for policy optimization with matching lower bounds. Our results yield improved sample efficiency of hybrid RLHF over pure offline and online exploration.

Strategic Linear Contextual Bandits

Jun 01, 2024

Abstract: Motivated by the phenomenon of strategic agents gaming a recommender system to maximize the number of times they are recommended to users, we study a strategic variant of the linear contextual bandit problem, where the arms can strategically misreport their privately observed contexts to the learner. We treat the algorithm design problem as one of mechanism design under uncertainty and propose the Optimistic Grim Trigger Mechanism (OptGTM) that incentivizes the agents (i.e., arms) to report their contexts truthfully while simultaneously minimizing regret. We also show that failing to account for the strategic nature of the agents results in linear regret. However, a trade-off between mechanism design and regret minimization appears to be unavoidable. More broadly, this work aims to provide insight into the intersection of online learning and mechanism design.

DP-Dueling: Learning from Preference Feedback without Compromising User Privacy

Mar 22, 2024

Abstract: We consider the well-studied dueling bandit problem, where a learner aims to identify near-optimal actions using pairwise comparisons, under the constraint of differential privacy. We consider a general class of utility-based preference matrices for large (potentially unbounded) decision spaces and give the first differentially private dueling bandit algorithm for active learning with user preferences. Our proposed algorithms are computationally efficient with near-optimal performance, both in terms of the private and non-private regret bound. More precisely, we show that when the decision space is of finite size $K$, our proposed algorithm yields order optimal $O\Big(\sum_{i = 2}^K\log\frac{KT}{\Delta_i} + \frac{K}{\epsilon}\Big)$ regret bound for pure $\epsilon$-DP, where $\Delta_i$ denotes the suboptimality gap of the $i$-th arm. We also present a matching lower bound analysis which proves the optimality of our algorithms. Finally, we extend our results to any general decision space in $d$-dimensions with potentially infinite arms and design an $\epsilon$-DP algorithm with regret $\tilde{O} \left( \frac{d^6}{\kappa \epsilon } + \frac{ d\sqrt{T }}{\kappa} \right)$, providing privacy for free when $T \gg d$.

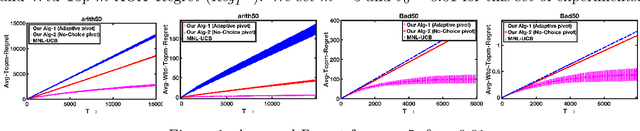

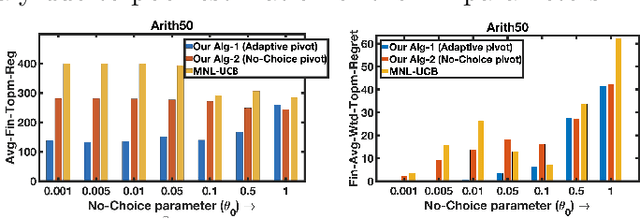

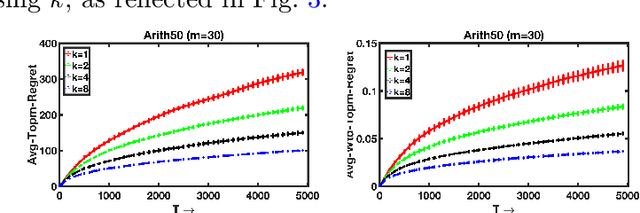

Stop Relying on No-Choice and Do not Repeat the Moves: Optimal, Efficient and Practical Algorithms for Assortment Optimization

Feb 29, 2024

Abstract: We address the problem of active online assortment optimization with preference feedback, a framework for modeling user choices and subsetwise utility maximization. The framework is useful in various real-world applications, including ad placement, online retail, recommender systems, and fine-tuning language models, among many others. Although the problem has been studied in the past, it lacks an intuitive and practical solution approach that simultaneously offers an efficient algorithm and an optimal regret guarantee. For example, popularly used assortment selection algorithms often require the presence of a `strong reference' which is always included in the choice sets; further, they are designed to offer the same assortments repeatedly until the reference item gets selected. All such requirements are quite unrealistic for practical applications. In this paper, we design efficient algorithms for the problem of regret minimization in assortment selection with \emph{Plackett Luce} (PL) based user choices. We design a novel concentration guarantee for estimating the score parameters of the PL model using `\emph{Pairwise Rank-Breaking}', which builds the foundation of our proposed algorithms. Moreover, our methods are practical, provably optimal, and devoid of the aforementioned limitations of the existing methods. Empirical evaluations corroborate our findings and show that our methods outperform the existing baselines.
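As a rough illustration of the pairwise rank-breaking idea mentioned in this abstract, the sketch below turns each subset-wise winner observation into (winner, loser) pairs and uses the resulting counts to estimate pairwise win probabilities. It is a minimal sketch under stated assumptions, not the paper's estimator: the function names (`rank_break`, `sample_pl_winner`, `estimate_pairwise`) and the simple count-ratio estimate are hypothetical choices made for illustration only.

```python
import random


def rank_break(subset, winner):
    """Turn one subset-wise winner observation into (winner, loser) pairs."""
    return [(winner, item) for item in subset if item != winner]


def sample_pl_winner(subset, scores, rng):
    """Draw a winner from `subset` with probability proportional to its PL score."""
    weights = [scores[item] for item in subset]
    return rng.choices(subset, weights=weights, k=1)[0]


def estimate_pairwise(observations):
    """Estimate P(i beats j) from rank-broken counts.

    `observations` is a list of (subset, winner) tuples. Pairs that were
    never compared are simply absent from the returned dictionary.
    """
    wins = {}
    for subset, winner in observations:
        for w, l in rank_break(subset, winner):
            wins[(w, l)] = wins.get((w, l), 0) + 1

    estimates = {}
    for (i, j), count in wins.items():
        total = count + wins.get((j, i), 0)
        estimates[(i, j)] = count / total
        estimates[(j, i)] = 1.0 - count / total
    return estimates
```

Under a PL model with scores $\theta$, the count ratio for a pair $(i, j)$ targets $\theta_i / (\theta_i + \theta_j)$, which is why rank-broken pairs carry information about the score parameters without requiring a reference item.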

Think Before You Duel: Understanding Complexities of Preference Learning under Constrained Resources

Dec 28, 2023

Abstract: We consider the problem of reward maximization in the dueling bandit setup along with constraints on resource consumption. As in classic dueling bandits, at each round the learner has to choose a pair of items from a set of $K$ items and observes relative feedback for the current pair. Additionally, for both items, the learner also observes a vector of resource consumptions. The objective of the learner is to maximize the cumulative reward, while ensuring that the total consumption of any resource is within the allocated budget. We show that due to the relative nature of the feedback, the problem is more difficult than its bandit counterpart and that without further assumptions the problem is not learnable from a regret minimization perspective. Thereafter, by exploiting assumptions on the available budget, we provide an EXP3-based dueling algorithm that also considers the associated consumptions and show that it achieves an $\tilde{\mathcal{O}}\left({\frac{OPT^{(b)}}{B}}K^{1/3}T^{2/3}\right)$ regret, where $OPT^{(b)}$ is the optimal value and $B$ is the available budget. Finally, we provide numerical simulations to demonstrate the efficacy of our proposed method.

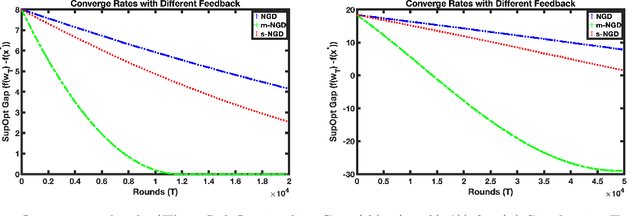

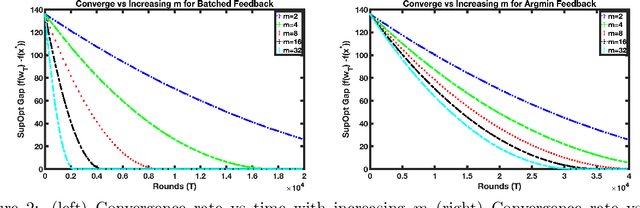

Faster Convergence with Multiway Preferences

Dec 19, 2023

Abstract: We address the problem of convex optimization with preference feedback, where the goal is to minimize a convex function given a weaker form of comparison queries. Each query consists of two points and the dueling feedback returns a (noisy) single-bit binary comparison of the function values of the two queried points. Here we consider the sign-function-based comparison feedback model and analyze the convergence rates with batched and multiway (argmin of a set of queried points) comparisons. Our main goal is to understand the improved convergence rates owing to parallelization in sign-feedback-based optimization problems. Our work is the first to study the problem of convex optimization with multiway preferences and analyze the optimal convergence rates. Our first contribution lies in designing efficient algorithms with a convergence rate of $\smash{\widetilde O}(\frac{d}{\min\{m,d\} \epsilon})$ for $m$-batched preference feedback, where the learner can query $m$ pairs in parallel. We next study an $m$-multiway comparison (`battling') feedback, where the learner sees the argmin feedback of an $m$-subset of queried points, and show a convergence rate of $\smash{\widetilde O}(\frac{d}{ \min\{\log m,d\}\epsilon })$. We show further improved convergence rates with an additional assumption of strong convexity. Finally, we also study the convergence lower bounds for batched preferences and multiway feedback optimization, showing the optimality of our convergence rates w.r.t. $m$.
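To make the three feedback models in this abstract concrete, the sketch below implements a single sign comparison, an $m$-batched version, and an $m$-multiway argmin oracle. It is a noiseless sketch under stated assumptions: the abstract allows noisy feedback, and the sign convention and function names (`sign_feedback`, `batched_feedback`, `multiway_feedback`) are hypothetical and chosen here for illustration.

```python
def sign_feedback(f, x, y):
    """Noiseless dueling feedback on a pair: +1 if f(x) > f(y), -1 if f(x) < f(y), 0 on a tie."""
    diff = f(x) - f(y)
    return (diff > 0) - (diff < 0)


def batched_feedback(f, pairs):
    """m-batched feedback: one sign comparison per queried pair."""
    return [sign_feedback(f, x, y) for x, y in pairs]


def multiway_feedback(f, points):
    """m-multiway ('battling') feedback: index of the queried point with the smallest value."""
    return min(range(len(points)), key=lambda i: f(points[i]))


def squared_norm(x):
    """Example convex objective, used only for illustration."""
    return sum(v * v for v in x)
```

In all three cases the learner never sees function values, only comparison outcomes; the batched oracle returns $m$ bits per round, while the multiway oracle returns a single index out of $m$.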

Federated Online and Bandit Convex Optimization

Nov 29, 2023

Abstract: We study the problems of distributed online and bandit convex optimization against an adaptive adversary. We aim to minimize the average regret on $M$ machines working in parallel over $T$ rounds with $R$ intermittent communications. Assuming the underlying cost functions are convex and can be generated adaptively, our results show that collaboration is not beneficial when the machines have access to the first-order gradient information at the queried points. This is in contrast to the case for stochastic functions, where each machine samples the cost functions from a fixed distribution. Furthermore, we delve into the more challenging setting of federated online optimization with bandit (zeroth-order) feedback, where the machines can only access values of the cost functions at the queried points. The key finding here is identifying the high-dimensional regime where collaboration is beneficial and may even lead to a linear speedup in the number of machines. We further illustrate our findings through federated adversarial linear bandits by developing novel distributed single and two-point feedback algorithms. Our work is the first attempt towards a systematic understanding of federated online optimization with limited feedback, and it attains tight regret bounds in the intermittent communication setting for both first and zeroth-order feedback. Our results thus bridge the gap between stochastic and adaptive settings in federated online optimization.

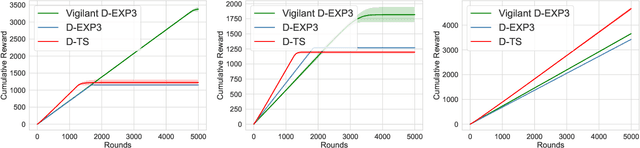

Bandits Meet Mechanism Design to Combat Clickbait in Online Recommendation

Nov 27, 2023

Abstract: We study a strategic variant of the multi-armed bandit problem, which we coin the strategic click-bandit. This model is motivated by applications in online recommendation where the choice of recommended items depends on both the click-through rates and the post-click rewards. As in classical bandits, rewards follow a fixed unknown distribution. However, we assume that the click-rate of each arm is chosen strategically by the arm (e.g., a host on Airbnb) in order to maximize the number of times it gets clicked. The algorithm designer knows neither the post-click rewards nor the arms' actions (i.e., strategically chosen click-rates) in advance, and must learn both values over time. To solve this problem, we design an incentive-aware learning algorithm, UCB-S, which achieves two goals simultaneously: (a) incentivizing desirable arm behavior under uncertainty; (b) minimizing regret by learning unknown parameters. We characterize all approximate Nash equilibria among arms under UCB-S and show a $\tilde{\mathcal{O}} (\sqrt{KT})$ regret bound uniformly in every equilibrium. We also show that incentive-unaware algorithms generally fail to achieve low regret in the strategic click-bandit. Finally, we support our theoretical results by simulations of strategic arm behavior which confirm the effectiveness and robustness of our proposed incentive design.

Dueling Optimization with a Monotone Adversary

Nov 18, 2023

Abstract: We introduce and study the problem of dueling optimization with a monotone adversary, which is a generalization of (noiseless) dueling convex optimization. The goal is to design an online algorithm to find a minimizer $\mathbf{x}^{*}$ for a function $f\colon X \to \mathbb{R}$, where $X \subseteq \mathbb{R}^d$. In each round, the algorithm submits a pair of guesses, i.e., $\mathbf{x}^{(1)}$ and $\mathbf{x}^{(2)}$, and the adversary responds with any point in the space that is at least as good as both guesses. The cost of each query is the suboptimality of the worse of the two guesses; i.e., ${\max} \left( f(\mathbf{x}^{(1)}), f(\mathbf{x}^{(2)}) \right) - f(\mathbf{x}^{*})$. The goal is to minimize the number of iterations required to find an $\varepsilon$-optimal point and to minimize the total cost (regret) of the guesses over many rounds. Our main result is an efficient randomized algorithm for several natural choices of the function $f$ and set $X$ that incurs cost $O(d)$ and iteration complexity $O(d\log(1/\varepsilon)^2)$. Moreover, our dependence on $d$ is asymptotically optimal, as we show examples in which any randomized algorithm for this problem must incur $\Omega(d)$ cost and iteration complexity.
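The per-round cost and the adversary's constraint described in this abstract can be written down directly. The sketch below is only an illustration of those two definitions, assuming a known minimizer for evaluation purposes; the function names (`query_cost`, `is_valid_response`) and the example objective are hypothetical and do not come from the paper.

```python
def query_cost(f, x1, x2, x_star):
    """Cost of one round: suboptimality of the worse of the two guesses."""
    return max(f(x1), f(x2)) - f(x_star)


def is_valid_response(f, response, x1, x2):
    """A monotone adversary may return any point at least as good as both guesses."""
    return f(response) <= min(f(x1), f(x2))


def squared_norm(x):
    """Example objective with minimizer at the origin, used only for illustration."""
    return sum(v * v for v in x)
```

For instance, with `squared_norm` and guesses `[1.0, 0.0]` and `[0.0, 2.0]`, the round costs 4.0, and the adversary may answer with `[0.5, 0.0]` but not with `[0.0, 1.5]`.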
