Ziyun Li

Foveated Instance Segmentation

Mar 27, 2025

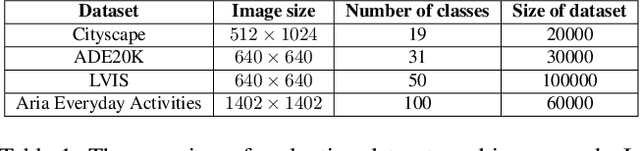

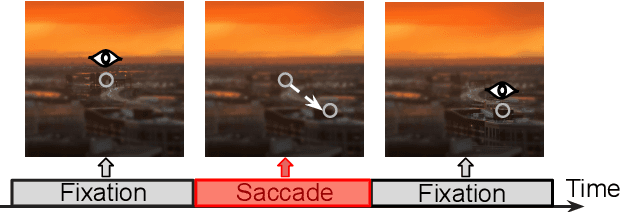

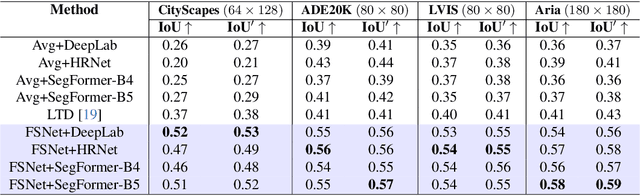

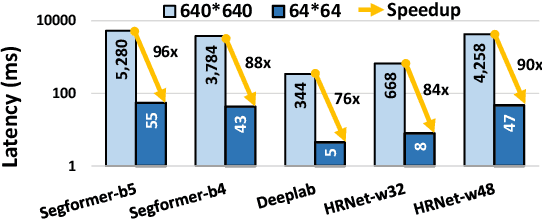

Abstract: Instance segmentation is essential for augmented reality and virtual reality (AR/VR) as it enables precise object recognition and interaction, enhancing the integration of virtual and real-world elements for an immersive experience. However, the high computational overhead of segmentation limits its application on resource-constrained AR/VR devices, causing large processing latency and degrading user experience. In contrast to conventional scenarios, AR/VR users typically focus on only a few regions within their field of view before shifting perspective, allowing segmentation to be concentrated on gaze-specific areas. This insight drives the need for efficient segmentation methods that prioritize processing the instances of interest, reducing computational load and enhancing real-time performance. In this paper, we present a foveated instance segmentation (FovealSeg) framework that leverages real-time user gaze data to perform instance segmentation exclusively on the instance of interest, resulting in substantial computational savings. Evaluation results show that FSNet achieves an IoU of 0.56 on ADE20K and 0.54 on LVIS, notably outperforming the baseline. The code is available at https://github.com/SAI-
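To make the two ideas in this abstract concrete, here is a minimal sketch, not the FovealSeg implementation. It assumes that "gaze-specific" processing can be approximated by a fixed-size window clamped around the gaze point, and it computes IoU for binary masks in the standard way. The names `gaze_window` and `mask_iou`, the window size, and the convention that two empty masks score 1.0 are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Mask = List[List[int]]  # binary mask stored as rows of 0/1 values


def gaze_window(
    gaze_xy: Tuple[int, int],
    image_wh: Tuple[int, int],
    window_wh: Tuple[int, int],
) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a window centered on the gaze
    point and shifted as needed so that it stays inside the image.

    Assumption: a fixed-size crop stands in for the foveated region.
    """
    gx, gy = gaze_xy
    img_w, img_h = image_wh
    # The window can never be larger than the image itself.
    win_w = min(window_wh[0], img_w)
    win_h = min(window_wh[1], img_h)
    left = min(max(gx - win_w // 2, 0), img_w - win_w)
    top = min(max(gy - win_h // 2, 0), img_h - win_h)
    return left, top, left + win_w, top + win_h


def mask_iou(pred: Mask, target: Mask) -> float:
    """Intersection over union of two equally sized binary masks."""
    inter = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Assumed convention: two empty masks count as a perfect match.
    return inter / union if union else 1.0


if __name__ == "__main__":
    print(gaze_window((50, 40), (100, 80), (32, 32)))
    print(mask_iou([[1, 1], [0, 0]], [[1, 0], [1, 0]]))
```

Under this assumption, only the pixels inside the returned window would be passed to a segmentation model, which is where any compute saving would come from.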

Unlocking Visual Secrets: Inverting Features with Diffusion Priors for Image Reconstruction

Dec 11, 2024

Abstract: Inverting visual representations within deep neural networks (DNNs) presents a challenging and important problem in the field of security and privacy for deep learning. The main goal is to invert the features of an unidentified target image generated by a pre-trained DNN, aiming to reconstruct the original image. Feature inversion holds particular significance in understanding the privacy leakage inherent in contemporary split DNN execution techniques, as well as in various applications based on the extracted DNN features. In this paper, we explore the use of diffusion models, a promising technique for image synthesis, to enhance feature inversion quality. We also investigate the potential of incorporating alternative forms of prior knowledge, such as textual prompts and cross-frame temporal correlations, to further improve the quality of inverted features. Our findings reveal that diffusion models can effectively leverage hidden information from the DNN features, resulting in superior reconstruction performance compared to previous methods. This research offers valuable insights into how diffusion models can enhance privacy and security within applications that are reliant on DNN features.

GazeGen: Gaze-Driven User Interaction for Visual Content Generation

Nov 07, 2024

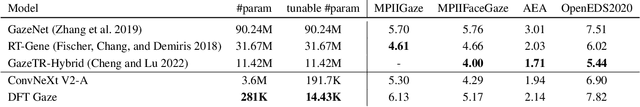

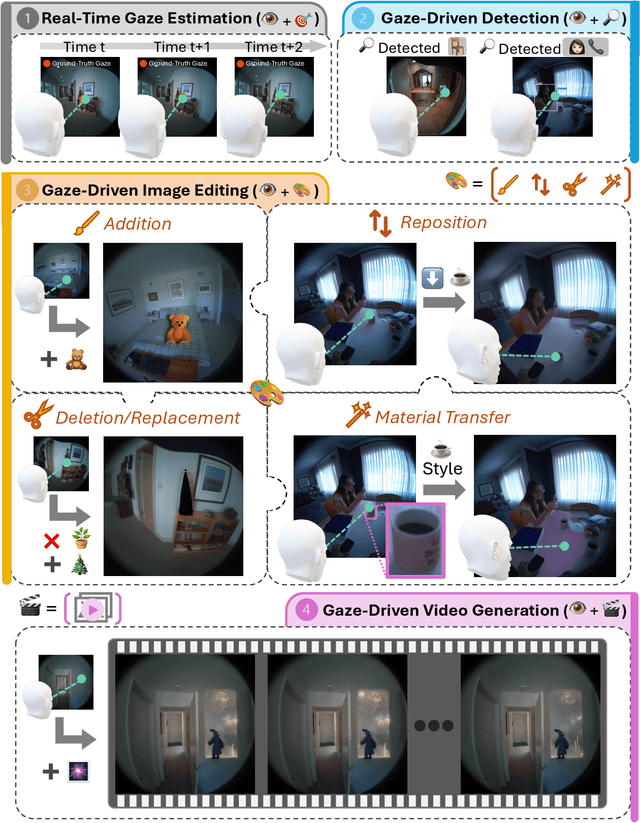

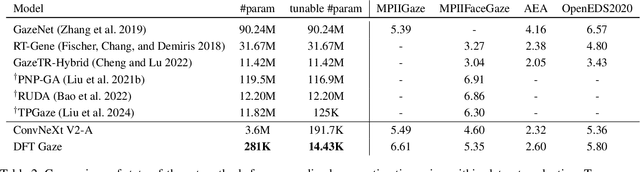

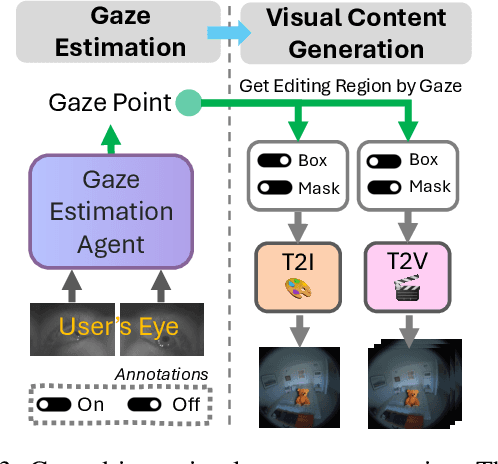

Abstract:We present GazeGen, a user interaction system that generates visual content (images and videos) for locations indicated by the user's eye gaze. GazeGen allows intuitive manipulation of visual content by targeting regions of interest with gaze. Using advanced techniques in object detection and generative AI, GazeGen performs gaze-controlled image adding/deleting, repositioning, and surface material changes of image objects, and converts static images into videos. Central to GazeGen is the DFT Gaze (Distilled and Fine-Tuned Gaze) agent, an ultra-lightweight model with only 281K parameters, performing accurate real-time gaze predictions tailored to individual users' eyes on small edge devices. GazeGen is the first system to combine visual content generation with real-time gaze estimation, made possible exclusively by DFT Gaze. This real-time gaze estimation enables various visual content generation tasks, all controlled by the user's gaze. The input for DFT Gaze is the user's eye images, while the inputs for visual content generation are the user's view and the predicted gaze point from DFT Gaze. To achieve efficient gaze predictions, we derive the small model from a large model (10x larger) via novel knowledge distillation and personal adaptation techniques. We integrate knowledge distillation with a masked autoencoder, developing a compact yet powerful gaze estimation model. This model is further fine-tuned with Adapters, enabling highly accurate and personalized gaze predictions with minimal user input. DFT Gaze ensures low-latency and precise gaze tracking, supporting a wide range of gaze-driven tasks. We validate the performance of DFT Gaze on AEA and OpenEDS2020 benchmarks, demonstrating low angular gaze error and low latency on the edge device (Raspberry Pi 4). Furthermore, we describe applications of GazeGen, illustrating its versatility and effectiveness in various usage scenarios.

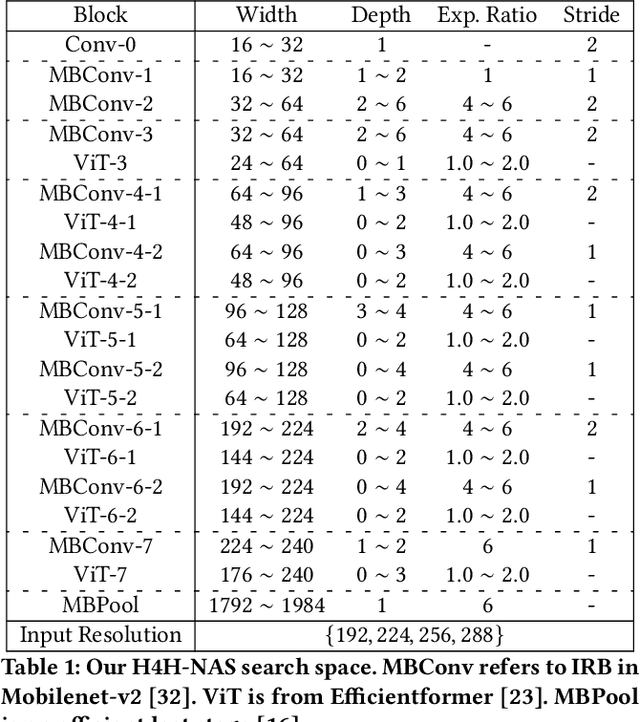

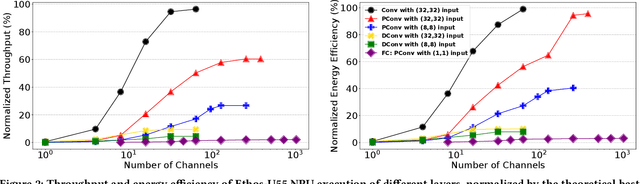

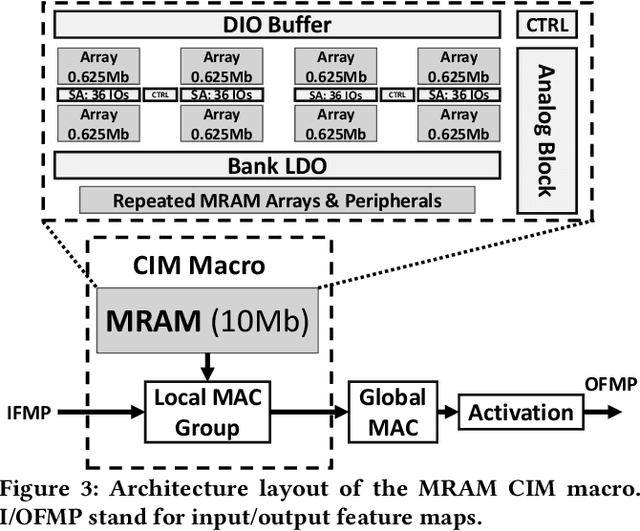

Neural Architecture Search of Hybrid Models for NPU-CIM Heterogeneous AR/VR Devices

Oct 10, 2024

Abstract: Low-Latency and Low-Power Edge AI is essential for Virtual Reality and Augmented Reality applications. Recent advances show that hybrid models, combining convolution layers (CNN) and transformers (ViT), often achieve a superior accuracy/performance tradeoff on various computer vision and machine learning (ML) tasks. However, hybrid ML models can pose system challenges for latency and energy-efficiency due to their diverse nature in dataflow and memory access patterns. In this work, we leverage the architecture heterogeneity from Neural Processing Units (NPU) and Compute-In-Memory (CIM) and perform diverse execution schemas to efficiently execute these hybrid models. We also introduce H4H-NAS, a Neural Architecture Search framework to design efficient hybrid CNN/ViT models for heterogeneous edge systems with both NPU and CIM. Our H4H-NAS approach is powered by a performance estimator built with NPU performance results measured on real silicon, and CIM performance based on industry IPs. H4H-NAS searches hybrid CNN/ViT models with fine granularity and achieves significant (up to 1.34%) top-1 accuracy improvement on the ImageNet dataset. Moreover, results from our Algo/HW co-design reveal up to 56.08% overall latency and 41.72% energy improvements by introducing such heterogeneous computing over baseline solutions. The framework guides the design of hybrid network architectures and system architectures of NPU+CIM heterogeneous systems.

Supervised Knowledge May Hurt Novel Class Discovery Performance

Jun 06, 2023

Abstract:Novel class discovery (NCD) aims to infer novel categories in an unlabeled dataset by leveraging prior knowledge of a labeled set comprising disjoint but related classes. Given that most existing literature focuses primarily on utilizing supervised knowledge from a labeled set at the methodology level, this paper considers the question: Is supervised knowledge always helpful at different levels of semantic relevance? To proceed, we first establish a novel metric, so-called transfer flow, to measure the semantic similarity between labeled/unlabeled datasets. To show the validity of the proposed metric, we build up a large-scale benchmark with various degrees of semantic similarities between labeled/unlabeled datasets on ImageNet by leveraging its hierarchical class structure. The results based on the proposed benchmark show that the proposed transfer flow is in line with the hierarchical class structure; and that NCD performance is consistent with the semantic similarities (measured by the proposed metric). Next, by using the proposed transfer flow, we conduct various empirical experiments with different levels of semantic similarity, yielding that supervised knowledge may hurt NCD performance. Specifically, using supervised information from a low-similarity labeled set may lead to a suboptimal result as compared to using pure self-supervised knowledge. These results reveal the inadequacy of the existing NCD literature which usually assumes that supervised knowledge is beneficial. Finally, we develop a pseudo-version of the transfer flow as a practical reference to decide if supervised knowledge should be used in NCD. Its effectiveness is supported by our empirical studies, which show that the pseudo transfer flow (with or without supervised knowledge) is consistent with the corresponding accuracy based on various datasets. Code is released at https://github.com/J-L-O/SK-Hurt-NCD

A Closer Look at Novel Class Discovery from the Labeled Set

Sep 21, 2022

Abstract: Novel class discovery (NCD) aims to infer novel categories in an unlabeled dataset by leveraging prior knowledge of a labeled set comprising disjoint but related classes. Existing research focuses primarily on utilizing the labeled set at the methodological level, with less emphasis on the analysis of the labeled set itself. Thus, in this paper, we rethink novel class discovery from the labeled set and focus on two core questions: (i) Given a specific unlabeled set, what kind of labeled set can best support novel class discovery? (ii) A fundamental premise of NCD is that the labeled set must be related to the unlabeled set, but how can we measure this relation? For (i), we propose and substantiate the hypothesis that NCD could benefit more from a labeled set with a large degree of semantic similarity to the unlabeled set. Specifically, we establish an extensive and large-scale benchmark with varying degrees of semantic similarity between labeled/unlabeled datasets on ImageNet by leveraging its hierarchical class structure. In sharp contrast, the existing NCD benchmarks are developed based on labeled sets with different numbers of categories and images, and completely ignore the semantic relation. For (ii), we introduce a mathematical definition for quantifying the semantic similarity between labeled and unlabeled sets. In addition, we use this metric to confirm the validity of our proposed benchmark and demonstrate that it highly correlates with NCD performance. Furthermore, without quantitative analysis, previous works commonly believe that label information is always beneficial. However, counterintuitively, our experimental results show that using labels may lead to sub-optimal outcomes in low-similarity settings.

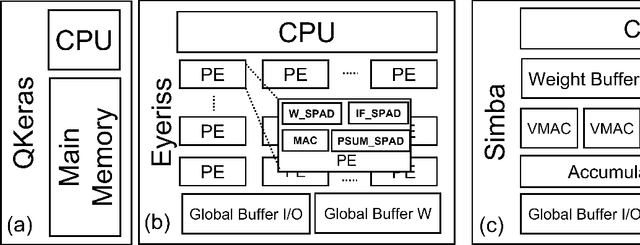

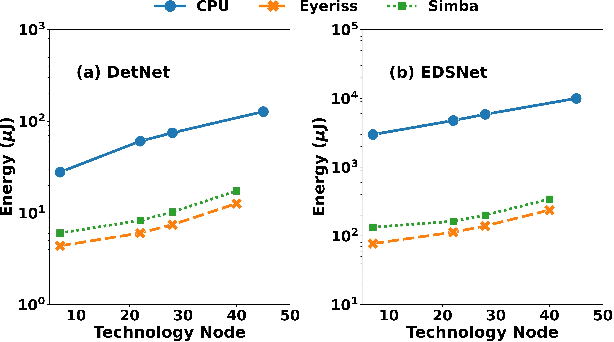

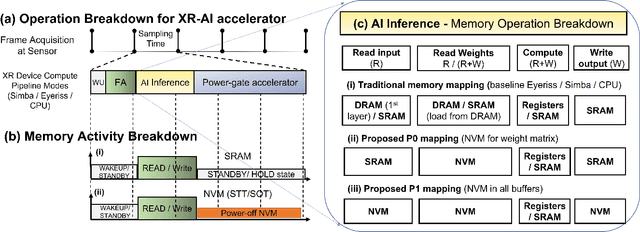

Memory-Oriented Design-Space Exploration of Edge-AI Hardware for XR Applications

Jun 08, 2022

Abstract: Low-Power Edge-AI capabilities are essential for on-device extended reality (XR) applications to support the vision of the Metaverse. In this work, we investigate two representative XR workloads: (i) Hand detection and (ii) Eye segmentation, for hardware design space exploration. For both applications, we train deep neural networks and analyze the impact of quantization and hardware-specific bottlenecks. Through simulations, we evaluate a CPU and two systolic inference accelerator implementations. Next, we compare these hardware solutions with advanced technology nodes. The impact of integrating state-of-the-art emerging non-volatile memory technology (STT/SOT/VGSOT MRAM) into the XR-AI inference pipeline is evaluated. We found that significant energy benefits (>=80%) can be achieved for hand detection (IPS=40) and eye segmentation (IPS=6) by introducing non-volatile memory in the memory hierarchy for designs at the 7nm node while meeting the minimum IPS (inferences per second). Moreover, we can realize a substantial reduction in area (>=30%) owing to the small form factor of MRAM compared to traditional SRAM.

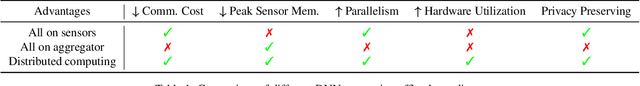

SplitNets: Designing Neural Architectures for Efficient Distributed Computing on Head-Mounted Systems

Apr 10, 2022

Abstract: We design deep neural networks (DNNs) and corresponding networks' splittings to distribute DNNs' workload to camera sensors and a centralized aggregator on head-mounted devices to meet system performance targets in inference accuracy and latency under the given hardware resource constraints. To achieve an optimal balance among computation, communication, and performance, a split-aware neural architecture search framework, SplitNets, is introduced to conduct model designing, splitting, and communication reduction simultaneously. We further extend the framework to multi-view systems for learning to fuse inputs from multiple camera sensors with optimal performance and systemic efficiency. We validate SplitNets for a single-view system on ImageNet as well as a multi-view system on 3D classification, and show that the SplitNets framework achieves state-of-the-art (SOTA) performance and system latency compared with existing approaches.

Distributed On-Sensor Compute System for AR/VR Devices: A Semi-Analytical Simulation Framework for Power Estimation

Mar 14, 2022

Abstract: Augmented Reality/Virtual Reality (AR/VR) glasses are widely foreseen as the next generation computing platform. AR/VR glasses are a complex "system of systems" which must satisfy stringent form-factor, computing, power, and thermal requirements. In this paper, we will show that a novel distributed on-sensor compute architecture, coupled with new semiconductor technologies (such as dense 3D-IC interconnects and Spin-Transfer Torque Magneto Random Access Memory, STT-MRAM) and, most importantly, a full hardware-software co-optimization are the solutions to achieve attractive and socially acceptable AR/VR glasses. To this end, we developed a semi-analytical simulation framework to estimate the power consumption of novel AR/VR distributed on-sensor computing architectures. The model allows the optimization of the main technological features of the system modules, as well as the computer-vision algorithm partition strategy across the distributed compute architecture. We show that, in the case of the compute-intensive machine learning based Hand Tracking algorithm, the distributed on-sensor compute architecture can reduce the system power consumption compared to a centralized system, with additional benefits in terms of latency and privacy.

Not All Knowledge Is Created Equal

Jun 02, 2021

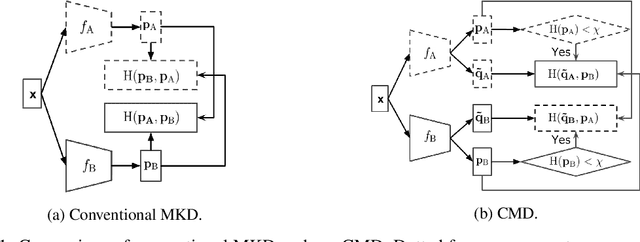

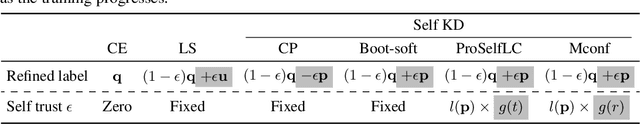

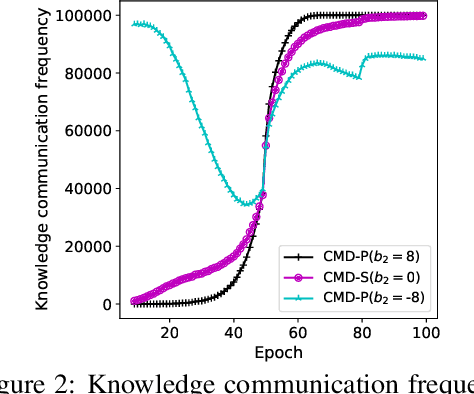

Abstract: Mutual knowledge distillation (MKD) improves a model by distilling knowledge from another model. However, not all knowledge is certain and correct, especially under adverse conditions. For example, label noise usually leads to less reliable models due to the undesired memorisation [1, 2]. Wrong knowledge misleads the learning rather than helps. This problem can be handled from two aspects: (i) improving the reliability of the model that the knowledge comes from (i.e., the knowledge source's reliability); (ii) selecting reliable knowledge for distillation. In the literature, making a model more reliable is widely studied while selective MKD receives little attention. Therefore, we focus on studying selective MKD and highlight its importance in this work. Concretely, a generic MKD framework, Confident knowledge selection followed by Mutual Distillation (CMD), is designed. The key component of CMD is a generic knowledge selection formulation, making the selection threshold either static (CMD-S) or progressive (CMD-P). Additionally, CMD covers two special cases: zero knowledge and all knowledge, leading to a unified MKD framework. We empirically find CMD-P performs better than CMD-S. The main reason is that a model's knowledge upgrades and becomes confident as the training progresses. Extensive experiments are presented to demonstrate the effectiveness of CMD and thoroughly justify the design of CMD. For example, CMD-P obtains new state-of-the-art results in robustness against label noise.
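The abstract does not give the selection formula, so the following is only a rough sketch of threshold-based knowledge selection under stated assumptions. It treats a peer model's confidence as its maximum predicted class probability and keeps only the samples at or above a threshold. The function names, the linear schedule, and the `start`/`end` values are hypothetical; the abstract says only that the threshold can be static (CMD-S) or progressive (CMD-P).

```python
from typing import List, Sequence


def select_confident(probs: Sequence[Sequence[float]], threshold: float) -> List[int]:
    """Return indices of samples whose top class probability reaches the threshold.

    Assumption: confidence is the maximum softmax probability of the peer model.
    """
    return [i for i, p in enumerate(probs) if max(p) >= threshold]


def linear_threshold(step: int, total_steps: int, start: float, end: float) -> float:
    """Hypothetical progressive schedule: interpolate linearly from start to end."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start + (end - start) * frac


if __name__ == "__main__":
    peer_probs = [[0.9, 0.1], [0.6, 0.4], [0.5, 0.5]]
    # Static threshold: the same cut-off at every training step.
    print(select_confident(peer_probs, 0.8))
    # Progressive threshold: the cut-off changes as training advances.
    for step in (0, 5, 10):
        t = linear_threshold(step, 10, start=0.9, end=0.5)
        print(step, round(t, 2), select_confident(peer_probs, t))
```

In this sketch, a threshold of 0 selects every sample and a threshold above 1 selects none, which corresponds to the "all knowledge" and "zero knowledge" special cases mentioned in the abstract.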
