Ziyu Jia

RoboAfford++: A Generative AI-Enhanced Dataset for Multimodal Affordance Learning in Robotic Manipulation and Navigation

Nov 16, 2025

Abstract:Robotic manipulation and navigation are fundamental capabilities of embodied intelligence, enabling effective robot interactions with the physical world. Achieving these capabilities requires a cohesive understanding of the environment, including object recognition to localize target objects, object affordances to identify potential interaction areas, and spatial affordances to discern optimal areas for both object placement and robot movement. While Vision-Language Models (VLMs) excel at high-level task planning and scene understanding, they often struggle to infer actionable positions for physical interaction, such as functional grasping points and permissible placement regions. This limitation stems from the lack of fine-grained annotations for object and spatial affordances in their training datasets. To tackle this challenge, we introduce RoboAfford++, a generative AI-enhanced dataset for multimodal affordance learning in both robotic manipulation and navigation. Our dataset comprises 869,987 images paired with 2.0 million question answering (QA) annotations, covering three critical tasks: object affordance recognition, which identifies target objects based on attributes and spatial relationships; object affordance prediction, which pinpoints functional parts for manipulation; and spatial affordance localization, which identifies free space for object placement and robot navigation. Complementing this dataset, we propose RoboAfford-Eval, a comprehensive benchmark for assessing affordance-aware prediction in real-world scenarios, featuring 338 meticulously annotated samples across the same three tasks. Extensive experimental results reveal the deficiencies of existing VLMs in affordance learning, while fine-tuning on the RoboAfford++ dataset significantly enhances their ability to reason about object and spatial affordances, validating the dataset's effectiveness.
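RoboAfford-Eval asks models to pinpoint locations such as grasp points and free space. The abstract does not state how point predictions are scored; as a minimal sketch, assuming each annotated region is a polygon in pixel coordinates and a prediction counts as correct when the predicted point falls inside it, a ray-casting test yields a point-level accuracy. The names `point_in_polygon` and `point_accuracy` and the toy square region are hypothetical, not part of the dataset's tooling.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: List[Point]) -> bool:
    """Ray casting: toggle 'inside' each time a rightward ray from p crosses an edge."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # The edge straddles the ray's height (half-open test avoids double-counting vertices).
        if (y1 > y) != (y2 > y):
            # x-coordinate where this edge meets the horizontal line through p.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def point_accuracy(preds: List[Point], regions: List[List[Point]]) -> float:
    """Fraction of predicted points that land inside their annotated region (assumed metric)."""
    hits = sum(point_in_polygon(p, r) for p, r in zip(preds, regions))
    return hits / len(preds)


# Hypothetical example: one prediction inside a square region, one outside it.
square = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
print(point_accuracy([(5.0, 5.0), (12.0, 3.0)], [square, square]))  # 0.5
```

Ray casting handles non-convex regions, which matters if free-space annotations are irregular; a mask-based lookup would be the natural alternative if regions are stored as pixel masks.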

Disentangling Emotional Bases and Transient Fluctuations: A Low-Rank Sparse Decomposition Approach for Video Affective Analysis

Nov 14, 2025

Abstract:Video-based Affective Computing (VAC), vital for emotion analysis and human-computer interaction, suffers from model instability and representational degradation due to complex emotional dynamics. Since the same emotional fluctuation may carry different meanings in different emotional contexts, the core limitation is the lack of a hierarchical structural mechanism to disentangle distinct affective components, namely emotional bases (the long-term emotional tone) and transient fluctuations (short-term emotional changes). To address this, we propose the Low-Rank Sparse Emotion Understanding Framework (LSEF), a unified model grounded in the Low-Rank Sparse Principle, which theoretically reframes affective dynamics as a hierarchical low-rank sparse compositional process. LSEF employs three plug-and-play modules: the Stability Encoding Module (SEM), which captures low-rank emotional bases; the Dynamic Decoupling Module (DDM), which isolates sparse transient signals; and the Consistency Integration Module (CIM), which reconstructs multi-scale stability and reactivity coherence. The framework is optimized by a Rank Aware Optimization (RAO) strategy that adaptively balances gradient smoothness and sensitivity. Extensive experiments across multiple datasets confirm that LSEF significantly enhances robustness and dynamic discrimination, further validating the effectiveness and generality of hierarchical low-rank sparse modeling for understanding affective dynamics.
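The Low-Rank Sparse Principle can be illustrated with the classical decomposition X ≈ L + S, where the low-rank part L captures a pattern shared across frames (a long-term tone) and the sparse part S captures a few large, short-lived deviations. The sketch below is a simplified alternating scheme, assumed here for illustration: a rank-1 projection computed by power iteration, followed by soft-thresholding of the residual. It is not LSEF's SEM, DDM, or CIM modules, and the threshold `tau`, the rank of 1, and the toy matrix are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def soft_threshold(v: float, tau: float) -> float:
    """Shrink v toward zero by tau; |v| <= tau becomes exactly 0 (the L1 proximal step)."""
    if v > tau:
        return v - tau
    if v < -tau:
        return v + tau
    return 0.0


def rank1(m: Matrix, iters: int = 100) -> Matrix:
    """Rank-1 approximation via power iteration toward the top singular direction."""
    rows, cols = len(m), len(m[0])
    v = [1.0] * cols
    u = [0.0] * rows
    for _ in range(iters):
        u = [sum(m[i][j] * v[j] for j in range(cols)) for i in range(rows)]
        norm = math.sqrt(sum(x * x for x in u)) or 1.0
        u = [x / norm for x in u]
        v = [sum(m[i][j] * u[i] for i in range(rows)) for j in range(cols)]
    # u is unit-norm and v = M^T u, so the outer product u v^T projects M onto u.
    return [[u[i] * v[j] for j in range(cols)] for i in range(rows)]


def low_rank_sparse(x: Matrix, tau: float, iters: int = 30):
    """Alternate L = rank1(X - S) and S = soft_threshold(X - L, tau)."""
    rows, cols = len(x), len(x[0])
    s = [[0.0] * cols for _ in range(rows)]
    low = s
    for _ in range(iters):
        low = rank1([[x[i][j] - s[i][j] for j in range(cols)] for i in range(rows)])
        s = [[soft_threshold(x[i][j] - low[i][j], tau) for j in range(cols)]
             for i in range(rows)]
    return low, s


# Toy "video": 4 frames x 3 features; a shared tone (rank-1) plus one transient spike.
base = [[5.0 * t] * 3 for t in (1, 2, 3, 4)]
x = [row[:] for row in base]
x[2][1] += 10.0  # transient fluctuation in frame 2, feature 1
low, sparse = low_rank_sparse(x, tau=3.0)
```

After convergence the spike is the dominant entry of `sparse`, while `low` tracks the frame-wise tone; raising `tau` makes S sparser at the cost of leaving more of each transient in L.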

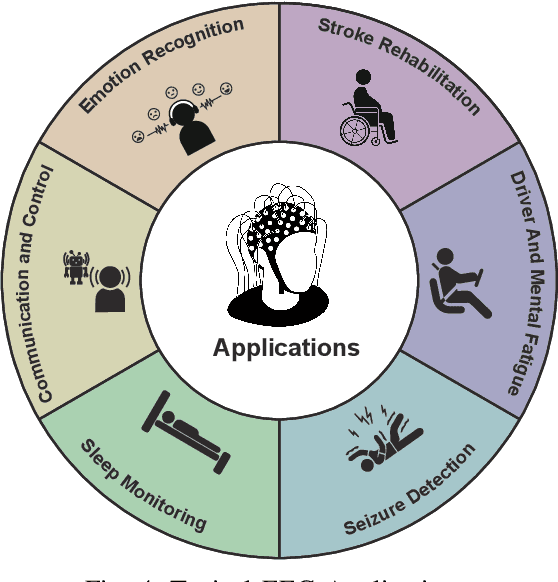

ECHO: Toward Contextual Seq2Seq Paradigms in Large EEG Models

Sep 26, 2025

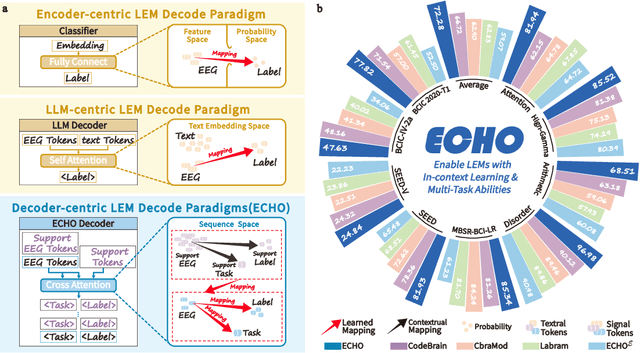

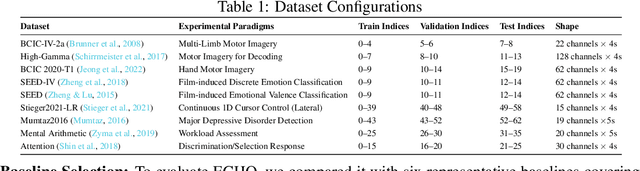

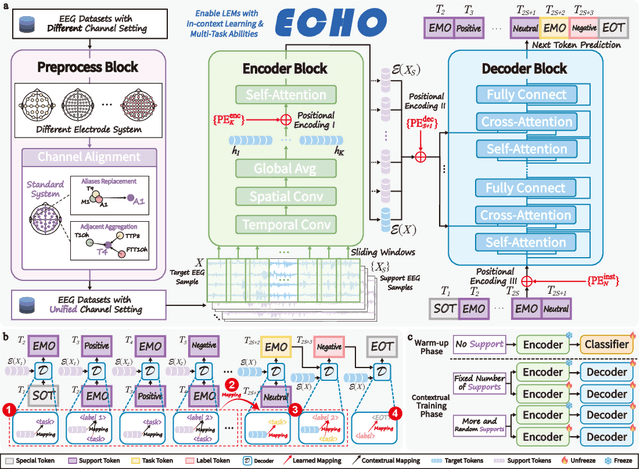

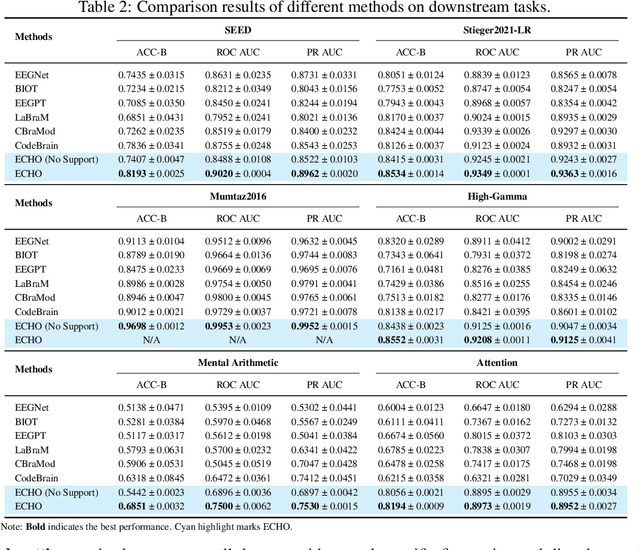

Abstract:Electroencephalography (EEG), with its broad range of applications, necessitates models that can generalize effectively across various tasks and datasets. Large EEG Models (LEMs) address this by pretraining encoder-centric architectures on large-scale unlabeled data to extract universal representations. While effective, these models lack decoders of comparable capacity, limiting the full utilization of the learned features. To address this issue, we introduce ECHO, a novel decoder-centric LEM paradigm that reformulates EEG modeling as sequence-to-sequence learning. ECHO captures layered relationships among signals, labels, and tasks within sequence space, while incorporating discrete support samples to construct contextual cues. This design equips ECHO with in-context learning, enabling dynamic adaptation to heterogeneous tasks without parameter updates. Extensive experiments across multiple datasets demonstrate that, even with basic model components, ECHO consistently outperforms state-of-the-art single-task LEMs in multi-task settings, showing superior generalization and adaptability.
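ECHO is described as placing support samples inside the sequence so that it can adapt to a new task without parameter updates. A minimal sketch of how such a context could be laid out, assuming a flat token list of the form [task, (signal, label) repeated k times, query signal] with the query's label left for the decoder to generate; the token kinds, the `Token` class, the `build_context` helper, and the toy labels are hypothetical and not ECHO's actual format.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Token:
    kind: str      # "task", "signal", "label", or "sep" (hypothetical vocabulary)
    value: object  # task name, EEG segment, class label, or None


def build_context(task: str,
                  support: Sequence[Tuple[List[float], str]],
                  query: List[float]) -> List[Token]:
    """Interleave labeled support samples before the query so a decoder can
    infer the signal-to-label mapping from context rather than from new weights."""
    seq = [Token("task", task)]
    for signal, label in support:
        seq += [Token("signal", signal), Token("label", label), Token("sep", None)]
    seq.append(Token("signal", query))
    # A decoder would then be asked to generate the next token: the query's label.
    return seq


support = [([0.1, 0.3, -0.2], "awake"), ([0.9, 1.1, 0.8], "drowsy")]
ctx = build_context("drowsiness", support, query=[0.85, 1.0, 0.9])
print([t.kind for t in ctx])
# ['task', 'signal', 'label', 'sep', 'signal', 'label', 'sep', 'signal']
```

Swapping in a different support set changes the task the decoder sees without touching any weights, which is the property the abstract refers to as in-context learning.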

CodeBrain: Bridging Decoupled Tokenizer and Multi-Scale Architecture for EEG Foundation Model

Jun 10, 2025

Abstract:Electroencephalography (EEG) provides real-time insights into brain activity and is widely used in neuroscience. However, variations in channel configurations, sequence lengths, and task objectives limit the transferability of traditional task-specific models. Although recent EEG foundation models (EFMs) aim to learn generalizable representations, they struggle with limited heterogeneous representation capacity and inefficiency in capturing multi-scale brain dependencies. To address these challenges, we propose CodeBrain, an efficient EFM structurally aligned with brain organization and trained in two stages. (1) We introduce a TFDual-Tokenizer that independently tokenizes heterogeneous temporal and frequency components, enabling a quadratic expansion of the discrete representation space. This also offers a degree of interpretability through cross-domain token analysis. (2) We propose EEGSSM, which combines a structured global convolution architecture and a sliding window attention mechanism to jointly model sparse long-range and local dependencies. Unlike fully connected Transformer models, EEGSSM better reflects the brain's small-world topology and efficiently captures the inherent multi-scale structure of EEG. EEGSSM is trained with a masked self-supervised learning objective to predict the token indices obtained from the TFDual-Tokenizer. Comprehensive experiments on 10 public EEG datasets demonstrate the generalizability of CodeBrain with linear probing. By offering biologically informed and interpretable EEG modeling, CodeBrain lays the foundation for future neuroscience research. Both the code and the pretrained weights will be released in a future version.
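Two ideas in this abstract lend themselves to a small worked example. First, if temporal and frequency components are tokenized independently with codebooks of sizes K_t and K_f, each pair of indices identifies one joint code, so the joint space has K_t × K_f entries (K² when both codebooks have K entries), which is the sense in which the space expands quadratically. Second, sliding window attention restricts each position to neighbors inside a window instead of attending to every position. The sketch assumes row-major pairing of indices and a symmetric window of half-width `w`; the codebook sizes, function names, and pairing scheme are illustrative assumptions, not CodeBrain's implementation.

```python
from typing import List, Tuple


def joint_index(t_idx: int, f_idx: int, k_f: int) -> int:
    """Map a (temporal, frequency) token pair to one id in a K_t * K_f space."""
    return t_idx * k_f + f_idx


def split_index(joint: int, k_f: int) -> Tuple[int, int]:
    """Inverse of joint_index: recover the (temporal, frequency) pair."""
    return divmod(joint, k_f)


def sliding_window_mask(n: int, w: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (|i - j| <= w)."""
    return [[abs(i - j) <= w for j in range(n)] for i in range(n)]


k_t = k_f = 8                   # hypothetical codebook sizes
print(k_t * k_f)                # 64 joint codes from two 8-entry codebooks
print(joint_index(3, 5, k_f))   # 29
for row in sliding_window_mask(5, 1):
    print("".join("x" if m else "." for m in row))
```

For n = 5 and w = 1 the mask allows 13 query-key pairs instead of the 25 a fully connected attention layer would compute; the gap grows linearly versus quadratically as sequences lengthen.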

BiT-MamSleep: Bidirectional Temporal Mamba for EEG Sleep Staging

Nov 03, 2024

Abstract:In this paper, we tackle challenges in automatic sleep stage classification, particularly the high computational cost, inadequate modeling of bidirectional temporal dependencies, and class imbalance faced by Transformer-based models. To address these limitations, we propose BiT-MamSleep, a novel architecture that integrates the Triple-Resolution CNN (TRCNN) for efficient multi-scale feature extraction with the Bidirectional Mamba (BiMamba) mechanism, which models both short- and long-term temporal dependencies through bidirectional processing of EEG data. Additionally, BiT-MamSleep incorporates an Adaptive Feature Recalibration (AFR) module and a temporal enhancement block to dynamically refine feature importance, optimizing classification accuracy without increasing computational complexity. To further improve robustness, we apply Focal Loss and SMOTE (Synthetic Minority Over-sampling Technique) to mitigate class imbalance. Extensive experiments on four public datasets demonstrate that BiT-MamSleep significantly outperforms state-of-the-art methods, particularly in handling long EEG sequences and addressing class imbalance, leading to more accurate and scalable sleep stage classification.
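Focal Loss and SMOTE are standard, published techniques, so they can be sketched directly. Focal Loss down-weights well-classified examples: FL(p_t) = -α(1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class; with γ = 0 and α = 1 it reduces to cross-entropy. SMOTE synthesizes minority-class samples by interpolating between a minority sample and a randomly chosen one of its k nearest minority neighbors. The γ, α, and k values below are common illustrative choices, not the settings used in BiT-MamSleep, and the toy minority points are made up.

```python
import math
import random
from typing import List, Sequence


def focal_loss(p_true: float, gamma: float = 2.0, alpha: float = 1.0) -> float:
    """Focal loss for one sample, given the predicted probability of its true class."""
    p = min(max(p_true, 1e-12), 1.0)  # guard against log(0)
    return -alpha * (1.0 - p) ** gamma * math.log(p)


def smote(minority: Sequence[Sequence[float]], n_new: int, k: int = 5,
          seed: int = 0) -> List[List[float]]:
    """Generate n_new synthetic points; each lies on the segment between a random
    minority sample and one of its k nearest minority-class neighbors."""
    rng = random.Random(seed)
    out = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        # Nearest neighbors among the other minority samples (excluded by index).
        neighbors = sorted((minority[j] for j in range(len(minority)) if j != i),
                           key=lambda m: math.dist(x, m))[:k]
        nb = rng.choice(neighbors)
        lam = rng.random()  # interpolation weight in [0, 1)
        out.append([xi + lam * (ni - xi) for xi, ni in zip(x, nb)])
    return out


# An easy example (p_t = 0.9) is down-weighted far more than a hard one (p_t = 0.1).
print(focal_loss(0.9), focal_loss(0.1))
# gamma = 0 and alpha = 1 recover plain cross-entropy.
print(math.isclose(focal_loss(0.5, gamma=0.0), -math.log(0.5)))  # True
minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
print(smote(minority, n_new=3, k=2))
```

The two tools act at different points: Focal Loss reshapes the training objective, while SMOTE rebalances the training data before the model sees it, so they can be combined.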

ST-USleepNet: A Spatial-Temporal Coupling Prominence Network for Multi-Channel Sleep Staging

Aug 21, 2024

Abstract:Sleep staging is critical for assessing sleep quality and diagnosing disorders. Recent advances in artificial intelligence have driven the development of automated sleep staging models, which still face two significant challenges: (1) simultaneously extracting prominent temporal and spatial sleep features from multi-channel raw signals, including characteristic sleep waveforms and salient spatial brain networks, and (2) capturing the spatial-temporal coupling patterns essential for accurate sleep staging. To address these challenges, we propose a novel framework named ST-USleepNet, comprising a spatial-temporal graph construction module (ST) and a U-shaped sleep network (USleepNet). The ST module converts raw signals into a spatial-temporal graph to model spatial-temporal couplings. USleepNet uses a U-shaped structure originally designed for image segmentation. Just as image segmentation isolates significant targets, USleepNet segments both the raw sleep signals and the graph data generated by the ST module to extract prominent temporal and spatial sleep features simultaneously. Experiments on three datasets demonstrate that ST-USleepNet outperforms existing baselines, and model visualizations confirm its efficacy in extracting prominent sleep features and temporal-spatial coupling patterns across various sleep stages. The code is available at: https://github.com/Majy-Yuji/ST-USleepNet.git.
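The ST module is described only as turning raw multi-channel signals into a spatial-temporal graph. One plausible construction, offered purely as an assumption, treats each (window, channel) pair as a node, links channels within the same window when the absolute Pearson correlation of their samples reaches a threshold (spatial edges), and links each channel to itself in the next window (temporal edges). The window length, threshold, correlation measure, and function names are hypothetical, not ST-USleepNet's code.

```python
import math
from typing import List, Set, Tuple

Node = Tuple[int, int]  # (window index, channel index)


def pearson(a: List[float], b: List[float]) -> float:
    """Pearson correlation; returns 0.0 for a constant segment."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = math.sqrt(sum((x - ma) ** 2 for x in a))
    vb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (va * vb) if va and vb else 0.0


def st_graph(signals: List[List[float]], win: int, thr: float) -> Set[Tuple[Node, Node]]:
    """signals[c] is channel c; returns undirected edges stored as ordered node pairs."""
    n_ch = len(signals)
    n_win = len(signals[0]) // win
    edges = set()
    for w in range(n_win):
        seg = [s[w * win:(w + 1) * win] for s in signals]
        # Spatial edges: strongly correlated channels within the same window.
        for i in range(n_ch):
            for j in range(i + 1, n_ch):
                if abs(pearson(seg[i], seg[j])) >= thr:
                    edges.add(((w, i), (w, j)))
        # Temporal edges: the same channel in consecutive windows.
        if w + 1 < n_win:
            for c in range(n_ch):
                edges.add(((w, c), (w + 1, c)))
    return edges


# Three toy channels, two windows of 4 samples: ch1 = 2 * ch0, ch2 is unrelated.
ch0 = [0.0, 1.0, 2.0, 3.0, 3.0, 2.0, 1.0, 0.0]
ch1 = [2.0 * v for v in ch0]
ch2 = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
g = st_graph([ch0, ch1, ch2], win=4, thr=0.8)
```

Here `g` holds two spatial edges (channels 0 and 1 in each window) and three temporal edges, giving the coupled spatial and temporal structure a downstream network could then segment.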

A Comprehensive Survey on EEG-Based Emotion Recognition: A Graph-Based Perspective

Aug 13, 2024

Abstract:Compared to other modalities, electroencephalogram (EEG)-based emotion recognition more directly reflects emotional patterns in the human brain and has therefore become one of the most studied tasks in affective computing. Emotions are, in essence, physiological and psychological state changes related to connectivity between brain regions, so emotion recognition focuses more on the dependencies between brain regions than on specific brain regions. A significant trend is the application of graphs to encapsulate such dependencies as dynamic functional connections between nodes across temporal and spatial dimensions. At the same time, the neuroscientific underpinnings of these dependencies give graph-based methods distinctive significance in this field. However, there is neither a comprehensive review nor a tutorial on constructing emotion-relevant graphs for EEG-based emotion recognition. In this paper, we present a comprehensive survey of these studies, delivering a systematic review of graph-related methods in this field from a methodological perspective. We propose a unified framework for graph applications in this field and categorize these methods on this basis. Finally, based on previous studies, we also present several open challenges and future directions in this field.

Graph Neural Networks in EEG-based Emotion Recognition: A Survey

Feb 02, 2024

Abstract:Compared to other modalities, EEG-based emotion recognition more directly reflects emotional patterns in the human brain and has therefore become one of the most actively studied tasks in the brain-computer interface field. Since dependencies between brain regions are closely related to emotion, a significant trend is to develop Graph Neural Networks (GNNs) for EEG-based emotion recognition. However, brain region dependencies in emotional EEG have physiological bases that distinguish GNNs in this field from those in other time series fields. Moreover, there is neither a comprehensive review nor guidance on constructing GNNs for EEG-based emotion recognition. In this survey, our categorization reveals the commonalities and differences of existing approaches under a unified framework of graph construction. We analyze and categorize methods from three stages of the framework to provide clear guidance on constructing GNNs for EEG-based emotion recognition. In addition, we discuss several open challenges and future directions, such as temporal fully connected graphs and graph condensation.
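Once a graph over EEG channels has been constructed, a GNN propagates features along its edges. As a generic illustration rather than a method taken from the survey, the sketch below implements one graph-convolution step in the widely used symmetric-normalized form H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), where A is the channel adjacency matrix, D is the degree matrix of A + I, H holds per-channel features, and W is a weight matrix. The chain-shaped adjacency, features, and weights are made-up toy values.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(adj: Matrix, h: Matrix, w: Matrix) -> Matrix:
    n = len(adj)
    # Add self-loops so each channel also keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization: entry (i, j) divided by sqrt(d_i * d_j).
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, h), w)
    return [[max(0.0, v) for v in row] for row in out]  # ReLU


# Toy graph: 3 channels in a chain (0-1, 1-2), 2 features per channel.
adj = [[0.0, 1.0, 0.0],
       [1.0, 0.0, 1.0],
       [0.0, 1.0, 0.0]]
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w = [[1.0], [1.0]]  # project 2 features to 1
print(gcn_layer(adj, h, w))
```

How `adj` is built (fixed electrode layout, signal correlation, or learned weights) is exactly the graph-construction choice the survey categorizes; the propagation step itself stays the same.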

An EEG Channel Selection Framework for Driver Drowsiness Detection via Interpretability Guidance

Apr 26, 2023

Abstract:Drowsy driving has a crucial influence on driving safety, creating an urgent demand for driver drowsiness detection. Electroencephalogram (EEG) signals can accurately reflect the mental fatigue state and have therefore been widely studied in drowsiness monitoring. However, raw EEG data are inherently noisy and redundant, a problem neglected by existing works that train models on either single-channel or full-head EEG data, which limits the performance of driver drowsiness detection. In this paper, we propose the first Interpretability-guided Channel Selection (ICS) framework for the driver drowsiness detection task. Specifically, we design a two-stage training strategy to progressively select the key contributing channels with the guidance of interpretability. In the first stage, we train a teacher network using full-head EEG data. We then apply class activation mapping (CAM) to the trained teacher model to highlight the high-contributing EEG channels and propose a channel voting scheme to select the top N contributing EEG channels. Finally, in the second stage, we train a student network on the selected EEG channels for driver drowsiness detection. Experiments on a public dataset demonstrate that our method is highly applicable and can significantly improve the performance of cross-subject driver drowsiness detection.
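The selection step between the two training stages turns per-sample CAM scores into one fixed channel subset. The abstract does not detail the voting rule; a minimal sketch, assuming each sample casts one vote for each of its `k` highest-scoring channels and the `top_n` channels with the most votes are kept, looks like this. The CAM scores are toy numbers, and `vote_channels`, `k`, and tie-breaking by lower channel index are hypothetical choices.

```python
from collections import Counter
from typing import List


def vote_channels(cam_scores: List[List[float]], k: int, top_n: int) -> List[int]:
    """cam_scores[s][c] is the CAM contribution of channel c for sample s."""
    votes = Counter()
    for scores in cam_scores:
        # Each sample votes for its k highest-contributing channels.
        ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
        votes.update(ranked[:k])
    # Keep the top_n channels by vote count; ties broken by lower channel index.
    return sorted(votes, key=lambda c: (-votes[c], c))[:top_n]


# Three samples, five channels (toy CAM scores).
cams = [[0.9, 0.1, 0.7, 0.2, 0.0],
        [0.8, 0.3, 0.1, 0.6, 0.0],
        [0.2, 0.1, 0.9, 0.7, 0.0]]
print(vote_channels(cams, k=2, top_n=3))  # [0, 2, 3]
```

Voting over many samples makes the selected subset less sensitive to any single noisy CAM map than ranking channels by one sample's scores would be.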

Interpretable and Robust AI in EEG Systems: A Survey

Apr 21, 2023

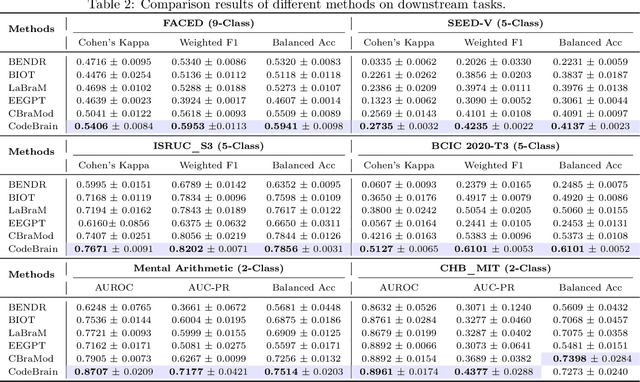

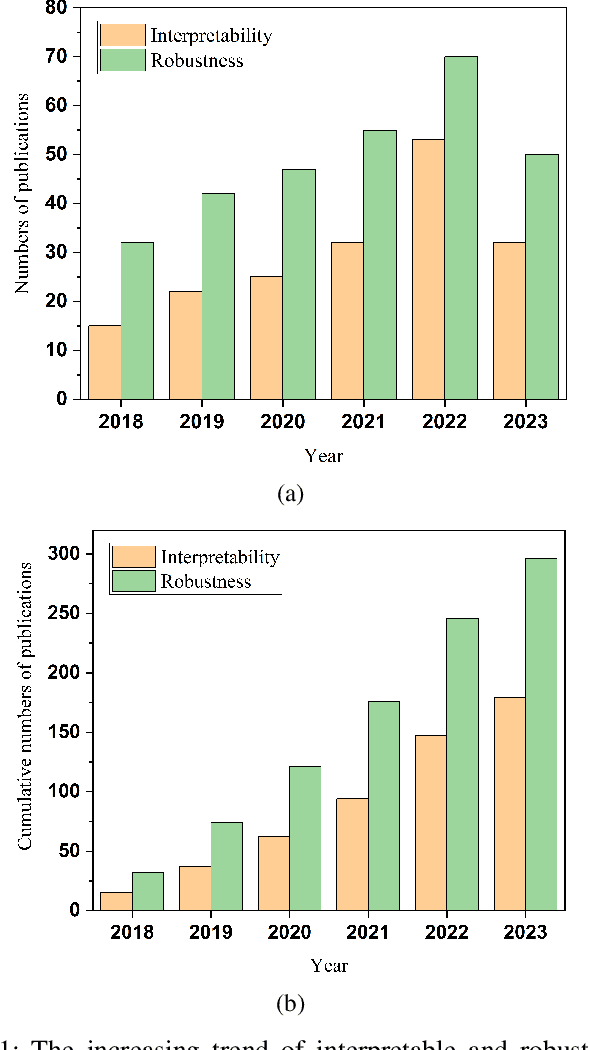

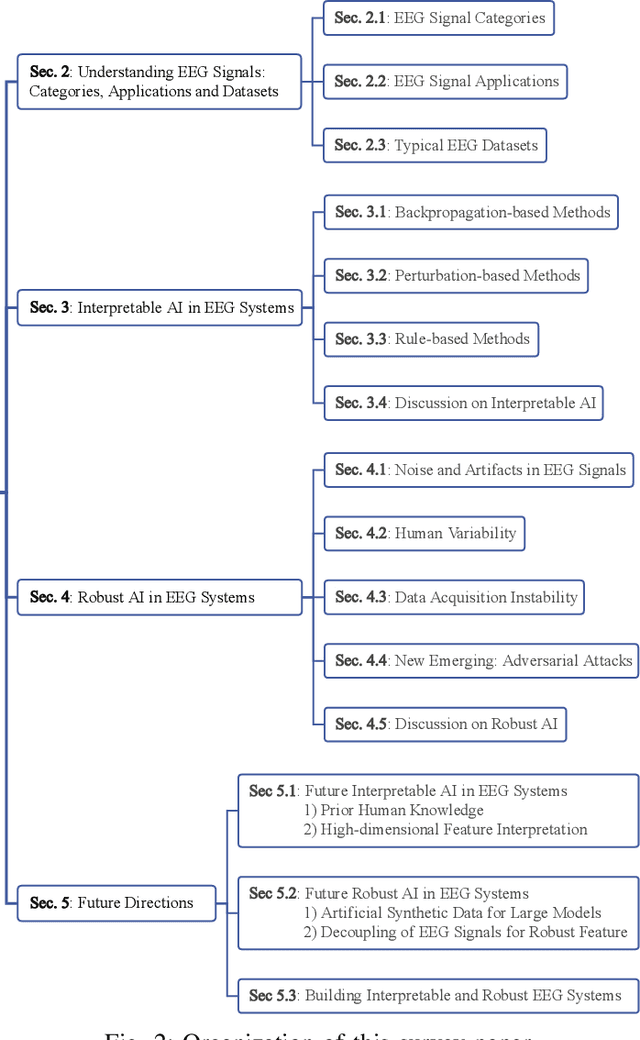

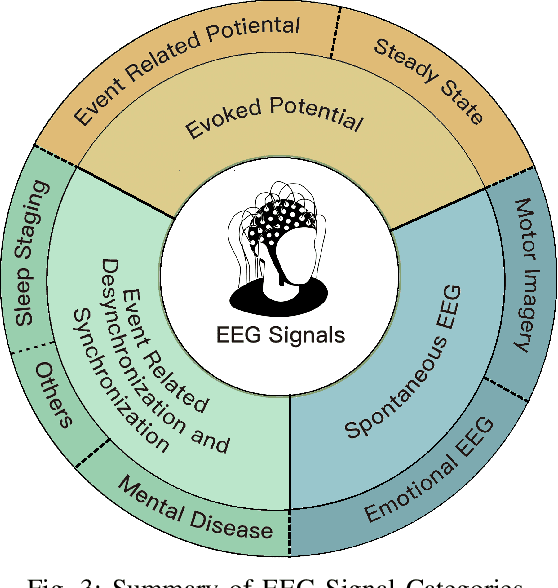

Abstract:The close coupling of artificial intelligence (AI) and electroencephalography (EEG) has substantially advanced human-computer interaction (HCI) technologies in the AI era. Compared with traditional EEG systems, AI-based EEG systems make interpretability and robustness particularly crucial. Interpretability clarifies the inner working mechanisms of AI models and can thus gain the trust of users. Robustness reflects the reliability of AI against attacks and perturbations, which is essential for sensitive and fragile EEG signals. As a result, the interpretability and robustness of AI in EEG systems have attracted increasing attention, and research on them has made great progress recently. However, there is still no survey covering recent advances in this field. In this paper, we present the first comprehensive survey and summarize the interpretable and robust AI techniques for EEG systems. Specifically, we first propose a taxonomy of interpretability that categorizes it into three types: backpropagation, perturbation, and inherently interpretable methods. We then classify the robustness mechanisms into four classes: noise and artifacts, human variability, data acquisition instability, and adversarial attacks. Finally, we identify several critical and unresolved challenges for interpretable and robust AI in EEG systems and discuss their future directions.
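Of the three interpretability families in this taxonomy, perturbation methods are the simplest to illustrate: perturb part of the input and measure how much the model's output changes. The sketch below uses channel occlusion (replacing one channel with zeros) on a toy linear scorer; the scorer, its weights, the zero baseline, and the function names are assumptions for illustration, not a method drawn from the survey.

```python
from typing import Callable, List

Signal = List[List[float]]  # signal[c][t]: channel c, time step t


def occlusion_importance(model: Callable[[Signal], float], x: Signal) -> List[float]:
    """Importance of channel c = drop in model score when channel c is zeroed out."""
    base = model(x)
    scores = []
    for c in range(len(x)):
        occluded = [row if i != c else [0.0] * len(row) for i, row in enumerate(x)]
        scores.append(base - model(occluded))
    return scores


# Toy scorer: weighted sum of per-channel mean amplitude (hypothetical weights).
WEIGHTS = [2.0, 0.0, 1.0]


def toy_model(x: Signal) -> float:
    return sum(w * sum(row) / len(row) for w, row in zip(WEIGHTS, x))


x = [[1.0, 1.0], [5.0, 5.0], [2.0, 2.0]]
print(occlusion_importance(toy_model, x))  # [2.0, 0.0, 2.0]
```

Channel 1 has the largest amplitude but zero importance because the model ignores it, which is the kind of distinction perturbation methods expose without access to gradients.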