Xinliang Zhou

EEG-DLite: Dataset Distillation for Efficient Large EEG Model Training

Dec 13, 2025

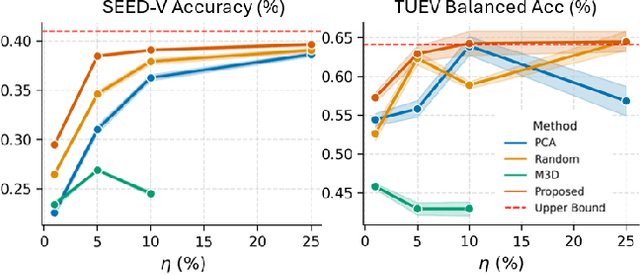

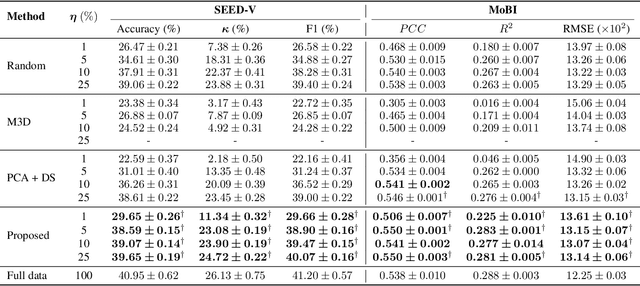

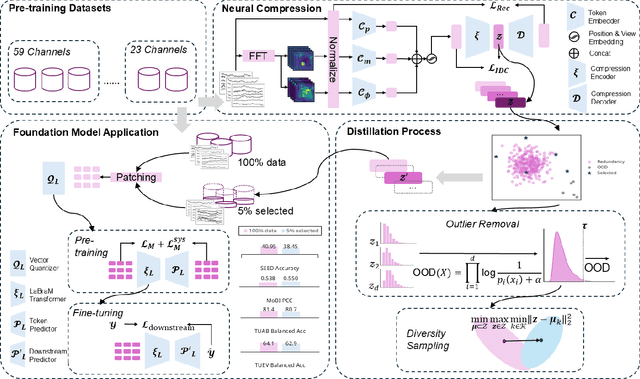

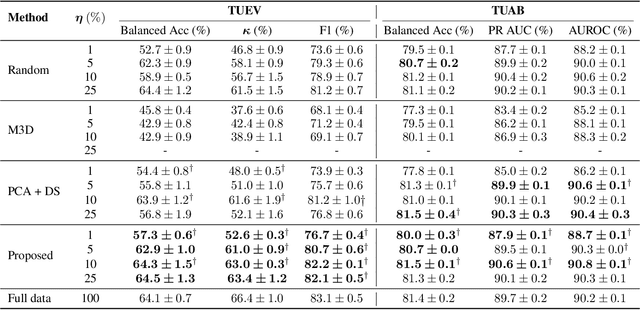

Abstract: Large-scale EEG foundation models have shown strong generalization across a range of downstream tasks, but their training remains resource-intensive due to the volume and variable quality of EEG data. In this work, we introduce EEG-DLite, a data distillation framework that enables more efficient pre-training by selectively removing noisy and redundant samples from large EEG datasets. EEG-DLite begins by encoding EEG segments into compact latent representations using a self-supervised autoencoder, allowing sample selection to be performed efficiently and with reduced sensitivity to noise. Based on these representations, EEG-DLite filters out outliers and minimizes redundancy, resulting in a smaller yet informative subset that retains the diversity essential for effective foundation model training. Through extensive experiments, we demonstrate that training on only 5 percent of a 2,500-hour dataset curated with EEG-DLite yields performance comparable to, and in some cases better than, training on the full dataset across multiple downstream tasks. To our knowledge, this is the first systematic study of pre-training data distillation in the context of EEG foundation models. EEG-DLite provides a scalable and practical path toward more effective and efficient physiological foundation modeling. The code is available at https://github.com/t170815518/EEG-DLite.
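
The abstract describes selection in latent space as two steps: filter outliers, then reduce redundancy. The sketch below illustrates that general idea only. The abstract does not say which algorithms EEG-DLite uses, so the centroid-distance outlier rule, the greedy farthest-point selection, and the names `select_subset`, `outlier_frac`, and `keep` are all assumptions made for illustration. The latent vectors are stand-ins for autoencoder outputs.

```python
import math

def euclid(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def select_subset(latents, outlier_frac=0.1, keep=3):
    """Hypothetical two-step selection over latent vectors.

    Step 1 drops the `outlier_frac` of samples farthest from the centroid.
    Step 2 greedily picks `keep` mutually distant inliers (farthest-point
    selection), so near-duplicates are unlikely to be chosen together.
    Returns the sorted indices of the selected samples.
    """
    n, dim = len(latents), len(latents[0])
    centroid = [sum(v[d] for v in latents) / n for d in range(dim)]

    # Step 1: rank by distance to the centroid and drop the farthest samples.
    order = sorted(range(n), key=lambda i: euclid(latents[i], centroid))
    inliers = order[: n - int(n * outlier_frac)]

    # Step 2: start from the inlier nearest the centroid, then repeatedly
    # add the inlier whose distance to the chosen set is largest.
    chosen = [inliers[0]]
    min_dist = {i: euclid(latents[i], latents[chosen[0]]) for i in inliers}
    while len(chosen) < min(keep, len(inliers)):
        nxt = max(inliers, key=lambda i: min_dist[i])
        chosen.append(nxt)
        for i in inliers:
            min_dist[i] = min(min_dist[i], euclid(latents[i], latents[nxt]))
    return sorted(chosen)

# Toy 2-D "latents": two tight clusters, one mid point, one gross outlier.
latents = [(0, 0), (0.1, 0), (0, 0.1), (5, 5), (5.1, 5), (5, 5.1),
           (2.5, 2.5), (100, 100), (0.05, 0.05), (5.05, 5.05)]
selected = select_subset(latents, outlier_frac=0.1, keep=3)
print(selected)
```

On this toy input the gross outlier is discarded and the three selected indices come from different regions of the latent space rather than from a single cluster.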

ECHO: Toward Contextual Seq2Seq Paradigms in Large EEG Models

Sep 26, 2025

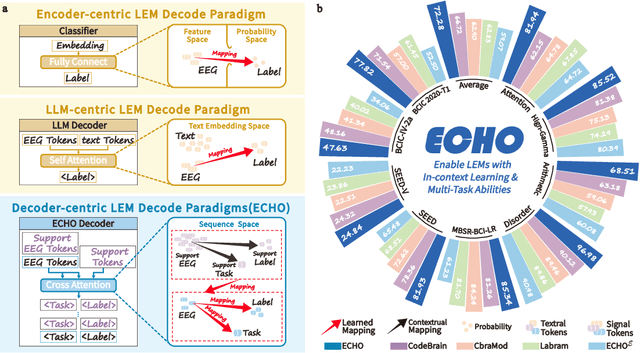

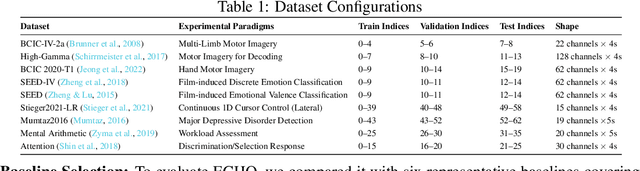

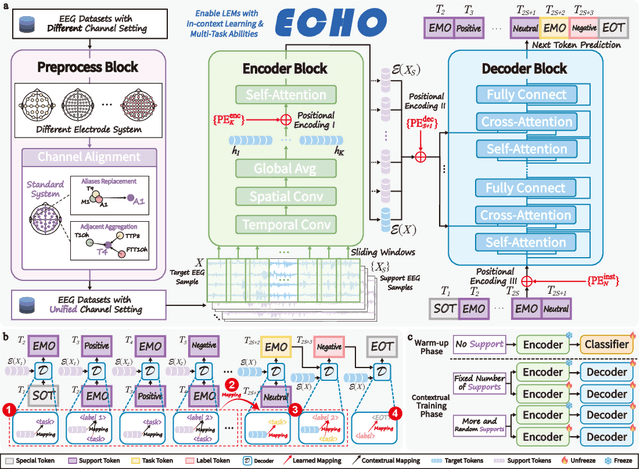

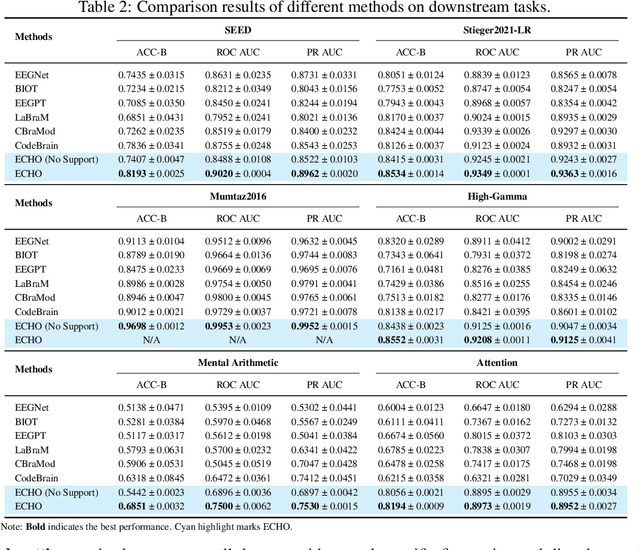

Abstract: Electroencephalography (EEG), with its broad range of applications, necessitates models that can generalize effectively across various tasks and datasets. Large EEG Models (LEMs) address this by pretraining encoder-centric architectures on large-scale unlabeled data to extract universal representations. While effective, these models lack decoders of comparable capacity, limiting the full utilization of the learned features. To address this issue, we introduce ECHO, a novel decoder-centric LEM paradigm that reformulates EEG modeling as sequence-to-sequence learning. ECHO captures layered relationships among signals, labels, and tasks within sequence space, while incorporating discrete support samples to construct contextual cues. This design equips ECHO with in-context learning, enabling dynamic adaptation to heterogeneous tasks without parameter updates. Extensive experiments across multiple datasets demonstrate that, even with basic model components, ECHO consistently outperforms state-of-the-art single-task LEMs in multi-task settings, showing superior generalization and adaptability.

BrainPro: Towards Large-scale Brain State-aware EEG Representation Learning

Sep 26, 2025

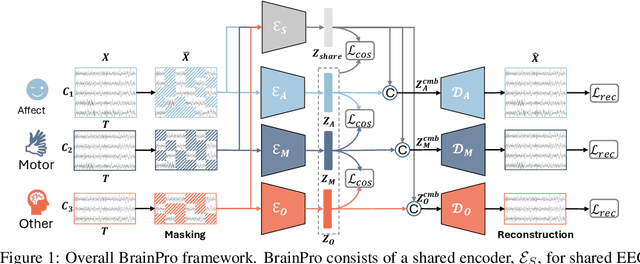

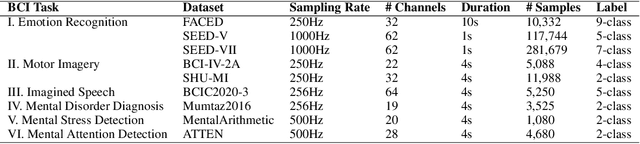

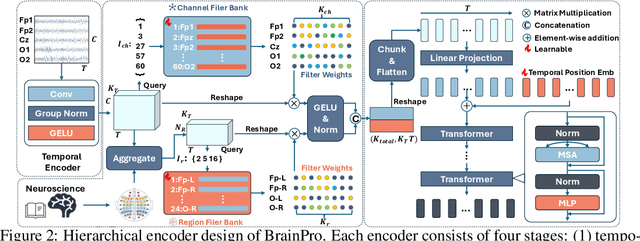

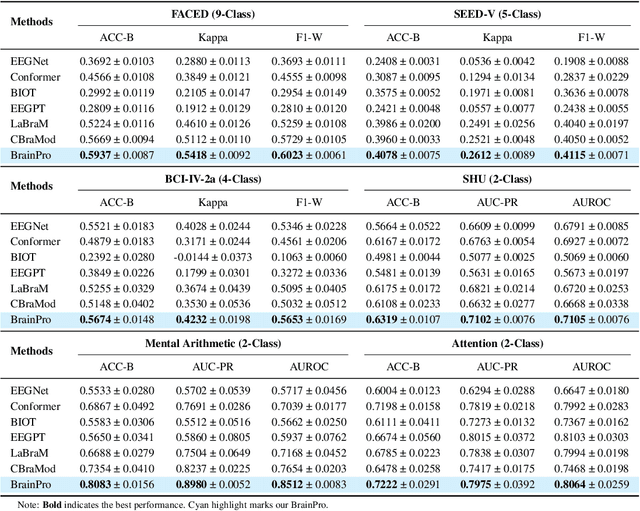

Abstract: Electroencephalography (EEG) is a non-invasive technique for recording brain electrical activity, widely used in brain-computer interfaces (BCIs) and healthcare. Recent EEG foundation models trained on large-scale datasets have shown improved performance and generalizability over traditional decoding methods, yet significant challenges remain. Existing models often fail to explicitly capture channel-to-channel and region-to-region interactions, which are critical sources of information inherently encoded in EEG signals. Due to varying channel configurations across datasets, they either approximate spatial structure with self-attention or restrict training to a limited set of common channels, sacrificing flexibility and effectiveness. Moreover, although EEG datasets reflect diverse brain states, such as emotional and motor states, current models rarely learn state-aware representations during self-supervised pre-training. To address these gaps, we propose BrainPro, a large EEG model that introduces a retrieval-based spatial learning block to flexibly capture channel- and region-level interactions across varying electrode layouts, and a brain state-decoupling block that enables state-aware representation learning through parallel encoders with decoupling and region-aware reconstruction losses. This design allows BrainPro to adapt seamlessly to diverse tasks and hardware settings. Pre-trained on an extensive EEG corpus, BrainPro achieves state-of-the-art performance and robust generalization across nine public BCI datasets. Our code and pre-trained weights will be released.

Fuzzy Information Evolution with Three-Way Decision in Social Network Group Decision-Making

May 22, 2025

Abstract: In group decision-making (GDM) scenarios, uncertainty, dynamic social structures, and vague information present major challenges for traditional opinion dynamics models. To address these issues, this study proposes a novel social network group decision-making (SNGDM) framework that integrates three-way decision (3WD) theory, dynamic network reconstruction, and linguistic opinion representation. First, the 3WD mechanism is introduced to explicitly model hesitation and ambiguity in agent judgments, thereby preventing irrational decisions. Second, a connection adjustment rule based on opinion similarity is developed, enabling agents to adaptively update their communication links and better reflect the evolving nature of social relationships. Third, linguistic terms are used to describe agent opinions, allowing the model to handle subjective, vague, or incomplete information more effectively. Finally, an integrated multi-agent decision-making framework is constructed, which simultaneously considers individual uncertainty, opinion evolution, and network dynamics. The proposed model is applied to a multi-UAV cooperative decision-making scenario, where simulation results and consensus analysis demonstrate its effectiveness. Experimental comparisons further verify the advantages of the algorithm in enhancing system stability and representing realistic decision-making behaviors.

SelectiveFinetuning: Enhancing Transfer Learning in Sleep Staging through Selective Domain Alignment

Jan 07, 2025

Abstract: In practical sleep stage classification, a key challenge is the variability of EEG data across different subjects and environments. Differences in physiology, age, health status, and recording conditions can lead to domain shifts between data. These domain shifts often result in decreased model accuracy and reliability, particularly when the model is applied to new data with characteristics different from those it was originally trained on, which is a typical manifestation of negative transfer. To address this, we propose SelectiveFinetuning. Our method utilizes a pretrained Multi-Resolution Convolutional Neural Network (MRCNN) to extract EEG features, capturing the distinctive characteristics of different sleep stages. To mitigate the effect of domain shifts, we introduce a domain alignment mechanism that employs the Earth Mover's Distance (EMD) to evaluate and select source domain data closely matching the target domain. By finetuning the model on the selected source data, SelectiveFinetuning enhances the model's performance on target domains that exhibit domain shifts relative to the training data. Experimental results show that our method outperforms existing baselines, offering greater robustness and adaptability in practical scenarios where data distributions are often unpredictable.
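
As a rough illustration of EMD-based source selection, the sketch below ranks source domains by their distance to a target domain and keeps the closest ones. It is a simplification under stated assumptions: each domain is reduced to a list of scalar feature values, and all lists have equal length. In that one-dimensional, equal-size case the Earth Mover's Distance equals the mean absolute difference between the sorted samples. The abstract applies EMD to MRCNN features and does not give these details, so `emd_1d`, `select_sources`, and the `subj_*` names are hypothetical.

```python
def emd_1d(xs, ys):
    """Earth Mover's Distance between two equal-size 1-D samples.

    For equal-size empirical distributions on the real line, the optimal
    transport plan matches sorted values pairwise.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)

def select_sources(sources, target, k):
    """Return the names of the k source domains closest to the target."""
    ranked = sorted(sources, key=lambda name: emd_1d(sources[name], target))
    return ranked[:k]

# Toy scalar features per source subject (illustrative values only).
target = [0.0, 1.0, 2.0, 3.0]
sources = {
    "subj_a": [0.1, 1.1, 2.1, 3.1],   # close to the target
    "subj_b": [5.0, 6.0, 7.0, 8.0],   # strongly shifted
    "subj_c": [3.0, 0.0, 2.5, 1.0],   # close, given in a different order
}
print(select_sources(sources, target, k=2))
```

Only the selected sources would then be used for finetuning. The strongly shifted `subj_b` is left out, which is the intuition behind avoiding negative transfer.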

BiT-MamSleep: Bidirectional Temporal Mamba for EEG Sleep Staging

Nov 03, 2024

Abstract: In this paper, we address the challenges in automatic sleep stage classification, particularly the high computational cost, inadequate modeling of bidirectional temporal dependencies, and class imbalance issues faced by Transformer-based models. To address these limitations, we propose BiT-MamSleep, a novel architecture that integrates the Triple-Resolution CNN (TRCNN) for efficient multi-scale feature extraction with the Bidirectional Mamba (BiMamba) mechanism, which models both short- and long-term temporal dependencies through bidirectional processing of EEG data. Additionally, BiT-MamSleep incorporates an Adaptive Feature Recalibration (AFR) module and a temporal enhancement block to dynamically refine feature importance, optimizing classification accuracy without increasing computational complexity. To further improve robustness, we apply optimization techniques such as Focal Loss and SMOTE to mitigate class imbalance. Extensive experiments on four public datasets demonstrate that BiT-MamSleep significantly outperforms state-of-the-art methods, particularly in handling long EEG sequences and addressing class imbalance, leading to more accurate and scalable sleep stage classification.
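
For readers unfamiliar with Focal Loss, the sketch below shows its standard per-sample form, `-alpha * (1 - p_t)**gamma * log(p_t)`, where `p_t` is the predicted probability of the true class. The defaults `gamma=2.0` and `alpha=1.0` are common illustrative choices, not values reported for BiT-MamSleep, and the function name `focal_loss` is an assumption of this example.

```python
import math

def focal_loss(p_true, gamma=2.0, alpha=1.0):
    """Focal loss for one sample.

    p_true: predicted probability assigned to the sample's true class.
    gamma:  focusing parameter; gamma=0 recovers (alpha-weighted) cross-entropy.
    alpha:  class weighting factor.
    """
    p = min(max(p_true, 1e-12), 1.0)  # clamp to avoid log(0)
    return -alpha * (1.0 - p) ** gamma * math.log(p)

# A confidently correct prediction contributes far less loss than a hard one,
# so frequent, easy classes dominate training less than with cross-entropy.
easy = focal_loss(0.9)
hard = focal_loss(0.3)
print(easy, hard)
```

With `gamma=0` the modulating factor is 1 and the expression reduces to plain cross-entropy. Larger `gamma` values down-weight well-classified samples more strongly.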

A Comprehensive Survey on EEG-Based Emotion Recognition: A Graph-Based Perspective

Aug 13, 2024

Abstract: Compared to other modalities, electroencephalogram (EEG) based emotion recognition can intuitively respond to emotional patterns in the human brain and, therefore, has become one of the most studied tasks in affective computing. Emotions are physiological and psychological state changes that arise from brain region connectivity, so emotion recognition focuses more on the dependency between brain regions than on specific brain regions. A significant trend is the application of graphs to encapsulate such dependency as dynamic functional connections between nodes across temporal and spatial dimensions. Concurrently, the neuroscientific underpinnings behind this dependency endow the application of graphs in this field with a distinctive significance. However, there is neither a comprehensive review nor a tutorial for constructing emotion-relevant graphs in EEG-based emotion recognition. In this paper, we present a comprehensive survey of these studies, delivering a systematic review of graph-related methods in this field from a methodological perspective. We propose a unified framework for graph applications in this field and categorize these methods on this basis. Finally, based on previous studies, we also present several open challenges and future directions in this field.

Graph Neural Networks in EEG-based Emotion Recognition: A Survey

Feb 02, 2024

Abstract: Compared to other modalities, EEG-based emotion recognition can intuitively respond to the emotional patterns in the human brain and, therefore, has become one of the most widely studied tasks in the brain-computer interface field. Since dependencies within brain regions are closely related to emotion, a significant trend is to develop Graph Neural Networks (GNNs) for EEG-based emotion recognition. However, brain region dependencies in emotional EEG have physiological bases that distinguish GNNs in this field from those in other time series fields. Besides, there is neither a comprehensive review nor guidance for constructing GNNs in EEG-based emotion recognition. In this survey, our categorization reveals the commonalities and differences of existing approaches under a unified framework of graph construction. We analyze and categorize methods from three stages in the framework to provide clear guidance on constructing GNNs in EEG-based emotion recognition. In addition, we discuss several open challenges and future directions, such as temporal fully-connected graphs and graph condensation.

EENED: End-to-End Neural Epilepsy Detection based on Convolutional Transformer

May 17, 2023

Abstract: Recently, Transformer- and convolutional neural network (CNN)-based models have shown promising results in EEG signal processing. Transformer models can capture the global dependencies in EEG signals through a self-attention mechanism, while CNN models can capture local features such as sawtooth waves. In this work, we propose an end-to-end neural epilepsy detection model, EENED, that combines CNN and Transformer. Specifically, by introducing the convolution module into the Transformer encoder, EENED can learn the time-dependent relationships among the patient's EEG signal features and detect local EEG abnormalities closely related to epilepsy, such as the appearance of spikes and scattered sharp and slow waves. Our proposed framework combines the ability of Transformer and CNN to capture features of EEG signals at different scales and holds promise for improving the accuracy and reliability of epilepsy detection. Our source code will be released soon on GitHub.

EEG-based Sleep Staging with Hybrid Attention

May 16, 2023

Abstract: Sleep staging is critical for assessing sleep quality and diagnosing sleep disorders. However, capturing both the spatial and temporal relationships within electroencephalogram (EEG) signals during different sleep stages remains challenging. In this paper, we propose a novel framework called the Hybrid Attention EEG Sleep Staging (HASS) Framework. Specifically, we propose a well-designed spatio-temporal attention mechanism to adaptively assign weights to inter-channel and intra-channel EEG segments based on the spatio-temporal relationship of the brain during different sleep stages. Experimental results on the MASS and ISRUC datasets demonstrate that HASS can significantly improve typical sleep staging networks. Our proposed framework alleviates the difficulties of capturing the spatio-temporal relationship of EEG signals during sleep staging and holds promise for improving the accuracy and reliability of sleep assessment in both clinical and research settings.
