Jinan Zhou

ECHO: Toward Contextual Seq2Seq Paradigms in Large EEG Models

Sep 26, 2025

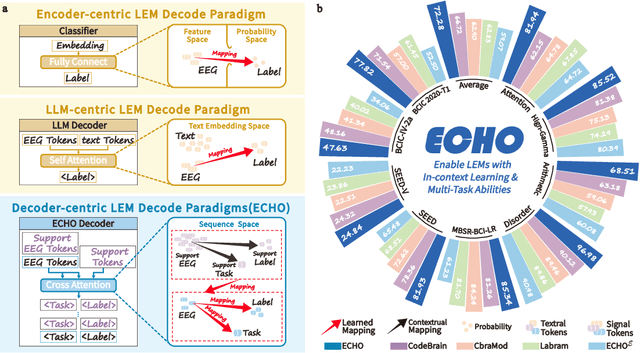

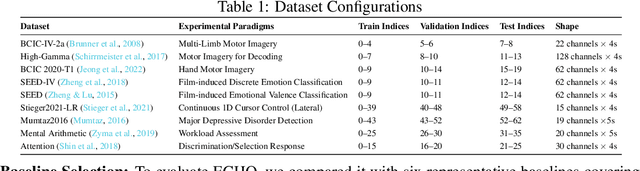

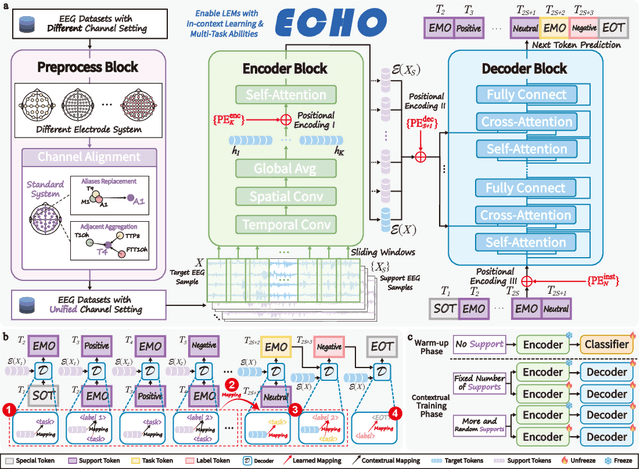

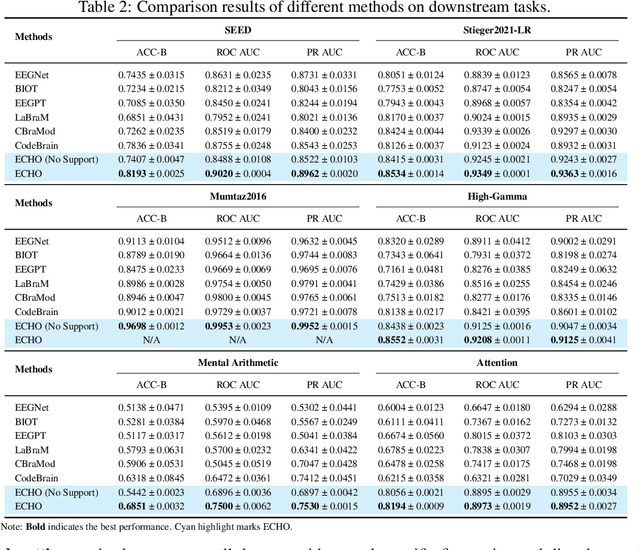

Abstract: Electroencephalography (EEG), with its broad range of applications, necessitates models that can generalize effectively across various tasks and datasets. Large EEG Models (LEMs) address this by pretraining encoder-centric architectures on large-scale unlabeled data to extract universal representations. While effective, these models lack decoders of comparable capacity, limiting the full utilization of the learned features. To address this issue, we introduce ECHO, a novel decoder-centric LEM paradigm that reformulates EEG modeling as sequence-to-sequence learning. ECHO captures layered relationships among signals, labels, and tasks within sequence space, while incorporating discrete support samples to construct contextual cues. This design equips ECHO with in-context learning, enabling dynamic adaptation to heterogeneous tasks without parameter updates. Extensive experiments across multiple datasets demonstrate that, even with basic model components, ECHO consistently outperforms state-of-the-art single-task LEMs in multi-task settings, showing superior generalization and adaptability.

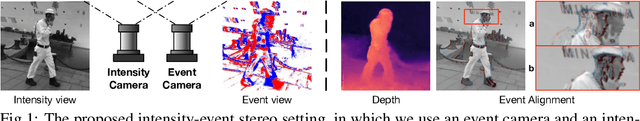

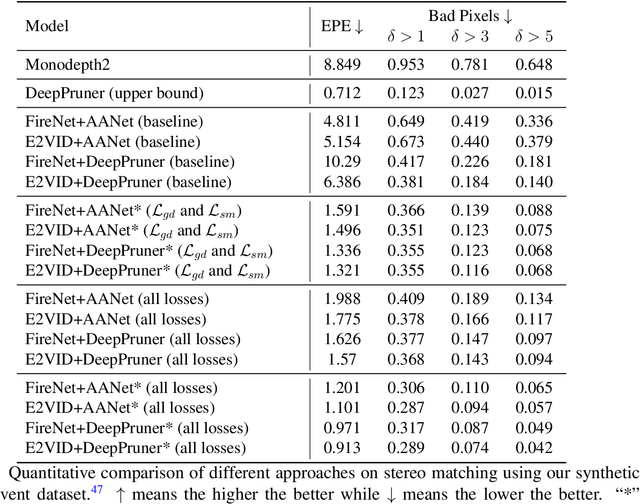

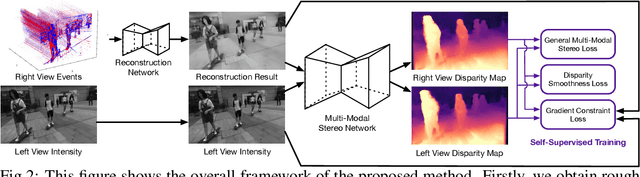

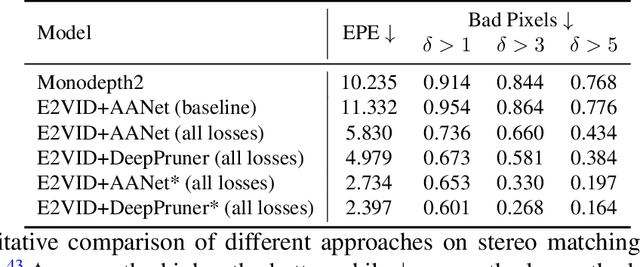

Self-Supervised Intensity-Event Stereo Matching

Nov 01, 2022

Abstract: Event cameras are novel bio-inspired vision sensors that output pixel-level intensity changes with microsecond accuracy, a high dynamic range, and low power consumption. Despite these advantages, event cameras cannot be directly applied to computational imaging tasks because they cannot capture high-quality intensity and events simultaneously. This paper aims to connect a standalone event camera and a modern intensity camera so that applications can take advantage of both sensors. We establish this connection through a multi-modal stereo matching task. We first convert events to a reconstructed image and extend existing stereo networks to this multi-modal setting. We propose a self-supervised method to train the multi-modal stereo network without using ground truth disparity data. A structure loss calculated on image gradients enables self-supervised learning on such multi-modal data. Exploiting the internal stereo constraint between views with different modalities, we introduce general stereo loss functions, including a disparity cross-consistency loss and an internal disparity loss, leading to improved performance and robustness compared to existing approaches. The experiments demonstrate the effectiveness of the proposed method, especially the proposed general stereo loss functions, on both synthetic and real datasets. Finally, we shed light on employing the aligned events and intensity images in downstream tasks such as video interpolation.

NASIB: Neural Architecture Search withIn Budget

Oct 19, 2019

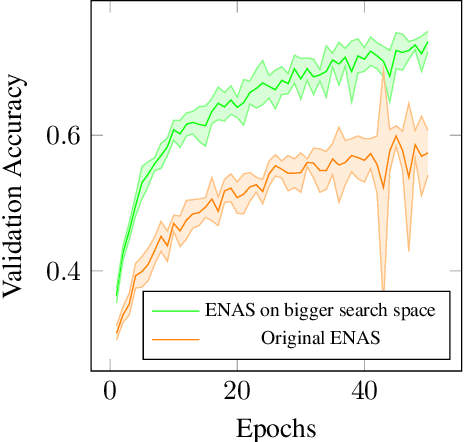

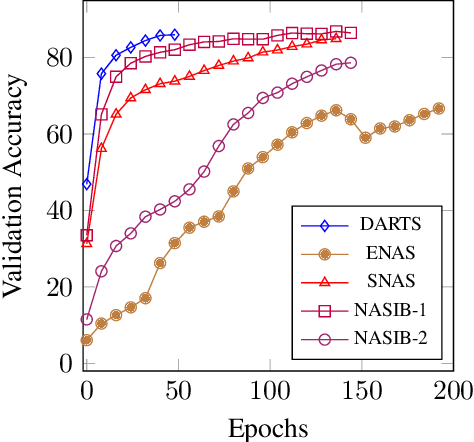

Abstract: Neural Architecture Search (NAS) represents a class of methods that generate the optimal neural network architecture, typically by iterating over candidate architectures until convergence on some particular metric such as validation loss. These methods are constrained by the available computation resources, especially in enterprise environments. In this paper, we propose a new approach for NAS, called NASIB, which adapts and attunes to the computation resources (budget) available by varying the exploration vs. exploitation trade-off. We reduce expert bias by searching over an augmented search space induced by Superkernels. The proposed method can provide architecture search that is useful for different computation resources and for different domains beyond image classification of natural images, where we lack bespoke architecture motifs and domain expertise. We show, on CIFAR10, that it is possible to search over a space that comprises 12x more candidate operations than the traditional prior art in just 1.5 GPU days, while reaching close to state-of-the-art accuracy. While our method searches over an exponentially larger search space, it could lead to novel architectures that require less domain expertise, compared to the majority of the existing methods.
