Jingying Ma

A Foundation Model for Chest X-ray Interpretation with Grounded Reasoning via Online Reinforcement Learning

Sep 04, 2025

Abstract:Medical foundation models (FMs) have shown tremendous promise amid the rapid advancements in artificial intelligence (AI) technologies. However, current medical FMs typically generate answers in a black-box manner, lacking transparent reasoning processes and locally grounded interpretability, which hinders their practical clinical deployment. To this end, we introduce DeepMedix-R1, a holistic medical FM for chest X-ray (CXR) interpretation. It leverages a sequential training pipeline: the model is initially fine-tuned on curated CXR instruction data to equip it with fundamental CXR interpretation capabilities, then exposed to high-quality synthetic reasoning samples to enable cold-start reasoning, and finally refined via online reinforcement learning to enhance both grounded reasoning quality and generation performance. Thus, the model produces both an answer and reasoning steps tied to the image's local regions for each query. Quantitative evaluation demonstrates substantial improvements in report generation (e.g., 14.54% and 31.32% over LLaVA-Rad and MedGemma) and visual question answering (e.g., 57.75% and 23.06% over MedGemma and CheXagent) tasks. To facilitate robust assessment, we propose Report Arena, a benchmarking framework using advanced language models to evaluate answer quality, further highlighting the superiority of DeepMedix-R1. Expert review of generated reasoning steps reveals greater interpretability and clinical plausibility compared to the established Qwen2.5-VL-7B model (0.7416 vs. 0.2584 overall preference). Collectively, our work advances medical FM development toward holistic, transparent, and clinically actionable modeling for CXR interpretation.

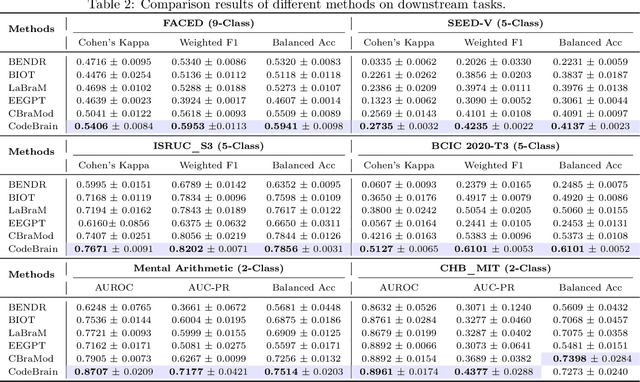

CodeBrain: Bridging Decoupled Tokenizer and Multi-Scale Architecture for EEG Foundation Model

Jun 10, 2025

Abstract:Electroencephalography (EEG) provides real-time insights into brain activity and is widely used in neuroscience. However, variations in channel configurations, sequence lengths, and task objectives limit the transferability of traditional task-specific models. Although recent EEG foundation models (EFMs) aim to learn generalizable representations, they struggle with limited heterogeneous representation capacity and inefficiency in capturing multi-scale brain dependencies. To address these challenges, we propose CodeBrain, an efficient EFM structurally aligned with brain organization, trained in two stages. (1) We introduce a TFDual-Tokenizer that independently tokenizes heterogeneous temporal and frequency components, enabling a quadratic expansion of the discrete representation space. This also offers a degree of interpretability through cross-domain token analysis. (2) We propose the EEGSSM, which combines a structured global convolution architecture and a sliding window attention mechanism to jointly model sparse long-range and local dependencies. Unlike fully connected Transformer models, EEGSSM better reflects the brain's small-world topology and efficiently captures EEG's inherent multi-scale structure. EEGSSM is trained with a masked self-supervised learning objective to predict the token indices produced by the TFDual-Tokenizer. Comprehensive experiments on 10 public EEG datasets demonstrate the generalizability of CodeBrain with linear probing. By offering biologically informed and interpretable EEG modeling, CodeBrain lays the foundation for future neuroscience research. Both code and pretraining weights will be released in a future version.
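The "quadratic expansion" mentioned in the CodeBrain abstract can be illustrated with a minimal sketch. The abstract does not give codebook sizes or say how temporal and frequency tokens are combined, so the sizes `K_T` and `K_F` and the helper functions below are hypothetical and serve only to show why two independent codebooks yield a multiplicative number of joint token pairs.

```python
from itertools import product


def joint_vocabulary_size(num_temporal_codes: int, num_frequency_codes: int) -> int:
    """Number of distinct (temporal, frequency) token pairs."""
    return num_temporal_codes * num_frequency_codes


def joint_token_id(temporal_id: int, frequency_id: int, num_frequency_codes: int) -> int:
    """Map a (temporal, frequency) pair to one integer id (row-major order)."""
    return temporal_id * num_frequency_codes + frequency_id


# Hypothetical codebook sizes, chosen only for illustration (not from the paper).
K_T, K_F = 4, 4

# Enumerate every pair of independently chosen codes and check the ids are unique.
pairs = list(product(range(K_T), range(K_F)))
ids = {joint_token_id(t, f, K_F) for t, f in pairs}

print(joint_vocabulary_size(K_T, K_F), len(ids))  # prints "16 16"
```

With two codebooks of equal size K, the joint space has K × K entries while only 2K code vectors need to be stored, which is one plausible reading of the claimed expansion.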

Simultaneous Polysomnography and Cardiotocography Reveal Temporal Correlation Between Maternal Obstructive Sleep Apnea and Fetal Hypoxia

Apr 17, 2025

Abstract:Background: Obstructive sleep apnea syndrome (OSAS) during pregnancy is common and can negatively affect fetal outcomes. However, studies on the immediate effects of maternal hypoxia on fetal heart rate (FHR) changes are lacking. Methods: We used time-synchronized polysomnography (PSG) and cardiotocography (CTG) data from two cohorts to analyze the correlation between maternal hypoxia and FHR changes (accelerations or decelerations). Maternal hypoxic event characteristics were analyzed using generalized linear modeling (GLM) to assess their associations with different FHR changes. Results: A total of 118 pregnant women participated. FHR changes were significantly associated with maternal hypoxia, primarily characterized by accelerations. A longer hypoxic duration correlated with more significant FHR accelerations (P < 0.05), while prolonged hypoxia and greater SpO2 drop were linked to FHR decelerations (P < 0.05). Both cohorts showed a transient increase in FHR during maternal hypoxia, which returned to baseline after the event resolved. Conclusion: Maternal hypoxia significantly affects FHR, suggesting that maternal OSAS may contribute to fetal hypoxia. These findings highlight the importance of maternal-fetal interactions and provide insights for future interventions.

Self-supervised Quantized Representation for Seamlessly Integrating Knowledge Graphs with Large Language Models

Jan 30, 2025

Abstract:Due to the natural gap between Knowledge Graph (KG) structures and natural language, the effective integration of holistic structural information of KGs with Large Language Models (LLMs) has emerged as a significant question. To this end, we propose a two-stage framework to learn and apply quantized codes for each entity, aiming for the seamless integration of KGs with LLMs. Firstly, a self-supervised quantized representation (SSQR) method is proposed to compress both KG structural and semantic knowledge into discrete codes (i.e., tokens) that align with the format of language sentences. We further design KG instruction-following data by viewing these learned codes as features to input directly to LLMs, thereby achieving seamless integration. The experimental results demonstrate that SSQR outperforms existing unsupervised quantized methods, producing more distinguishable codes. Further, the fine-tuned LLaMA2 and LLaMA3.1 also have superior performance on KG link prediction and triple classification tasks, utilizing only 16 tokens per entity instead of thousands in conventional prompting methods.

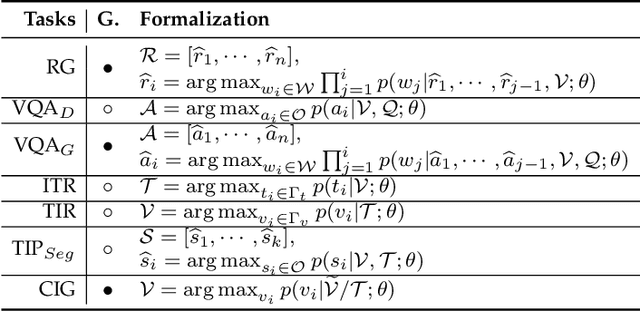

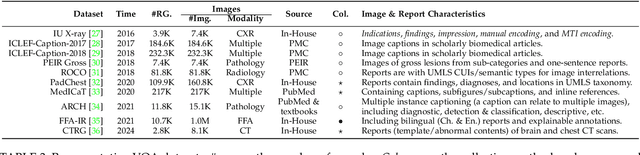

Has Multimodal Learning Delivered Universal Intelligence in Healthcare? A Comprehensive Survey

Aug 23, 2024

Abstract:The rapid development of artificial intelligence has constantly reshaped the field of intelligent healthcare and medicine. As a vital technology, multimodal learning has increasingly garnered interest due to data complementarity, comprehensive modeling form, and great application potential. Currently, numerous researchers are dedicating their attention to this field, conducting extensive studies and constructing abundant intelligent systems. Naturally, an open question arises: has multimodal learning delivered universal intelligence in healthcare? To answer the question, we adopt three unique viewpoints for a holistic analysis. Firstly, we conduct a comprehensive survey of the current progress of medical multimodal learning from the perspectives of datasets, task-oriented methods, and universal foundation models. Based on them, we further discuss the proposed question from five issues to explore the real impacts of advanced techniques in healthcare, from data and technologies to performance and ethics. The answer is that current technologies have NOT achieved universal intelligence and there remains a significant journey to undertake. Finally, in light of the above reviews and discussions, we point out ten potential directions for exploration towards the goal of universal intelligence in healthcare.

ST-USleepNet: A Spatial-Temporal Coupling Prominence Network for Multi-Channel Sleep Staging

Aug 21, 2024

Abstract:Sleep staging is critical for assessing sleep quality and diagnosing disorders. Recent advancements in artificial intelligence have driven the development of automated sleep staging models, which still face two significant challenges. 1) Simultaneously extracting prominent temporal and spatial sleep features from multi-channel raw signals, including characteristic sleep waveforms and salient spatial brain networks. 2) Capturing the spatial-temporal coupling patterns essential for accurate sleep staging. To address these challenges, we propose a novel framework named ST-USleepNet, comprising a spatial-temporal graph construction module (ST) and a U-shaped sleep network (USleepNet). The ST module converts raw signals into a spatial-temporal graph to model spatial-temporal couplings. The USleepNet utilizes a U-shaped structure originally designed for image segmentation. Similar to how image segmentation isolates significant targets, when applied to both raw sleep signals and ST module-generated graph data, USleepNet segments these inputs to extract prominent temporal and spatial sleep features simultaneously. Testing on three datasets demonstrates that ST-USleepNet outperforms existing baselines, and model visualizations confirm its efficacy in extracting prominent sleep features and temporal-spatial coupling patterns across various sleep stages. The code is available at: https://github.com/Majy-Yuji/ST-USleepNet.git.

Language Modeling on Tabular Data: A Survey of Foundations, Techniques and Evolution

Aug 20, 2024

Abstract:Tabular data, a prevalent data type across various domains, presents unique challenges due to its heterogeneous nature and complex structural relationships. Achieving high predictive performance and robustness in tabular data analysis holds significant promise for numerous applications. Influenced by recent advancements in natural language processing, particularly transformer architectures, new methods for tabular data modeling have emerged. Early techniques concentrated on pre-training transformers from scratch, often encountering scalability issues. Subsequently, methods leveraging pre-trained language models like BERT have been developed, which require less data and yield enhanced performance. The recent advent of large language models, such as GPT and LLaMA, has further revolutionized the field, facilitating more advanced and diverse applications with minimal fine-tuning. Despite the growing interest, a comprehensive survey of language modeling techniques for tabular data remains absent. This paper fills this gap by providing a systematic review of the development of language modeling for tabular data, encompassing: (1) a categorization of different tabular data structures and data types; (2) a review of key datasets used in model training and tasks used for evaluation; (3) a summary of modeling techniques including widely-adopted data processing methods, popular architectures, and training objectives; (4) the evolution from adapting traditional Pre-training/Pre-trained language models to the utilization of large language models; (5) an identification of persistent challenges and potential future research directions in language modeling for tabular data analysis. The GitHub page associated with this survey is available at: https://github.com/lanxiang1017/Language-Modeling-on-Tabular-Data-Survey.git.

Deep Learning with Information Fusion and Model Interpretation for Health Monitoring of Fetus based on Long-term Prenatal Electronic Fetal Heart Rate Monitoring Data

Jan 27, 2024

Abstract:Long-term fetal heart rate (FHR) monitoring during the antepartum period, increasingly popularized by electronic FHR monitoring, represents a growing approach in FHR monitoring. This kind of continuous monitoring, in contrast to the short-term one, collects an extended period of fetal heart data. This offers a more comprehensive understanding of the fetus's condition. However, the interpretation of long-term antenatal fetal heart monitoring is still in its early stages, lacking corresponding clinical standards. Furthermore, the substantial amount of data generated by continuous monitoring imposes a significant burden on clinical work when analyzed manually. To address the above challenges, this study develops an automatic analysis system named LARA (Long-term Antepartum Risk Analysis system) for continuous FHR monitoring, combining deep learning and information fusion methods. LARA's core is a well-established convolutional neural network (CNN) model. It processes long-term FHR data as input and generates a Risk Distribution Map (RDM) and Risk Index (RI) as the analysis results. We evaluate LARA on an internal test dataset; the performance metrics are as follows: AUC 0.872, accuracy 0.816, specificity 0.811, sensitivity 0.806, precision 0.271, and F1 score 0.415. In our study, we observe that long-term FHR monitoring data with higher RI is more likely to result in adverse outcomes (p=0.0021). In conclusion, this study introduces LARA, the first automated analysis system for long-term FHR monitoring, opening the way for further exploration of its clinical value.
