Zihan Zhu

Decoding Rewards in Competitive Games: Inverse Game Theory with Entropy Regularization

Jan 19, 2026

Abstract:Estimating the unknown reward functions driving agents' behaviors is of central interest in inverse reinforcement learning and game theory. To tackle this problem, we develop a unified framework for reward function recovery in two-player zero-sum matrix games and Markov games with entropy regularization, where we aim to reconstruct the underlying reward functions given observed players' strategies and actions. This task is challenging due to the inherent ambiguity of inverse problems, the non-uniqueness of feasible rewards, and limited observational data coverage. To address these challenges, we establish the reward function's identifiability using the quantal response equilibrium (QRE) under linear assumptions. Building upon this theoretical foundation, we propose a novel algorithm to learn reward functions from observed actions. Our algorithm works in both static and dynamic settings and is adaptable to incorporate different methods, such as Maximum Likelihood Estimation (MLE). We provide strong theoretical guarantees for the reliability and sample efficiency of our algorithm. Further, we conduct extensive numerical studies to demonstrate the practical effectiveness of the proposed framework, offering new insights into decision-making in competitive environments.

Statistical Inference for Fuzzy Clustering

Jan 06, 2026

Abstract:Clustering is a central tool in biomedical research for discovering heterogeneous patient subpopulations, where group boundaries are often diffuse rather than sharply separated. Traditional methods produce hard partitions, whereas soft clustering methods such as fuzzy $c$-means (FCM) allow mixed memberships and better capture uncertainty and gradual transitions. Despite the widespread use of FCM, principled statistical inference for fuzzy clustering remains limited. We develop a new framework for weighted fuzzy $c$-means (WFCM) for settings with potential cluster size imbalance. Cluster-specific weights rebalance the classical FCM criterion so that smaller clusters are not overwhelmed by dominant groups, and the weighted objective induces a normalized density model with scale parameter $\sigma$ and fuzziness parameter $m$. Estimation is performed via a blockwise majorize-minimize (MM) procedure that alternates closed-form membership and centroid updates with likelihood-based updates of $(\sigma, \mathbf{w})$. The intractable normalizing constant is approximated by importance sampling using a data-adaptive Gaussian mixture proposal. We further provide likelihood ratio tests for comparing cluster centers and bootstrap-based confidence intervals. We establish consistency and asymptotic normality of the maximum likelihood estimator, validate the method through simulations, and illustrate it using single-cell RNA-seq and Alzheimer's Disease Neuroimaging Initiative (ADNI) data. These applications demonstrate stable uncertainty quantification and biologically meaningful soft memberships, ranging from well-separated cell populations under imbalance to a graded AD versus non-AD continuum consistent with disease progression.

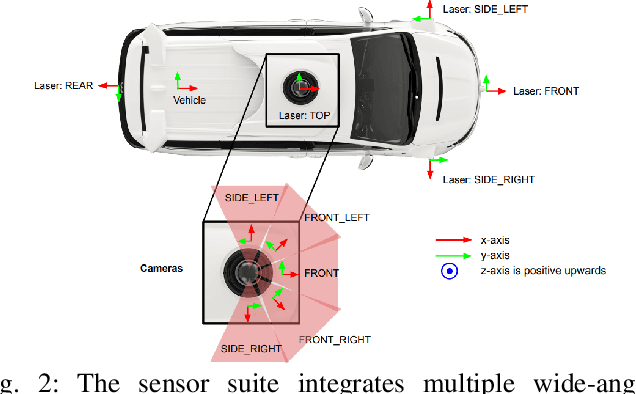

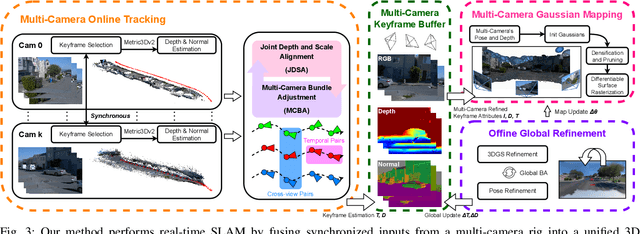

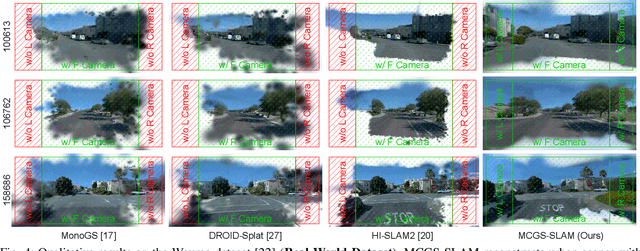

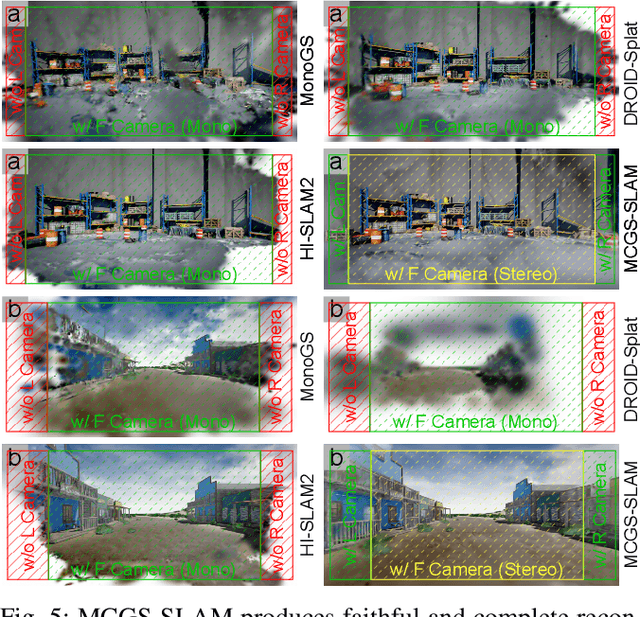

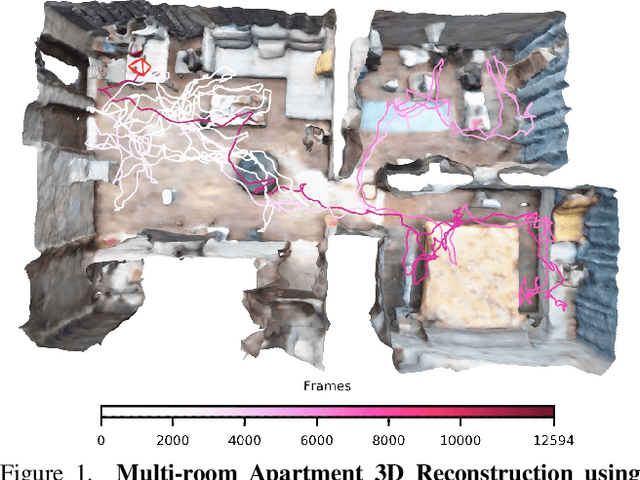

MCGS-SLAM: A Multi-Camera SLAM Framework Using Gaussian Splatting for High-Fidelity Mapping

Sep 17, 2025

Abstract:Recent progress in dense SLAM has primarily targeted monocular setups, often at the expense of robustness and geometric coverage. We present MCGS-SLAM, the first purely RGB-based multi-camera SLAM system built on 3D Gaussian Splatting (3DGS). Unlike prior methods relying on sparse maps or inertial data, MCGS-SLAM fuses dense RGB inputs from multiple viewpoints into a unified, continuously optimized Gaussian map. A multi-camera bundle adjustment (MCBA) jointly refines poses and depths via dense photometric and geometric residuals, while a scale consistency module enforces metric alignment across views using low-rank priors. The system supports RGB input and maintains real-time performance at large scale. Experiments on synthetic and real-world datasets show that MCGS-SLAM consistently yields accurate trajectories and photorealistic reconstructions, usually outperforming monocular baselines. Notably, the wide field of view from multi-camera input enables reconstruction of side-view regions that monocular setups miss, critical for safe autonomous operation. These results highlight the promise of multi-camera Gaussian Splatting SLAM for high-fidelity mapping in robotics and autonomous driving.

WildGS-SLAM: Monocular Gaussian Splatting SLAM in Dynamic Environments

Apr 04, 2025

Abstract:We present WildGS-SLAM, a robust and efficient monocular RGB SLAM system designed to handle dynamic environments by leveraging uncertainty-aware geometric mapping. Unlike traditional SLAM systems, which assume static scenes, our approach integrates depth and uncertainty information to enhance tracking, mapping, and rendering performance in the presence of moving objects. We introduce an uncertainty map, predicted by a shallow multi-layer perceptron and DINOv2 features, to guide dynamic object removal during both tracking and mapping. This uncertainty map enhances dense bundle adjustment and Gaussian map optimization, improving reconstruction accuracy. Our system is evaluated on multiple datasets and demonstrates artifact-free view synthesis. Results showcase WildGS-SLAM's superior performance in dynamic environments compared to state-of-the-art methods.

False Discovery Rate Control via Frequentist-assisted Horseshoe

Feb 08, 2025

Abstract:The horseshoe prior, a widely used and convenient alternative to the spike-and-slab prior, has proven to be an exceptional default global-local shrinkage prior in Bayesian inference and machine learning. However, designing tests with frequentist false discovery rate (FDR) control using the horseshoe prior or the general class of global-local shrinkage priors remains an open problem. In this paper, we propose a frequentist-assisted horseshoe procedure that not only resolves this long-standing FDR control issue for the high-dimensional normal means testing problem but also exhibits satisfactory finite-sample FDR control under any desired nominal level for both independent and correlated large-scale multiple tests. We carry out the frequentist-assisted horseshoe procedure in an easy and intuitive way by using the minimax estimator of the global parameter of the horseshoe prior while maintaining the remaining full Bayes vanilla horseshoe structure. Results from both intensive simulations under different sparsity levels and real-world data demonstrate that the frequentist-assisted horseshoe procedure consistently achieves robust finite-sample FDR control. Existing frequentist or Bayesian FDR control procedures can lose finite-sample FDR control in a variety of common sparse cases. Based on the intimate relationship between the minimax estimation and the level of FDR control discovered in this work, we point out potential generalizations to achieve FDR control for both more complicated models and the general global-local shrinkage prior family.

ProcTex: Consistent and Interactive Text-to-texture Synthesis for Procedural Models

Jan 28, 2025

Abstract:Recent advances in 2D image diffusion models have driven significant progress in text-guided texture synthesis, enabling realistic, high-quality texture generation from arbitrary text prompts. However, current methods usually focus on synthesizing texture for single static 3D objects, and struggle to handle entire families of shapes, such as those produced by procedural programs. Applying existing methods naively to each procedural shape is too slow to support exploring different parameter settings at interactive rates, and also results in inconsistent textures across the procedural shapes. To this end, we introduce ProcTex, the first text-to-texture system designed for procedural 3D models. ProcTex enables consistent and real-time text-guided texture synthesis for families of shapes, and integrates seamlessly with the interactive design flow of procedural models. To ensure consistency, our core approach is to generate texture for the shape produced by one setting of the procedural parameters, followed by a texture transfer stage to apply the texture to other parameter settings. We also develop several techniques, including a novel UV displacement network for real-time texture transfer, a retexturing pipeline to support structural changes from discrete procedural parameters, and part-level UV texture map generation for local appearance editing. Extensive experiments on a diverse set of professional procedural models validate ProcTex's ability to produce high-quality, visually consistent textures while supporting real-time, interactive applications.

NeRF On-the-go: Exploiting Uncertainty for Distractor-free NeRFs in the Wild

May 29, 2024

Abstract:Neural Radiance Fields (NeRFs) have shown remarkable success in synthesizing photorealistic views from multi-view images of static scenes, but face challenges in dynamic, real-world environments with distractors like moving objects, shadows, and lighting changes. Existing methods manage controlled environments and low occlusion ratios but fall short in render quality, especially under high occlusion scenarios. In this paper, we introduce NeRF On-the-go, a simple yet effective approach that enables the robust synthesis of novel views in complex, in-the-wild scenes from only casually captured image sequences. Delving into uncertainty, our method not only efficiently eliminates distractors, even when they are predominant in captures, but also achieves a notably faster convergence speed. Through comprehensive experiments on various scenes, our method demonstrates a significant improvement over state-of-the-art techniques. This advancement opens new avenues for NeRF in diverse and dynamic real-world applications.

NICER-SLAM: Neural Implicit Scene Encoding for RGB SLAM

Feb 07, 2023

Abstract:Neural implicit representations have recently become popular in simultaneous localization and mapping (SLAM), especially in dense visual SLAM. However, previous works in this direction either rely on RGB-D sensors, or require a separate monocular SLAM approach for camera tracking and do not produce high-fidelity dense 3D scene reconstruction. In this paper, we present NICER-SLAM, a dense RGB SLAM system that simultaneously optimizes for camera poses and a hierarchical neural implicit map representation, which also allows for high-quality novel view synthesis. To facilitate the optimization process for mapping, we integrate additional supervision signals including easy-to-obtain monocular geometric cues and optical flow, and also introduce a simple warping loss to further enforce geometry consistency. Moreover, to further boost performance in complicated indoor scenes, we also propose a local adaptive transformation from signed distance functions (SDFs) to density in the volume rendering equation. On both synthetic and real-world datasets we demonstrate strong performance in dense mapping, tracking, and novel view synthesis, even competitive with recent RGB-D SLAM systems.

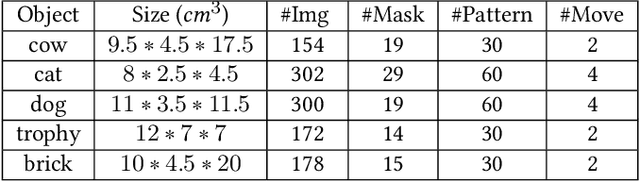

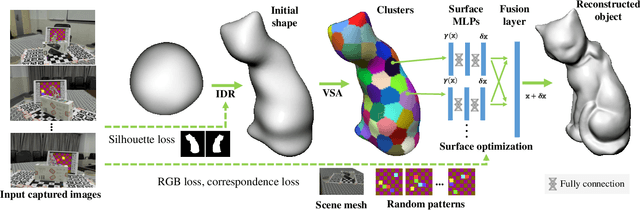

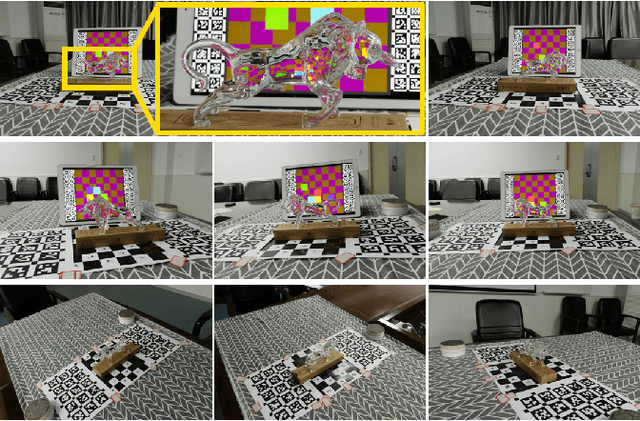

A Hybrid Mesh-neural Representation for 3D Transparent Object Reconstruction

Mar 24, 2022

Abstract:We propose a novel method to reconstruct the 3D shapes of transparent objects using hand-held captured images under natural light conditions. It combines the advantages of an explicit mesh and a multi-layer perceptron (MLP) network in a hybrid representation to simplify the capture setting used in recent contributions. After obtaining an initial shape through the multi-view silhouettes, we introduce surface-based local MLPs to encode the vertex displacement field (VDF) for the reconstruction of surface details. The design of local MLPs allows the VDF to be represented in a piecewise manner using two-layer MLP networks, which benefits the optimization algorithm. Defining local MLPs on the surface instead of the volume also reduces the search space. Such a hybrid representation enables us to relax the ray-pixel correspondences that represent the light path constraint to our designed ray-cell correspondences, which significantly simplifies the implementation of a single-image-based environment matting algorithm. We evaluate our representation and reconstruction algorithm on several transparent objects with ground truth models. Our experiments show that our method can produce high-quality reconstruction results superior to state-of-the-art methods using a simplified data acquisition setup.

NICE-SLAM: Neural Implicit Scalable Encoding for SLAM

Dec 22, 2021

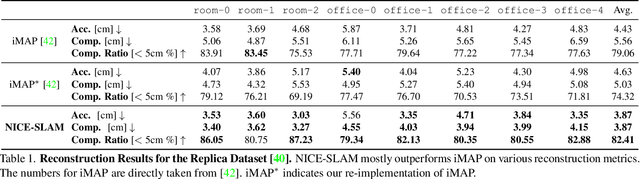

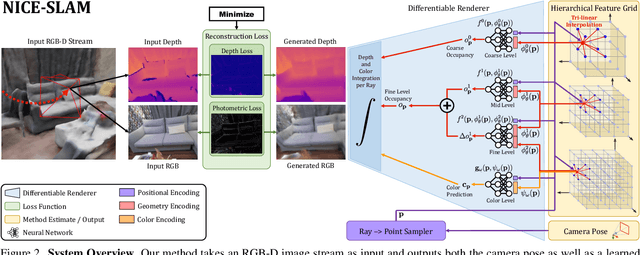

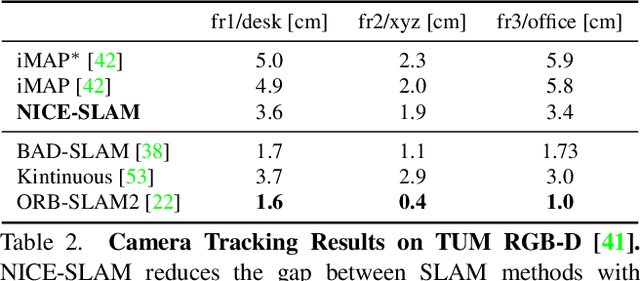

Abstract:Neural implicit representations have recently shown encouraging results in various domains, including promising progress in simultaneous localization and mapping (SLAM). Nevertheless, existing methods produce over-smoothed scene reconstructions and have difficulty scaling up to large scenes. These limitations are mainly due to their simple fully-connected network architecture that does not incorporate local information in the observations. In this paper, we present NICE-SLAM, a dense SLAM system that incorporates multi-level local information by introducing a hierarchical scene representation. Optimizing this representation with pre-trained geometric priors enables detailed reconstruction on large indoor scenes. Compared to recent neural implicit SLAM systems, our approach is more scalable, efficient, and robust. Experiments on five challenging datasets demonstrate competitive results of NICE-SLAM in both mapping and tracking quality.
