Michael Evans

NVIDIA Nemotron 3: Efficient and Open Intelligence

Dec 24, 2025

Abstract: We introduce the Nemotron 3 family of models - Nano, Super, and Ultra. These models deliver strong agentic, reasoning, and conversational capabilities. The Nemotron 3 family uses a Mixture-of-Experts hybrid Mamba-Transformer architecture to provide best-in-class throughput and context lengths of up to 1M tokens. Super and Ultra models are trained with NVFP4 and incorporate LatentMoE, a novel approach that improves model quality. The two larger models also include MTP layers for faster text generation. All Nemotron 3 models are post-trained using multi-environment reinforcement learning, enabling reasoning, multi-step tool use, and granular reasoning budget control. Nano, the smallest model, outperforms comparable models in accuracy while remaining extremely cost-efficient for inference. Super is optimized for collaborative agents and high-volume workloads such as IT ticket automation. Ultra, the largest model, provides state-of-the-art accuracy and reasoning performance. Nano is released together with its technical report and this white paper, while Super and Ultra will follow in the coming months. We will openly release the model weights, pre- and post-training software, recipes, and all data for which we hold redistribution rights.

Nemotron 3 Nano: Open, Efficient Mixture-of-Experts Hybrid Mamba-Transformer Model for Agentic Reasoning

Dec 23, 2025

Abstract: We present Nemotron 3 Nano 30B-A3B, a Mixture-of-Experts hybrid Mamba-Transformer language model. Nemotron 3 Nano was pretrained on 25 trillion text tokens, including more than 3 trillion new unique tokens over Nemotron 2, followed by supervised fine tuning and large-scale RL on diverse environments. Nemotron 3 Nano achieves better accuracy than our previous generation Nemotron 2 Nano while activating less than half of the parameters per forward pass. It achieves up to 3.3x higher inference throughput than similarly-sized open models like GPT-OSS-20B and Qwen3-30B-A3B-Thinking-2507, while also being more accurate on popular benchmarks. Nemotron 3 Nano demonstrates enhanced agentic, reasoning, and chat abilities and supports context lengths up to 1M tokens. We release both our pretrained Nemotron 3 Nano 30B-A3B Base and post-trained Nemotron 3 Nano 30B-A3B checkpoints on Hugging Face.

NVIDIA Nemotron Nano V2 VL

Nov 07, 2025

Abstract: We introduce Nemotron Nano V2 VL, the latest model of the Nemotron vision-language series designed for strong real-world document understanding, long video comprehension, and reasoning tasks. Nemotron Nano V2 VL delivers significant improvements over our previous model, Llama-3.1-Nemotron-Nano-VL-8B, across all vision and text domains through major enhancements in model architecture, datasets, and training recipes. Nemotron Nano V2 VL builds on Nemotron Nano V2, a hybrid Mamba-Transformer LLM, and innovative token reduction techniques to achieve higher inference throughput in long document and video scenarios. We are releasing model checkpoints in BF16, FP8, and FP4 formats and sharing large parts of our datasets, recipes and training code.

NVIDIA Nemotron Nano 2: An Accurate and Efficient Hybrid Mamba-Transformer Reasoning Model

Aug 21, 2025

Abstract: We introduce Nemotron-Nano-9B-v2, a hybrid Mamba-Transformer language model designed to increase throughput for reasoning workloads while achieving state-of-the-art accuracy compared to similarly-sized models. Nemotron-Nano-9B-v2 builds on the Nemotron-H architecture, in which the majority of the self-attention layers in the common Transformer architecture are replaced with Mamba-2 layers, to achieve improved inference speed when generating the long thinking traces needed for reasoning. We create Nemotron-Nano-9B-v2 by first pre-training a 12-billion-parameter model (Nemotron-Nano-12B-v2-Base) on 20 trillion tokens using an FP8 training recipe. After aligning Nemotron-Nano-12B-v2-Base, we employ the Minitron strategy to compress and distill the model with the goal of enabling inference on up to 128k tokens on a single NVIDIA A10G GPU (22GiB of memory, bfloat16 precision). Compared to existing similarly-sized models (e.g., Qwen3-8B), we show that Nemotron-Nano-9B-v2 achieves on-par or better accuracy on reasoning benchmarks while achieving up to 6x higher inference throughput in reasoning settings like 8k input and 16k output tokens. We are releasing Nemotron-Nano-9B-v2, Nemotron-Nano-12B-v2-Base, and Nemotron-Nano-9B-v2-Base checkpoints along with the majority of our pre- and post-training datasets on Hugging Face.

Llama-Nemotron: Efficient Reasoning Models

May 02, 2025

Abstract: We introduce the Llama-Nemotron series of models, an open family of heterogeneous reasoning models that deliver exceptional reasoning capabilities, inference efficiency, and an open license for enterprise use. The family comes in three sizes -- Nano (8B), Super (49B), and Ultra (253B) -- and performs competitively with state-of-the-art reasoning models such as DeepSeek-R1 while offering superior inference throughput and memory efficiency. In this report, we discuss the training procedure for these models, which entails using neural architecture search from Llama 3 models for accelerated inference, knowledge distillation, and continued pretraining, followed by a reasoning-focused post-training stage consisting of two main parts: supervised fine-tuning and large scale reinforcement learning. Llama-Nemotron models are the first open-source models to support a dynamic reasoning toggle, allowing users to switch between standard chat and reasoning modes during inference. To further support open research and facilitate model development, we provide the following resources: 1. We release the Llama-Nemotron reasoning models -- LN-Nano, LN-Super, and LN-Ultra -- under the commercially permissive NVIDIA Open Model License Agreement. 2. We release the complete post-training dataset: Llama-Nemotron-Post-Training-Dataset. 3. We also release our training codebases: NeMo, NeMo-Aligner, and Megatron-LM.

Nemotron-H: A Family of Accurate and Efficient Hybrid Mamba-Transformer Models

Apr 10, 2025

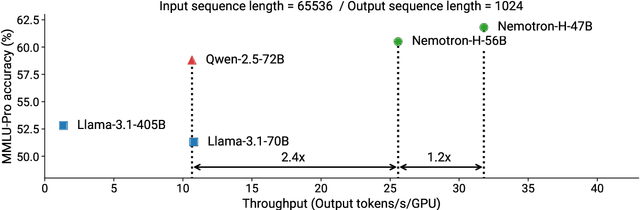

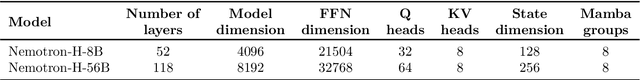

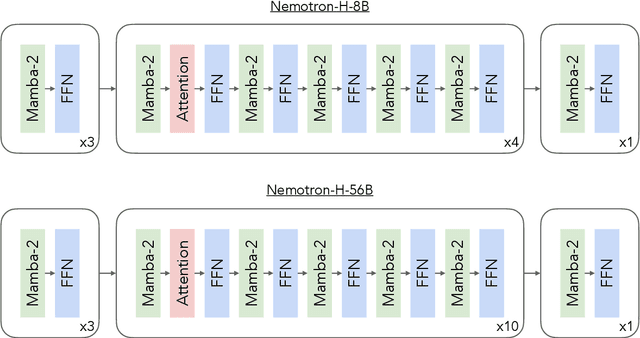

Abstract: As inference-time scaling becomes critical for enhanced reasoning capabilities, it is increasingly becoming important to build models that are efficient to infer. We introduce Nemotron-H, a family of 8B and 56B/47B hybrid Mamba-Transformer models designed to reduce inference cost for a given accuracy level. To achieve this goal, we replace the majority of self-attention layers in the common Transformer model architecture with Mamba layers that perform constant computation and require constant memory per generated token. We show that Nemotron-H models offer either better or on-par accuracy compared to other similarly-sized state-of-the-art open-sourced Transformer models (e.g., Qwen-2.5-7B/72B and Llama-3.1-8B/70B), while being up to 3$\times$ faster at inference. To further increase inference speed and reduce the memory required at inference time, we created Nemotron-H-47B-Base from the 56B model using a new compression via pruning and distillation technique called MiniPuzzle. Nemotron-H-47B-Base achieves similar accuracy to the 56B model, but is 20% faster to infer. In addition, we introduce an FP8-based training recipe and show that it can achieve on par results with BF16-based training. This recipe is used to train the 56B model. All Nemotron-H models will be released, with support in Hugging Face, NeMo, and Megatron-LM.
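
The memory claim in this abstract can be made concrete with a little arithmetic. The sketch below is only an illustration: it compares the key/value cache that self-attention layers keep (which grows with every generated token) against a fixed-size recurrent state of the kind Mamba-style layers keep. The function names and every dimension used here are hypothetical and are not taken from any Nemotron-H configuration.

```python
# Illustrative memory arithmetic only. All layer counts and sizes below are
# hypothetical assumptions, NOT values from the Nemotron-H report.

def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_elem=2):
    """Bytes held by attention layers: one key and one value per past token."""
    return 2 * seq_len * n_layers * n_kv_heads * head_dim * bytes_per_elem


def recurrent_state_bytes(n_layers, state_elems_per_layer, bytes_per_elem=2):
    """Bytes held by recurrent layers: a fixed-size state, independent of length."""
    return n_layers * state_elems_per_layer * bytes_per_elem


if __name__ == "__main__":
    for seq_len in (1_000, 10_000, 100_000):
        attn = kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=8, head_dim=128)
        ssm = recurrent_state_bytes(n_layers=32, state_elems_per_layer=1_000_000)
        print(f"{seq_len:>7} tokens: attention {attn / 2**20:10.1f} MiB, "
              f"recurrent {ssm / 2**20:6.1f} MiB")
```

Under these assumed sizes the attention cache scales linearly with sequence length while the recurrent state does not change, which is the property the abstract relies on when most attention layers are replaced.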

False Discovery Rate Control via Frequentist-assisted Horseshoe

Feb 08, 2025

Abstract: The horseshoe prior, a widely used handy alternative to the spike-and-slab prior, has proven to be an exceptional default global-local shrinkage prior in Bayesian inference and machine learning. However, designing tests with frequentist false discovery rate (FDR) control using the horseshoe prior or the general class of global-local shrinkage priors remains an open problem. In this paper, we propose a frequentist-assisted horseshoe procedure that not only resolves this long-standing FDR control issue for the high dimensional normal means testing problem but also exhibits satisfactory finite-sample FDR control under any desired nominal level for both large-scale multiple independent and correlated tests. We carry out the frequentist-assisted horseshoe procedure in an easy and intuitive way by using the minimax estimator of the global parameter of the horseshoe prior while maintaining the remaining full Bayes vanilla horseshoe structure. The results of both intensive simulations under different sparsity levels, and real-world data demonstrate that the frequentist-assisted horseshoe procedure consistently achieves robust finite-sample FDR control. Existing frequentist or Bayesian FDR control procedures can lose finite-sample FDR control in a variety of common sparse cases. Based on the intimate relationship between the minimax estimation and the level of FDR control discovered in this work, we point out potential generalizations to achieve FDR control for both more complicated models and the general global-local shrinkage prior family.
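
For readers unfamiliar with the setting, the standard textbook form of the normal means problem, the horseshoe prior, and the FDR is sketched below. The notation is an assumption chosen for illustration and the paper's own parameterization may differ. Here $\tau$ is the global parameter that the abstract says is set by a minimax estimator (whose form is not given in this listing), $\lambda_i$ are the local scales, $V$ is the number of false rejections, and $R$ is the total number of rejections.

$$
y_i = \theta_i + \varepsilon_i, \qquad \varepsilon_i \sim \mathcal{N}(0, 1), \qquad i = 1, \dots, n
$$

$$
\theta_i \mid \lambda_i, \tau \sim \mathcal{N}\!\left(0, \lambda_i^2 \tau^2\right), \qquad \lambda_i \sim \mathrm{C}^{+}(0, 1)
$$

$$
\mathrm{FDR} = \mathbb{E}\!\left[\frac{V}{\max(R, 1)}\right]
$$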

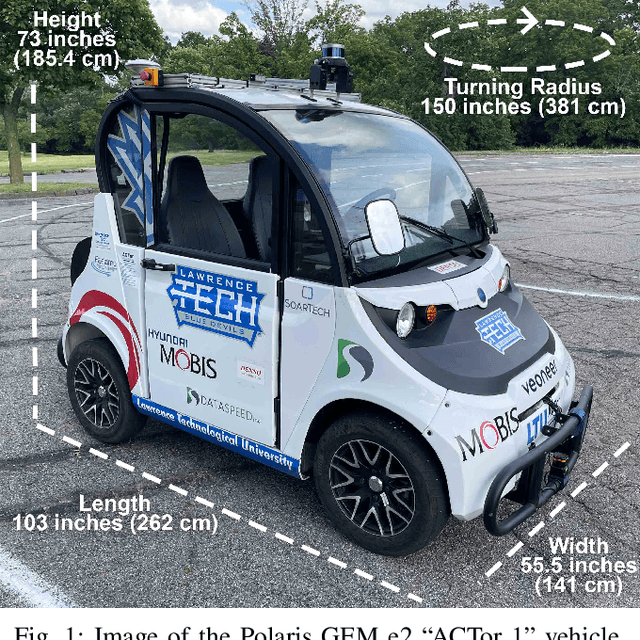

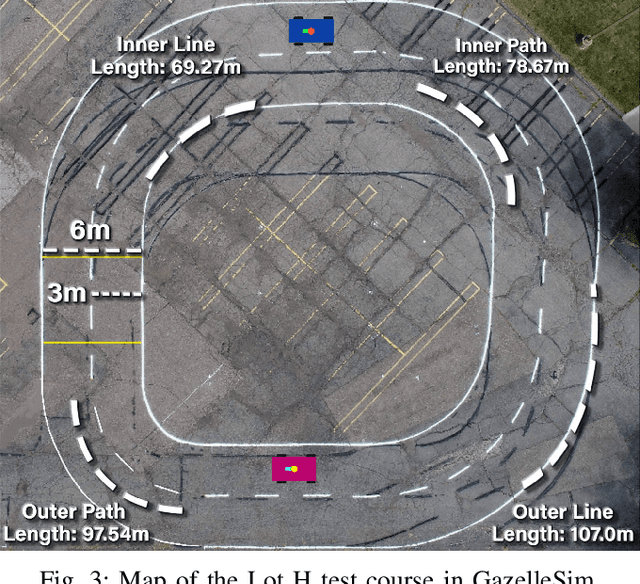

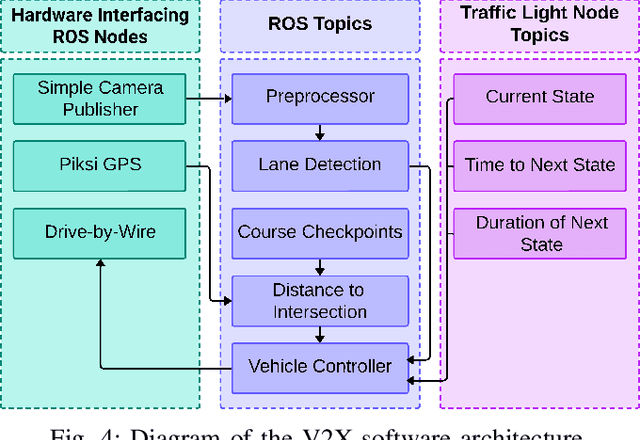

Developing, Analyzing, and Evaluating Self-Drive Algorithms Using Drive-by-Wire Electric Vehicles

Sep 04, 2024

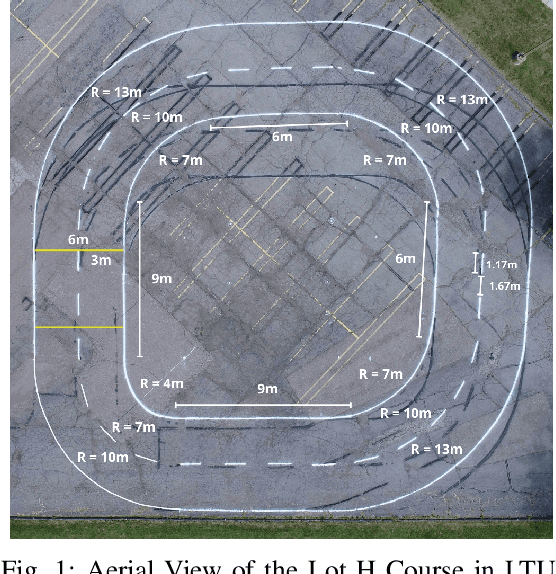

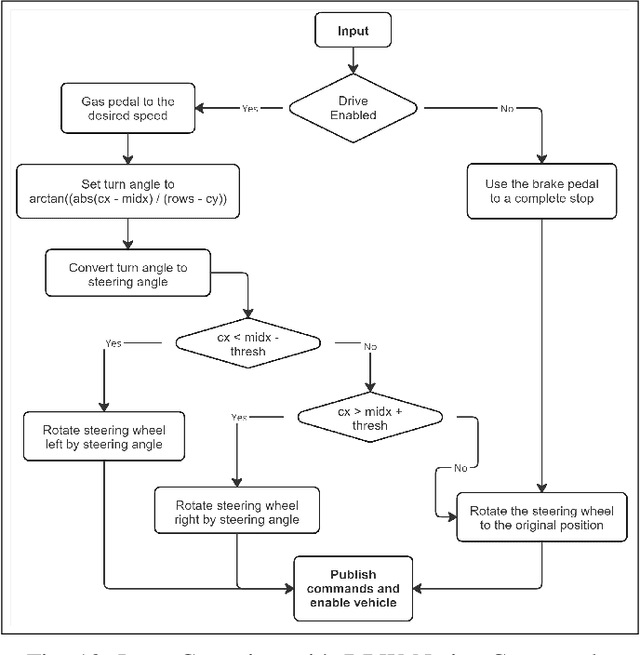

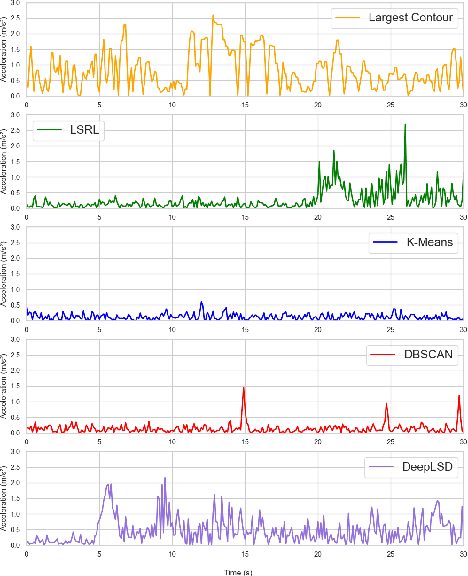

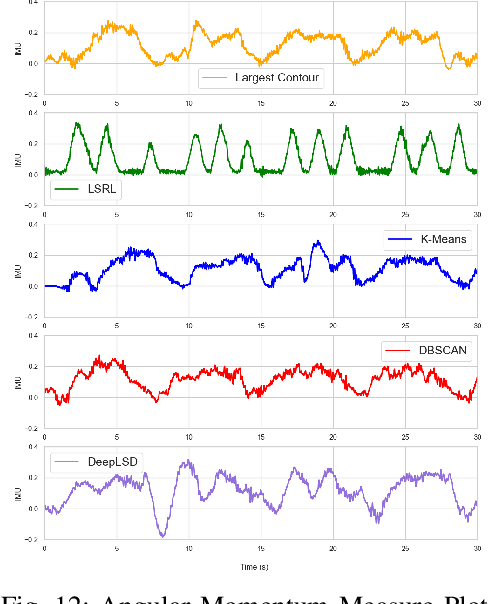

Abstract: Reliable lane-following algorithms are essential for safe and effective autonomous driving. This project was primarily focused on developing and evaluating different lane-following programs to find the most reliable algorithm for a Vehicle to Everything (V2X) project. The algorithms were first tested on a simulator and then with real vehicles equipped with a drive-by-wire system using ROS (Robot Operating System). Their performance was assessed through reliability, comfort, speed, and adaptability metrics. The results show that the two most reliable approaches detect both lane lines and use unsupervised learning to separate them. These approaches proved to be robust in various driving scenarios, making them suitable candidates for integration into the V2X project.
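
The idea of separating two detected lane lines with unsupervised learning can be sketched as follows. The abstract does not say which method the authors used, so the one-dimensional two-means clustering here is an assumption made purely for illustration, and the function names `two_means_1d` and `steering_offset` are hypothetical. The input is a list of x-coordinates (in pixels) of points already detected as lane markings.

```python
# Hypothetical sketch: the clustering method is an assumption, not the
# approach described in the paper.

def two_means_1d(xs, iters=20):
    """Split 1-D values into two clusters; return (left_center, right_center)."""
    if not xs:
        raise ValueError("need at least one lane point")
    left, right = min(xs), max(xs)  # initialise centres at the extremes
    for _ in range(iters):
        left_pts = [x for x in xs if abs(x - left) <= abs(x - right)]
        right_pts = [x for x in xs if abs(x - left) > abs(x - right)]
        if not left_pts or not right_pts:
            break  # only one distinct cluster is visible
        left = sum(left_pts) / len(left_pts)
        right = sum(right_pts) / len(right_pts)
    return left, right


def steering_offset(xs, image_width):
    """Signed distance in pixels from the image centre to the lane centre."""
    left, right = two_means_1d(xs)
    return (left + right) / 2 - image_width / 2


if __name__ == "__main__":
    points = [100, 105, 110, 500, 510, 520]
    print(two_means_1d(points))
    print(steering_offset(points, image_width=640))
```

A negative offset means the lane centre lies to the left of the image centre. A real pipeline would feed this value to a controller and would need to handle frames in which only one line is visible.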

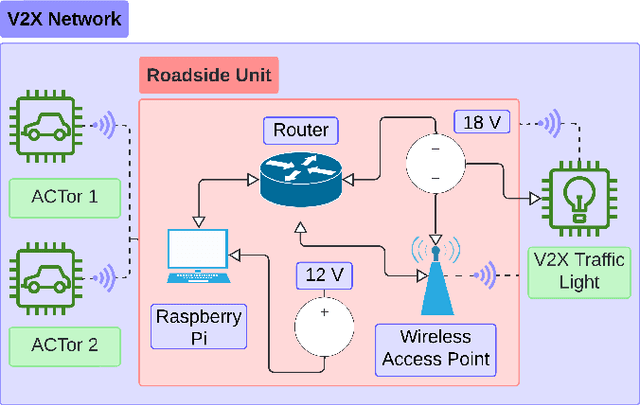

Vehicle-to-Everything (V2X) Communication: A Roadside Unit for Adaptive Intersection Control of Autonomous Electric Vehicles

Sep 01, 2024

Abstract: Recent advances in autonomous vehicle technologies and cellular network speeds motivate developments in vehicle-to-everything (V2X) communications. Enhanced road safety features and improved fuel efficiency are some of the motivations behind V2X for future transportation systems. Adaptive intersection control systems have considerable potential to achieve these goals by minimizing idle times and predicting short-term future traffic conditions. Integrating V2X into traffic management systems introduces the infrastructure necessary to make roads safer for all users and initiates the shift towards more intelligent and connected cities. To demonstrate our solution, we implement both a simulated and real-world representation of a 4-way intersection and crosswalk scenario with 2 self-driving electric vehicles, a roadside unit (RSU), and traffic light. Our architecture minimizes fuel consumption through intersections by reducing acceleration and braking by up to 75.35%. We implement a cost-effective solution to intelligent and connected intersection control to serve as a proof-of-concept model suitable as the basis for continued research and development. Code for this project is available at https://github.com/MMachado05/REU-2024.

"It is there, and you need it, so why do you not use it?" Achieving better adoption of AI systems by domain experts, in the case study of natural science research

Mar 25, 2024

Abstract: Artificial Intelligence (AI) is becoming ubiquitous in domains such as medicine and natural science research. However, when AI systems are implemented in practice, domain experts often refuse them. Low acceptance hinders effective human-AI collaboration, even when it is essential for progress. In natural science research, scientists' ineffective use of AI-enabled systems can impede them from analysing their data and advancing their research. We conducted an ethnographically informed study of 10 in-depth interviews with AI practitioners and natural scientists at the organisation facing low adoption of algorithmic systems. Results were consolidated into recommendations for better AI adoption: i) actively supporting experts during the initial stages of system use, ii) communicating the capabilities of a system in a user-relevant way, and iii) following predefined collaboration rules. We discuss the broader implications of our findings and expand on how our proposed requirements could support practitioners and experts across domains.
