Valentin Bieri

WildGS-SLAM: Monocular Gaussian Splatting SLAM in Dynamic Environments

Apr 04, 2025

Abstract: We present WildGS-SLAM, a robust and efficient monocular RGB SLAM system designed to handle dynamic environments by leveraging uncertainty-aware geometric mapping. Unlike traditional SLAM systems, which assume static scenes, our approach integrates depth and uncertainty information to enhance tracking, mapping, and rendering performance in the presence of moving objects. We introduce an uncertainty map, predicted by a shallow multi-layer perceptron and DINOv2 features, to guide dynamic object removal during both tracking and mapping. This uncertainty map enhances dense bundle adjustment and Gaussian map optimization, improving reconstruction accuracy. Our system is evaluated on multiple datasets and demonstrates artifact-free view synthesis. Results showcase WildGS-SLAM's superior performance in dynamic environments compared to state-of-the-art methods.

OpenCity3D: What do Vision-Language Models know about Urban Environments?

Mar 21, 2025

Abstract: Vision-language models (VLMs) show great promise for 3D scene understanding but are mainly applied to indoor spaces or autonomous driving, focusing on low-level tasks like segmentation. This work expands their use to urban-scale environments by leveraging 3D reconstructions from multi-view aerial imagery. We propose OpenCity3D, an approach that addresses high-level tasks, such as population density estimation, building age classification, property price prediction, crime rate assessment, and noise pollution evaluation. Our findings highlight OpenCity3D's impressive zero-shot and few-shot capabilities, showcasing adaptability to new contexts. This research establishes a new paradigm for language-driven urban analytics, enabling applications in planning, policy, and environmental monitoring. See our project page: opencity3d.github.io

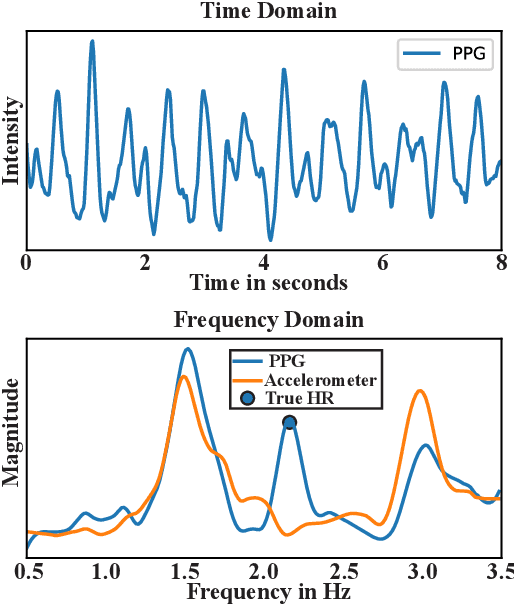

BeliefPPG: Uncertainty-aware Heart Rate Estimation from PPG signals via Belief Propagation

Jun 14, 2023

Abstract: We present a novel learning-based method that achieves state-of-the-art performance on several heart rate estimation benchmarks extracted from photoplethysmography signals (PPG). We consider the evolution of the heart rate in the context of a discrete-time stochastic process that we represent as a hidden Markov model. We derive a distribution over possible heart rate values for a given PPG signal window through a trained neural network. Using belief propagation, we incorporate the statistical distribution of heart rate changes to refine these estimates in a temporal context. From this, we obtain a quantized probability distribution over the range of possible heart rate values that captures a meaningful and well-calibrated estimate of the inherent predictive uncertainty. We show the robustness of our method on eight public datasets with three different cross-validation experiments.
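The temporal refinement described in this abstract can be illustrated with sum-product belief propagation on a chain-structured hidden Markov model, which reduces to the forward-backward algorithm. The following is only a minimal sketch under stated assumptions, not the authors' implementation: the function name `forward_backward`, the three heart rate bins, the transition matrix, and the per-window distributions are all hypothetical, and treating the per-window distributions directly as emission likelihoods is an assumption made for illustration.

```python
def normalize(values):
    """Scale a list of non-negative numbers so that it sums to 1."""
    total = sum(values)
    if total == 0:
        # Degenerate case: fall back to a uniform distribution.
        return [1.0 / len(values)] * len(values)
    return [v / total for v in values]


def forward_backward(emissions, transition, prior):
    """Sum-product belief propagation on a chain HMM.

    emissions[t][k]:  per-window score for heart rate bin k at step t
                      (assumed here to act as an emission likelihood).
    transition[i][j]: probability of moving from bin i to bin j.
    prior[k]:         probability of bin k before the first window.
    Returns one posterior distribution over bins per time step.
    """
    n_steps, n_bins = len(emissions), len(prior)

    # Forward pass: filter using past and current windows.
    forward = []
    for t in range(n_steps):
        if t == 0:
            predicted = prior
        else:
            predicted = [
                sum(forward[-1][i] * transition[i][j] for i in range(n_bins))
                for j in range(n_bins)
            ]
        forward.append(
            normalize([predicted[k] * emissions[t][k] for k in range(n_bins)])
        )

    # Backward pass: messages carrying evidence from future windows.
    backward = [[1.0] * n_bins for _ in range(n_steps)]
    for t in range(n_steps - 2, -1, -1):
        backward[t] = normalize([
            sum(
                transition[i][j] * emissions[t + 1][j] * backward[t + 1][j]
                for j in range(n_bins)
            )
            for i in range(n_bins)
        ])

    # Combine both directions into per-step posterior marginals.
    return [
        normalize([f * b for f, b in zip(forward[t], backward[t])])
        for t in range(n_steps)
    ]


# Hypothetical toy data: three heart rate bins, three signal windows.
transition = [
    [0.80, 0.15, 0.05],
    [0.15, 0.70, 0.15],
    [0.05, 0.15, 0.80],
]
prior = [1 / 3, 1 / 3, 1 / 3]
emissions = [
    [0.90, 0.05, 0.05],  # confident window
    [0.30, 0.20, 0.50],  # noisy window that favors a distant bin
    [0.90, 0.05, 0.05],  # confident window
]

posteriors = forward_backward(emissions, transition, prior)
best_bins = [max(range(3), key=lambda k: p[k]) for p in posteriors]
```

In this toy setup the middle window on its own favors the highest bin, but the smoothed posterior follows the neighboring windows, because the transition matrix makes large jumps between bins unlikely. Each returned row is a normalized distribution over bins, which mirrors the quantized probability output that the abstract describes.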
