Berken Utku Demirel

Temporal Cardiovascular Dynamics for Improved PPG-Based Heart Rate Estimation

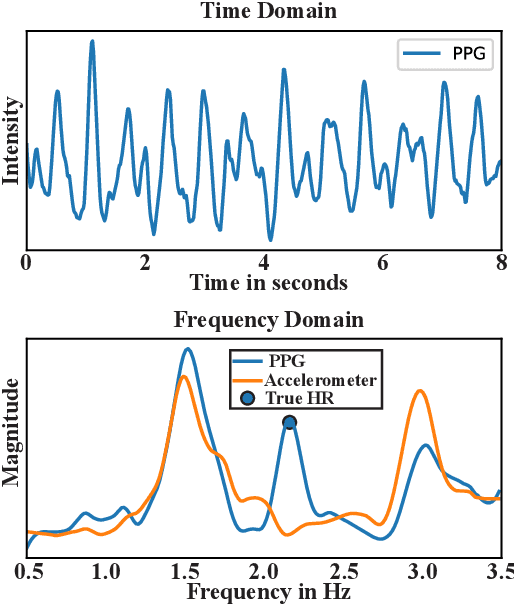

Oct 31, 2025

Abstract:The oscillations of the human heart rate are inherently complex and non-linear: they are best described by mathematical chaos, and they present a challenge when applied to the practical domain of cardiovascular health monitoring in everyday life. In this work, we study the non-linear chaotic behavior of heart rate through mutual information and introduce a novel approach for enhancing heart rate estimation in real-life conditions. Our proposed approach not only explains and handles the non-linear temporal complexity from a mathematical perspective but also improves deep learning solutions when combined with them. We validate our proposed method on four established datasets from real-life scenarios and thoroughly compare its performance with existing algorithms through extensive ablation experiments. Our results demonstrate a substantial improvement, up to 40%, of the proposed approach in estimating heart rate compared to traditional methods and existing machine-learning techniques while reducing the reliance on multiple sensing modalities and eliminating the need for post-processing steps.
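To make the role of mutual information concrete, here is a minimal sketch of a histogram-based estimate between a series and a lagged copy of itself. It is a generic textbook estimator, not the authors' procedure; the function name `lagged_mutual_information`, the bin count, and the idea of applying it to a heart-rate series are assumptions made for illustration.

```python
import numpy as np

def lagged_mutual_information(x, lag, bins=16):
    """Histogram estimate (in nats) of I(x_t ; x_{t+lag}) for lag >= 1.

    Hypothetical helper for illustration; the bin count is an arbitrary choice.
    """
    x = np.asarray(x, dtype=float)
    a, b = x[:-lag], x[lag:]                      # the series and its lagged copy
    joint, _, _ = np.histogram2d(a, b, bins=bins)
    p_ab = joint / joint.sum()                    # joint probability table
    p_a = p_ab.sum(axis=1, keepdims=True)         # marginal of x_t, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)         # marginal of x_{t+lag}, shape (1, bins)
    mask = p_ab > 0                               # skip empty cells to avoid log(0)
    return float(np.sum(p_ab[mask] * np.log(p_ab[mask] / (p_a @ p_b)[mask])))
```

Unlike linear autocorrelation, this quantity also responds to non-linear dependence, which is why it is a common tool for deterministic but irregular signals. A histogram estimate is biased upward for short series, so values should be compared against a shuffled or random baseline rather than read in isolation.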

Learning Without Augmenting: Unsupervised Time Series Representation Learning via Frame Projections

Oct 26, 2025

Abstract:Self-supervised learning (SSL) has emerged as a powerful paradigm for learning representations without labeled data. Most SSL approaches rely on strong, well-established, handcrafted data augmentations to generate diverse views for representation learning. However, designing such augmentations requires domain-specific knowledge and implicitly imposes representational invariances on the model, which can limit generalization. In this work, we propose an unsupervised representation learning method that replaces augmentations by generating views using orthonormal bases and overcomplete frames. We show that embeddings learned from orthonormal and overcomplete spaces reside on distinct manifolds, shaped by the geometric biases introduced by representing samples in different spaces. By jointly leveraging the complementary geometry of these distinct manifolds, our approach achieves superior performance without artificially increasing data diversity through strong augmentations. We demonstrate the effectiveness of our method on nine datasets across five temporal sequence tasks, where signal-specific characteristics make data augmentations particularly challenging. Without relying on augmentation-induced diversity, our method achieves performance gains of up to 15–20% over existing self-supervised approaches. Source code: https://github.com/eth-siplab/Learning-with-FrameProjections

Beyond Subjectivity: Continuous Cybersickness Detection Using EEG-based Multitaper Spectrum Estimation

Mar 27, 2025

Abstract:Virtual reality (VR) presents immersive opportunities across many applications, yet the inherent risk of developing cybersickness during interaction can severely reduce enjoyment and platform adoption. Cybersickness is marked by symptoms such as dizziness and nausea, which previous work primarily assessed via subjective post-immersion questionnaires and motion-restricted controlled setups. In this paper, we investigate the dynamic nature of cybersickness while users experience and freely interact in VR. We propose a novel method to continuously identify and quantitatively gauge cybersickness levels from users' passively monitored electroencephalography (EEG) and head motion signals. Our method estimates multitaper spectra from EEG, integrating specialized EEG processing techniques to counter motion artifacts, and thus tracks cybersickness levels in real time. Unlike previous approaches, our method requires no user-specific calibration or personalization for detecting cybersickness. Our work addresses the considerable challenge of reproducibility and subjectivity in cybersickness research.

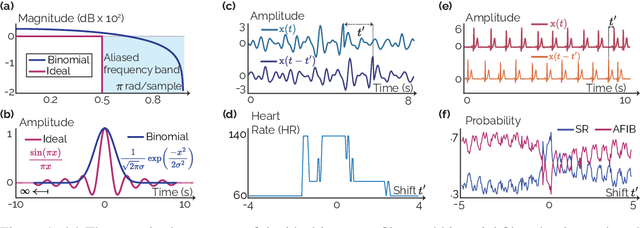

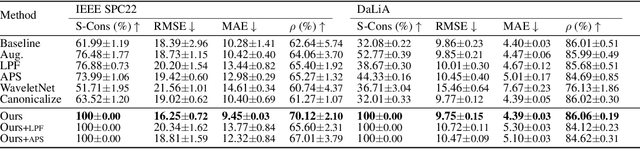

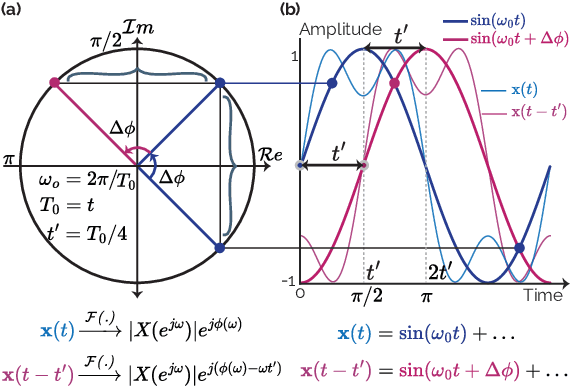

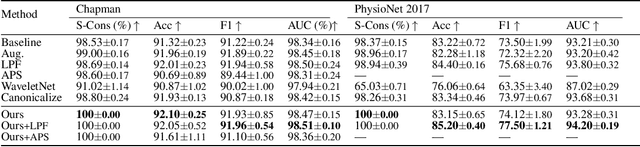

Shifting the Paradigm: A Diffeomorphism Between Time Series Data Manifolds for Achieving Shift-Invariancy in Deep Learning

Feb 27, 2025

Abstract:Deep learning models lack shift invariance, making them sensitive to input shifts that cause changes in output. While recent techniques seek to address this for images, our findings show that these approaches fail to provide shift-invariance in time series, where the data generation mechanism is more challenging due to the interaction of low and high frequencies. Worse, they also decrease performance across several tasks. In this paper, we propose a novel differentiable bijective function that maps samples from their high-dimensional data manifold to another manifold of the same dimension, without any dimensional reduction. Our approach guarantees that samples, when subjected to random shifts, are mapped to a unique point in the manifold while preserving all task-relevant information without loss. We theoretically and empirically demonstrate that the proposed transformation guarantees shift-invariance in deep learning models without imposing any limits to the shift. Our experiments on six time series tasks with state-of-the-art methods show that our approach consistently improves the performance while enabling models to achieve complete shift-invariance without modifying or imposing restrictions on the model's topology. The source code is available on GitHub: https://github.com/eth-siplab/Shifting-the-Paradigm
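The idea that every shifted copy of a sample can land on a single point is easy to illustrate for circular shifts. The sketch below is not the authors' transformation; it is a simplified phase canonicalization that rotates a 1-D signal until the phase of its first non-DC Fourier bin is zero. The function name `canonicalize_shift`, the choice of bin 1 as reference, and the restriction to circular shifts are all assumptions for illustration, and the reference bin must carry non-zero energy.

```python
import numpy as np

def canonicalize_shift(x):
    """Map every circular shift of a 1-D signal to the same output.

    Illustrative only: uses the phase of Fourier bin 1 as the reference and
    assumes that bin is non-zero. Not the transformation from the paper.
    """
    x = np.asarray(x, dtype=float)
    n = x.shape[-1]
    spectrum = np.fft.rfft(x)
    phi = np.angle(spectrum[1])                   # phase of the reference bin
    k = np.arange(spectrum.shape[-1])             # integer frequency indices
    # A circular shift by s multiplies bin k by exp(-2j*pi*k*s/n) and changes phi
    # by -2*pi*s/n, so the factor exp(-1j*k*phi) cancels the shift in every bin.
    return np.fft.irfft(spectrum * np.exp(-1j * k * phi), n=n)
```

Because only phases are rotated, the magnitude spectrum is left untouched. A network that consumes the canonicalized signal therefore sees identical input for any circular shift, without changes to its architecture.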

WildPPG: A Real-World PPG Dataset of Long Continuous Recordings

Dec 23, 2024

Abstract:Reflective photoplethysmography (PPG) has become the default sensing technique in wearable devices to monitor cardiac activity via a person's heart rate (HR). However, PPG-based HR estimates can be substantially impacted by factors such as the wearer's activities, sensor placement and resulting motion artifacts, as well as environmental characteristics such as temperature and ambient light. These and other factors can significantly impact and decrease HR prediction reliability. In this paper, we show that state-of-the-art HR estimation methods struggle when processing representative data from everyday activities in outdoor environments, likely because they rely on existing datasets that captured controlled conditions. We introduce a novel multimodal dataset and benchmark results for continuous PPG recordings during outdoor activities from 16 participants over 13.5 hours, captured from four wearable sensors, each worn at a different location on the body, totaling 216 hours. Our recordings include accelerometer, temperature, and altitude data, as well as a synchronized Lead I-based electrocardiogram for ground-truth HR references. Participants completed a round trip from Zurich to Jungfraujoch, a tall mountain in Switzerland, over the course of one day. The trip included outdoor and indoor activities such as walking, hiking, stair climbing, eating, drinking, and resting at various temperatures and altitudes (up to 3,571 m above sea level) as well as using cars, trains, cable cars, and lifts for transport, all of which impacted participants' physiological dynamics. We also present a novel method that estimates HR values more robustly in such real-world scenarios than existing baselines.

Tri-Spectral PPG: Robust Reflective Photoplethysmography by Fusing Multiple Wavelengths for Cardiac Monitoring

Dec 23, 2024

Abstract:Multi-channel photoplethysmography (PPG) sensors have found widespread adoption in wearable devices for monitoring cardiac health. Channels thereby serve different functions: whereas green is commonly used for metrics such as heart rate and heart rate variability, red and infrared are commonly used for pulse oximetry. In this paper, we introduce a novel method that simultaneously fuses multi-channel PPG signals into a single recovered PPG signal that can be input to further processing. Via signal fusion, our learning-based method compensates for the artifacts that affect wavelengths to different extents, such as motion and ambient light changes. We evaluate our method on a novel dataset of multi-channel PPG recordings and electrocardiogram recordings for reference from 10 participants over the course of 13 hours during real-world activities outside the laboratory. Using the fusion PPG signal our method recovered, participants' heart rates can be calculated with a mean error of 4.5 bpm (23% lower than from green PPG signals at 5.9 bpm).
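The relative improvement can be checked directly from the two reported mean errors. The calculation below is plain arithmetic on the stated figures and involves no further assumptions.

```latex
\[
\frac{5.9\ \text{bpm} - 4.5\ \text{bpm}}{5.9\ \text{bpm}}
  = \frac{1.4}{5.9}
  \approx 0.237
\]
```

This comes to roughly 23.7%, consistent with the reduction of about 23% stated in the abstract.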

An Unsupervised Approach for Periodic Source Detection in Time Series

Jun 01, 2024

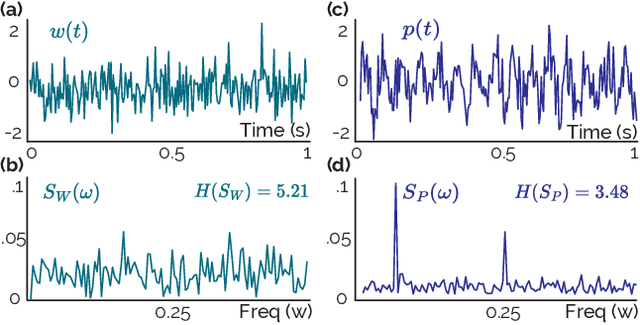

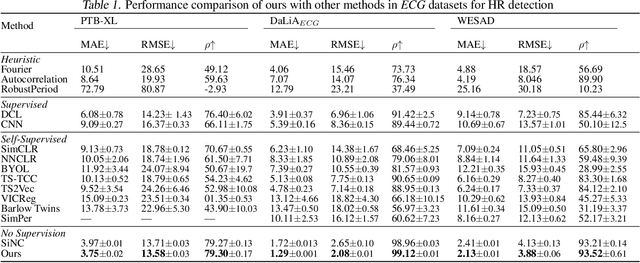

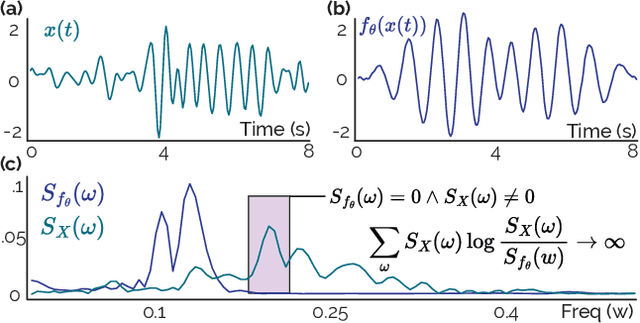

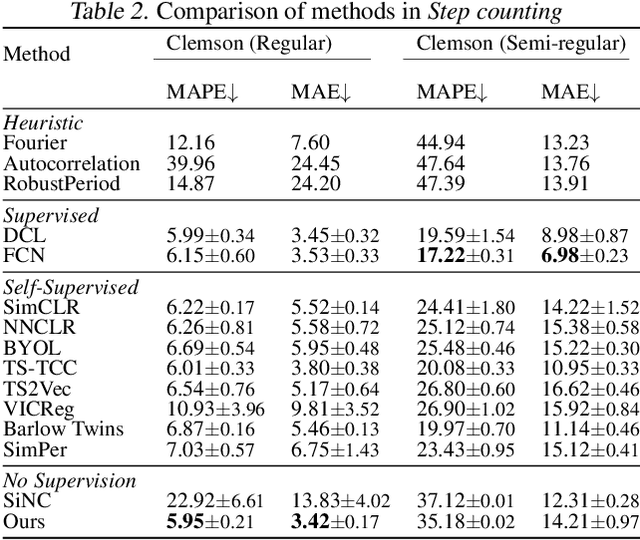

Abstract:Detection of periodic patterns of interest within noisy time series data plays a critical role in various tasks, spanning from health monitoring to behavior analysis. Existing learning techniques often rely on labels or clean versions of signals for detecting the periodicity, and those employing self-supervised learning methods are required to apply proper augmentations, which is already challenging for time series and can result in collapse, where all representations collapse to a single point due to strong augmentations. In this work, we propose a novel method to detect the periodicity in time series without the need for any labels or requiring tailored positive or negative data generation mechanisms with specific augmentations. We mitigate the collapse issue by ensuring the learned representations retain information from the original samples without imposing any random variance constraints on the batch. Our experiments in three time series tasks against state-of-the-art learning methods show that the proposed approach consistently outperforms prior works, achieving performance improvements of more than 45–50%, showing its effectiveness. Code: https://github.com/eth-siplab/Unsupervised_Periodicity_Detection

Finding Order in Chaos: A Novel Data Augmentation Method for Time Series in Contrastive Learning

Sep 23, 2023

Abstract:The success of contrastive learning is well known to be dependent on data augmentation. Although the degree of data augmentations has been well controlled by utilizing pre-defined techniques in some domains like vision, time-series data augmentation is less explored and remains a challenging problem due to the complexity of the data generation mechanism, such as the intricate mechanism involved in the cardiovascular system. Moreover, there is no widely recognized and general time-series augmentation method that can be applied across different tasks. In this paper, we propose a novel data augmentation method for quasi-periodic time-series tasks that aims to connect intra-class samples together, and thereby find order in the latent space. Our method builds upon the well-known mixup technique by incorporating a novel approach that accounts for the periodic nature of non-stationary time-series. Also, by controlling the degree of chaos created by data augmentation, our method leads to improved feature representations and performance on downstream tasks. We evaluate our proposed method on three time-series tasks, including heart rate estimation, human activity recognition, and cardiovascular disease detection. Extensive experiments against state-of-the-art methods show that the proposed approach outperforms prior works on optimal data generation and known data augmentation techniques in the three tasks, reflecting the effectiveness of the presented method. Source code: https://github.com/eth-siplab/Finding_Order_in_Chaos
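For readers unfamiliar with the baseline that this method builds on, the sketch below shows plain mixup applied to two time-series windows. It covers only the standard technique, a convex combination with a Beta-distributed weight, and none of the periodicity-aware additions described in the abstract. The function name `mixup` and the default `alpha` value are assumptions for illustration.

```python
import numpy as np

def mixup(x_i, x_j, alpha=0.2, rng=None):
    """Standard mixup: convex combination of two equally shaped samples.

    Hypothetical helper; this is the baseline technique, not the paper's method.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)                  # mixing weight in [0, 1]
    mixed = lam * np.asarray(x_i) + (1.0 - lam) * np.asarray(x_j)
    return mixed, lam
```

Mixing two quasi-periodic signals sample by sample can cancel or distort their oscillations when the signals are out of phase, which is presumably the kind of problem that an approach accounting for periodicity is meant to address.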

BeliefPPG: Uncertainty-aware Heart Rate Estimation from PPG signals via Belief Propagation

Jun 14, 2023

Abstract:We present a novel learning-based method that achieves state-of-the-art performance on several benchmarks for heart rate estimation from photoplethysmography (PPG) signals. We consider the evolution of the heart rate in the context of a discrete-time stochastic process that we represent as a hidden Markov model. We derive a distribution over possible heart rate values for a given PPG signal window through a trained neural network. Using belief propagation, we incorporate the statistical distribution of heart rate changes to refine these estimates in a temporal context. From this, we obtain a quantized probability distribution over the range of possible heart rate values that captures a meaningful and well-calibrated estimate of the inherent predictive uncertainty. We show the robustness of our method on eight public datasets with three different cross-validation experiments.
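The combination of per-window distributions with a model of heart rate changes can be sketched as sum-product message passing on a hidden Markov chain over quantized heart rate bins. The code below is a generic forward-backward pass, not the authors' implementation. The Gaussian transition model, its width `sigma_bins`, the uniform prior, and the function names are assumptions for illustration; the per-window distributions stand in for the outputs of a trained network.

```python
import numpy as np

def gaussian_transition(n_bins, sigma_bins=2.0):
    """Row-stochastic matrix favouring small bin-to-bin changes (assumed model)."""
    idx = np.arange(n_bins)
    weights = np.exp(-0.5 * ((idx[:, None] - idx[None, :]) / sigma_bins) ** 2)
    return weights / weights.sum(axis=1, keepdims=True)

def forward_backward(emissions, transition, prior=None):
    """Posterior over bins per window; emissions has shape (n_steps, n_bins)."""
    n_steps, n_bins = emissions.shape
    prior = np.full(n_bins, 1.0 / n_bins) if prior is None else prior
    alpha = np.zeros((n_steps, n_bins))           # forward messages
    beta = np.ones((n_steps, n_bins))             # backward messages
    a = prior * emissions[0]
    alpha[0] = a / a.sum()
    for t in range(1, n_steps):
        a = (alpha[t - 1] @ transition) * emissions[t]
        alpha[t] = a / a.sum()                    # normalise for numerical stability
    for t in range(n_steps - 2, -1, -1):
        b = transition @ (emissions[t + 1] * beta[t + 1])
        beta[t] = b / b.sum()
    posterior = alpha * beta
    return posterior / posterior.sum(axis=1, keepdims=True)
```

Each row of the result is a probability distribution over heart rate bins for one window, so its spread can serve as an uncertainty estimate. A window whose raw distribution points to an implausible jump is pulled back toward its neighbours by the transition model.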

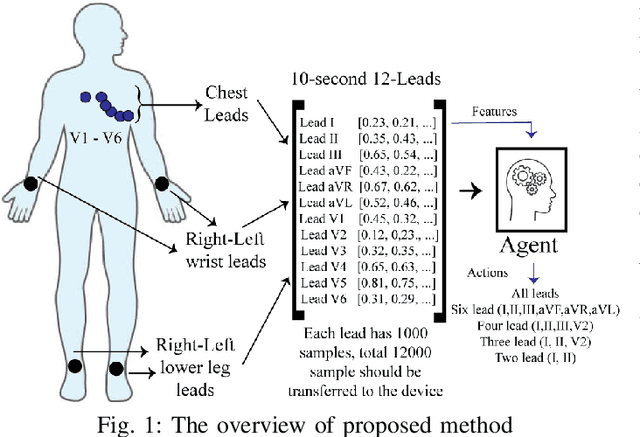

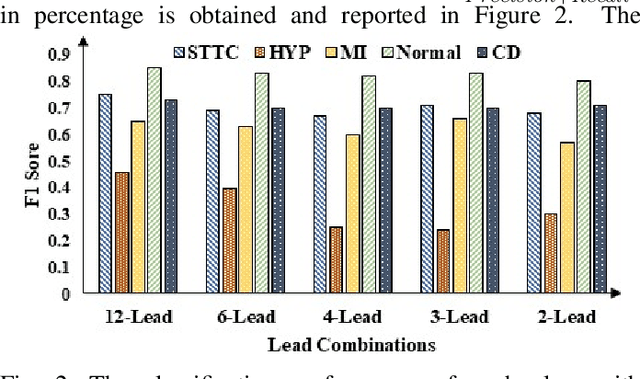

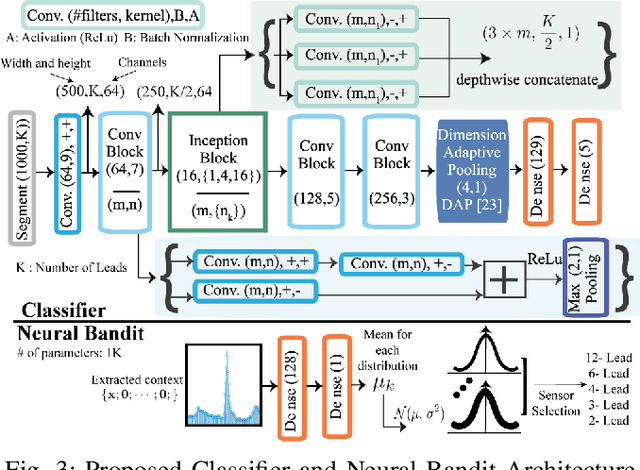

Neural Contextual Bandits Based Dynamic Sensor Selection for Low-Power Body-Area Networks

May 24, 2022

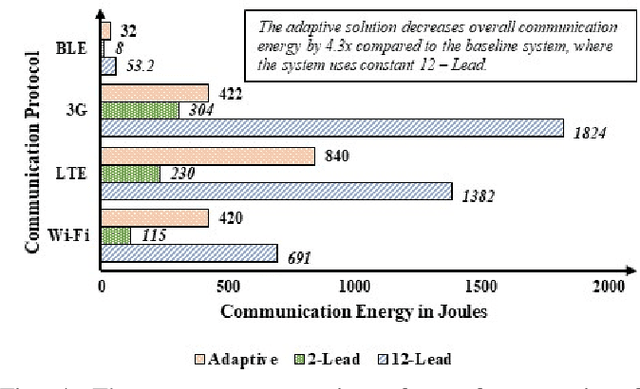

Abstract:Providing health monitoring devices with machine intelligence is important for enabling automatic mobile healthcare applications. However, this brings additional challenges due to the resource scarcity of these devices. This work introduces a neural contextual bandits based dynamic sensor selection methodology for high-performance and resource-efficient body-area networks to realize next generation mobile health monitoring devices. The methodology utilizes contextual bandits to select the most informative sensor combinations during runtime and ignore redundant data for decreasing transmission and computing power in a body area network (BAN). The proposed method has been validated using one of the most common health monitoring applications: cardiac activity monitoring. Solutions from our proposed method are compared against those from related works in terms of classification performance and energy while considering the communication energy consumption. Our final solutions could reach 78.8% AU-PRC on the PTB-XL ECG dataset for cardiac abnormality detection while decreasing the overall energy consumption and computational energy by 3.7× and 4.3×, respectively.
