Zhiying Jiang

Expert-Data Alignment Governs Generation Quality in Decentralized Diffusion Models

Feb 02, 2026

Abstract:Decentralized Diffusion Models (DDMs) route denoising through experts trained independently on disjoint data clusters, which can strongly disagree in their predictions. What governs the quality of generations in such systems? We present the first systematic investigation of this question. A priori, the expectation is that minimizing denoising trajectory sensitivity -- minimizing how perturbations amplify during sampling -- should govern generation quality. We demonstrate that this hypothesis is incorrect and instead observe a stability-quality dissociation. Full ensemble routing, which combines all expert predictions at each step, achieves the most stable sampling dynamics and best numerical convergence while producing the worst generation quality (FID 47.9 vs. 22.6 for sparse Top-2 routing). Instead, we identify expert-data alignment as the governing principle: generation quality depends on routing inputs to experts whose training distribution covers the current denoising state. Across two distinct DDM systems, we validate expert-data alignment using (i) data-cluster distance analysis, confirming sparse routing selects experts with data clusters closest to the current denoising state, (ii) per-expert analysis, showing selected experts produce more accurate predictions than non-selected ones, and (iii) expert disagreement analysis, showing quality degrades when experts disagree. For DDM deployment, our findings establish that routing should prioritize expert-data alignment over numerical stability metrics.

HATIR: Heat-Aware Diffusion for Turbulent Infrared Video Super-Resolution

Jan 08, 2026

Abstract:Infrared video has been of great interest in visual tasks under challenging environments, but often suffers from severe atmospheric turbulence and compression degradation. Existing video super-resolution (VSR) methods either neglect the inherent modality gap between infrared and visible images or fail to restore turbulence-induced distortions. Directly cascading turbulence mitigation (TM) algorithms with VSR methods leads to error propagation and accumulation due to the decoupled modeling of degradation between turbulence and resolution. We introduce HATIR, a Heat-Aware Diffusion for Turbulent InfraRed Video Super-Resolution, which injects heat-aware deformation priors into the diffusion sampling path to jointly model the inverse process of turbulent degradation and structural detail loss. Specifically, HATIR constructs a Phasor-Guided Flow Estimator, rooted in the physical principle that thermally active regions exhibit consistent phasor responses over time, enabling reliable turbulence-aware flow to guide the reverse diffusion process. To ensure the fidelity of structural recovery under nonuniform distortions, a Turbulence-Aware Decoder is proposed to selectively suppress unstable temporal cues and enhance edge-aware feature aggregation via turbulence gating and structure-aware attention. We built FLIR-IVSR, the first dataset for turbulent infrared VSR, comprising paired LR-HR sequences from a FLIR T1050sc camera (1024 × 768) spanning 640 diverse scenes with varying camera and object motion conditions. This encourages future research in infrared VSR. Project page: https://github.com/JZ0606/HATIR

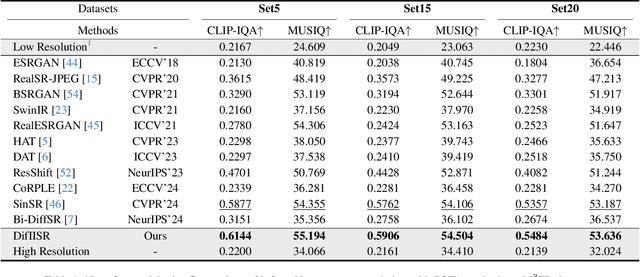

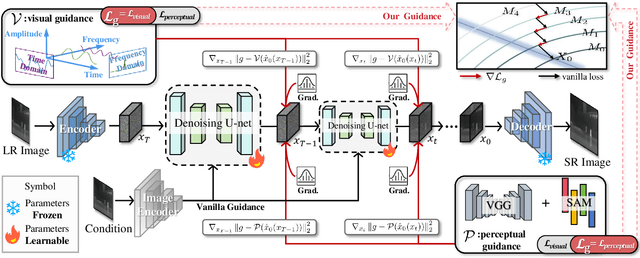

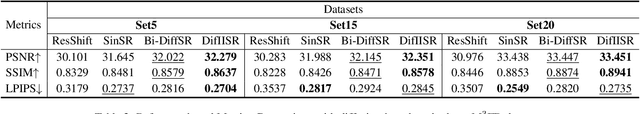

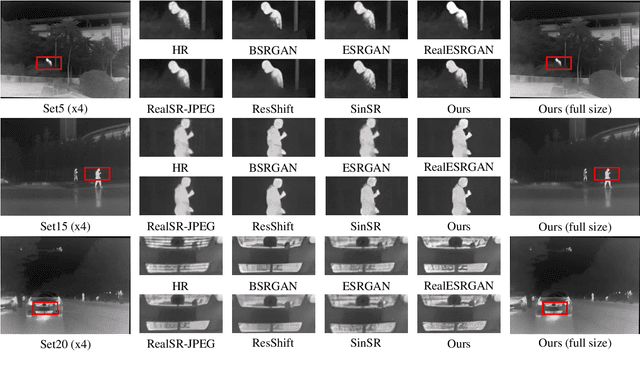

DifIISR: A Diffusion Model with Gradient Guidance for Infrared Image Super-Resolution

Mar 03, 2025

Abstract:Infrared imaging is essential for autonomous driving and robotic operations as a supportive modality due to its reliable performance in challenging environments. Despite its popularity, the limitations of infrared cameras, such as low spatial resolution and complex degradations, consistently challenge imaging quality and subsequent visual tasks. Hence, infrared image super-resolution (IISR) has been developed to address this challenge. While recent developments in diffusion models have greatly advanced this field, current methods either ignore the unique modal characteristics of infrared imaging or overlook the machine perception requirements. To bridge these gaps, we propose DifIISR, an infrared image super-resolution diffusion model optimized for visual quality and perceptual performance. Our approach achieves task-based guidance for diffusion by injecting gradients derived from visual and perceptual priors into the noise during the reverse process. Specifically, we introduce an infrared thermal spectrum distribution regulation to preserve visual fidelity, ensuring that the reconstructed infrared images closely align with high-resolution images by matching their frequency components. Subsequently, we incorporate various visual foundational models as the perceptual guidance for downstream visual tasks, infusing generalizable perceptual features beneficial for detection and segmentation. As a result, our approach achieves superior visual results while attaining state-of-the-art downstream task performance. Code is available at https://github.com/zirui0625/DifIISR

Infrared and Visible Image Fusion: From Data Compatibility to Task Adaption

Jan 18, 2025

Abstract:Infrared-visible image fusion (IVIF) is a critical task in computer vision, aimed at integrating the unique features of both infrared and visible spectra into a unified representation. Since 2018, the field has entered the deep learning era, with an increasing variety of approaches introducing a range of networks and loss functions to enhance visual performance. However, challenges such as data compatibility, perception accuracy, and efficiency remain. Unfortunately, there is a lack of recent comprehensive surveys that address this rapidly expanding domain. This paper fills that gap by providing a thorough survey covering a broad range of topics. We introduce a multi-dimensional framework to elucidate common learning-based IVIF methods, from visual enhancement strategies to data compatibility and task adaptability. We also present a detailed analysis of these approaches, accompanied by a lookup table clarifying their core ideas. Furthermore, we summarize performance comparisons, both quantitatively and qualitatively, focusing on registration, fusion, and subsequent high-level tasks. Beyond technical analysis, we discuss potential future directions and open issues in this area. For further details, visit our GitHub repository: https://github.com/RollingPlain/IVIF_ZOO.

SeFENet: Robust Deep Homography Estimation via Semantic-Driven Feature Enhancement

Dec 09, 2024

Abstract:Images captured in harsh environments often exhibit blurred details, reduced contrast, and color distortion, which hinder feature detection and matching, thereby affecting the accuracy and robustness of homography estimation. While visual enhancement can improve contrast and clarity, it may introduce visual-tolerant artifacts that obscure the structural integrity of images. Considering the resilience of semantic information against environmental interference, we propose a semantic-driven feature enhancement network for robust homography estimation, dubbed SeFENet. Concretely, we first introduce an innovative hierarchical scale-aware module to expand the receptive field by aggregating multi-scale information, thereby effectively extracting image features under diverse harsh conditions. Subsequently, we propose a semantic-guided constraint module combined with a high-level perceptual framework to achieve degradation tolerance with semantic features. A meta-learning-based training strategy is introduced to mitigate the disparity between semantic and structural features. By internal-external alternating optimization, the proposed network achieves implicit semantic-wise feature enhancement, thereby improving the robustness of homography estimation in adverse environments by strengthening the local feature comprehension and context information extraction. Experimental results under both normal and harsh conditions demonstrate that SeFENet significantly outperforms SOTA methods, reducing point match error by at least 41% on the large-scale datasets.

HUPE: Heuristic Underwater Perceptual Enhancement with Semantic Collaborative Learning

Nov 29, 2024

Abstract:Underwater images are often affected by light refraction and absorption, reducing visibility and interfering with subsequent applications. Existing underwater image enhancement methods primarily focus on improving visual quality while overlooking practical implications. To strike a balance between visual quality and application, we propose a heuristic invertible network for underwater perception enhancement, dubbed HUPE, which enhances visual quality and demonstrates flexibility in handling other downstream tasks. Specifically, we introduce an information-preserving reversible transformation with embedded Fourier transform to establish a bidirectional mapping between underwater images and their clear counterparts. Additionally, a heuristic prior is incorporated into the enhancement process to better capture scene information. To further bridge the feature gap between vision-based enhancement images and application-oriented images, a semantic collaborative learning module is applied in the joint optimization process of the visual enhancement task and the downstream task, which guides the proposed enhancement model to extract more task-oriented semantic features while obtaining visually pleasing images. Extensive experiments, both quantitative and qualitative, demonstrate the superiority of our HUPE over state-of-the-art methods. The source code is available at https://github.com/ZengxiZhang/HUPE.

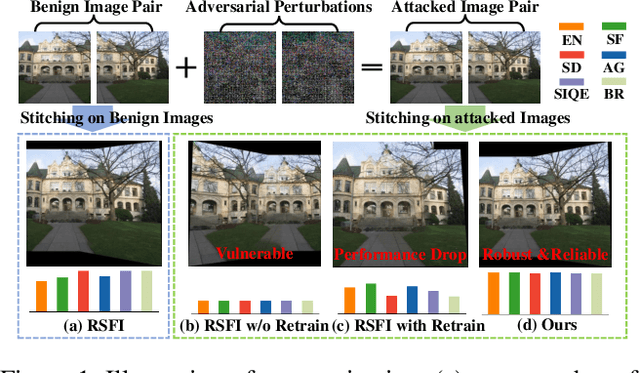

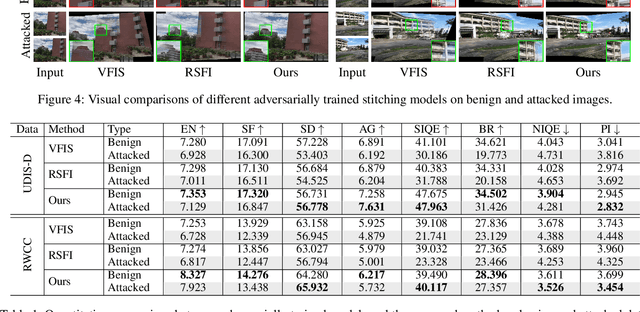

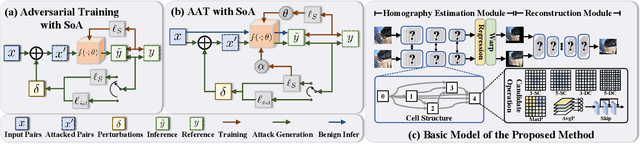

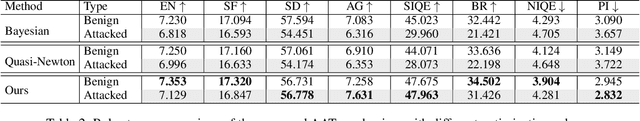

Towards Robust Image Stitching: An Adaptive Resistance Learning against Compatible Attacks

Feb 25, 2024

Abstract:Image stitching seamlessly integrates images captured from varying perspectives into a single wide field-of-view image. Such integration not only broadens the captured scene but also augments holistic perception in computer vision applications. Given a pair of captured images, subtle perturbations and distortions which go unnoticed by the human visual system tend to attack the correspondence matching, impairing the performance of image stitching algorithms. In light of this challenge, this paper presents the first attempt to improve the robustness of image stitching against adversarial attacks. Specifically, we introduce a stitching-oriented attack (SoA), tailored to amplify the alignment loss within overlapping regions, thereby targeting the feature matching procedure. To establish an attack-resistant model, we delve into the robustness of stitching architecture and develop an adaptive adversarial training (AAT) to balance attack resistance with stitching precision. In this way, we narrow the gap between the routine adversarial training and benign models, ensuring resilience without quality compromise. Comprehensive evaluation across real-world and synthetic datasets validates the deterioration of SoA on stitching performance. Furthermore, AAT emerges as a more robust solution against adversarial perturbations, delivering superior stitching results. Code is available at: https://github.com/Jzy2017/TRIS.

From Text to Pixels: A Context-Aware Semantic Synergy Solution for Infrared and Visible Image Fusion

Dec 31, 2023

Abstract:With the rapid progression of deep learning technologies, multi-modality image fusion has become increasingly prevalent in object detection tasks. Despite its popularity, the inherent disparities in how different sources depict scene content make fusion a challenging problem. Current fusion methodologies identify shared characteristics between the two modalities and integrate them within this shared domain using either iterative optimization or deep learning architectures, which often neglect the intricate semantic relationships between modalities, resulting in a superficial understanding of inter-modal connections and, consequently, suboptimal fusion outcomes. To address this, we introduce a text-guided multi-modality image fusion method that leverages the high-level semantics from textual descriptions to integrate semantics from infrared and visible images. This method capitalizes on the complementary characteristics of diverse modalities, bolstering both the accuracy and robustness of object detection. The codebook is utilized to enhance a streamlined and concise depiction of the fused intra- and inter-domain dynamics, fine-tuned for optimal performance in detection tasks. We present a bilevel optimization strategy that establishes a nexus between the joint problem of fusion and detection, optimizing both processes concurrently. Furthermore, we introduce the first dataset of paired infrared and visible images accompanied by text prompts, paving the way for future research. Extensive experiments on several datasets demonstrate that our method not only produces visually superior fusion results but also achieves a higher detection mAP than existing methods, achieving state-of-the-art results.

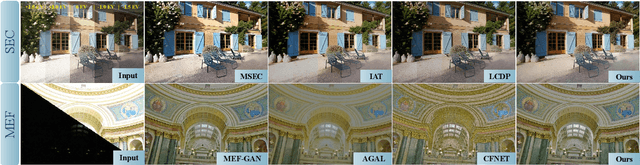

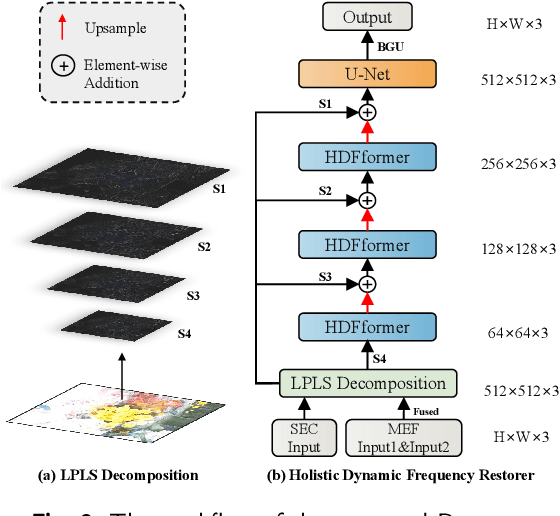

Holistic Dynamic Frequency Transformer for Image Fusion and Exposure Correction

Sep 03, 2023

Abstract:The correction of exposure-related issues is a pivotal component in enhancing the quality of images, offering substantial implications for various computer vision tasks. Historically, most methodologies have predominantly utilized spatial domain recovery, offering limited consideration to the potential of the frequency domain. Additionally, there has been a lack of a unified perspective towards low-light enhancement, exposure correction, and multi-exposure fusion, complicating and impeding the optimization of image processing. In response to these challenges, this paper proposes a novel methodology that leverages the frequency domain to improve and unify the handling of exposure correction tasks. Our method introduces Holistic Frequency Attention and Dynamic Frequency Feed-Forward Network, which replace conventional correlation computation in the spatial domain. They form a foundational building block that facilitates a U-shaped Holistic Dynamic Frequency Transformer as a filter to extract global information and dynamically select important frequency bands for image restoration. Complementing this, we employ a Laplacian pyramid to decompose images into distinct frequency bands, followed by multiple restorers, each tuned to recover specific frequency-band information. The pyramid fusion allows a more detailed and nuanced image restoration process. Ultimately, our structure unifies the three tasks of low-light enhancement, exposure correction, and multi-exposure fusion, enabling comprehensive treatment of all classical exposure errors. Benchmarking on mainstream datasets for these tasks, our proposed method achieves state-of-the-art results, paving the way for more sophisticated and unified solutions in exposure correction.

Dual Adversarial Resilience for Collaborating Robust Underwater Image Enhancement and Perception

Sep 03, 2023

Abstract:Due to the uneven scattering and absorption of different light wavelengths in aquatic environments, underwater images suffer from low visibility and clear color deviations. With the advancement of autonomous underwater vehicles, extensive research has been conducted on learning-based underwater enhancement algorithms. These works can generate visually pleasing enhanced images and mitigate the adverse effects of degraded images on subsequent perception tasks. However, learning-based methods are inherently fragile under adversarial attacks, which can significantly disrupt their results. In this work, we introduce a collaborative adversarial resilience network, dubbed CARNet, for underwater image enhancement and subsequent detection tasks. Concretely, we first introduce an invertible network with strong perturbation-perceptual abilities to isolate attacks from underwater images, preventing interference with image enhancement and perceptual tasks. Furthermore, we propose a synchronized attack training strategy with both visual-driven and perception-driven attacks, enabling the network to discern and remove various types of attacks. Additionally, we incorporate an attack pattern discriminator to heighten the robustness of the network against different attacks. Extensive experiments demonstrate that the proposed method outputs visually appealing enhancement images and achieves, on average, 6.71% higher detection mAP than state-of-the-art methods.
