Gehui Li

Multi-Agent Image Restoration

Mar 12, 2025

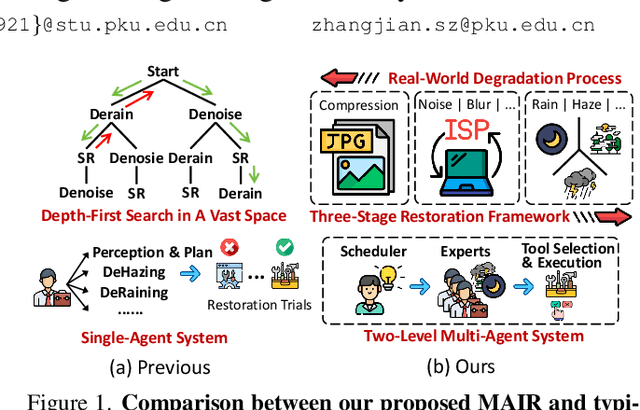

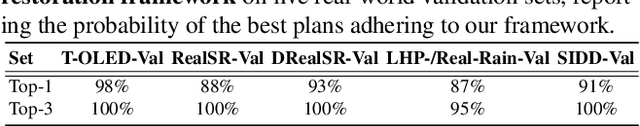

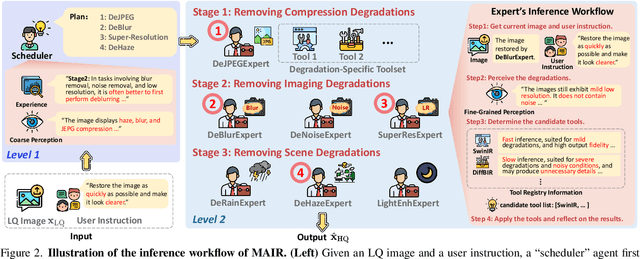

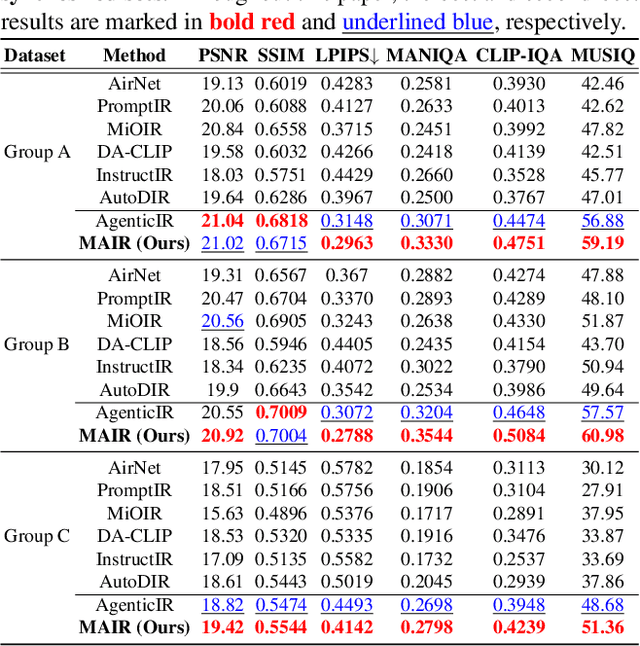

Abstract: Image restoration (IR) is challenging due to the complexity of real-world degradations. While many specialized and all-in-one IR models have been developed, they fail to effectively handle complex, mixed degradations. Recent agentic methods, RestoreAgent and AgenticIR, leverage intelligent, autonomous workflows to alleviate this issue, yet they suffer from suboptimal results and inefficiency due to resource-intensive fine-tuning and to ineffective searches and tool-execution trials for satisfactory outputs. In this paper, we propose MAIR, a novel Multi-Agent approach for complex IR problems. We introduce a real-world degradation prior that categorizes degradations into three types: (1) scene, (2) imaging, and (3) compression. These are observed to occur sequentially in the real world, so we reverse them in the opposite order. Built upon this three-stage restoration framework, MAIR emulates a team of collaborative human specialists, including a "scheduler" for overall planning and multiple "experts" dedicated to specific degradations. This design minimizes search space and trial efforts, improving image quality while reducing inference costs. In addition, a registry mechanism is introduced to enable easy integration of new tools. Experiments on both synthetic and real-world datasets show that the proposed MAIR achieves competitive performance and improved efficiency over the previous agentic IR system. Code and models will be made available.
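The reverse-order planning and the registry idea can be illustrated with a minimal sketch. Only the three stage names and their ordering come from the abstract; the `register`, `plan`, and `restore` helpers, the degradation names, and the string-based placeholder tools are hypothetical and are not taken from MAIR's code.

```python
from typing import Callable, Dict, List, Tuple

# Degradations are described as occurring scene -> imaging -> compression,
# so restoration proceeds in the opposite order.
RESTORE_ORDER = ["compression", "imaging", "scene"]

# Hypothetical registry: degradation name -> (stage, restoration tool).
TOOLS: Dict[str, Tuple[str, Callable[[str], str]]] = {}


def register(degradation: str, stage: str):
    """Decorator that registers a tool for one degradation in one stage."""
    if stage not in RESTORE_ORDER:
        raise ValueError(f"unknown stage: {stage}")

    def wrap(fn: Callable[[str], str]) -> Callable[[str], str]:
        TOOLS[degradation] = (stage, fn)
        return fn

    return wrap


# Placeholder tools: they only append a tag so the call order is visible.
@register("haze", stage="scene")
def dehaze(image: str) -> str:
    return image + "|dehaze"


@register("noise", stage="imaging")
def denoise(image: str) -> str:
    return image + "|denoise"


@register("jpeg_artifacts", stage="compression")
def dejpeg(image: str) -> str:
    return image + "|dejpeg"


def plan(detected: List[str]) -> List[str]:
    """Sort detected degradations so the last-occurring stage is undone first."""
    return sorted(detected, key=lambda d: RESTORE_ORDER.index(TOOLS[d][0]))


def restore(image: str, detected: List[str]) -> str:
    """Apply the registered tools in planned order."""
    for degradation in plan(detected):
        image = TOOLS[degradation][1](image)
    return image
```

In this sketch, adding a new tool only requires another `@register(...)` function; the planner itself does not change.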

OSMamba: Omnidirectional Spectral Mamba with Dual-Domain Prior Generator for Exposure Correction

Nov 22, 2024

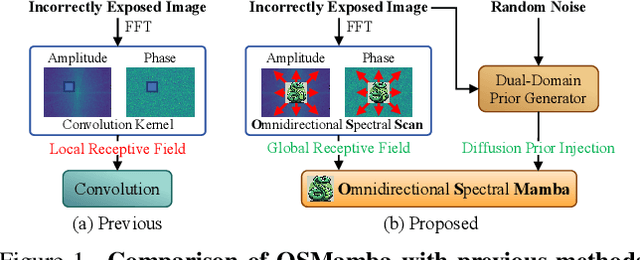

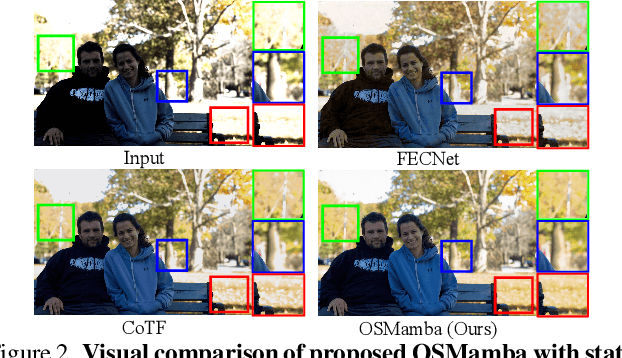

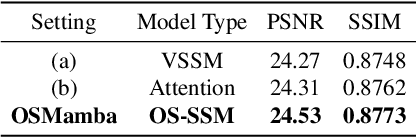

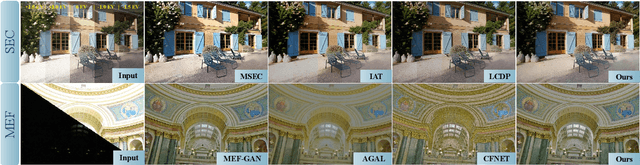

Abstract: Exposure correction is a fundamental problem in computer vision and image processing. Recently, frequency domain-based methods have achieved impressive improvement, yet they still struggle with complex real-world scenarios under extreme exposure conditions. This is due to the local convolutional receptive fields failing to model long-range dependencies in the spectrum, and the non-generative learning paradigm being inadequate for retrieving lost details from severely degraded regions. In this paper, we propose Omnidirectional Spectral Mamba (OSMamba), a novel exposure correction network that incorporates the advantages of state space models and generative diffusion models to address these limitations. Specifically, OSMamba introduces an omnidirectional spectral scanning mechanism that adapts Mamba to the frequency domain to capture comprehensive long-range dependencies in both the amplitude and phase spectra of deep image features, hence enhancing illumination correction and structure recovery. Furthermore, we develop a dual-domain prior generator that learns from well-exposed images to generate a degradation-free diffusion prior containing correct information about severely under- and over-exposed regions for better detail restoration. Extensive experiments on multiple-exposure and mixed-exposure datasets demonstrate that the proposed OSMamba achieves state-of-the-art performance both quantitatively and qualitatively.
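As background for the amplitude/phase split mentioned above, the following toy sketch shows that a uniform brightness gain changes only the amplitude spectrum and leaves the phase spectrum untouched. It is not OSMamba's scanning mechanism: it uses a naive 1-D DFT on a hand-written row of pixel values, whereas the paper works on spectra of deep 2-D features, and real exposure errors are generally not uniform gains. The `dft` and `amplitude_phase` helpers are illustrative names.

```python
import cmath
from typing import List, Tuple


def dft(signal: List[float]) -> List[complex]:
    """Naive O(n^2) discrete Fourier transform of a real 1-D signal."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]


def amplitude_phase(signal: List[float]) -> Tuple[List[float], List[float]]:
    """Split the spectrum into per-frequency amplitude and phase."""
    spectrum = dft(signal)
    return [abs(c) for c in spectrum], [cmath.phase(c) for c in spectrum]


row = [0.1, 0.4, 0.35, 0.8, 0.6, 0.2, 0.9, 0.5]  # toy 1-D "image row"
dark = [0.5 * v for v in row]                    # uniform under-exposure

amp, pha = amplitude_phase(row)
amp_dark, pha_dark = amplitude_phase(dark)
# amp_dark is amp scaled by 0.5, while pha_dark matches pha.
```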

Adversarial Diffusion Compression for Real-World Image Super-Resolution

Nov 20, 2024

Abstract: Real-world image super-resolution (Real-ISR) aims to reconstruct high-resolution images from low-resolution inputs degraded by complex, unknown processes. While many Stable Diffusion (SD)-based Real-ISR methods have achieved remarkable success, their slow, multi-step inference hinders practical deployment. Recent SD-based one-step networks like OSEDiff and S3Diff alleviate this issue but still incur high computational costs due to their reliance on large pretrained SD models. This paper proposes a novel Real-ISR method, AdcSR, by distilling the one-step diffusion network OSEDiff into a streamlined diffusion-GAN model under our Adversarial Diffusion Compression (ADC) framework. We meticulously examine the modules of OSEDiff, categorizing them into two types: (1) Removable (VAE encoder, prompt extractor, text encoder, etc.) and (2) Prunable (denoising UNet and VAE decoder). Since direct removal and pruning can degrade the model's generation capability, we pretrain our pruned VAE decoder to restore its ability to decode images and employ adversarial distillation to compensate for performance loss. This ADC-based diffusion-GAN hybrid design reduces inference time by 73%, computation by 78%, and parameter count by 74%, while preserving the model's generation capability. Experiments show that our proposed AdcSR achieves competitive recovery quality on both synthetic and real-world datasets, offering up to 9.3$\times$ speedup over previous one-step diffusion-based methods. Code and models will be made available.

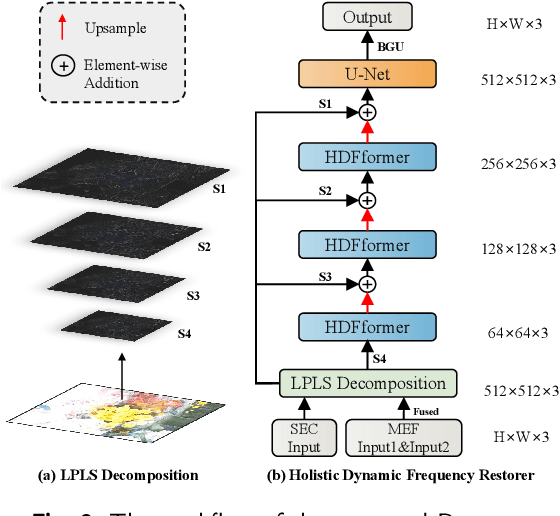

Holistic Dynamic Frequency Transformer for Image Fusion and Exposure Correction

Sep 03, 2023

Abstract: The correction of exposure-related issues is a pivotal component in enhancing the quality of images, offering substantial implications for various computer vision tasks. Most prior methods rely on spatial-domain recovery and give limited consideration to the potential of the frequency domain. Additionally, there has been a lack of a unified perspective towards low-light enhancement, exposure correction, and multi-exposure fusion, complicating and impeding the optimization of image processing. In response to these challenges, this paper proposes a novel methodology that leverages the frequency domain to improve and unify the handling of exposure correction tasks. Our method introduces Holistic Frequency Attention and Dynamic Frequency Feed-Forward Network, which replace conventional correlation computation in the spatial domain. They form a foundational building block that facilitates a U-shaped Holistic Dynamic Frequency Transformer as a filter to extract global information and dynamically select important frequency bands for image restoration. Complementing this, we employ a Laplacian pyramid to decompose images into distinct frequency bands, followed by multiple restorers, each tuned to recover specific frequency-band information. The pyramid fusion allows a more detailed and nuanced image restoration process. Ultimately, our structure unifies the three tasks of low-light enhancement, exposure correction, and multi-exposure fusion, enabling comprehensive treatment of all classical exposure errors. Benchmarking on mainstream datasets for these tasks, our proposed method achieves state-of-the-art results, paving the way for more sophisticated and unified solutions in exposure correction.
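The Laplacian pyramid step can be sketched on a 1-D signal as follows. This is a simplified illustration, not the paper's implementation: it uses a box filter (pair averaging) where a Gaussian kernel is the usual choice, it assumes the signal length is divisible by `2 ** levels`, and the per-band restorers are omitted, so each band is passed through unchanged before fusion. All function names are hypothetical.

```python
from typing import List


def downsample(signal: List[float]) -> List[float]:
    """Halve the length by averaging neighbouring pairs (box filter)."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]


def upsample(signal: List[float]) -> List[float]:
    """Double the length by repeating each sample."""
    return [v for v in signal for _ in range(2)]


def build_pyramid(signal: List[float], levels: int) -> List[List[float]]:
    """Return [detail_0, ..., detail_{levels-1}, low_frequency_residual]."""
    bands = []
    current = signal
    for _ in range(levels):
        coarse = downsample(current)
        predicted = upsample(coarse)
        # Detail band: what the coarser level cannot represent.
        bands.append([c - p for c, p in zip(current, predicted)])
        current = coarse
    bands.append(current)
    return bands


def reconstruct(bands: List[List[float]]) -> List[float]:
    """Fuse the bands back into a signal, coarsest level first."""
    current = bands[-1]
    for detail in reversed(bands[:-1]):
        current = [u + d for u, d in zip(upsample(current), detail)]
    return current


signal = [0.1, 0.4, 0.35, 0.8, 0.6, 0.2, 0.9, 0.5]
bands = build_pyramid(signal, levels=2)
restored = reconstruct(bands)  # equals `signal` up to floating-point rounding
```

In a full pipeline, each entry of `bands` would be handed to a restorer tuned for that band before `reconstruct` is called.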

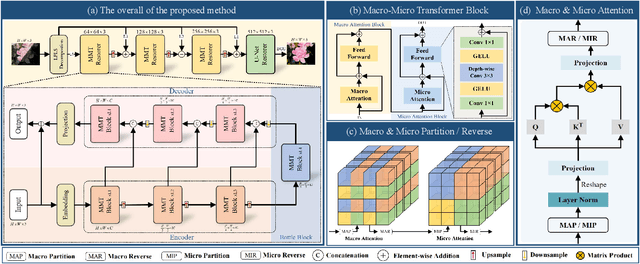

Fearless Luminance Adaptation: A Macro-Micro-Hierarchical Transformer for Exposure Correction

Sep 02, 2023

Abstract: Photographs taken with less-than-ideal exposure settings often display poor visual quality. Since the correction procedures vary significantly, it is difficult for a single neural network to handle all exposure problems. Moreover, the inherent limitations of convolutions hinder the model's ability to restore faithful color or details in extremely over-/under-exposed regions. To overcome these limitations, we propose a Macro-Micro-Hierarchical transformer, which consists of a macro attention to capture long-range dependencies, a micro attention to extract local features, and a hierarchical structure for coarse-to-fine correction. Specifically, the complementary macro-micro attention designs enhance locality while allowing global interactions. The hierarchical structure enables the network to correct exposure errors of different scales layer by layer. Furthermore, we propose a contrast constraint and couple it seamlessly in the loss function, where the corrected image is pulled towards the positive sample and pushed away from the dynamically generated negative samples. Thus the remaining color distortion and loss of detail can be removed. We also extend our method as an image enhancer for low-light face recognition and low-light semantic segmentation. Experiments demonstrate that our approach obtains more attractive results than state-of-the-art methods quantitatively and qualitatively.
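The pull/push behaviour of a contrast constraint can be sketched as a ratio of distances. The abstract does not give the formula, so the ratio form, the pixel-space L1 distance, the `eps` term, and the function names below are all assumptions made for illustration; the negatives are simply passed in rather than generated dynamically.

```python
from typing import List


def l1_distance(a: List[float], b: List[float]) -> float:
    """Mean absolute difference between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def contrast_loss(
    output: List[float],
    positive: List[float],
    negatives: List[List[float]],
    eps: float = 1e-7,
) -> float:
    """Small when `output` is near the positive and far from the negatives."""
    pull = l1_distance(output, positive)
    push = sum(l1_distance(output, n) for n in negatives) / len(negatives)
    return pull / (push + eps)


positive = [0.5, 0.6]                 # stand-in for a well-exposed reference
negatives = [[0.1, 0.1], [0.9, 0.9]]  # stand-ins for badly exposed samples

close = contrast_loss([0.5, 0.55], positive, negatives)
far = contrast_loss([0.2, 0.2], positive, negatives)
# `close` is smaller than `far`: the loss rewards moving toward the positive.
```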
