Zhixin Yan

TextSplat: Text-Guided Semantic Fusion for Generalizable Gaussian Splatting

Apr 13, 2025

Abstract:Recent advancements in Generalizable Gaussian Splatting have enabled robust 3D reconstruction from sparse input views by utilizing feed-forward Gaussian Splatting models, achieving superior cross-scene generalization. However, while many methods focus on geometric consistency, they often neglect the potential of text-driven guidance to enhance semantic understanding, which is crucial for accurately reconstructing fine-grained details in complex scenes. To address this limitation, we propose TextSplat--the first text-driven Generalizable Gaussian Splatting framework. By employing a text-guided fusion of diverse semantic cues, our framework learns robust cross-modal feature representations that improve the alignment of geometric and semantic information, producing high-fidelity 3D reconstructions. Specifically, our framework employs three parallel modules to obtain complementary representations: the Diffusion Prior Depth Estimator for accurate depth information, the Semantic Aware Segmentation Network for detailed semantic information, and the Multi-View Interaction Network for refined cross-view features. Then, in the Text-Guided Semantic Fusion Module, these representations are integrated via the text-guided and attention-based feature aggregation mechanism, resulting in enhanced 3D Gaussian parameters enriched with detailed semantic cues. Experimental results on various benchmark datasets demonstrate improved performance compared to existing methods across multiple evaluation metrics, validating the effectiveness of our framework. The code will be publicly available.

MSSDA: Multi-Sub-Source Adaptation for Diabetic Foot Neuropathy Recognition

Sep 21, 2024

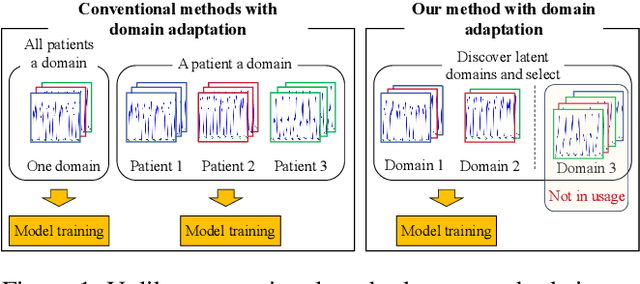

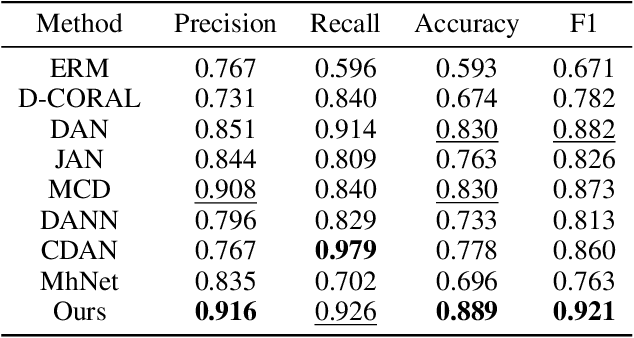

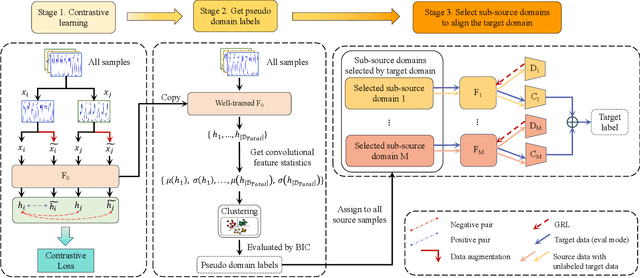

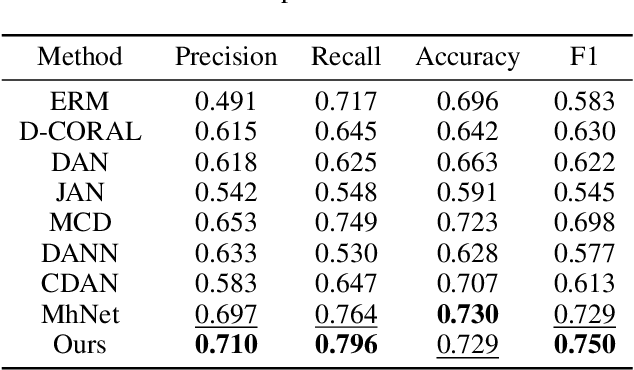

Abstract:Diabetic foot neuropathy (DFN) is a critical factor leading to diabetic foot ulcers, which are among the most common and severe complications of diabetes mellitus (DM) and are associated with high risks of amputation and mortality. Despite its significance, existing datasets do not directly derive from plantar data and lack continuous, long-term foot-specific information. To advance DFN research, we have collected a novel dataset comprising continuous plantar pressure data to recognize diabetic foot neuropathy. This dataset includes data from 94 DM patients with DFN and 41 DM patients without DFN. Moreover, traditional methods divide datasets by individuals, potentially leading to significant domain discrepancies in some feature spaces due to the absence of mid-domain data. In this paper, we propose an effective domain adaptation method to address this problem. We split the dataset based on convolutional feature statistics and select appropriate sub-source domains to enhance efficiency and avoid negative transfer. We then align the distributions of each source and target domain pair in specific feature spaces to minimize the domain gap. Comprehensive results validate the effectiveness of our method on both the newly proposed dataset for DFN recognition and an existing dataset.

PhysMamba: Leveraging Dual-Stream Cross-Attention SSD for Remote Physiological Measurement

Aug 02, 2024

Abstract:Remote Photoplethysmography (rPPG) is a non-contact technique for extracting physiological signals from facial videos, used in applications like emotion monitoring, medical assistance, and anti-face spoofing. Unlike controlled laboratory settings, real-world environments often contain motion artifacts and noise, affecting the performance of existing methods. To address this, we propose PhysMamba, a dual-stream time-frequency interactive model based on Mamba. PhysMamba integrates the state-of-the-art Mamba-2 model and employs a dual-stream architecture to learn diverse rPPG features, enhancing robustness in noisy conditions. Additionally, we designed the Cross-Attention State Space Duality (CASSD) module to improve information exchange and feature complementarity between the two streams. We validated PhysMamba using PURE, UBFC-rPPG and MMPD. Experimental results show that PhysMamba achieves state-of-the-art performance across various scenarios, particularly in complex environments, demonstrating its potential in practical remote heart rate monitoring applications.

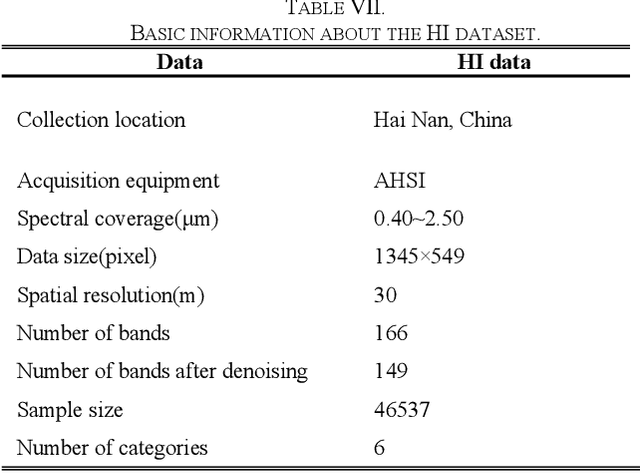

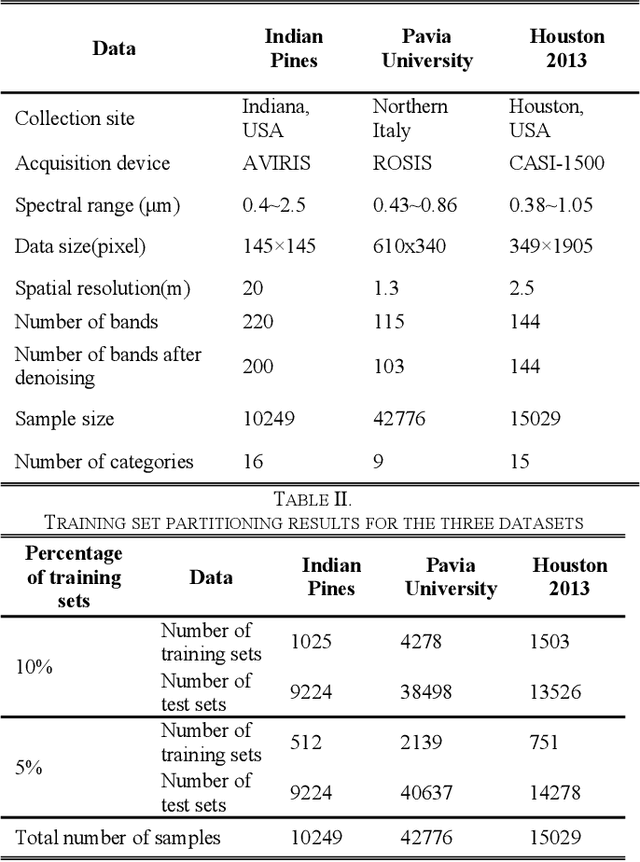

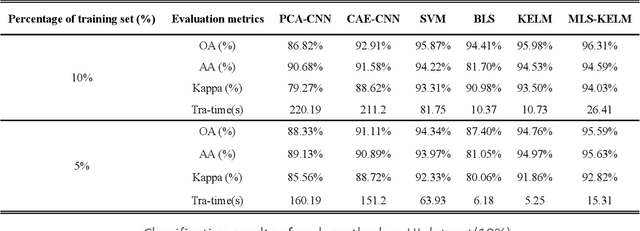

Kernel Extreme Learning Machine Optimized by the Sparrow Search Algorithm for Hyperspectral Image Classification

Apr 03, 2022

Abstract:To improve the classification performance and generalization ability of the hyperspectral image classification algorithm, this paper uses Multi-Scale Total Variation (MSTV) to extract the spectral features, local binary pattern (LBP) to extract spatial features, and feature superposition to obtain the fused features of hyperspectral images. A new swarm intelligence optimization method with high convergence and strong global search capability, the Sparrow Search Algorithm (SSA), is used to optimize the kernel parameters and regularization coefficients of the Kernel Extreme Learning Machine (KELM). In summary, a multiscale fusion feature hyperspectral image classification method (MLS-KELM) is proposed in this paper. The Indian Pines, Pavia University and Houston 2013 datasets were selected to validate the classification performance of MLS-KELM, and the method was applied to ZY1-02D hyperspectral data. The experimental results show that MLS-KELM has better classification performance and generalization ability compared with other popular classification methods, and MLS-KELM shows its strong robustness in the small sample case.
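For readers unfamiliar with KELM, the following is a minimal sketch of the standard closed-form kernel extreme learning machine with an RBF kernel. It is not the authors' MLS-KELM code: the function names, the RBF kernel choice, and the toy data are assumptions made for illustration. It shows where the two hyperparameters named in the abstract enter: the kernel parameter (`gamma` here) and the regularization coefficient (`C` here). An optimizer such as SSA would search over this pair; that search is not shown.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """RBF kernel matrix between the rows of A and the rows of B."""
    d2 = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(d2, 0.0))

def kelm_fit(X, y, n_classes, gamma, C):
    """Solve (K + I / C) beta = T for the output weights beta."""
    T = np.eye(n_classes)[y]                 # one-hot targets
    K = rbf_kernel(X, X, gamma)
    return np.linalg.solve(K + np.eye(len(X)) / C, T)

def kelm_predict(X_train, beta, X_new, gamma):
    """Predict class indices for X_new using the training kernel expansion."""
    return (rbf_kernel(X_new, X_train, gamma) @ beta).argmax(axis=1)

# Toy data (hypothetical): two well-separated clusters.
X = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]])
y = np.array([0, 0, 1, 1])
beta = kelm_fit(X, y, n_classes=2, gamma=1.0, C=100.0)
pred = kelm_predict(X, beta, np.array([[0.2, 0.5], [5.1, 5.4]]), gamma=1.0)
```

A hyperparameter search would wrap `kelm_fit` and `kelm_predict` in a validation loop and score each candidate `(gamma, C)` pair.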

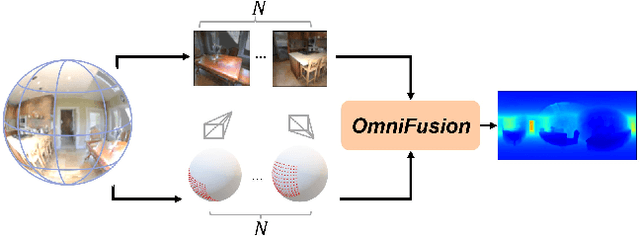

OmniFusion: 360 Monocular Depth Estimation via Geometry-Aware Fusion

Mar 29, 2022

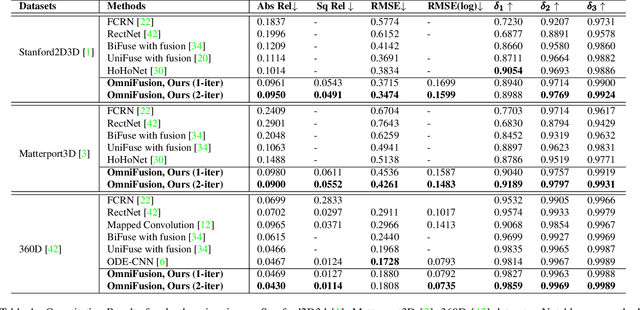

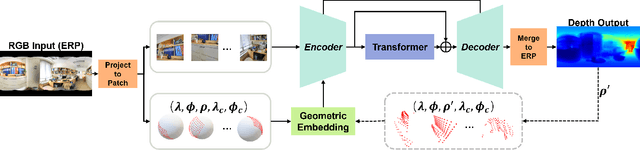

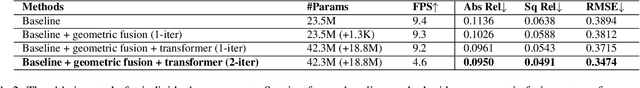

Abstract:A well-known challenge in applying deep-learning methods to omnidirectional images is spherical distortion. In dense regression tasks such as depth estimation, where structural details are required, using a vanilla CNN layer on the distorted 360 image results in undesired information loss. In this paper, we propose a 360 monocular depth estimation pipeline, OmniFusion, to tackle the spherical distortion issue. Our pipeline transforms a 360 image into less-distorted perspective patches (i.e. tangent images) to obtain patch-wise predictions via CNN, and then merges the patch-wise results for final output. To handle the discrepancy between patch-wise predictions, which is a major issue affecting the merging quality, we propose a new framework with the following key components. First, we propose a geometry-aware feature fusion mechanism that combines 3D geometric features with 2D image features to compensate for the patch-wise discrepancy. Second, we employ the self-attention-based transformer architecture to conduct a global aggregation of patch-wise information, which further improves the consistency. Last, we introduce an iterative depth refinement mechanism to further refine the estimated depth based on the more accurate geometric features. Experiments show that our method greatly mitigates the distortion issue and achieves state-of-the-art performance on several 360 monocular depth estimation benchmark datasets.
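As an illustration of the tangent-image idea, the sketch below maps points on a tangent plane back to the sphere with the inverse gnomonic projection, then to equirectangular pixel coordinates. The abstract does not state which projection or conventions OmniFusion uses, so the gnomonic choice, the function names, and the pixel convention (longitude 0 at the image center, latitude increasing upward) are all assumptions.

```python
import numpy as np

def tangent_to_sphere(x, y, lon0, lat0):
    """Inverse gnomonic projection.

    (x, y) are coordinates on the plane tangent to the unit sphere at
    (lon0, lat0), in radians. Returns (lon, lat) on the sphere.
    """
    norm = np.sqrt(1.0 + x ** 2 + y ** 2)
    lat = np.arcsin((np.sin(lat0) + y * np.cos(lat0)) / norm)
    lon = lon0 + np.arctan2(x, np.cos(lat0) - y * np.sin(lat0))
    return lon, lat

def sphere_to_equirect(lon, lat, width, height):
    """Map (lon, lat) to equirectangular pixel coordinates (assumed convention)."""
    u = (lon / (2.0 * np.pi) + 0.5) * width
    v = (0.5 - lat / np.pi) * height
    return u, v

# Sampling grid for one patch centered on the equator with a 90-degree field of view.
half = np.tan(np.deg2rad(45.0))
xs, ys = np.meshgrid(np.linspace(-half, half, 5), np.linspace(-half, half, 5))
lon, lat = tangent_to_sphere(xs, ys, lon0=0.0, lat0=0.0)
u, v = sphere_to_equirect(lon, lat, width=1024, height=512)
```

Sampling the 360 image at `(u, v)` would yield one perspective patch; repeating this for a set of tangent points covers the sphere.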

PanoDepth: A Two-Stage Approach for Monocular Omnidirectional Depth Estimation

Feb 02, 2022

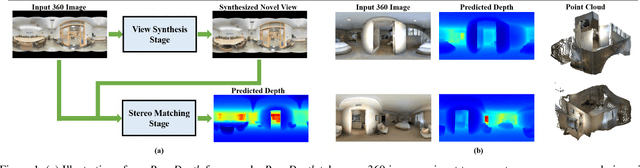

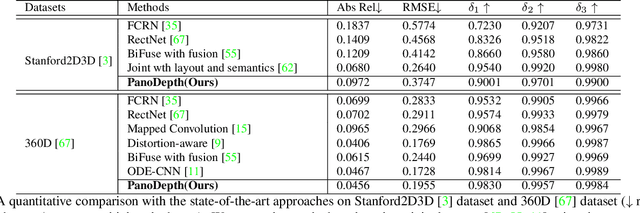

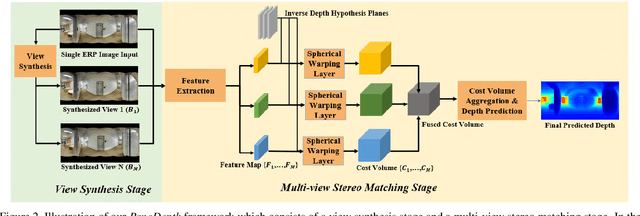

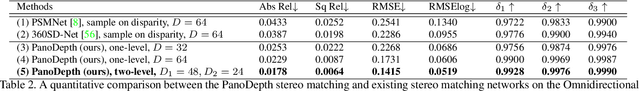

Abstract:Omnidirectional 3D information is essential for a wide range of applications such as Virtual Reality, Autonomous Driving, Robotics, etc. In this paper, we propose a novel, model-agnostic, two-stage pipeline for omnidirectional monocular depth estimation. Our proposed framework PanoDepth takes one 360 image as input, produces one or more synthesized views in the first stage, and feeds the original image and the synthesized images into the subsequent stereo matching stage. In the second stage, we propose a differentiable Spherical Warping Layer to handle omnidirectional stereo geometry efficiently and effectively. By utilizing the explicit stereo-based geometric constraints in the stereo matching stage, PanoDepth can generate dense high-quality depth. We conducted extensive experiments and ablation studies to evaluate PanoDepth with both the full pipeline as well as the individual modules in each stage. Our results show that PanoDepth outperforms the state-of-the-art approaches by a large margin for 360 monocular depth estimation.

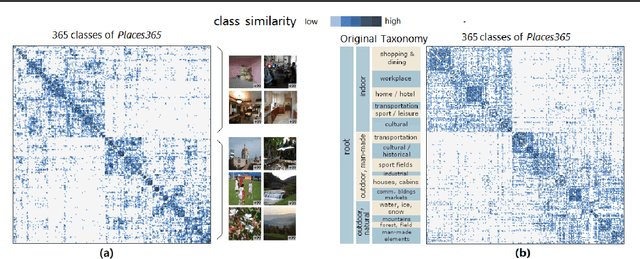

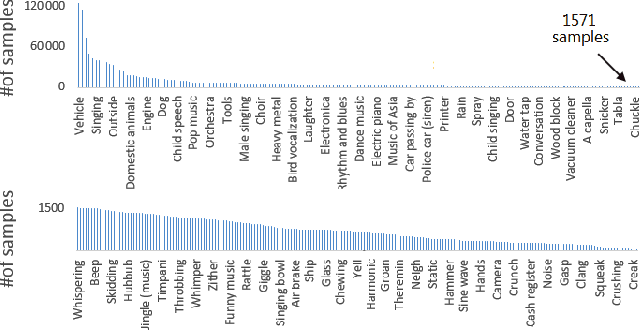

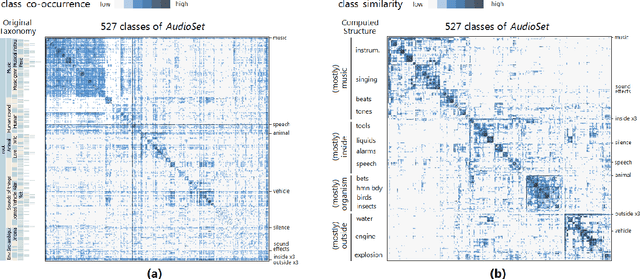

Visualizing Classification Structure in Deep Neural Networks

Jul 12, 2020

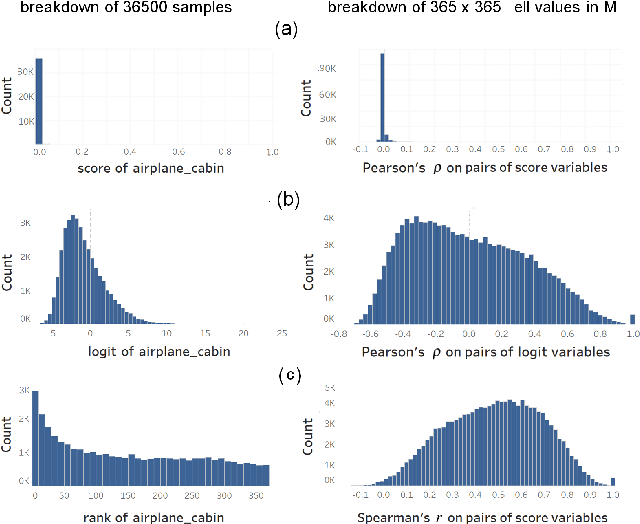

Abstract:We propose a measure to compute class similarity in large-scale classification based on prediction scores. Such a measure has not been formally proposed in the literature. We show how visualizing the class similarity matrix can reveal hierarchical structures and relationships that govern the classes. Through examples with various classifiers, we demonstrate how such structures can help in analyzing the classification behavior and in inferring potential corner cases. The source code for one example is available as a notebook at https://github.com/bilalsal/blocks
