Bilal Alsallakh

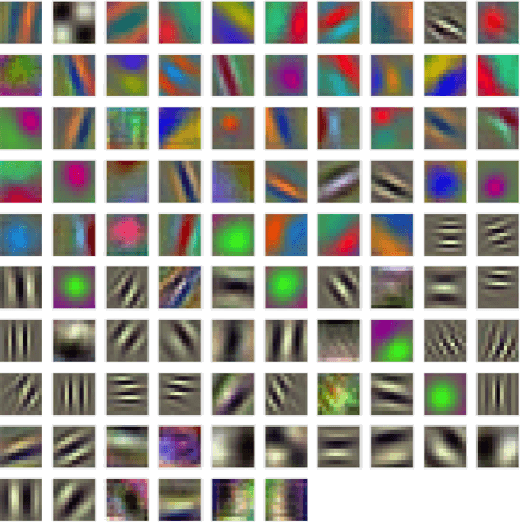

On Symmetries in Convolutional Weights

Mar 24, 2025

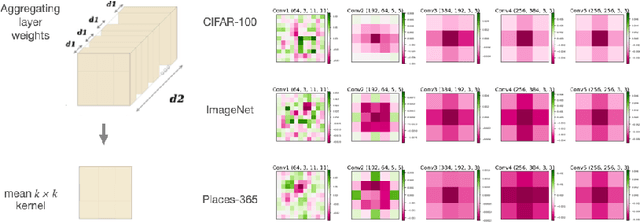

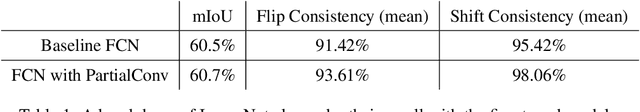

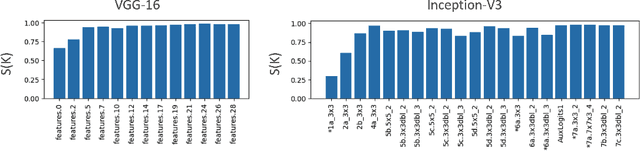

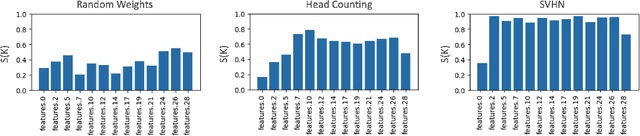

Abstract: We explore the symmetry of the mean k x k weight kernel in each layer of various convolutional neural networks. Unlike individual neurons, the mean kernels in internal layers tend to be symmetric about their centers instead of favoring specific directions. We investigate why this symmetry emerges in various datasets and models, and how it is impacted by certain architectural choices. We show how symmetry correlates with desirable properties such as shift and flip consistency, and might constitute an inherent inductive bias in convolutional neural networks.
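To make the idea of a "mean kernel" concrete, here is a minimal sketch in plain Python. It averages all k x k kernels of one layer and scores how far the result is from being point-symmetric about its center. The abstract does not state which symmetry measure the paper uses, so the `asymmetry` score and the toy weights below are assumptions made for illustration only.

```python
def mean_kernel(weights):
    """Average a layer's kernels over output and input channels.

    `weights` is a nested list shaped [out_channels][in_channels][k][k].
    """
    k = len(weights[0][0])
    total = [[0.0] * k for _ in range(k)]
    count = 0
    for out_channel in weights:
        for kernel in out_channel:
            count += 1
            for i in range(k):
                for j in range(k):
                    total[i][j] += kernel[i][j]
    return [[value / count for value in row] for row in total]


def asymmetry(kernel):
    """Hypothetical score: 0.0 means the kernel equals its 180-degree rotation."""
    k = len(kernel)
    diff = sum(
        abs(kernel[i][j] - kernel[k - 1 - i][k - 1 - j])
        for i in range(k)
        for j in range(k)
    )
    scale = sum(abs(value) for row in kernel for value in row)
    return diff / scale if scale else 0.0


# Toy layer: two directional kernels that respond to opposite sides.
right = [[0, 2, 2], [0, 4, 4], [0, 2, 2]]
left = [[2, 2, 0], [4, 4, 0], [2, 2, 0]]
weights = [[right], [left]]  # 2 output channels, 1 input channel

layer_mean = mean_kernel(weights)
print(asymmetry(right))       # an individual kernel is strongly directional
print(asymmetry(layer_mean))  # the mean kernel is symmetric about its center
```

In this toy layer each kernel favors one direction, yet the two preferences cancel in the average, which is the kind of effect the abstract describes for internal layers.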

Bias Mitigation Framework for Intersectional Subgroups in Neural Networks

Dec 26, 2022

Abstract: We propose a fairness-aware learning framework that mitigates intersectional subgroup bias associated with protected attributes. Prior research has primarily focused on mitigating one kind of bias by incorporating complex fairness-driven constraints into optimization objectives or designing additional layers that focus on specific protected attributes. We introduce a simple and generic bias mitigation approach that prevents models from learning relationships between protected attributes and the output variable by reducing the mutual information between them. We demonstrate that our approach is effective in reducing bias with little or no drop in accuracy. We also show that the models trained with our learning framework become causally fair and insensitive to the values of protected attributes. Finally, we validate our approach by studying feature interactions between protected and non-protected attributes. We demonstrate that these interactions are significantly reduced when applying our bias mitigation.

Prescriptive and Descriptive Approaches to Machine-Learning Transparency

Apr 27, 2022

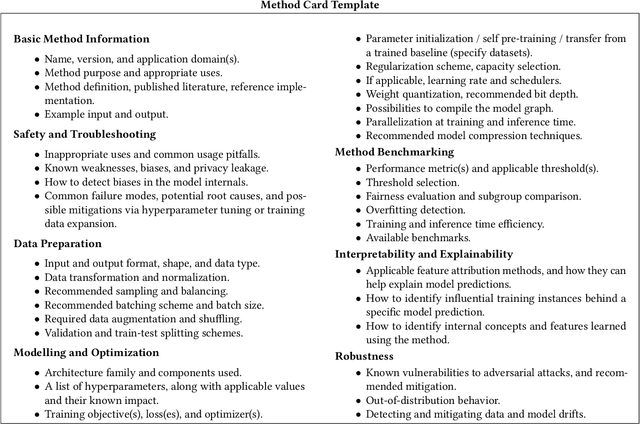

Abstract: Specialized documentation techniques have been developed to communicate key facts about machine-learning (ML) systems and the datasets and models they rely on. Techniques such as Datasheets, FactSheets, and Model Cards have taken a mainly descriptive approach, providing various details about the system components. While this information is essential for product developers and external experts to assess whether the ML system meets their requirements, other stakeholders might find it less actionable. In particular, ML engineers need guidance on how to mitigate potential shortcomings in order to fix bugs or improve the system's performance. We survey approaches that aim to provide such guidance in a prescriptive way. We further propose a preliminary approach, called Method Cards, which aims to increase the transparency and reproducibility of ML systems by providing prescriptive documentation of commonly used ML methods and techniques. We showcase our proposal with an example in small object detection, and demonstrate how Method Cards can communicate key considerations for model developers. We further highlight avenues for improving the user experience of ML engineers based on Method Cards.

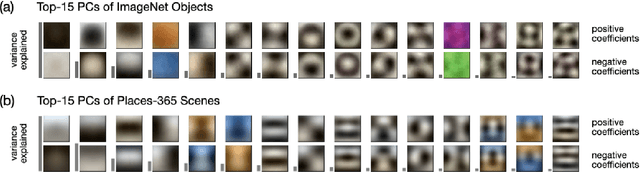

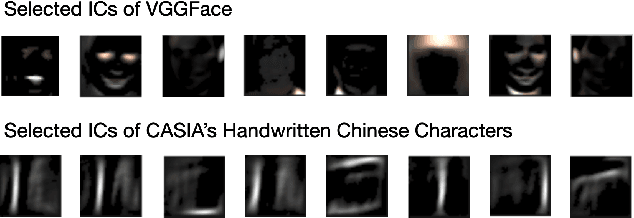

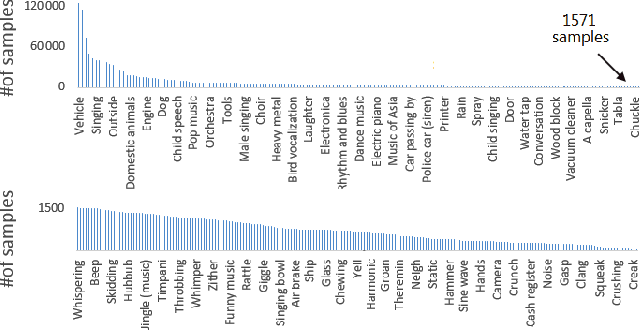

A Tour of Visualization Techniques for Computer Vision Datasets

Apr 19, 2022

Abstract:We survey a number of data visualization techniques for analyzing Computer Vision (CV) datasets. These techniques help us understand properties and latent patterns in such data, by applying dataset-level analysis. We present various examples of how such analysis helps predict the potential impact of the dataset properties on CV models and informs appropriate mitigation of their shortcomings. Finally, we explore avenues for further visualization techniques of different modalities of CV datasets as well as ones that are tailored to support specific CV tasks and analysis needs.

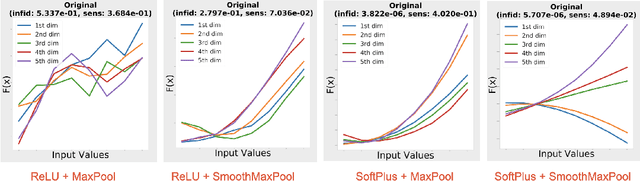

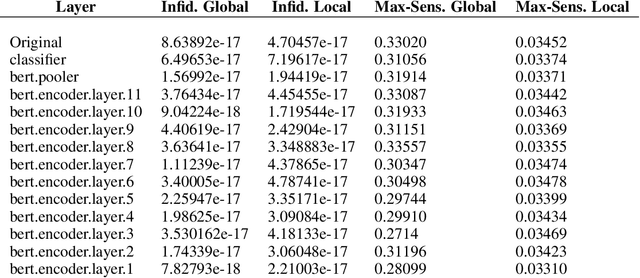

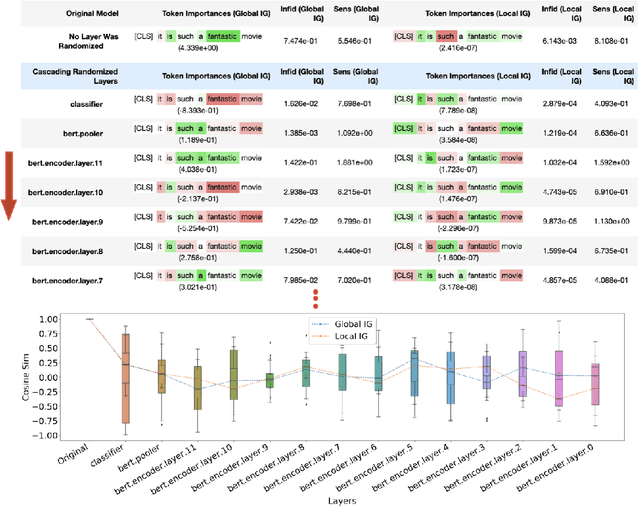

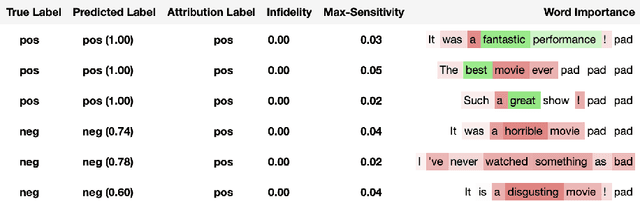

Investigating sanity checks for saliency maps with image and text classification

Jun 08, 2021

Abstract: Saliency maps have been shown to be both useful and misleading for explaining model predictions, especially in the context of images. In this paper, we perform sanity checks for the text modality and show that the conclusions made for images do not directly transfer to text. We also analyze the effects of the input multiplier in certain saliency maps using similarity scores, max-sensitivity and infidelity evaluation metrics. Our observations reveal that the input multiplier carries the input's structural patterns in explanation maps, thus leading to similar results regardless of the choice of model parameters. We also show that the smoothness of a Neural Network (NN) function can affect the quality of saliency-based explanations. Our investigations reveal that replacing ReLUs with Softplus and MaxPool with smoother variants such as LogSumExp (LSE) can lead to explanations that are more reliable based on the infidelity evaluation metric.
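The smoother replacements mentioned in the abstract can be sketched with the standard textbook definitions of Softplus and LogSumExp. The `beta` and `temperature` parameters and the sample pooling window are assumptions for illustration; the abstract does not say which exact variants or settings the paper uses.

```python
import math


def relu(x):
    return max(0.0, x)


def softplus(x, beta=1.0):
    """Smooth approximation of ReLU: log(1 + exp(beta * x)) / beta."""
    z = beta * x
    # Numerically stable form: max(z, 0) + log1p(exp(-|z|)).
    return (max(z, 0.0) + math.log1p(math.exp(-abs(z)))) / beta


def logsumexp_pool(values, temperature=1.0):
    """Smooth stand-in for max pooling over one window of activations."""
    m = max(values)  # subtract the max before exponentiating for stability
    total = sum(math.exp((v - m) / temperature) for v in values)
    return m + temperature * math.log(total)


window = [0.1, 0.5, 0.4, -0.2]  # hypothetical 2 x 2 pooling window, flattened
print(max(window), logsumexp_pool(window), logsumexp_pool(window, 0.01))
print(relu(-1.0), softplus(-1.0), softplus(-1.0, beta=10.0))
```

Both functions are differentiable everywhere, unlike ReLU and max. Raising `beta` or lowering `temperature` moves them closer to the hard versions, so these knobs trade smoothness against fidelity to the original operation.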

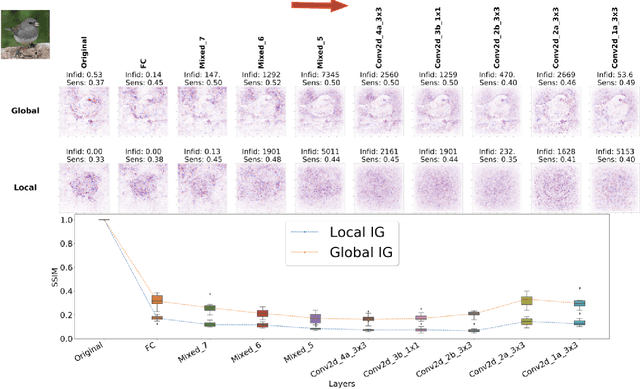

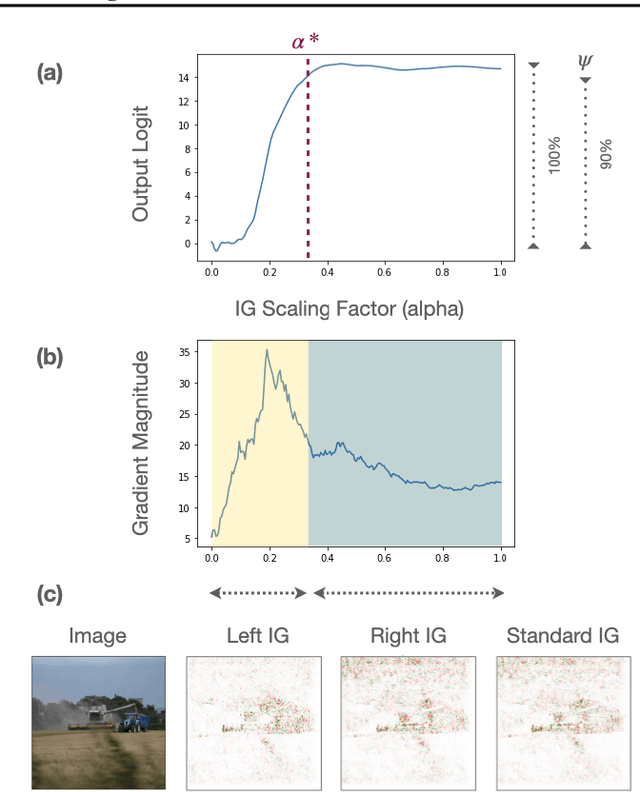

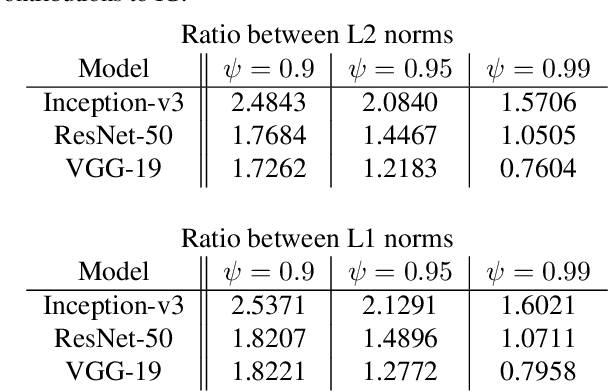

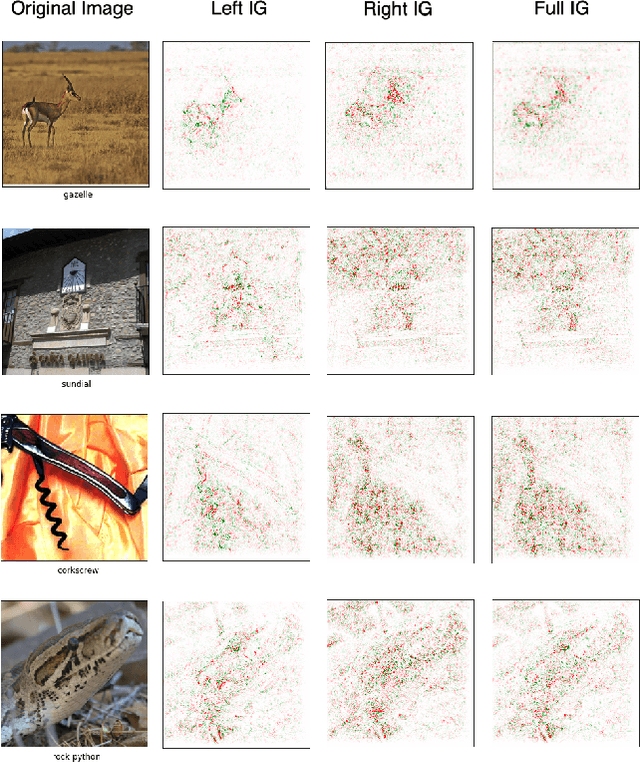

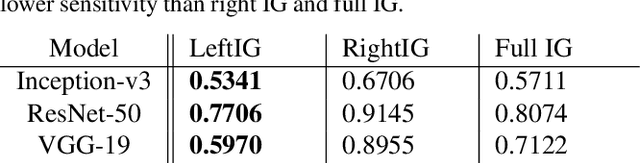

Investigating Saturation Effects in Integrated Gradients

Oct 23, 2020

Abstract: Integrated Gradients has become a popular method for post-hoc model interpretability. Despite its popularity, the composition and relative impact of different regions of the integral path are not well understood. We explore these effects and find that gradients in saturated regions of this path, where model output changes minimally, contribute disproportionately to the computed attribution. We propose a variant of Integrated Gradients which primarily captures gradients in unsaturated regions and evaluate this method on ImageNet classification networks. We find that this attribution technique shows higher model faithfulness and lower sensitivity to noise compared with standard Integrated Gradients. A notebook illustrating our computations and results is available at https://github.com/vivekmig/captum-1/tree/ExpandedIG.
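As background for the abstract above, standard Integrated Gradients attributes to feature i the quantity (x_i - x'_i) times the average gradient along the straight path from a baseline x' to the input x. The sketch below approximates that integral with a midpoint Riemann sum on a toy sigmoid model. The model, its weights, the zero baseline, and the step count are all assumptions chosen for illustration, and the code implements only the standard method, not the variant the paper proposes.

```python
import math

W = (4.0, 2.0)  # hypothetical weights of a one-layer toy model


def model(x):
    z = sum(w * xi for w, xi in zip(W, x))
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid saturates for large z


def model_grad(x):
    y = model(x)
    return [y * (1.0 - y) * w for w in W]


def integrated_gradients(x, baseline, steps=200):
    """Midpoint Riemann approximation of standard Integrated Gradients."""
    grad_sum = [0.0] * len(x)
    for s in range(steps):
        alpha = (s + 0.5) / steps
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        for i, g in enumerate(model_grad(point)):
            grad_sum[i] += g
    return [(xi - bi) * g / steps for xi, bi, g in zip(x, baseline, grad_sum)]


x = [2.0, 1.0]
baseline = [0.0, 0.0]
attributions = integrated_gradients(x, baseline)

# Completeness: attributions sum (approximately) to the change in output.
output_change = model(x) - model(baseline)
print(sum(attributions), output_change)

# Saturation: share of the output change reached halfway along the path.
halfway = [bi + 0.5 * (xi - bi) for xi, bi in zip(x, baseline)]
first_half_share = (model(halfway) - model(baseline)) / output_change
print(first_half_share)
```

In this toy model well over 90% of the output change happens in the first half of the path, so the second half is a saturated region in the sense of the abstract. Real networks behave less cleanly, which is what motivates studying how much such regions contribute to the attribution.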

Mind the Pad -- CNNs can Develop Blind Spots

Oct 05, 2020

Abstract: We show how feature maps in convolutional networks are susceptible to spatial bias. Due to a combination of architectural choices, the activation at certain locations is systematically elevated or weakened. The major source of this bias is the padding mechanism. Depending on several aspects of convolution arithmetic, this mechanism can apply the padding unevenly, leading to asymmetries in the learned weights. We demonstrate how such bias can be detrimental to certain tasks such as small object detection: the activation is suppressed if the stimulus lies in the impacted area, leading to blind spots and misdetection. We propose solutions to mitigate spatial bias and demonstrate how they can improve model accuracy.
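One way the convolution arithmetic can lead to uneven padding is sketched below for a single spatial axis. The sketch assumes the same padding `p` is requested on both sides, dilation 1, and the usual floor-based output size formula; the helper names are hypothetical and do not come from the paper.

```python
def conv_output_size(n, k, s, p):
    """Output length for input length n, kernel k, stride s, padding p."""
    return (n + 2 * p - k) // s + 1


def effective_padding(n, k, s, p):
    """Return (leading, trailing) padding that some kernel window actually covers.

    Windows start at 0, s, 2s, ... on the padded axis, so any leftover
    positions at the trailing end are never read by the convolution.
    """
    unused = (n + 2 * p - k) % s
    return p, max(p - unused, 0)


for n in (224, 225):
    print(n, conv_output_size(n, 3, 2, 1), effective_padding(n, 3, 2, 1))
```

With a 3 x 3 kernel, stride 2, and padding 1, an input of length 224 consumes the padding only on the leading side, while an input of length 225 consumes it on both sides. The first case is an instance of padding being applied unevenly, as the abstract describes.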

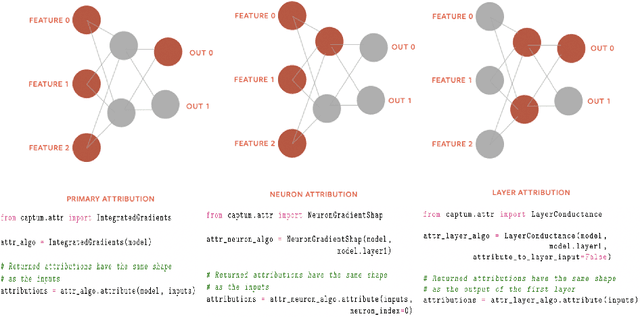

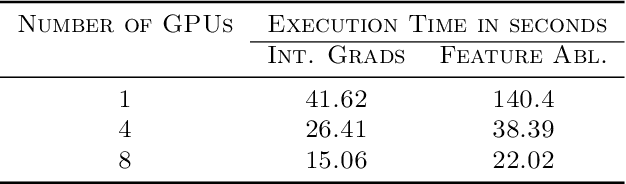

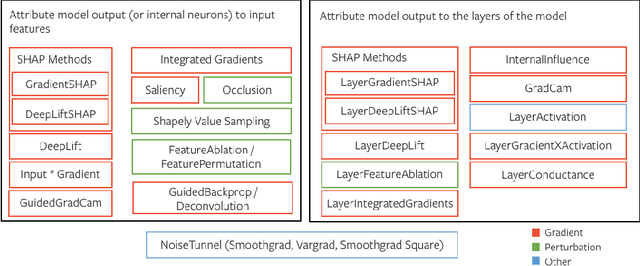

Captum: A unified and generic model interpretability library for PyTorch

Sep 16, 2020

Abstract: In this paper we introduce a novel, unified, open-source model interpretability library for PyTorch [12]. The library contains generic implementations of a number of gradient and perturbation-based attribution algorithms, also known as feature, neuron and layer importance algorithms, as well as a set of evaluation metrics for these algorithms. It can be used for both classification and non-classification models, including graph-structured models built on Neural Networks (NN). In this paper we give a high-level overview of supported attribution algorithms and show how to perform memory-efficient and scalable computations. We emphasize that the three main characteristics of the library are multimodality, extensibility and ease of use. Multimodality supports different input modalities such as image, text, audio or video. Extensibility allows adding new algorithms and features. The library is also designed for easy understanding and use. In addition, we introduce an interactive visualization tool called Captum Insights that is built on top of the Captum library and allows sample-based model debugging and visualization using feature importance metrics.

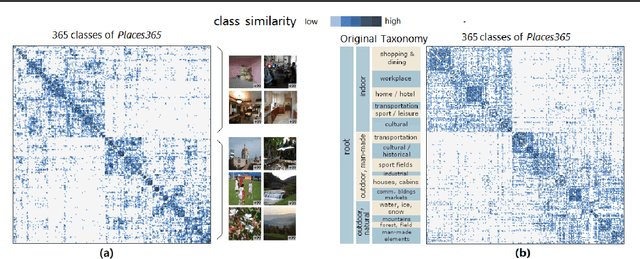

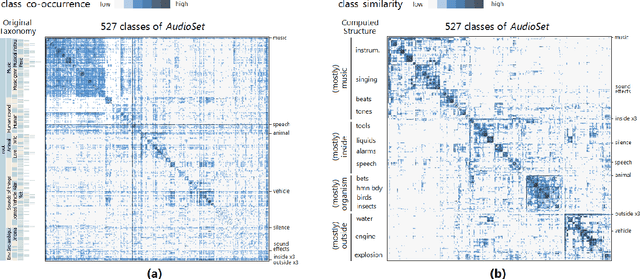

Visualizing Classification Structure in Deep Neural Networks

Jul 12, 2020

Abstract: We propose a measure to compute class similarity in large-scale classification based on prediction scores. Such a measure has not been formally proposed in the literature. We show how visualizing the class similarity matrix can reveal hierarchical structures and relationships that govern the classes. Through examples with various classifiers, we demonstrate how such structures can help in analyzing the classification behavior and in inferring potential corner cases. The source code for one example is available as a notebook at https://github.com/bilalsal/blocks

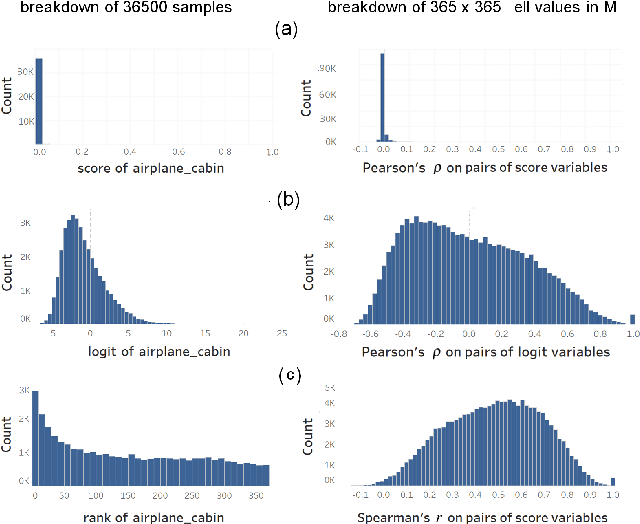

Prediction Scores as a Window into Classifier Behavior

Nov 18, 2017

Abstract: Most multi-class classifiers make their prediction for a test sample by scoring the classes and selecting the one with the highest score. Analyzing these prediction scores is useful to understand the classifier behavior and to assess its reliability. We present an interactive visualization that facilitates per-class analysis of these scores. Our system, called Classilist, enables relating these scores to the classification correctness and to the underlying samples and their features. We illustrate how such analysis reveals varying behavior of different classifiers. Classilist is available for use online, along with source code, video tutorials, and plugins for R, RapidMiner, and KNIME at https://katehara.github.io/classilist-site/.
