Zhiwei Deng

Dynamic Diffusion Schrödinger Bridge in Astrophysical Observational Inversions

Jun 11, 2025

Abstract:We study Diffusion Schrödinger Bridge (DSB) models in the context of dynamical astrophysical systems, specifically tackling observational inverse prediction tasks within Giant Molecular Clouds (GMCs) for star formation. We introduce the Astro-DSB model, a variant of DSB with the pairwise domain assumption tailored for astrophysical dynamics. By investigating its learning process and prediction performance in both physically simulated data and in real observations (the Taurus B213 data), we present two main takeaways. First, from the astrophysical perspective, our proposed paired DSB method improves interpretability, learning efficiency, and prediction performance over conventional astrostatistical and other machine learning methods. Second, from the generative modeling perspective, probabilistic generative modeling reveals improvements over discriminative pixel-to-pixel modeling in Out-Of-Distribution (OOD) testing cases of physical simulations with unseen initial conditions and different dominant physical processes. Our study expands research into diffusion models beyond the traditional visual synthesis application and provides evidence of the models' learning abilities beyond pure data statistics, paving a path for future physics-aware generative models which can align dynamics between machine learning and real (astro)physical systems.

DD-Ranking: Rethinking the Evaluation of Dataset Distillation

May 19, 2025

Abstract:In recent years, dataset distillation has provided a reliable solution for data compression, where models trained on the resulting smaller synthetic datasets achieve performance comparable to those trained on the original datasets. To further improve the performance of synthetic datasets, various training pipelines and optimization objectives have been proposed, greatly advancing the field of dataset distillation. Recent decoupled dataset distillation methods introduce soft labels and stronger data augmentation during the post-evaluation phase and scale dataset distillation up to larger datasets (e.g., ImageNet-1K). However, this raises a question: Is accuracy still a reliable metric to fairly evaluate dataset distillation methods? Our empirical findings suggest that the performance improvements of these methods often stem from additional techniques rather than the inherent quality of the images themselves, with even randomly sampled images achieving superior results. Such misaligned evaluation settings severely hinder the development of DD. Therefore, we propose DD-Ranking, a unified evaluation framework, along with new general evaluation metrics to uncover the true performance improvements achieved by different methods. By refocusing on the actual information enhancement of distilled datasets, DD-Ranking provides a more comprehensive and fair evaluation standard for future research advancements.

ICONS: Influence Consensus for Vision-Language Data Selection

Jan 06, 2025

Abstract:Visual Instruction Tuning typically requires a large amount of vision-language training data. This data often contains redundant information that increases computational costs without proportional performance gains. In this work, we introduce ICONS, a gradient-driven Influence CONsensus approach for vision-language data Selection that selects a compact training dataset for efficient multi-task training. The key element of our approach is cross-task influence consensus, which uses majority voting across task-specific influence matrices to identify samples that are consistently valuable across multiple tasks, allowing us to effectively prioritize data that optimizes for overall performance. Experiments show that models trained on our selected data (20% of LLaVA-665K) achieve 98.6% of the relative performance obtained using the full dataset. Additionally, we release this subset, LLaVA-ICONS-133K, a compact yet highly informative subset of LLaVA-665K visual instruction tuning data, preserving high-impact training data for efficient vision-language model development.
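The cross-task majority voting described in this abstract can be illustrated with a small sketch. This is not the authors' implementation: it assumes each task's influence matrix has already been reduced to one score per training sample, and the names `consensus_select`, `vote_fraction`, and `budget` are hypothetical. A sample earns one vote from each task in which it ranks in the top fraction, and the samples with the most votes are kept.

```python
def consensus_select(task_scores, vote_fraction, budget):
    """Pick `budget` samples by majority voting across tasks.

    task_scores: dict mapping task name -> list of per-sample influence
                 scores (all lists have the same length). Assumed input;
                 the paper works with task-specific influence matrices.
    vote_fraction: fraction of samples that receive a vote from each task.
    """
    n = len(next(iter(task_scores.values())))
    votes = [0] * n
    per_task_k = max(1, int(n * vote_fraction))
    for scores in task_scores.values():
        # Indices of the highest-scoring samples for this task.
        top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:per_task_k]
        for i in top:
            votes[i] += 1
    # Rank by vote count (descending); ties fall back to the sample index.
    ranked = sorted(range(n), key=lambda i: (-votes[i], i))
    return ranked[:budget], votes


if __name__ == "__main__":
    scores = {
        "vqa": [0.9, 0.1, 0.8, 0.2],
        "ocr": [0.7, 0.9, 0.1, 0.2],
        "caption": [0.8, 0.2, 0.1, 0.9],
    }
    selected, votes = consensus_select(scores, vote_fraction=0.5, budget=2)
    print(selected, votes)
```

In the toy data, sample 0 is in the top half for every task, so it collects all three votes, while each other sample is favored by only one task.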

Influential Language Data Selection via Gradient Trajectory Pursuit

Oct 22, 2024

Abstract:Curating a desirable dataset for training has been the core of building highly capable large language models (Touvron et al., 2023; Achiam et al., 2023; Team et al., 2024). Gradient influence scores (Pruthi et al., 2020; Xia et al., 2024) are shown to be correlated with model performance and are commonly used as the criterion for data selection. However, existing methods are built upon either individual sample rankings or an inefficient matching process, leading to suboptimal performance or scaling issues. In this paper, we propose Gradient Trajectory Pursuit (GTP), an algorithm that performs pursuit of gradient trajectories via jointly selecting data points under an L0-norm regularized objective. The proposed algorithm highlights: (1) joint selection instead of independent top-k selection, which automatically de-duplicates samples; (2) higher efficiency with compressive sampling processes, which can be further sped up using a distributed framework. In the experiments, we demonstrate the algorithm in both in-domain and target-domain selection benchmarks and show that it outperforms top-k selection and competitive algorithms consistently; for example, our algorithm chooses as little as 0.5% of the data to achieve full performance on the targeted instruction tuning tasks.
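To see why joint selection de-duplicates where independent top-k does not, here is a sketch using plain greedy matching pursuit as a stand-in. It is not the GTP algorithm itself: it uses a single target gradient rather than a trajectory, omits the compressive sampling step, and the function name `matching_pursuit_select` is hypothetical. Each step picks the sample gradient best aligned with the part of the target that is still unexplained, so an exact duplicate of an already chosen sample adds nothing.

```python
def matching_pursuit_select(sample_grads, target_grad, k):
    """Greedily choose up to k samples whose gradients explain target_grad."""
    residual = list(target_grad)
    selected = []
    for _ in range(k):
        best, best_coef, best_score = None, 0.0, 0.0
        for i, g in enumerate(sample_grads):
            if i in selected:
                continue
            norm_sq = sum(x * x for x in g)
            if norm_sq == 0.0:
                continue
            dot = sum(x * r for x, r in zip(g, residual))
            # Alignment with the residual, normalized by gradient length.
            score = dot / norm_sq ** 0.5
            if score > best_score:
                best, best_coef, best_score = i, dot / norm_sq, score
        if best is None:
            break  # nothing left is positively aligned with the residual
        selected.append(best)
        # Remove the component of the residual explained by the chosen sample.
        residual = [r - best_coef * x
                    for r, x in zip(residual, sample_grads[best])]
    return selected


if __name__ == "__main__":
    grads = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # samples 0 and 1 are duplicates
    print(matching_pursuit_select(grads, target_grad=[1.0, 1.0], k=2))
```

With these toy gradients all three samples score equally against the target, so independent top-2 ranking could return the duplicate pair, whereas the pursuit skips sample 1 once sample 0 has explained that direction.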

A Label is Worth a Thousand Images in Dataset Distillation

Jun 15, 2024

Abstract:Data $\textit{quality}$ is a crucial factor in the performance of machine learning models, a principle that dataset distillation methods exploit by compressing training datasets into much smaller counterparts that maintain similar downstream performance. Understanding how and why data distillation methods work is vital not only for improving these methods but also for revealing fundamental characteristics of "good" training data. However, a major challenge in achieving this goal is the observation that distillation approaches, which rely on sophisticated but mostly disparate methods to generate synthetic data, have little in common with each other. In this work, we highlight a largely overlooked aspect common to most of these methods: the use of soft (probabilistic) labels. Through a series of ablation experiments, we study the role of soft labels in depth. Our results reveal that the main factor explaining the performance of state-of-the-art distillation methods is not the specific techniques used to generate synthetic data but rather the use of soft labels. Furthermore, we demonstrate that not all soft labels are created equal; they must contain $\textit{structured information}$ to be beneficial. We also provide empirical scaling laws that characterize the effectiveness of soft labels as a function of images-per-class in the distilled dataset and establish an empirical Pareto frontier for data-efficient learning. Combined, our findings challenge conventional wisdom in dataset distillation, underscore the importance of soft labels in learning, and suggest new directions for improving distillation methods. Code for all experiments is available at https://github.com/sunnytqin/no-distillation.

What is Dataset Distillation Learning?

Jun 06, 2024

Abstract:Dataset distillation has emerged as a strategy to overcome the hurdles associated with large datasets by learning a compact set of synthetic data that retains essential information from the original dataset. While distilled data can be used to train high-performing models, little is understood about how the information is stored. In this study, we posit and answer three questions about the behavior, representativeness, and point-wise information content of distilled data. We reveal that distilled data cannot serve as a substitute for real data during training outside the standard evaluation setting for dataset distillation. Additionally, the distillation process retains high task performance by compressing information related to the early training dynamics of real models. Finally, we provide a framework for interpreting distilled data and reveal that individual distilled data points contain meaningful semantic information. This investigation sheds light on the intricate nature of distilled data, providing a better understanding of how they can be effectively utilized.

Devil's Advocate: Anticipatory Reflection for LLM Agents

May 29, 2024

Abstract:In this work, we introduce a novel approach that equips LLM agents with introspection, enhancing consistency and adaptability in solving complex tasks. Our approach prompts LLM agents to decompose a given task into manageable subtasks (i.e., to make a plan), and to continuously introspect upon the suitability and results of their actions. We implement a three-fold introspective intervention: 1) anticipatory reflection on potential failures and alternative remedy before action execution, 2) post-action alignment with subtask objectives and backtracking with remedy to ensure utmost effort in plan execution, and 3) comprehensive review upon plan completion for future strategy refinement. By deploying and experimenting with this methodology - a zero-shot approach - within WebArena for practical tasks in web environments, our agent demonstrates superior performance over existing zero-shot methods. The experimental results suggest that our introspection-driven approach not only enhances the agent's ability to navigate unanticipated challenges through a robust mechanism of plan execution, but also improves efficiency by reducing the number of trials and plan revisions needed to achieve a task.

TauAD: MRI-free Tau Anomaly Detection in PET Imaging via Conditioned Diffusion Models

May 21, 2024

Abstract:The emergence of tau PET imaging over the last decade has enabled Alzheimer's disease (AD) researchers to examine tau pathology in vivo and more effectively characterize the disease trajectories of AD. Current tau PET analysis methods, however, typically perform inferences on large cortical ROIs and are limited in the detection of localized tau pathology that varies across subjects. Furthermore, a high-resolution MRI is required to carry out conventional tau PET analysis, which is not commonly acquired in clinical practice and may not be acquired for many elderly patients with dementia due to strong motion artifacts, claustrophobia, or certain metal implants. In this work, we propose a novel conditional diffusion model to perform MRI-free anomaly detection from tau PET imaging data. By including individualized conditions and two complementary loss maps from pseudo-healthy and pseudo-unhealthy reconstructions, our model computes an anomaly map across the entire brain area that allows simply training a support vector machine (SVM) for classifying disease severity. We train our model on ADNI subjects (n=534) and evaluate its performance on a separate dataset from the preclinical subjects of the A4 clinical trial (n=447). We demonstrate that our method outperforms baseline generative models and the conventional Z-score-based method in anomaly localization without mis-detecting off-target bindings in sub-cortical and out-of-brain areas. By classifying the A4 subjects according to their anomaly map using the SVM trained on ADNI data, we show that our method can successfully group preclinical subjects with significantly different cognitive functions, which further demonstrates the effectiveness of our method in capturing biologically relevant anomalies in tau PET imaging.

Distributional Dataset Distillation with Subtask Decomposition

Mar 01, 2024

Abstract:What does a neural network learn when training from a task-specific dataset? Synthesizing this knowledge is the central idea behind Dataset Distillation, which recent work has shown can be used to compress large datasets into a small set of input-label pairs ($\textit{prototypes}$) that capture essential aspects of the original dataset. In this paper, we make the key observation that existing methods distilling into explicit prototypes are very often suboptimal, incurring unexpected storage costs from distilled labels. In response, we propose $\textit{Distributional Dataset Distillation}$ (D3), which encodes the data using minimal sufficient per-class statistics paired with a decoder, distilling the dataset into a compact distributional representation that is more memory-efficient than prototype-based methods. To scale up the process of learning these representations, we propose $\textit{Federated distillation}$, which decomposes the dataset into subsets, distills them in parallel using sub-task experts and then re-aggregates them. We thoroughly evaluate our algorithm on a three-dimensional metric and show that our method achieves state-of-the-art results on TinyImageNet and ImageNet-1K. Specifically, we outperform the prior art by $6.9\%$ on ImageNet-1K under the storage budget of 2 images per class.

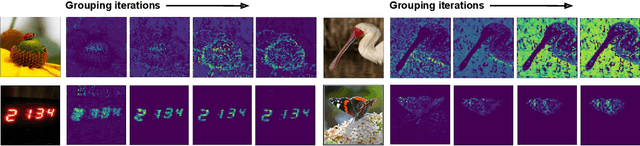

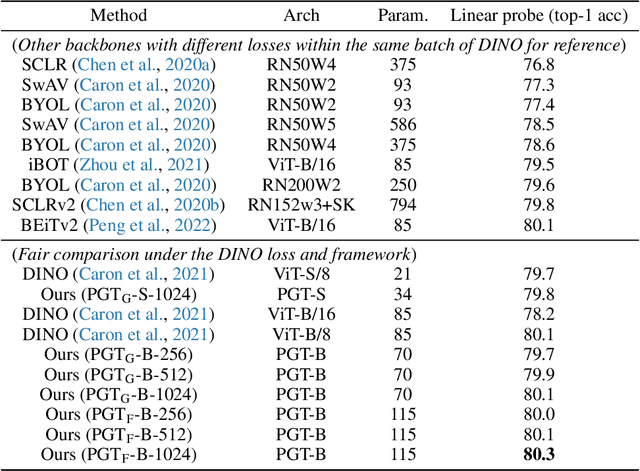

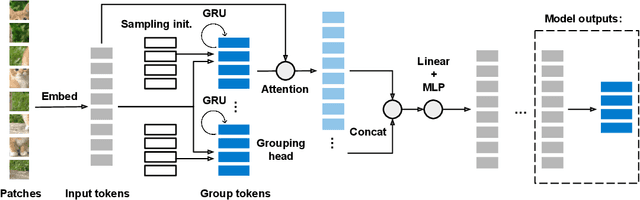

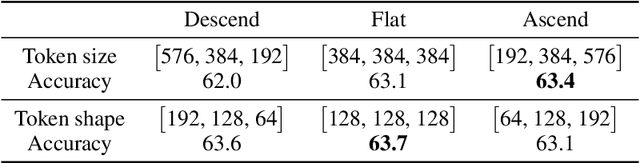

Perceptual Group Tokenizer: Building Perception with Iterative Grouping

Nov 30, 2023

Abstract:The human visual recognition system shows an astonishing capability for compressing visual information into a set of tokens containing rich representations without label supervision. One critical driving principle behind it is perceptual grouping. Despite being widely used in computer vision in the early 2010s, it remains a mystery whether perceptual grouping can be leveraged to derive a neural visual recognition backbone that generates equally powerful representations. In this paper, we propose the Perceptual Group Tokenizer, a model that entirely relies on grouping operations to extract visual features and perform self-supervised representation learning, where a series of grouping operations are used to iteratively hypothesize the context for pixels or superpixels to refine feature representations. We show that the proposed model can achieve competitive performance compared to state-of-the-art vision architectures, and inherits desirable properties including adaptive computation without re-training, and interpretability. Specifically, Perceptual Group Tokenizer achieves 80.3% on the ImageNet-1K self-supervised learning benchmark with linear probe evaluation, marking new progress under this paradigm.
