Zhiheng Zhang

MCVI-SANet: A lightweight semi-supervised model for LAI and SPAD estimation of winter wheat under vegetation index saturation

Dec 20, 2025

Abstract:Vegetation index (VI) saturation during the dense canopy stage and limited ground-truth annotations of winter wheat constrain accurate estimation of LAI and SPAD. Existing VI-based and texture-driven machine learning methods exhibit limited feature expressiveness. In addition, deep learning baselines suffer from domain gaps and high data demands, which restrict their generalization. Therefore, this study proposes the Multi-Channel Vegetation Indices Saturation Aware Net (MCVI-SANet), a lightweight semi-supervised vision model. The model incorporates a newly designed Vegetation Index Saturation-Aware Block (VI-SABlock) for adaptive channel-spatial feature enhancement. It also integrates a VICReg-based semi-supervised strategy to further improve generalization. Datasets were partitioned using a vegetation height-informed strategy to maintain representativeness across growth stages. Experiments over 10 repeated runs demonstrate that MCVI-SANet achieves state-of-the-art accuracy. The model attains an average R² of 0.8123 and RMSE of 0.4796 for LAI, and an average R² of 0.6846 and RMSE of 2.4222 for SPAD. This performance surpasses the best-performing baselines, with improvements of 8.95% in average LAI R² and 8.17% in average SPAD R². Moreover, MCVI-SANet maintains high inference speed with only 0.10M parameters. Overall, the integration of semi-supervised learning with agronomic priors provides a promising approach for enhancing remote sensing-based precision agriculture.

On Path to Multimodal Historical Reasoning: HistBench and HistAgent

May 26, 2025

Abstract:Recent advances in large language models (LLMs) have led to remarkable progress across domains, yet their capabilities in the humanities, particularly history, remain underexplored. Historical reasoning poses unique challenges for AI, involving multimodal source interpretation, temporal inference, and cross-linguistic analysis. While general-purpose agents perform well on many existing benchmarks, they lack the domain-specific expertise required to engage with historical materials and questions. To address this gap, we introduce HistBench, a new benchmark of 414 high-quality questions designed to evaluate AI's capacity for historical reasoning and authored by more than 40 expert contributors. The tasks span a wide range of historical problems, from factual retrieval based on primary sources to interpretive analysis of manuscripts and images, to interdisciplinary challenges involving archaeology, linguistics, or cultural history. Furthermore, the benchmark dataset spans 29 ancient and modern languages and covers a wide range of historical periods and world regions. Having observed the poor performance of LLMs and other agents on HistBench, we further present HistAgent, a history-specific agent equipped with carefully designed tools for OCR, translation, archival search, and image understanding in history. On HistBench, HistAgent based on GPT-4o achieves an accuracy of 27.54% pass@1 and 36.47% pass@2, significantly outperforming LLMs with online search and generalist agents, including GPT-4o (18.60%), DeepSeek-R1 (14.49%), and Open Deep Research-smolagents (20.29% pass@1 and 25.12% pass@2). These results highlight the limitations of existing LLMs and generalist agents and demonstrate the advantages of HistAgent for historical reasoning.

Learning Transformation-Isomorphic Latent Space for Accurate Hand Pose Estimation

Feb 18, 2025

Abstract:Vision-based regression tasks, such as hand pose estimation, have achieved higher accuracy and faster convergence through representation learning. However, existing representation learning methods often encounter the following issues: the high semantic level of features extracted from images is inadequate for regressing low-level information, and the extracted features include task-irrelevant information, reducing their compactness and interfering with regression tasks. To address these challenges, we propose TI-Net, a highly versatile visual Network backbone designed to construct a Transformation Isomorphic latent space. Specifically, we employ linear transformations to model geometric transformations in the latent space and ensure that TI-Net aligns them with those in the image space. This ensures that the latent features capture compact, low-level information beneficial for pose estimation tasks. We evaluated TI-Net on the hand pose estimation task to demonstrate the network's superiority. On the DexYCB dataset, TI-Net achieved a 10% improvement in the PA-MPJPE metric compared to specialized state-of-the-art (SOTA) hand pose estimation methods. Our code will be released in the future.

Online Experimental Design With Estimation-Regret Trade-off Under Network Interference

Dec 04, 2024

Abstract:Network interference has garnered significant interest in the field of causal inference. It reflects diverse sociological behaviors, wherein the treatment assigned to one individual within a network may influence the outcome of other individuals, such as their neighbors. To estimate the causal effect, one classical way is to randomly assign experimental candidates into different groups and compare their differences. However, in the context of sequential experiments, such treatment assignment may result in a large regret. In this paper, we develop a unified interference-based online experimental design framework. Compared to existing literature, we expand the definition of arm space by leveraging the statistical concept of exposure mapping. Importantly, we establish the Pareto-optimal trade-off between the estimation accuracy and regret with respect to both time period and arm space, which remains superior to the baseline even in the absence of network interference. We further propose an algorithmic implementation and model generalization.

DARK: Denoising, Amplification, Restoration Kit

May 21, 2024

Abstract:This paper introduces a novel lightweight computational framework for enhancing images under low-light conditions, utilizing advanced machine learning and convolutional neural networks (CNNs). Traditional enhancement techniques often fail to adequately address issues like noise, color distortion, and detail loss in challenging lighting environments. Our approach leverages insights from the Retinex theory and recent advances in image restoration networks to develop a streamlined model that efficiently processes illumination components and integrates context-sensitive enhancements through optimized convolutional blocks. This results in significantly improved image clarity and color fidelity, while avoiding over-enhancement and unnatural color shifts. Crucially, our model is designed to be lightweight, ensuring low computational demand and suitability for real-time applications on standard consumer hardware. Performance evaluations confirm that our model not only surpasses existing methods in enhancing low-light images but also maintains a minimal computational footprint.

Unpaired Multi-domain Attribute Translation of 3D Facial Shapes with a Square and Symmetric Geometric Map

Aug 25, 2023

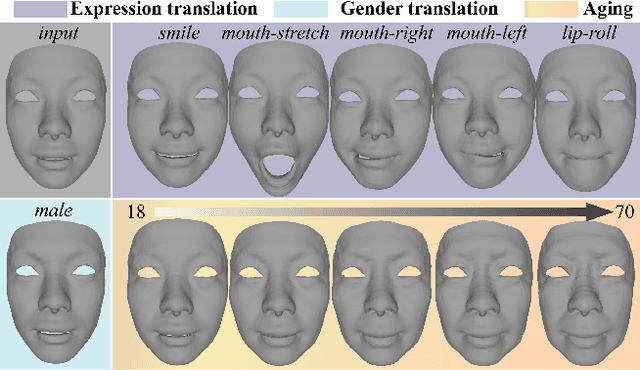

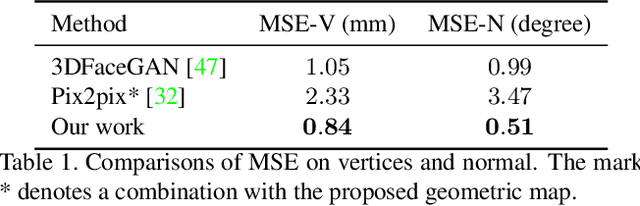

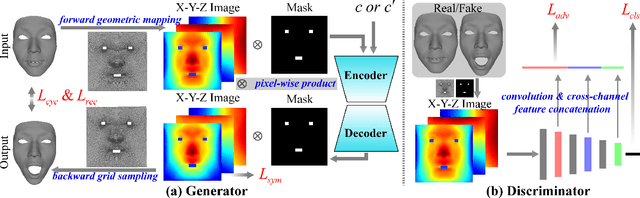

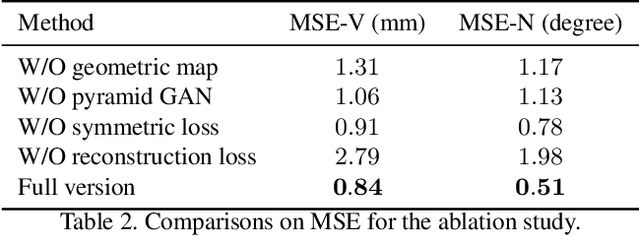

Abstract:While impressive progress has recently been made in image-oriented facial attribute translation, shape-oriented 3D facial attribute translation remains an unsolved issue. This is primarily limited by the lack of 3D generative models and ineffective usage of 3D facial data. We propose a learning framework for 3D facial attribute translation to relieve these limitations. Firstly, we customize a novel geometric map for 3D shape representation and embed it in an end-to-end generative adversarial network. The geometric map represents 3D shapes symmetrically on a square image grid, while preserving the neighboring relationship of 3D vertices in a local least-square sense. This enables effective learning for the latent representation of data with different attributes. Secondly, we employ a unified and unpaired learning framework for multi-domain attribute translation. It not only makes effective usage of data correlation from multiple domains, but also mitigates the constraint for hardly accessible paired data. Finally, we propose a hierarchical architecture for the discriminator to guarantee robust results against both global and local artifacts. We conduct extensive experiments to demonstrate the advantage of the proposed framework over the state-of-the-art in generating high-fidelity facial shapes. Given an input 3D facial shape, the proposed framework is able to synthesize novel shapes of different attributes, which covers some downstream applications, such as expression transfer, gender translation, and aging. Code at https://github.com/NaughtyZZ/3D_facial_shape_attribute_translation_ssgmap.

Causal Influence Maximization in Hypergraph

Jan 28, 2023

Abstract:Influence Maximization (IM) is the task of selecting a fixed number of seed nodes in a given network to maximize dissemination benefits. Although much recent research has been devoted to efficient algorithms, the graph structure and the objective function themselves are rarely explored further. With this motivation, we make a first attempt at hypergraph-based IM with a novel causal objective. We consider the case in which each hypergraph node carries specific attributes with an Individual Treatment Effect (ITE), namely the change in potential outcomes before and after infection, from a causal inference perspective. In many scenarios, the sum of ITEs of the infected is a more reasonable objective for influence spread, but it is difficult to optimize with current IM algorithms. In this paper, we introduce a new algorithm called CauIM. We first recover the ITE of each node from observational data and then run a weighted greedy algorithm to maximize the sum of ITEs of the infected. Theoretically, we present a generalized lower bound on influence spread beyond the well-known $(1-\frac{1}{e})$ optimal guarantee and provide a robustness analysis. Empirically, in real-world experiments, we demonstrate the effectiveness and robustness of CauIM. It significantly outperforms previous IM and randomized methods.
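The weighted greedy step described in this abstract can be illustrated with a minimal sketch. It is not the authors' CauIM implementation: it assumes the ITEs are already estimated and given as a dictionary, and it replaces the stochastic diffusion process with a hypothetical one-step deterministic spread in which a seed infects every node that shares a hyperedge with it. The function names `spread` and `greedy_causal_seeds` are illustrative only.

```python
from typing import Dict, List, Set


def spread(seeds: Set[str], hyperedges: List[Set[str]]) -> Set[str]:
    """Assumed toy diffusion: a seed infects all members of its hyperedges."""
    infected = set(seeds)
    for edge in hyperedges:
        if edge & seeds:
            infected |= edge
    return infected


def causal_value(seeds: Set[str], hyperedges: List[Set[str]],
                 ite: Dict[str, float]) -> float:
    """Objective: sum of ITEs over infected nodes (sorted for determinism)."""
    return sum(ite[v] for v in sorted(spread(seeds, hyperedges)))


def greedy_causal_seeds(hyperedges: List[Set[str]], ite: Dict[str, float],
                        k: int) -> List[str]:
    """Pick k seeds one at a time, each maximizing the ITE-weighted objective."""
    chosen: List[str] = []
    for _ in range(k):
        candidates = sorted(v for v in ite if v not in chosen)
        if not candidates:
            break
        # max() keeps the first maximum, so ties break alphabetically.
        best = max(candidates,
                   key=lambda v: causal_value(set(chosen) | {v}, hyperedges, ite))
        chosen.append(best)
    return chosen


if __name__ == "__main__":
    edges = [{"a", "b", "c"}, {"c", "d"}, {"e", "f"}]
    effects = {"a": 0.5, "b": 0.25, "c": 0.25, "d": 2.0, "e": 1.0, "f": -0.5}
    print(greedy_causal_seeds(edges, effects, 2))
```

Because an ITE can be negative (node `f` above), the ITE-weighted objective can rank seeds differently from a plain count of infected nodes, which is the motivation the abstract gives for the causal objective.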

Optimizing AD Pruning of Sponsored Search with Reinforcement Learning

Aug 05, 2020

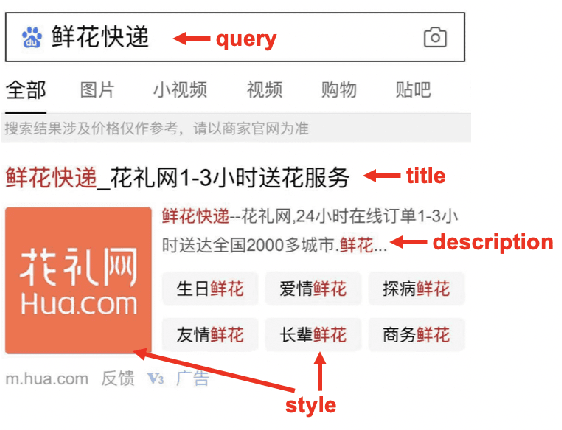

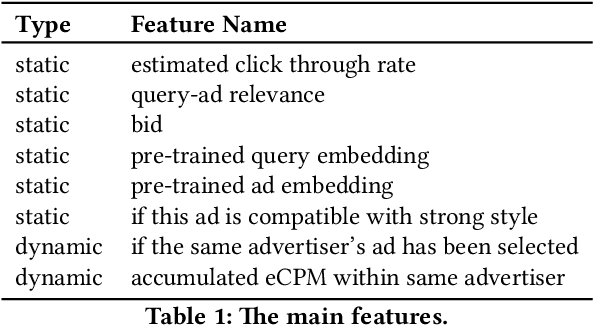

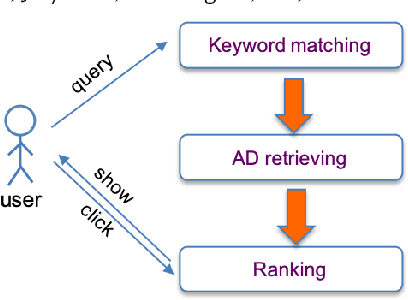

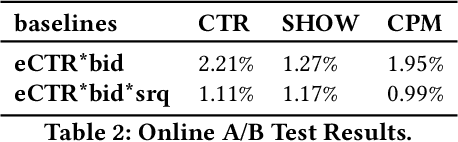

Abstract:An industrial sponsored search system (SSS) can be logically divided into three modules: keyword matching, ad retrieval, and ranking. During ad retrieval, the number of ad candidates grows exponentially. A query with high commercial value might retrieve so many ad candidates that the ranking module cannot afford to process them. Due to limited latency and computing resources, the candidates have to be pruned earlier. Suppose we set a pruning line that cuts the SSS into two parts: upstream and downstream. The problem we address is how to pick the best $K$ items from the $N$ candidates provided by the upstream so as to maximize the total system revenue. Since the industrial downstream is very complicated and updated quickly, a crucial restriction in this problem is that the selection scheme must adapt to the downstream. In this paper, we propose a novel model-free reinforcement learning approach to this problem. Our approach treats the downstream as a black-box environment: the agent sequentially selects items and finally feeds them into the downstream, where revenue is estimated and used as a reward to improve the selection policy. To the best of our knowledge, this is the first time that system optimization has been considered from a downstream adaptation view. It is also the first time that reinforcement learning techniques have been used to tackle this problem. The idea has been successfully realized in Baidu's sponsored search system, and long-running online A/B tests show remarkable improvements in revenue.

Dynamic Window-level Granger Causality of Multi-channel Time Series

Jun 14, 2020

Abstract:The Granger causality method analyzes causality among time series without building a complex causality graph. However, the traditional Granger causality method assumes that the causalities lie between time series channels and remain constant, so it cannot model real-world time series data whose causalities change dynamically along the time series channels. In this paper, we present the dynamic window-level Granger causality method (DWGC) for multi-channel time series data. We build the causality model at the window level by performing the F-test on the forecasting errors over sliding windows. We propose a causality indexing trick in our DWGC method to reweight the original time series data. Essentially, causality indexing decreases the auto-correlation and increases the cross-correlation causal effects, which improves the DWGC method. Theoretical analysis and experimental results on two synthetic datasets and one real-world dataset show that the improved DWGC method with causality indexing better detects window-level causalities.
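The window-level F-test mentioned in this abstract can be sketched as follows. This is a simplified illustration rather than the DWGC method itself: it assumes a lag-1 linear autoregressive forecaster fitted by least squares inside each window, returns only the F statistic (no p-value), and omits the causality indexing trick. The helper names `window_f_stats` and `demo_series` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting (assumes a is nonsingular)."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coef = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * coef[c] for c in range(r + 1, n))
        coef[r] = (m[r][n] - tail) / m[r][r]
    return coef


def rss(rows: List[List[float]], target: Sequence[float]) -> float:
    """Residual sum of squares (forecasting error) of the least-squares fit."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, target)) for i in range(k)]
    coef = solve(xtx, xty)
    return sum((t - sum(c * v for c, v in zip(coef, r))) ** 2
               for r, t in zip(rows, target))


def window_f_stats(x: Sequence[float], y: Sequence[float],
                   window: int, step: int) -> List[float]:
    """F statistic for 'x Granger-causes y' in each sliding window (lag 1)."""
    stats = []
    for start in range(0, len(y) - window + 1, step):
        xs, ys = x[start:start + window], y[start:start + window]
        target = ys[1:]
        restricted = [[1.0, ys[t - 1]] for t in range(1, window)]
        unrestricted = [[1.0, ys[t - 1], xs[t - 1]] for t in range(1, window)]
        rss_r, rss_u = rss(restricted, target), rss(unrestricted, target)
        dof = len(target) - 3  # observations minus unrestricted parameters
        stats.append(float("inf") if rss_u == 0
                     else (rss_r - rss_u) / (rss_u / dof))
    return stats


def demo_series(n: int = 200, seed: int = 0) -> Tuple[List[float], List[float]]:
    """Synthetic pair in which x drives y only in the second half."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    y = [0.0]
    for t in range(1, n):
        drive = 2.0 * x[t - 1] if t >= n // 2 else 0.0
        y.append(0.5 * y[t - 1] + drive + rng.gauss(0, 0.1))
    return x, y


if __name__ == "__main__":
    xs, ys = demo_series()
    print(window_f_stats(xs, ys, window=50, step=50))
```

On the synthetic pair, the windows in the second half should yield far larger F statistics than those in the first half, which is the kind of time-varying causality a single whole-series test would average away.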
