Zhigang Tu

EFSI-DETR: Efficient Frequency-Semantic Integration for Real-Time Small Object Detection in UAV Imagery

Jan 26, 2026

Abstract:Real-time small object detection in Unmanned Aerial Vehicle (UAV) imagery remains challenging due to limited feature representation and ineffective multi-scale fusion. Existing methods underutilize frequency information and rely on static convolutional operations, which constrain the capacity to obtain rich feature representations and hinder the effective exploitation of deep semantic features. To address these issues, we propose EFSI-DETR, a novel detection framework that integrates efficient semantic feature enhancement with dynamic frequency-spatial guidance. EFSI-DETR comprises two main components: (1) a Dynamic Frequency-Spatial Unified Synergy Network (DyFusNet) that jointly exploits frequency and spatial cues for robust multi-scale feature fusion, and (2) an Efficient Semantic Feature Concentrator (ESFC) that enables deep semantic extraction with minimal computational cost. Furthermore, a Fine-grained Feature Retention (FFR) strategy is adopted to incorporate spatially rich shallow features during fusion, preserving the fine-grained details that are crucial for small object detection in UAV imagery. Extensive experiments on the VisDrone and CODrone benchmarks demonstrate that EFSI-DETR achieves state-of-the-art performance with real-time efficiency, improving AP and AP$_{s}$ on VisDrone by 1.6% and 5.8%, respectively, while reaching an inference speed of 188 FPS on a single RTX 4090 GPU.
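
As a rough illustration of what "frequency information" means here, the sketch below splits a 1D signal into low- and high-frequency parts with a discrete Fourier transform. It is a generic textbook decomposition, not DyFusNet; the function names and the `cutoff` parameter are assumptions made for this example.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse DFT, returning only the real part of each sample."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n)
                for k in range(n)).real / n
            for t in range(n)]


def split_bands(x, cutoff):
    """Split x into (low, high) components; cutoff is a hypothetical bin index."""
    n = len(x)
    spectrum = dft(x)
    # Bins k and n - k carry the same absolute frequency, so keep both.
    low = [spectrum[k] if min(k, n - k) <= cutoff else 0 for k in range(n)]
    high = [spectrum[k] - low[k] for k in range(n)]
    return idft(low), idft(high)
```

With `cutoff=0` the low band is just the signal mean, and the two bands always sum back to the input; fine detail such as small objects lives mostly in the high band.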

DreamDance: Animating Character Art via Inpainting Stable Gaussian Worlds

May 30, 2025

Abstract:This paper presents DreamDance, a novel character art animation framework capable of producing stable, consistent character and scene motion conditioned on precise camera trajectories. To achieve this, we re-formulate the animation task as two inpainting-based steps: Camera-aware Scene Inpainting and Pose-aware Video Inpainting. The first step leverages a pre-trained image inpainting model to generate multi-view scene images from the reference art and optimizes a stable large-scale Gaussian field, which enables coarse background video rendering with camera trajectories. However, the rendered video is rough and only conveys scene motion. To resolve this, the second step trains a pose-aware video inpainting model that injects the dynamic character into the scene video while enhancing background quality. Specifically, this model is a DiT-based video generation model with a gating strategy that adaptively integrates the character's appearance and pose information into the base background video. Through extensive experiments, we demonstrate the effectiveness and generalizability of DreamDance, producing high-quality and consistent character animations with remarkable camera dynamics.

Visual Prompting for One-shot Controllable Video Editing without Inversion

Apr 19, 2025

Abstract:One-shot controllable video editing (OCVE) is an important yet challenging task that aims to propagate user edits made on the first frame of a video, using any image editing tool, to all subsequent frames while ensuring content consistency between edited frames and source frames. To achieve this, prior methods employ DDIM inversion to transform source frames into latent noise, which is then fed into a pre-trained diffusion model, conditioned on the user-edited first frame, to generate the edited video. However, the DDIM inversion process accumulates errors, which prevent the latent noise from accurately reconstructing the source frames and ultimately compromise content consistency in the generated edited frames. To overcome this, our method eliminates the need for DDIM inversion by approaching OCVE from a novel perspective based on visual prompting. Furthermore, inspired by consistency models, which can perform multi-step consistency sampling to generate a sequence of content-consistent images, we propose content consistency sampling (CCS) to ensure content consistency between the generated edited frames and the source frames. Moreover, we introduce temporal-content consistency sampling (TCS), based on Stein Variational Gradient Descent, to ensure temporal consistency across the edited frames. Extensive experiments validate the effectiveness of our approach.
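
For readers unfamiliar with Stein Variational Gradient Descent, the following is a minimal 1D sketch of the generic SVGD particle update with an RBF kernel. It is not the paper's TCS procedure; the standard normal target, the fixed bandwidth `h`, and the step size `eps` are assumptions chosen only to keep the example small.

```python
import math


def svgd_step(xs, grad_logp, h=1.0, eps=0.1):
    """One SVGD update for 1D particles xs with kernel exp(-(xj - xi)^2 / h)."""
    n = len(xs)
    updated = []
    for xi in xs:
        phi = 0.0
        for xj in xs:
            k = math.exp(-((xj - xi) ** 2) / h)
            dk = -2.0 * (xj - xi) / h * k  # derivative of the kernel w.r.t. xj
            # Attraction toward high density plus repulsion between particles.
            phi += k * grad_logp(xj) + dk
        updated.append(xi + eps * phi / n)
    return updated


def run_svgd(xs, steps, grad_logp=lambda x: -x):
    """Iterate svgd_step; the default target is a standard normal (assumed)."""
    for _ in range(steps):
        xs = svgd_step(xs, grad_logp)
    return xs
```

Starting from particles clustered far from the target, e.g. `run_svgd([3.0, 3.5, 4.0, 4.5, 5.0], 1000)`, the particles drift toward the mode while the kernel term keeps them from collapsing onto a single point.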

EchoMask: Speech-Queried Attention-based Mask Modeling for Holistic Co-Speech Motion Generation

Apr 15, 2025

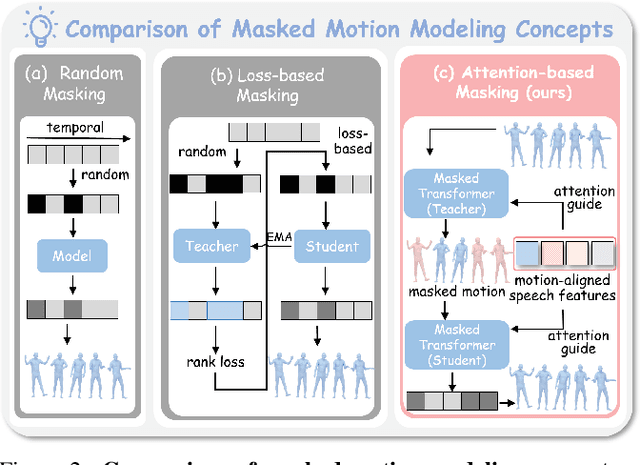

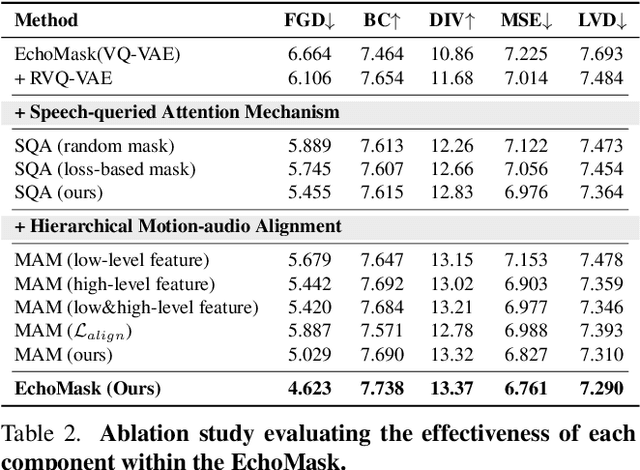

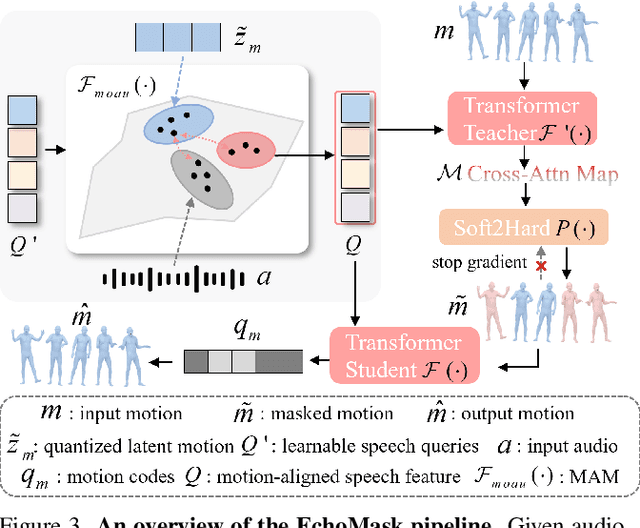

Abstract:Masked modeling frameworks have shown promise in co-speech motion generation. However, they struggle to identify semantically significant frames for effective motion masking. In this work, we propose a speech-queried attention-based mask modeling framework for co-speech motion generation. Our key insight is to leverage motion-aligned speech features to guide the masked motion modeling process, selectively masking rhythm-related and semantically expressive motion frames. Specifically, we first propose a motion-audio alignment module (MAM) to construct a latent motion-audio joint space. Both low-level and high-level speech features are projected into this space, enabling a motion-aligned speech representation through learnable speech queries. Then, a speech-queried attention mechanism (SQA) is introduced to compute frame-level attention scores through interactions between motion keys and speech queries, guiding selective masking toward motion frames with high attention scores. Finally, the motion-aligned speech features are also injected into the generation network to facilitate co-speech motion generation. Qualitative and quantitative evaluations confirm that our method outperforms existing state-of-the-art approaches, successfully producing high-quality co-speech motion.
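
The idea of scoring frames by attention between speech queries and motion keys, then masking the highest-scoring frames, can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' SQA module: scaled dot-product attention, averaging over queries, and the `ratio` parameter are all choices made for this example.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def frame_attention_scores(speech_queries, motion_keys):
    """Average, over queries, of softmax attention across motion frames."""
    dim = len(motion_keys[0])
    rows = []
    for query in speech_queries:
        logits = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in motion_keys]
        rows.append(softmax(logits))
    return [sum(row[t] for row in rows) / len(rows)
            for t in range(len(motion_keys))]


def select_mask(scores, ratio):
    """Indices of the top `ratio` fraction of frames by score (hypothetical rule)."""
    count = max(1, round(ratio * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda t: scores[t], reverse=True)
    return sorted(ranked[:count])
```

Frames whose keys align most strongly with the speech queries receive the largest scores and are therefore the ones chosen for masking.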

EMO-X: Efficient Multi-Person Pose and Shape Estimation in One-Stage

Apr 11, 2025

Abstract:Expressive Human Pose and Shape Estimation (EHPS) aims to jointly estimate human pose, hand gesture, and facial expression from monocular images. Existing methods predominantly rely on Transformer-based architectures, which suffer from quadratic complexity in self-attention, leading to substantial computational overhead, especially in multi-person scenarios. Recently, Mamba has emerged as a promising alternative to Transformers due to its efficient global modeling capability. However, it remains limited in capturing fine-grained local dependencies, which are essential for precise EHPS. To address these issues, we propose EMO-X, the Efficient Multi-person One-stage model for multi-person EHPS. Specifically, we explore a Scan-based Global-Local Decoder (SGLD) that integrates global context with skeleton-aware local features to iteratively enhance human tokens. EMO-X leverages the superior global modeling capability of Mamba and introduces a local bidirectional scan mechanism for skeleton-aware local refinement. Comprehensive experiments demonstrate that EMO-X strikes an excellent balance between efficiency and accuracy. Notably, it achieves a significant reduction in computational cost, requiring 69.8% less inference time than state-of-the-art (SOTA) methods while outperforming most of them in accuracy.
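
To put the reported time saving in more familiar terms, a relative reduction in runtime can be converted to a speedup factor. The helper below is a small worked calculation; its name is hypothetical and it assumes the 69.8% figure is a reduction relative to the baseline's inference time.

```python
def speedup_from_time_reduction(reduction):
    """Speedup factor when runtime drops by `reduction`, a fraction in [0, 1)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    # New time is (1 - reduction) of the old time, so speedup is its inverse.
    return 1.0 / (1.0 - reduction)
```

Under that assumption, `speedup_from_time_reduction(0.698)` gives roughly 3.3, i.e. about 3.3 times faster than the baseline.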

OwlSight: A Robust Illumination Adaptation Framework for Dark Video Human Action Recognition

Mar 30, 2025

Abstract:Human action recognition in low-light environments is crucial for various real-world applications. However, existing approaches do not fully utilize brightness information throughout the training phase, leading to suboptimal performance. To address this limitation, we propose OwlSight, a biomimetic-inspired framework in which whole-stage illumination enhancement interacts with action classification for accurate dark video human action recognition. Specifically, OwlSight incorporates a Time-Consistency Module (TCM) to capture shallow spatiotemporal features while maintaining temporal coherence; these features are then processed by a Luminance Adaptation Module (LAM) that dynamically adjusts the brightness based on the input luminance distribution. Furthermore, a Reflect Augmentation Module (RAM) is presented to maximize illumination utilization and simultaneously enhance action recognition via two interactive paths. Additionally, we build Dark-101, a large-scale dataset comprising 18,310 dark videos across 101 action categories, significantly surpassing existing datasets (e.g., ARID1.5 and Dark-48) in scale and diversity. Extensive experiments demonstrate that the proposed OwlSight achieves state-of-the-art performance across four low-light action recognition benchmarks. Notably, it outperforms the previous best approaches by 5.36% on ARID1.5 and 1.72% on Dark-101, highlighting its effectiveness in challenging dark environments.

Adaptive Prototype Model for Attribute-based Multi-label Few-shot Action Recognition

Feb 18, 2025

Abstract:In real-world action recognition systems, incorporating more attributes helps achieve a more comprehensive understanding of human behavior. However, using a single model to simultaneously recognize multiple attributes can lead to a decrease in accuracy. In this work, we propose a novel method, the Adaptive Attribute Prototype Model (AAPM), for human action recognition, which captures rich action-relevant attribute information and strikes a balance between accuracy and robustness. First, we introduce the Text-Constrain Module (TCM) to incorporate textual information from potential labels and constrain the construction of prototype representations for different attributes. In addition, we explore the Attribute Assignment Method (AAM) to address the issue of training bias and increase robustness during the training process. Furthermore, we construct a new video dataset with attribute-based multi-label annotations, called Multi-Kinetics, for evaluation; it contains various attribute labels (e.g., action, scene, object) related to human behavior. Extensive experiments demonstrate that AAPM achieves state-of-the-art performance in both attribute-based multi-label few-shot action recognition and single-label few-shot action recognition. The project and dataset are available at an anonymous account: https://github.com/theAAPM/AAPM

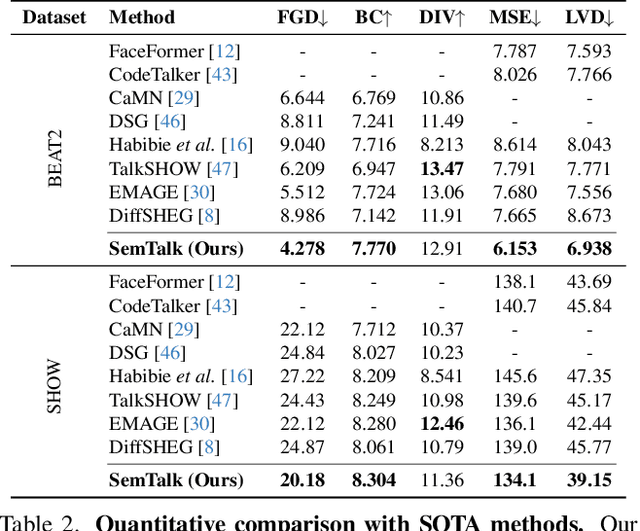

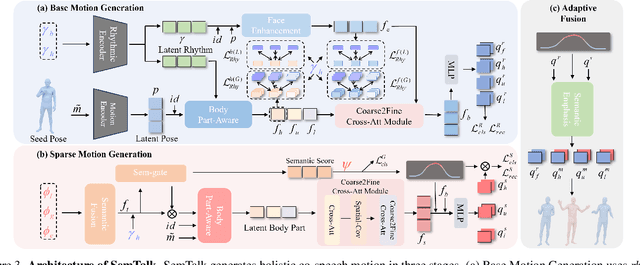

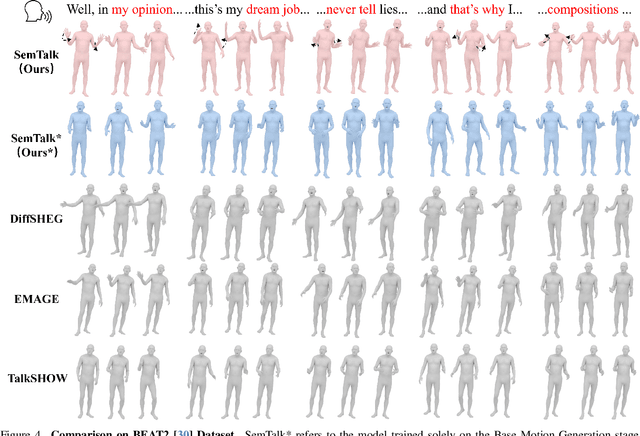

SemTalk: Holistic Co-speech Motion Generation with Frame-level Semantic Emphasis

Dec 21, 2024

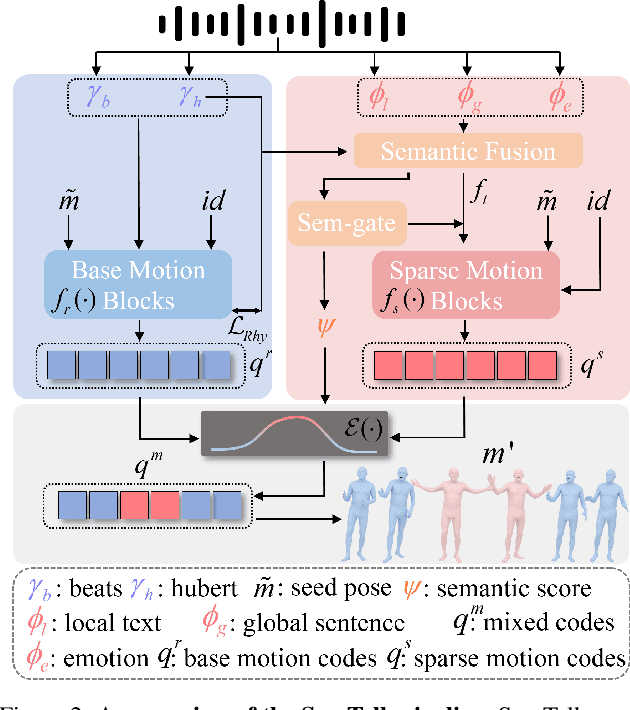

Abstract:Good co-speech motion generation cannot be achieved without a careful integration of common rhythmic motion and rare yet essential semantic motion. In this work, we propose SemTalk for holistic co-speech motion generation with frame-level semantic emphasis. Our key insight is to separately learn general motions and sparse motions, and then adaptively fuse them. In particular, rhythmic consistency learning is explored to establish rhythm-related base motion, ensuring a coherent foundation that synchronizes gestures with the speech rhythm. Subsequently, semantic emphasis learning is designed to generate semantic-aware sparse motion, focusing on frame-level semantic cues. Finally, to integrate sparse motion into the base motion and generate semantic-emphasized co-speech gestures, we further leverage a learned semantic score for adaptive synthesis. Qualitative and quantitative comparisons on two public datasets demonstrate that our method outperforms the state-of-the-art, delivering high-quality co-speech motion with enhanced semantic richness over a stable base motion.
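
One simple way to picture score-guided fusion of a base motion and a sparse motion is a per-frame convex blend. The sketch below is only an illustration of that idea: the abstract does not specify the fusion rule, so the blend formula, the clamping of scores to [0, 1], and the function name are all assumptions.

```python
def fuse_motion(base, sparse, scores):
    """Blend per-frame pose vectors: score 0 keeps base, score 1 takes sparse."""
    fused = []
    for base_frame, sparse_frame, score in zip(base, sparse, scores):
        weight = min(1.0, max(0.0, score))  # clamp the semantic score (assumed range)
        fused.append([(1.0 - weight) * b + weight * s
                      for b, s in zip(base_frame, sparse_frame)])
    return fused
```

Frames with a low semantic score stay close to the rhythmic base motion, while frames with a high score are dominated by the semantic-aware sparse motion.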

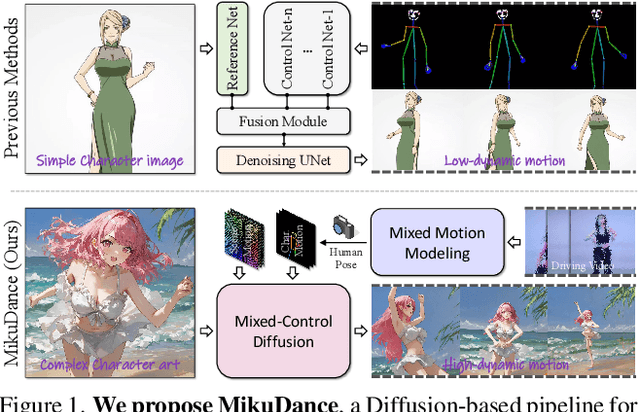

MikuDance: Animating Character Art with Mixed Motion Dynamics

Nov 14, 2024

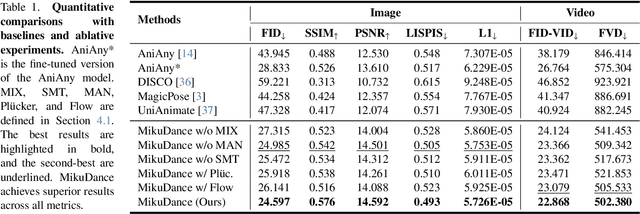

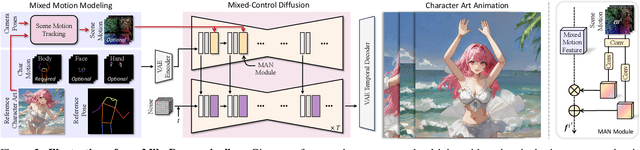

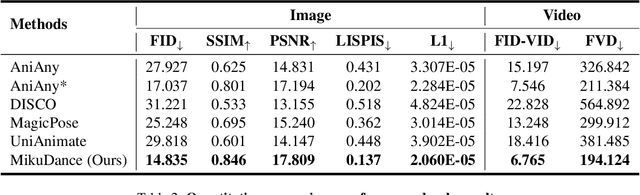

Abstract:We propose MikuDance, a diffusion-based pipeline incorporating mixed motion dynamics to animate stylized character art. MikuDance consists of two key techniques: Mixed Motion Modeling and Mixed-Control Diffusion, to address the challenges of high-dynamic motion and reference-guidance misalignment in character art animation. Specifically, a Scene Motion Tracking strategy is presented to explicitly model the dynamic camera in pixel-wise space, enabling unified character-scene motion modeling. Building on this, the Mixed-Control Diffusion implicitly aligns the scale and body shape of diverse characters with motion guidance, allowing flexible control of local character motion. Subsequently, a Motion-Adaptive Normalization module is incorporated to effectively inject global scene motion, paving the way for comprehensive character art animation. Through extensive experiments, we demonstrate the effectiveness and generalizability of MikuDance across various character art and motion guidance, consistently producing high-quality animations with remarkable motion dynamics.

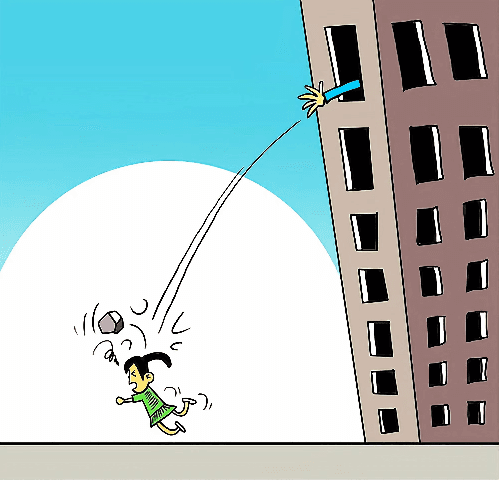

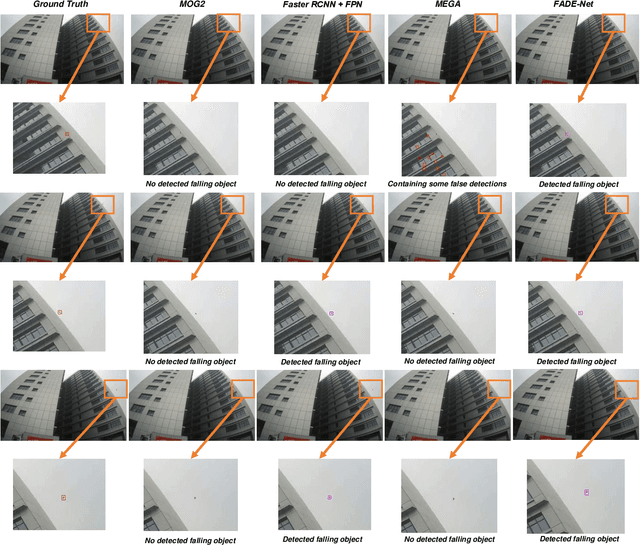

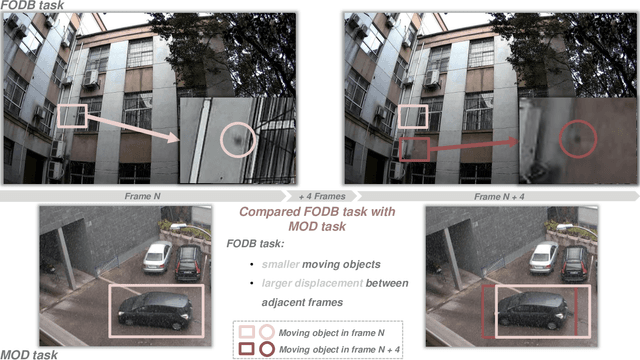

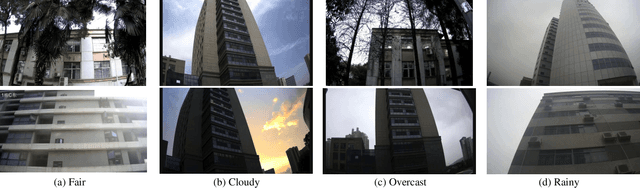

FADE: A Dataset for Detecting Falling Objects around Buildings in Video

Aug 11, 2024

Abstract:Falling objects from buildings can cause severe injuries to pedestrians due to the great impact force they exert. Although surveillance cameras are installed around some buildings, it is challenging for humans to spot such events in surveillance videos due to the small size and fast motion of falling objects, as well as the complex background. Therefore, it is necessary to develop methods to automatically detect falling objects around buildings in surveillance videos. To facilitate the investigation of falling object detection, we propose, for the first time, a large, diverse video dataset called FADE (FAlling Object DEtection around Buildings). FADE contains 1,881 videos from 18 scenes, featuring 8 falling object categories, 4 weather conditions, and 4 video resolutions. Additionally, we develop a new object detection method called FADE-Net, which effectively leverages motion information and produces small-sized but high-quality proposals for detecting falling objects around buildings. Importantly, our method is extensively evaluated and analyzed on the FADE dataset by comparing it with previous approaches used for generic object detection, video object detection, and moving object detection. Experimental results show that the proposed FADE-Net significantly outperforms other methods, providing an effective baseline for future research. The dataset and code are publicly available at https://fadedataset.github.io/FADE.github.io/.
