Zhichao Sun

LIGM

IVC-Prune: Revealing the Implicit Visual Coordinates in LVLMs for Vision Token Pruning

Feb 03, 2026

Abstract:Large Vision-Language Models (LVLMs) achieve impressive performance across multiple tasks. A significant challenge, however, is their prohibitive inference cost when processing high-resolution visual inputs. While visual token pruning has emerged as a promising solution, existing methods that primarily focus on semantic relevance often discard tokens that are crucial for spatial reasoning. We address this gap through a novel insight into how LVLMs process spatial reasoning. Specifically, we reveal that LVLMs implicitly establish visual coordinate systems through Rotary Position Embeddings (RoPE), where specific token positions serve as implicit visual coordinates (IVC tokens) that are essential for spatial reasoning. Based on this insight, we propose IVC-Prune, a training-free, prompt-aware pruning strategy that retains both IVC tokens and semantically relevant foreground tokens. IVC tokens are identified by theoretically analyzing the mathematical properties of RoPE, targeting positions at which its rotation matrices approximate the identity matrix or the $90^\circ$ rotation matrix. Foreground tokens are identified through a robust two-stage process: semantic seed discovery followed by contextual refinement via value-vector similarity. Extensive evaluations across four representative LVLMs and twenty diverse benchmarks show that IVC-Prune reduces visual tokens by approximately 50% while maintaining $\geq$ 99% of the original performance and even achieving improvements on several benchmarks. Source codes are available at https://github.com/FireRedTeam/IVC-Prune.
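The idea of looking for positions whose RoPE rotation is close to the identity or to a $90^\circ$ rotation can be illustrated with a small sketch. This is not the authors' selection procedure: the scoring functions, the helper names (`rope_angles`, `identity_score`, `quarter_turn_score`, `top_positions`), the head dimension, and the base of 10000 are all assumptions chosen for illustration. For a 2x2 rotation by angle $\theta$, the squared Frobenius distance to the identity is $4 - 4\cos\theta$ and the distance to the $90^\circ$ rotation is $4 - 4\sin\theta$, so averaging $\cos$ or $\sin$ over the frequency pairs gives a simple closeness score.

```python
import math

def rope_angles(position, head_dim=8, base=10000.0):
    """Rotation angle of each 2-D RoPE pair at a given token position.

    Assumes the common RoPE frequency schedule theta_i = base^(-2i/d).
    """
    return [position * base ** (-2.0 * i / head_dim) for i in range(head_dim // 2)]

def identity_score(position, head_dim=8, base=10000.0):
    """Mean cos(angle); 1.0 means every 2x2 block is exactly the identity."""
    angles = rope_angles(position, head_dim, base)
    return sum(math.cos(a) for a in angles) / len(angles)

def quarter_turn_score(position, head_dim=8, base=10000.0):
    """Mean sin(angle); 1.0 means every 2x2 block is exactly a 90-degree rotation."""
    angles = rope_angles(position, head_dim, base)
    return sum(math.sin(a) for a in angles) / len(angles)

def top_positions(num_positions, k, score_fn, **kwargs):
    """Return the k positions with the highest score (hypothetical selection rule)."""
    scored = [(score_fn(p, **kwargs), p) for p in range(num_positions)]
    return [p for _, p in sorted(scored, reverse=True)[:k]]
```

Under these assumptions, `top_positions(64, 4, identity_score)` and `top_positions(64, 4, quarter_turn_score)` would give two candidate sets of positions to keep alongside the foreground tokens; how the paper combines or thresholds them is not stated in the abstract.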

MHA2MLA-VLM: Enabling DeepSeek's Economical Multi-Head Latent Attention across Vision-Language Models

Jan 16, 2026

Abstract:As vision-language models (VLMs) tackle increasingly complex and multimodal tasks, the rapid growth of Key-Value (KV) cache imposes significant memory and computational bottlenecks during inference. While Multi-Head Latent Attention (MLA) offers an effective means to compress the KV cache and accelerate inference, adapting existing VLMs to the MLA architecture without costly pretraining remains largely unexplored. In this work, we present MHA2MLA-VLM, a parameter-efficient and multimodal-aware framework for converting off-the-shelf VLMs to MLA. Our approach features two core techniques: (1) a modality-adaptive partial-RoPE strategy that supports both traditional and multimodal settings by selectively masking nonessential dimensions, and (2) a modality-decoupled low-rank approximation method that independently compresses the visual and textual KV spaces. Furthermore, we introduce parameter-efficient fine-tuning to minimize adaptation cost and demonstrate that minimizing output activation error, rather than parameter distance, substantially reduces performance loss. Extensive experiments on three representative VLMs show that MHA2MLA-VLM restores original model performance with minimal supervised data, significantly reduces KV cache footprint, and integrates seamlessly with KV quantization.

Speech-Language Models with Decoupled Tokenizers and Multi-Token Prediction

Jun 14, 2025

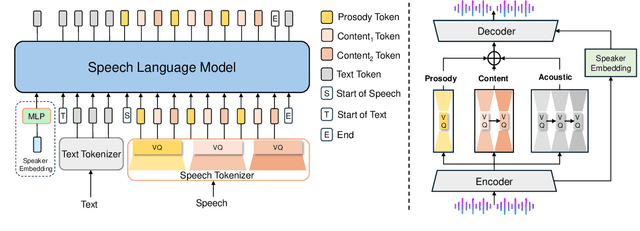

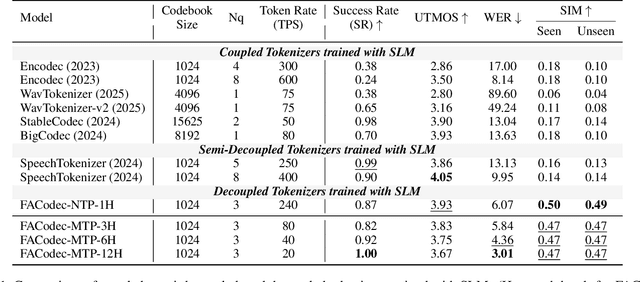

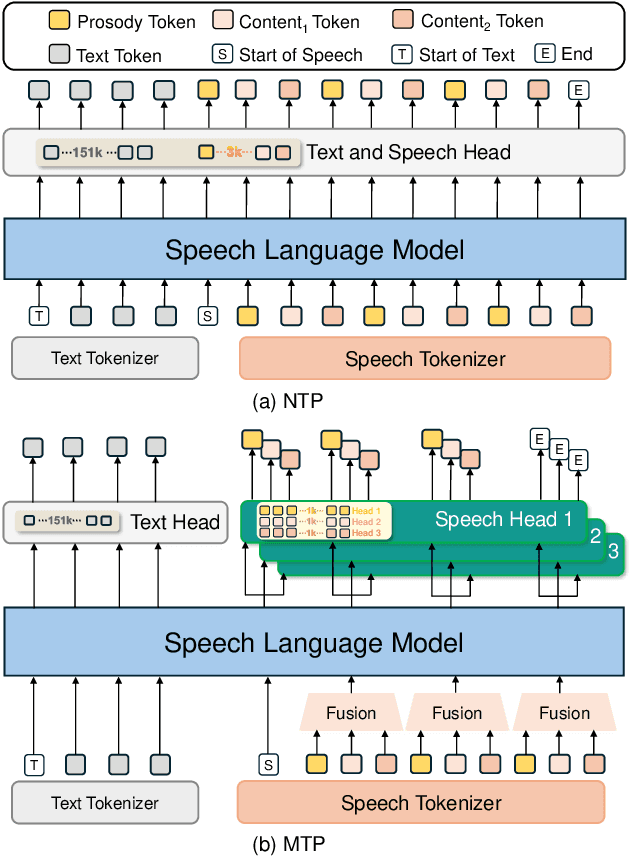

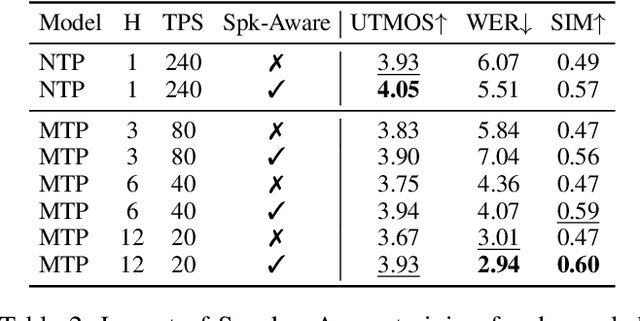

Abstract:Speech-language models (SLMs) offer a promising path toward unifying speech and text understanding and generation. However, challenges remain in achieving effective cross-modal alignment and high-quality speech generation. In this work, we systematically investigate the impact of key components (i.e., speech tokenizers, speech heads, and speaker modeling) on the performance of LLM-centric SLMs. We compare coupled, semi-decoupled, and fully decoupled speech tokenizers under a fair SLM framework and find that decoupled tokenization significantly improves alignment and synthesis quality. To address the information density mismatch between speech and text, we introduce multi-token prediction (MTP) into SLMs, enabling each hidden state to decode multiple speech tokens. This leads to up to 12$\times$ faster decoding and a substantial drop in word error rate (from 6.07 to 3.01). Furthermore, we propose a speaker-aware generation paradigm and introduce RoleTriviaQA, a large-scale role-playing knowledge QA benchmark with diverse speaker identities. Experiments demonstrate that our methods enhance both knowledge understanding and speaker consistency.
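To see why letting each hidden state emit several speech tokens shortens decoding, a rough step count helps. The sketch below is only arithmetic under assumed names (`decoding_steps`, `group_tokens`) and an illustrative group size of 4; it does not model the paper's prediction heads or its reported speedup.

```python
import math

def decoding_steps(num_speech_tokens, tokens_per_step):
    """Number of autoregressive steps if each step emits tokens_per_step tokens."""
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be at least 1")
    return math.ceil(num_speech_tokens / tokens_per_step)

def group_tokens(tokens, tokens_per_step):
    """Split a speech-token sequence into the groups decoded at each step."""
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be at least 1")
    return [tokens[i:i + tokens_per_step]
            for i in range(0, len(tokens), tokens_per_step)]
```

With these helpers, a 120-token utterance takes `decoding_steps(120, 1)` steps with ordinary next-token prediction and `decoding_steps(120, 4)` steps when four tokens are decoded per hidden state.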

MIFNet: Learning Modality-Invariant Features for Generalizable Multimodal Image Matching

Jan 20, 2025

Abstract:Many keypoint detection and description methods have been proposed for image matching or registration. While these methods demonstrate promising performance for single-modality image matching, they often struggle with multimodal data because the descriptors trained on single-modality data tend to lack robustness against the non-linear variations present in multimodal data. Extending such methods to multimodal image matching often requires well-aligned multimodal data to learn modality-invariant descriptors. However, acquiring such data is often costly and impractical in many real-world scenarios. To address this challenge, we propose a modality-invariant feature learning network (MIFNet) to compute modality-invariant features for keypoint descriptions in multimodal image matching using only single-modality training data. Specifically, we propose a novel latent feature aggregation module and a cumulative hybrid aggregation module to enhance the base keypoint descriptors trained on single-modality data by leveraging pre-trained features from Stable Diffusion models. We validate our method with recent keypoint detection and description methods in three multimodal retinal image datasets (CF-FA, CF-OCT, EMA-OCTA) and two remote sensing datasets (Optical-SAR and Optical-NIR). Extensive experiments demonstrate that the proposed MIFNet is able to learn modality-invariant features for multimodal image matching without accessing the targeted modality and has good zero-shot generalization ability. The source code will be made publicly available.

Shape Transformation Driven by Active Contour for Class-Imbalanced Semi-Supervised Medical Image Segmentation

Oct 18, 2024

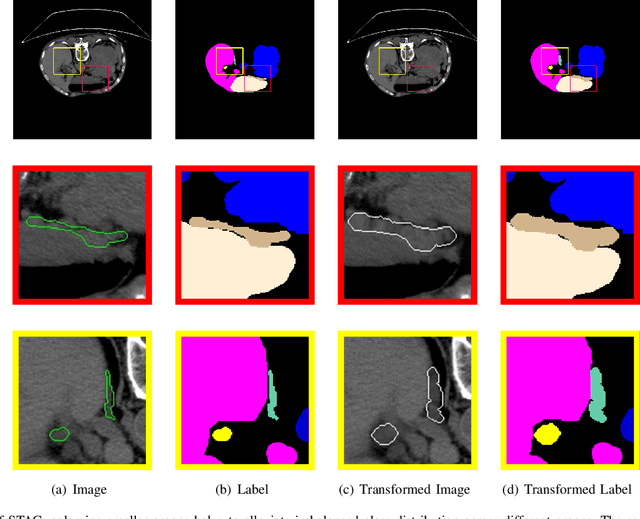

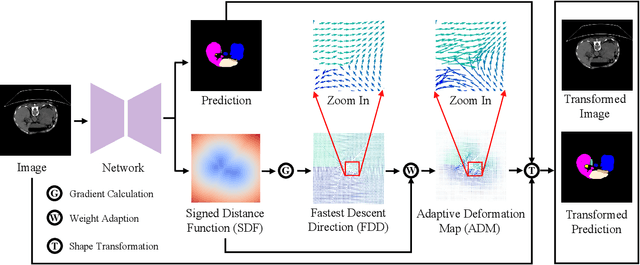

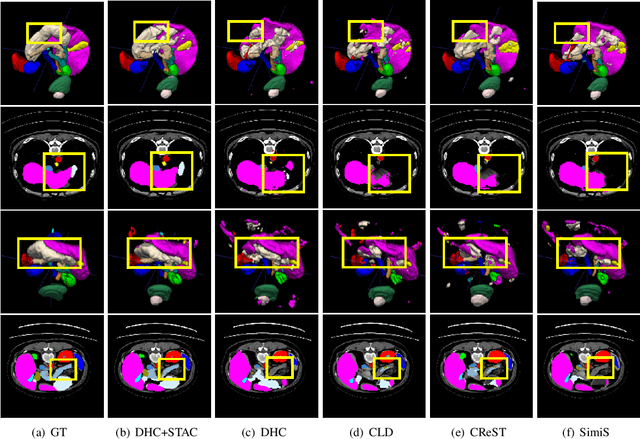

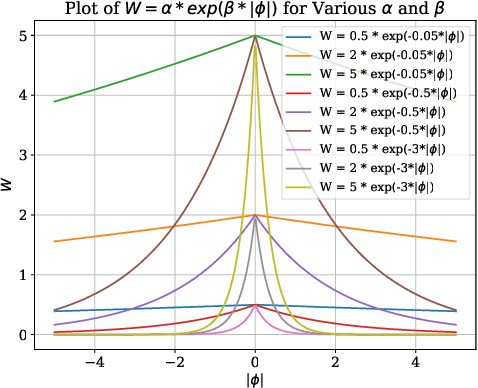

Abstract:Annotating 3D medical images demands expert knowledge and is time-consuming. As a result, semi-supervised learning (SSL) approaches have gained significant interest in 3D medical image segmentation. The significant size differences among various organs in the human body lead to imbalanced class distribution, which is a major challenge in the real-world application of these SSL approaches. To address this issue, we develop a novel Shape Transformation driven by Active Contour (STAC) that enlarges smaller organs to alleviate imbalanced class distribution across different organs. Inspired by curve evolution theory in active contour methods, STAC employs a signed distance function (SDF) as the level set function to implicitly represent the shape of organs, and deforms voxels in the direction of the steepest descent of SDF (i.e., the normal vector). To ensure that the voxels far from expansion organs remain unchanged, we design an SDF-based weight function to control the degree of deformation for each voxel. We then use STAC as a data-augmentation process during the training stage. Experimental results on two benchmark datasets demonstrate that the proposed method significantly outperforms some state-of-the-art methods. Source code is publicly available at https://github.com/GuGuLL123/STAC.

Spatial-aware Attention Generative Adversarial Network for Semi-supervised Anomaly Detection in Medical Image

May 21, 2024

Abstract:Medical anomaly detection is a critical research area aimed at recognizing abnormal images to aid in diagnosis. Most existing methods adopt synthetic anomalies and image restoration on normal samples to detect anomalies. The unlabeled data consisting of both normal and abnormal data is not well explored. We introduce a novel Spatial-aware Attention Generative Adversarial Network (SAGAN) for one-class semi-supervised generation of healthy images. Our core insight is the utilization of position encoding and attention to accurately focus on restoring abnormal regions and preserving normal regions. To fully utilize the unlabeled data, SAGAN relaxes the cyclic consistency requirement of the existing unpaired image-to-image conversion methods, and generates high-quality healthy images corresponding to unlabeled data, guided by the reconstruction of normal images and restoration of pseudo-anomaly images. Subsequently, the discrepancy between the generated healthy image and the original image is utilized as an anomaly score. Extensive experiments on three medical datasets demonstrate that the proposed SAGAN outperforms the state-of-the-art methods.
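The scoring step, where the gap between the input and its generated healthy counterpart becomes the anomaly score, can be sketched as follows. The abstract does not say which discrepancy measure is used, so the mean absolute pixel difference here is an assumption, as is the function name `anomaly_score`; images are represented as plain nested lists of floats to keep the example dependency-free.

```python
def anomaly_score(original, restored):
    """Mean absolute pixel difference between an image and its 'healthy' restoration.

    Both images are 2-D lists of floats with identical shape.
    The L1 measure is an illustrative assumption, not the paper's stated metric.
    """
    if len(original) != len(restored) or any(
        len(row_o) != len(row_r) for row_o, row_r in zip(original, restored)
    ):
        raise ValueError("images must have the same shape")
    total = 0.0
    count = 0
    for row_o, row_r in zip(original, restored):
        for o, r in zip(row_o, row_r):
            total += abs(o - r)
            count += 1
    return total / count
```

A normal image that the generator reproduces faithfully would score near zero, while an image whose abnormal region is "repaired" by the generator would score higher.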

Position-Guided Prompt Learning for Anomaly Detection in Chest X-Rays

May 20, 2024

Abstract:Anomaly detection in chest X-rays is a critical task. Most methods mainly model the distribution of normal images, and then regard significant deviation from normal distribution as anomaly. Recently, CLIP-based methods, pre-trained on a large number of medical images, have shown impressive performance on zero/few-shot downstream tasks. In this paper, we aim to explore the potential of CLIP-based methods for anomaly detection in chest X-rays. Considering the discrepancy between the CLIP pre-training data and the task-specific data, we propose a position-guided prompt learning method. Specifically, inspired by the fact that experts diagnose chest X-rays by carefully examining distinct lung regions, we propose learnable position-guided text and image prompts to adapt the task data to the frozen pre-trained CLIP-based model. To enhance the model's discriminative capability, we propose a novel structure-preserving anomaly synthesis method within chest X-rays during the training process. Extensive experiments on three datasets demonstrate that our proposed method outperforms some state-of-the-art methods. The code of our implementation is available at https://github.com/sunzc-sunny/PPAD.

Noised Autoencoders for Point Annotation Restoration in Object Counting

Dec 12, 2023

Abstract:Object counting is a field of growing importance in domains such as security surveillance, urban planning, and biology. The annotation is usually provided in terms of 2D points. However, the complexity of object shapes and the subjectivity of annotators may lead to annotation inconsistency, potentially confusing the model during training. To alleviate this issue, we introduce the Noised Autoencoders (NAE) methodology, which extracts general positional knowledge from all annotations. The method involves adding random offsets to initial point annotations, followed by a UNet to restore them to their original positions. Similar to MAE, NAE faces challenges in restoring non-generic points, necessitating reliance on the most common positions inferred from general knowledge. This reliance forms the cornerstone of our method's effectiveness. Different from existing noise-resistance methods, our approach focuses on directly improving initial point annotations. Extensive experiments show that NAE yields more consistent annotations compared to the original ones, steadily enhancing the performance of advanced models trained with these revised annotations. Remarkably, the proposed approach helps to set new records in nine datasets. We will make the NAE codes and refined point annotations available.
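The noising half of this procedure, adding random offsets to point annotations before a network learns to restore them, might look like the sketch below. The uniform offset distribution, the clamping to image bounds, and the name `add_point_noise` are assumptions for illustration; the UNet restoration step is not shown.

```python
import random

def add_point_noise(points, max_offset, width, height, seed=None):
    """Jitter each (x, y) annotation by a random offset of at most max_offset per axis.

    Offsets are drawn uniformly (an assumption); results are clamped so the
    noised points stay inside a width x height image.
    """
    rng = random.Random(seed)
    noised = []
    for x, y in points:
        nx = x + rng.uniform(-max_offset, max_offset)
        ny = y + rng.uniform(-max_offset, max_offset)
        # Keep the noised point inside the image.
        nx = min(max(nx, 0.0), width - 1.0)
        ny = min(max(ny, 0.0), height - 1.0)
        noised.append((nx, ny))
    return noised
```

In a training loop under these assumptions, the noised points would be the network input and the original annotations the restoration target.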

Dual Structure-Preserving Image Filterings for Semi-supervised Medical Image Segmentation

Dec 12, 2023

Abstract:Semi-supervised image segmentation has attracted great attention recently. The key is how to leverage unlabeled images in the training process. Most methods maintain consistent predictions of the unlabeled images under variations (e.g., adding noise/perturbations, or creating alternative versions) in the image and/or model level. In most image-level variation, medical images often have prior structure information, which has not been well explored. In this paper, we propose novel dual structure-preserving image filterings (DSPIF) as the image-level variations for semi-supervised medical image segmentation. Motivated by connected filtering that simplifies an image via filtering in a structure-aware tree-based image representation, we resort to the dual contrast-invariant Max-tree and Min-tree representations. Specifically, we propose a novel connected filtering that removes topologically equivalent nodes (i.e., connected components) having no siblings in the Max/Min-tree. This results in two filtered images preserving topologically critical structure. Applying such dual structure-preserving image filterings in mutual supervision is beneficial for semi-supervised medical image segmentation. Extensive experimental results on three benchmark datasets demonstrate that the proposed method significantly/consistently outperforms some state-of-the-art methods. The source codes will be publicly available.

CaSAR: Contact-aware Skeletal Action Recognition

Sep 17, 2023

Abstract:Skeletal action recognition from an egocentric view is important for applications such as interfaces in AR/VR glasses and human-robot interaction, where the device has limited resources. Most of the existing skeletal action recognition approaches use 3D coordinates of hand joints and 8-corner rectangular bounding boxes of objects as inputs, but they do not capture how the hands and objects interact with each other within the spatial context. In this paper, we present a new framework called Contact-aware Skeletal Action Recognition (CaSAR). It uses novel representations of hand-object interaction that encompass spatial information: 1) contact points where the hand joints meet the objects, 2) distant points where the hand joints are far away from the object and barely involved in the current action. Our framework is able to learn how the hands touch or stay away from the objects for each frame of the action sequence, and use this information to predict the action class. We demonstrate that our approach achieves the state-of-the-art accuracy of 91.3% and 98.4% on two public datasets, H2O and FPHA, respectively.
