Jianyu Yang

SAR ATR Method with Limited Training Data via an Embedded Feature Augmenter and Dynamic Hierarchical-Feature Refiner

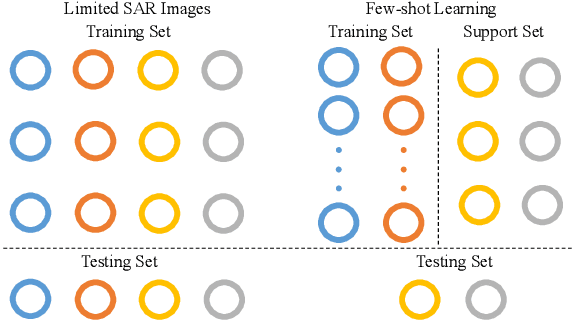

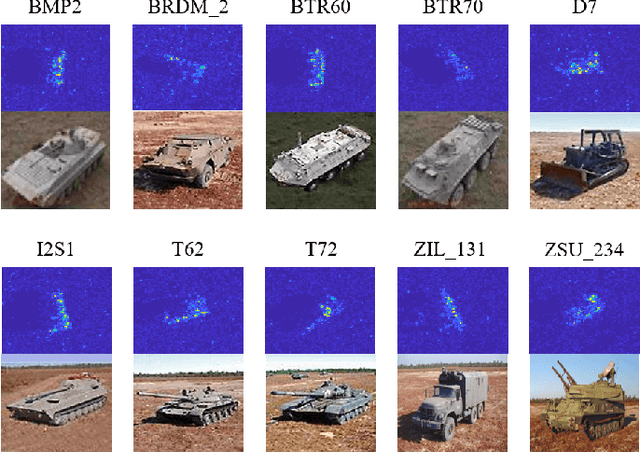

Sep 01, 2023

Abstract: Obtaining sufficient synthetic aperture radar (SAR) training data is frequently challenging in practice, which constrains the quantity of information available for supervised training. As a result, current SAR automatic target recognition (ATR) algorithms perform poorly when training data are limited, creating a critical need to improve SAR ATR performance under this condition. In this study, a new method to improve SAR ATR when training data are limited is proposed. First, an embedded feature augmenter is designed to enhance the extracted virtual features located far away from the class center. Based on the relative distribution of the features, the algorithm pulls the corresponding virtual features toward their class center with different strengths. The designed augmenter increases the amount of information available for supervised training and improves the separability of the extracted features. Second, a dynamic hierarchical-feature refiner is proposed to capture the discriminative local features of the samples. Through dynamically generated kernels, the proposed refiner integrates the discriminative local features of different dimensions into the global features, further enhancing the inner-class compactness and inter-class separability of the extracted features. The proposed method not only increases the amount of information available for supervised training but also extracts the discriminative features from the samples, resulting in superior ATR performance in problems with limited SAR training data. Experimental results on the moving and stationary target acquisition and recognition (MSTAR), OpenSARShip, and FUSAR-Ship benchmark datasets demonstrate the robustness and outstanding ATR performance of the proposed method under limited SAR training data.
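The idea of pulling features toward their class center with distance-dependent strength can be illustrated with a minimal NumPy sketch. This is not the paper's augmenter: the function name `pull_toward_centers`, the `max_strength` parameter, and the linear relation between distance and pull strength are all assumptions made for illustration.

```python
import numpy as np

def pull_toward_centers(features, labels, max_strength=0.5):
    """Return virtual features moved toward their class centers.

    Assumption (not from the abstract): the pull strength grows linearly
    with a feature's distance from its class center, normalized by the
    largest distance observed within that class.
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    virtual = features.copy()
    for cls in np.unique(labels):
        mask = labels == cls
        center = features[mask].mean(axis=0)
        dist = np.linalg.norm(features[mask] - center, axis=1)
        scale = dist.max()
        if scale == 0:
            continue  # all features already coincide with the center
        strength = max_strength * dist / scale  # values in [0, max_strength]
        virtual[mask] = features[mask] + strength[:, None] * (center - features[mask])
    return virtual
```

Under these assumptions, the feature farthest from the center is moved the most, so every class becomes more compact while the class center itself is only approximately preserved.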

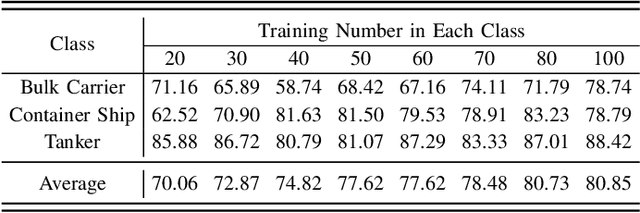

Semi-Supervised SAR ATR Framework with Transductive Auxiliary Segmentation

Aug 31, 2023

Abstract: Convolutional neural networks (CNNs) have achieved high performance in synthetic aperture radar (SAR) automatic target recognition (ATR). However, the performance of CNNs depends heavily on a large amount of training data. The shortage of labeled training SAR images limits recognition performance and even invalidates some ATR methods. Furthermore, with only a few labeled training samples, many existing CNNs become ineffective. To address these challenges, we propose a Semi-supervised SAR ATR Framework with transductive Auxiliary Segmentation (SFAS). The proposed framework focuses on exploiting transductive generalization on available unlabeled samples, with an auxiliary loss serving as a regularizer. Through auxiliary segmentation of unlabeled SAR samples and an information residue loss (IRL) in training, the framework employs the proposed training loop and gradually exploits the combined information of recognition and segmentation to construct a helpful inductive bias and achieve high performance. Experiments conducted on the MSTAR dataset show the effectiveness of the proposed SFAS for few-shot learning. A recognition rate of 94.18% is achieved with 20 training samples per class, together with accurate segmentation results. Under variations of EOCs, the recognition rates are higher than 88.00% with 10 training samples per class.

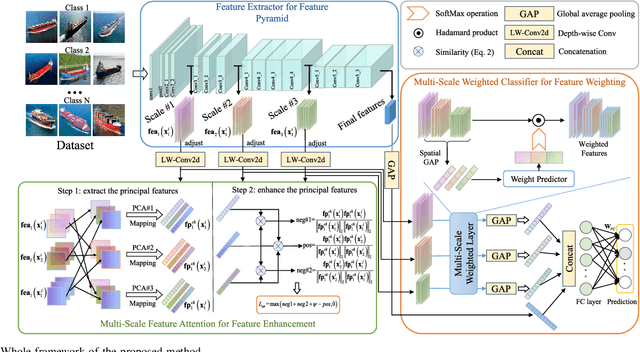

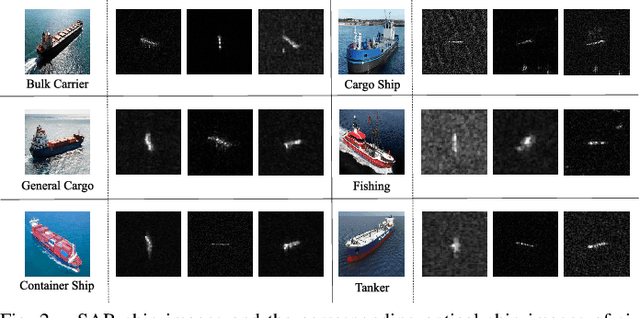

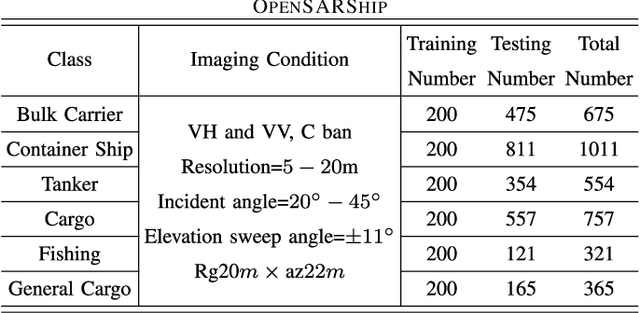

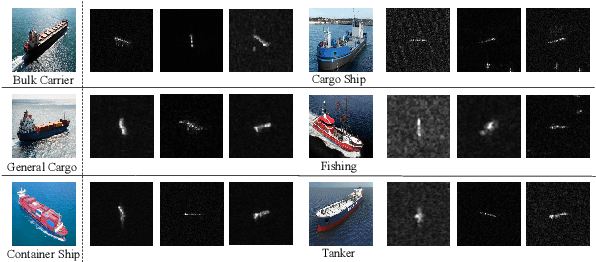

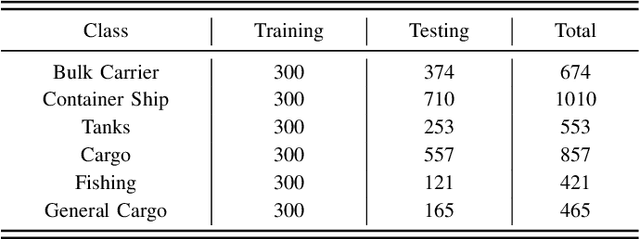

SAR Ship Target Recognition Via Multi-Scale Feature Attention and Adaptive-Weighed Classifier

Aug 20, 2023

Abstract: Maritime surveillance is indispensable for civilian fields, including national maritime safeguarding, channel monitoring, and so on, in which synthetic aperture radar (SAR) ship target recognition is a crucial research field. The core obstacle to accurate SAR ship target recognition is the large inner-class variance and inter-class overlap of SAR ship features, which limits the recognition performance. Most existing methods simply extract multi-scale features from the network and use every feature scale equally in the classification stage. However, the shallow multi-scale features are not discriminative enough, and the feature scales are not equally effective for recognition. These factors limit recognition performance. Therefore, we propose a SAR ship recognition method via multi-scale feature attention and an adaptive-weighted classifier to enhance the features at each scale and adaptively choose the effective feature scales for accurate recognition. We first construct an in-network feature pyramid to extract multi-scale features from SAR ship images. Then, the multi-scale feature attention extracts and enhances the principal components of the multi-scale features, giving them more inner-class compactness and inter-class separability. Finally, the adaptive weighted classifier chooses the effective feature scales in the feature pyramid to achieve the final precise recognition. Experiments and comparisons on the OpenSARShip dataset validate that the proposed method achieves state-of-the-art performance for SAR ship recognition.
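One simple way to picture a classifier that weights feature scales unequally is a softmax-weighted fusion of per-scale predictions. The sketch below is an illustrative assumption, not the paper's classifier: `fuse_scales` and `scale_scores` are hypothetical names, and the scores stand in for parameters that would be learned during training.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def fuse_scales(scale_logits, scale_scores):
    """Fuse per-scale class predictions into one probability vector.

    scale_logits: (S, C) class logits produced from each of S feature scales.
    scale_scores: (S,) hypothetical learnable scores, one per scale.
    """
    weights = softmax(np.asarray(scale_scores, dtype=float))        # (S,)
    probs = softmax(np.asarray(scale_logits, dtype=float), axis=1)  # (S, C)
    return weights @ probs                                          # (C,)
```

With equal scores every scale contributes equally, which corresponds to the baseline behavior the abstract criticizes; a large score lets one scale dominate the final decision.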

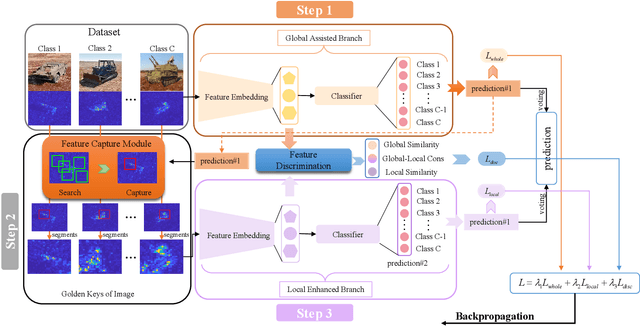

Crucial Feature Capture and Discrimination for Limited Training Data SAR ATR

Aug 20, 2023

Abstract: Although deep learning-based methods have achieved excellent performance on SAR ATR, the difficulty of acquiring and labeling large numbers of SAR images causes these methods to perform poorly in practice. This may be because most of them take the whole target image as input, whereas research finds that, under limited training data, a deep learning model cannot capture the discriminative image regions in the whole image and instead focuses on regions that are useless or even harmful for recognition. Therefore, the results are not satisfactory. In this paper, we design a SAR ATR framework for limited training samples, which mainly consists of two branches and two modules: a global assisted branch and a local enhanced branch, and a feature capture module and a feature discrimination module. In every training process, the global assisted branch first completes the initial recognition based on the whole image. Based on the initial recognition results, the feature capture module automatically searches for and locks onto the image regions crucial for correct recognition, which we name the golden key of the image. Then the local enhanced branch extracts the local features from the captured crucial image regions. Finally, the overall features and local features are input into the classifier and dynamically weighted using learnable voting parameters to collaboratively complete the final recognition under limited training samples. The model soundness experiments demonstrate the effectiveness of our method through the improvement of feature distribution and recognition probability. The experimental results and comparisons on MSTAR and OPENSAR show that our method achieves superior recognition performance.

SAR Ship Target Recognition via Selective Feature Discrimination and Multifeature Center Classifier

Aug 20, 2023

Abstract: Maritime surveillance is not only necessary for every country, such as in maritime safeguarding and fishing controls, but also plays an essential role in international fields, such as in rescue support and illegal immigration control. Most of the existing automatic target recognition (ATR) methods directly send the extracted whole features of SAR ships into one classifier. The classifiers of most methods only assign one feature center to each class. However, SAR ship images have large inner-class variance and small interclass difference. As a result, the whole features contain useless partial features, and a single feature center per class fails under large inner-class variance. We propose a SAR ship target recognition method via selective feature discrimination and a multifeature center classifier. The selective feature discrimination automatically finds the similar partial features from the most similar interclass image pairs and the dissimilar partial features from the most dissimilar inner-class image pairs. It then provides a loss to enhance these partial features with more interclass separability. Motivated by divide and conquer, the multifeature center classifier assigns multiple learnable feature centers to each ship class. In this way, the multifeature centers divide the large inner-class variance into several smaller variances, which are then conquered by combining all feature centers of one ship class. Finally, the probability distribution over all feature centers is considered comprehensively to achieve accurate recognition of SAR ship images. The ablation experiments and experimental results on the OpenSARShip and FUSAR-Ship datasets show that our method achieves superior recognition performance as the number of training SAR ship samples decreases.
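The divide-and-conquer step, several feature centers per class whose probabilities are combined per class, can be sketched as follows. This is a minimal illustration under assumptions not stated in the abstract: similarity is taken as negative squared Euclidean distance, and `multicenter_predict`, `centers`, and `center_class` are hypothetical names.

```python
import numpy as np

def multicenter_predict(feature, centers, center_class, num_classes):
    """Class probabilities from a classifier with several centers per class.

    centers:      (K, D) feature centers (learnable in a real model).
    center_class: (K,) integer class index that each center belongs to.
    Assumption: similarity is the negative squared Euclidean distance.
    """
    feature = np.asarray(feature, dtype=float)
    centers = np.asarray(centers, dtype=float)
    logits = -np.sum((centers - feature) ** 2, axis=1)
    logits -= logits.max()                  # numerical stability
    center_probs = np.exp(logits)
    center_probs /= center_probs.sum()      # distribution over all K centers
    # Combine the probabilities of all centers that belong to the same class.
    return np.bincount(np.asarray(center_class), weights=center_probs,
                       minlength=num_classes)
```

A class whose samples form two distant clusters can place one center on each cluster, so a sample close to either cluster still receives a high probability for that class.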

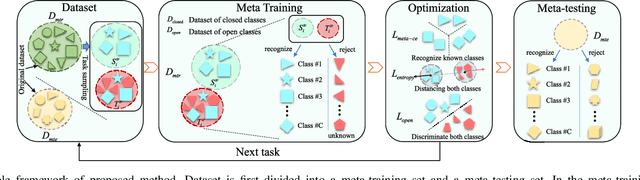

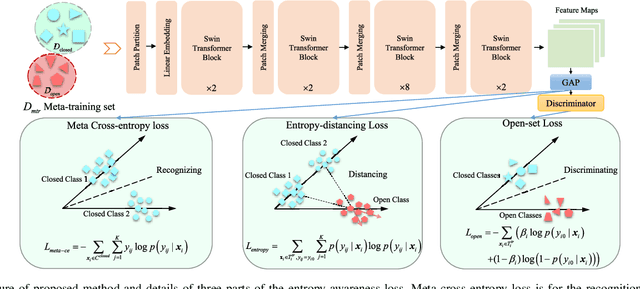

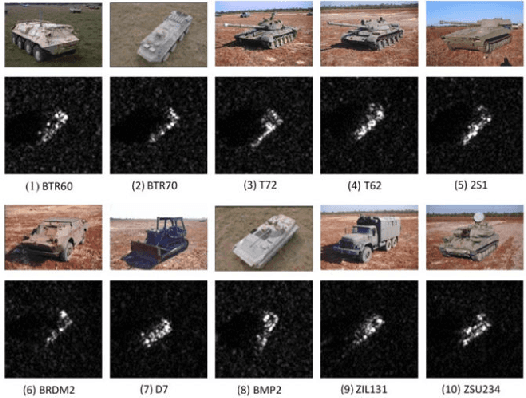

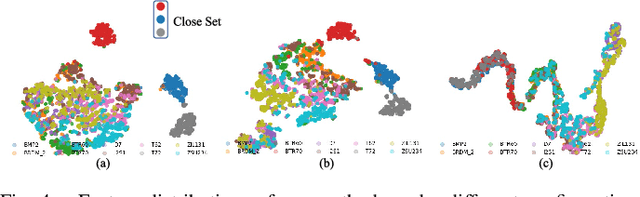

An Entropy-Awareness Meta-Learning Method for SAR Open-Set ATR

Aug 20, 2023

Abstract: Existing synthetic aperture radar automatic target recognition (SAR ATR) methods have been effective for the classification of seen target classes. However, it is more meaningful and challenging to distinguish unseen target classes, i.e., the open set recognition (OSR) problem, which is an urgent problem for practical SAR ATR. The key to solving OSR is to effectively establish the exclusiveness of the feature distribution of known classes. In this letter, we propose an entropy-awareness meta-learning method that improves the exclusiveness of the feature distribution of known classes, so that our method is effective not only for classifying the seen classes but also for handling unseen classes. Through meta-learning tasks, the proposed method learns to construct a feature space of the dynamically assigned known classes. The tasks require this feature space to reject all classes that do not belong to the known classes. At the same time, the proposed entropy-awareness loss helps the model enhance the feature space with effective and robust discrimination between the known and unknown classes. Therefore, our method can construct a dynamic feature space that discriminates between the known and unknown classes, simultaneously classifying the dynamically assigned known classes and rejecting the unknown classes. Experiments conducted on the moving and stationary target acquisition and recognition (MSTAR) dataset show the effectiveness of our method for SAR OSR.
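The abstract does not define the entropy-awareness loss, so the sketch below does not reproduce it. It only illustrates a generic, related idea: rejecting a sample as unknown when the Shannon entropy of its predicted class distribution exceeds a threshold. The function name `predict_or_reject`, the `threshold` parameter, and the use of `-1` as the rejection label are assumptions.

```python
import numpy as np

def predict_or_reject(probs, threshold):
    """Return (label, entropy); label is -1 when the sample is rejected.

    Generic entropy thresholding, used here as a stand-in illustration:
    a confident prediction has low entropy, while a near-uniform
    prediction over the known classes has high entropy.
    """
    probs = np.asarray(probs, dtype=float)
    entropy = float(-np.sum(probs * np.log(probs + 1e-12)))
    if entropy > threshold:
        return -1, entropy  # treated as an unknown class
    return int(np.argmax(probs)), entropy
```

In this toy setting the threshold would have to be tuned on held-out data; a feature space with stronger known/unknown discrimination makes that choice less sensitive.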

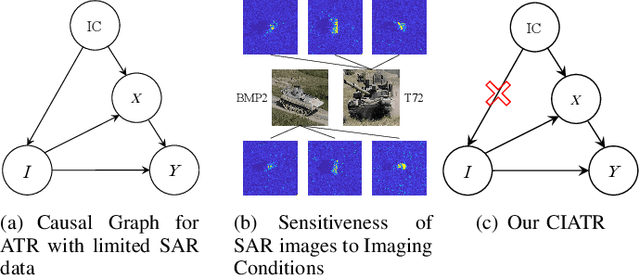

Unveiling Causalities in SAR ATR: A Causal Interventional Approach for Limited Data

Aug 18, 2023

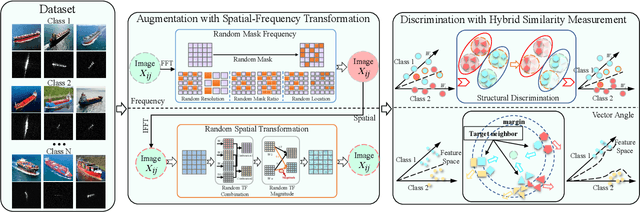

Abstract: Synthetic aperture radar automatic target recognition (SAR ATR) methods fall short with limited training data. In this letter, we propose a causal interventional ATR method (CIATR) to formulate the problem of limited SAR data, which helps us uncover the ever-elusive causalities among the key factors in ATR and thus pursue the desired causal effect without changing the imaging conditions. A structural causal model (SCM) is constructed using causal inference to help understand how imaging conditions act as a confounder that introduces spurious correlation when SAR data are limited. This spurious correlation between SAR images and the predicted classes can be fundamentally tackled with conventional backdoor adjustment. An effective implementation of backdoor adjustment is proposed. First, data augmentation with a spatial-frequency domain hybrid transformation is used to estimate the potential effect of varying imaging conditions on SAR images. Then, a feature discrimination approach with hybrid similarity measurement is introduced to measure and mitigate the structural and vector angle impacts of varying imaging conditions on the features extracted from SAR images. Thus, our CIATR can pursue the true causality between SAR images and the corresponding classes even with limited SAR data. Experiments and comparisons conducted on the moving and stationary target acquisition and recognition (MSTAR) and OpenSARShip datasets show the effectiveness of our method with limited SAR data.
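For reference, the standard backdoor adjustment from causal inference can be written as below. The symbols are assumptions introduced here for illustration, since the abstract does not name them: $X$ denotes the SAR image, $Y$ the predicted class, and $C$ the imaging condition acting as the confounder.

$$
P(Y \mid do(X)) = \sum_{c} P(Y \mid X, C = c)\, P(C = c)
$$

Read this way, the intervention averages the class prediction over all imaging conditions $c$ according to their prior $P(C = c)$, rather than over the conditions that happen to co-occur with $X$ in a small training set.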

Causal SAR ATR with Limited Data via Dual Invariance

Aug 18, 2023

Abstract: Synthetic aperture radar automatic target recognition (SAR ATR) with limited data has recently become a hot research topic aimed at overcoming weak generalization. Although many excellent methods have been proposed, a fundamental theory is lacking to explain what problem limited SAR data causes that leads to the weak generalization of ATR. In this paper, we establish a causal ATR model demonstrating that noise $N$, which could be blocked with ample SAR data, becomes a confounder for recognition when data are limited. As a result, it has a detrimental causal effect that damages the efficacy of the feature $X$ extracted from SAR images, leading to weak generalization of SAR ATR with limited data. The effect of $N$ on the feature can be estimated and eliminated by using backdoor adjustment to pursue the direct causality between $X$ and the predicted class $Y$. However, it is difficult to precisely estimate and eliminate the effect of $N$ on $X$ from SAR images. Limited SAR data can scarcely support the majority of existing optimization losses based on empirical risk minimization (ERM), making it difficult to effectively eliminate $N$'s effect. To tackle the difficult estimation and elimination of $N$'s effect, we propose a dual invariance comprising the inner-class invariant proxy and the noise-invariance loss. Motivated by tackling change with invariance, the inner-class invariant proxy facilitates precise estimation of $N$'s effect on $X$ by obtaining accurate invariant features for each class from the limited data. The noise-invariance loss converts ERM's need for a large quantity of data into a need for noise environment annotations, effectively eliminating $N$'s effect on $X$ by using the previous estimate of $N$ as the noise environment annotations. Experiments on three benchmark datasets indicate that the proposed method achieves superior performance.

A deep deformable residual learning network for SAR images segmentation

Aug 15, 2023

Abstract: Reliable automatic target segmentation in Synthetic Aperture Radar (SAR) imagery plays an important role in SAR applications. In contrast to traditional methods such as Spectral Residual (SR) and the CFAR detector, recent advances in machine learning theory have given rise to a novel approach to SAR target segmentation based on deep learning networks. In this paper, we propose a deep deformable residual learning network for target segmentation that attempts to preserve the precise contour of the target. To this end, deformable convolutional layers and a residual learning block are applied, which extract and preserve the geometric information of the targets as much as possible. Experimental results on the Moving and Stationary Target Acquisition and Recognition (MSTAR) dataset show the superiority of the proposed network for precise target segmentation.

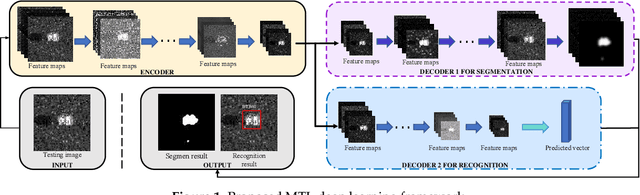

When Deep Learning Meets Multi-Task Learning in SAR ATR: Simultaneous Target Recognition and Segmentation

Aug 14, 2023

Abstract: With the recent advances in deep learning, automatic target recognition (ATR) of synthetic aperture radar (SAR) has achieved superior performance. Beyond the target category alone, a SAR ATR system could benefit from the simultaneous extraction of multifarious target attributes. In this paper, we propose a new multi-task learning approach for SAR ATR, which obtains the accurate category and precise shape of the targets simultaneously. By introducing deep learning theory into multi-task learning, we first propose a novel multi-task deep learning framework with two main structures: an encoder and a decoder. The encoder is constructed to extract sufficient image features at different scales for the decoder, while the decoder is a task-specific structure that employs these extracted features adaptively and optimally to meet the different feature demands of recognition and segmentation. Therefore, the proposed framework is able to achieve superior recognition and segmentation performance. Experimental results on the Moving and Stationary Target Acquisition and Recognition (MSTAR) dataset show the superiority of the proposed framework in terms of recognition and segmentation.
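A common way to train one network for recognition and segmentation together is to minimize a weighted sum of the two task losses. The abstract does not state the paper's objective, so the sketch below is only an assumed, generic form: cross-entropy for the class label plus per-pixel binary cross-entropy for the target mask, with a hypothetical `seg_weight` balancing factor.

```python
import numpy as np

def cross_entropy(class_probs, label):
    """Negative log-likelihood of the true class."""
    return -np.log(class_probs[label] + 1e-12)

def pixel_bce(mask_probs, mask):
    """Mean per-pixel binary cross-entropy between predicted and true masks."""
    mask_probs = np.clip(mask_probs, 1e-12, 1 - 1e-12)
    return -np.mean(mask * np.log(mask_probs)
                    + (1 - mask) * np.log(1 - mask_probs))

def multitask_loss(class_probs, label, mask_probs, mask, seg_weight=1.0):
    """Assumed joint objective: recognition loss + seg_weight * segmentation loss."""
    recognition = cross_entropy(np.asarray(class_probs, dtype=float), label)
    segmentation = pixel_bce(np.asarray(mask_probs, dtype=float),
                             np.asarray(mask, dtype=float))
    return recognition + seg_weight * segmentation
```

Because both terms are computed from outputs that share one encoder, gradients from the segmentation term also shape the features used for recognition, which is the usual motivation for multi-task training.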
