Yunhai Han

On the Surprising Effectiveness of Spectrum Clipping in Learning Stable Linear Dynamics

Dec 03, 2024

Abstract: When learning stable linear dynamical systems from data, three important properties are desirable: i) predictive accuracy, ii) provable stability, and iii) computational efficiency. Unconstrained minimization of reconstruction errors leads to high accuracy and efficiency but cannot guarantee stability. Existing methods to remedy this focus on enforcing stability while also ensuring accuracy, but do so only at the cost of increased computation. In this work, we investigate whether a straightforward approach can simultaneously offer all three desiderata of learning stable linear systems. Specifically, we consider a post-hoc approach that manipulates the spectrum of the system matrix after it is learned in an unconstrained fashion. We call this approach spectrum clipping (SC), as it involves eigendecomposition of the system matrix, clipping to one every eigenvalue that is larger than one (without altering the eigenvectors), and subsequent reconstruction of the matrix. Through detailed experiments involving two different applications and publicly available benchmark datasets, we demonstrate that this simple technique can simultaneously learn highly accurate linear systems that are provably stable. Notably, we demonstrate that SC can achieve similar or better performance than strong baselines while being orders-of-magnitude faster. We also show that SC can be readily combined with Koopman operators to learn stable nonlinear dynamics, such as those underlying complex dexterous manipulation skills involving multi-fingered robotic hands. Our code and dataset can be found at https://github.com/GT-STAR-Lab/spec_clip.
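The clipping step described in this abstract can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' released implementation: the function name `spectrum_clip` and its `max_modulus` parameter are hypothetical, "larger than one" is interpreted here as eigenvalue modulus exceeding one (with the phase preserved), and the learned matrix is assumed to be diagonalizable.

```python
import numpy as np

def spectrum_clip(A, max_modulus=1.0):
    """Rescale eigenvalues whose modulus exceeds max_modulus; keep eigenvectors.

    Assumptions (not from the abstract): A is diagonalizable, and clipping
    preserves the phase of complex eigenvalues.
    """
    eigvals, eigvecs = np.linalg.eig(A)
    moduli = np.abs(eigvals)
    # The scale is 1 for eigenvalues already inside the disk, and
    # max_modulus / |lambda| for those outside it.
    scale = max_modulus / np.maximum(moduli, max_modulus)
    clipped = eigvals * scale
    # Reconstruct the matrix with the original eigenvectors.
    A_clipped = eigvecs @ np.diag(clipped) @ np.linalg.inv(eigvecs)
    # For a real input, the imaginary parts are numerical noise.
    return A_clipped.real if np.isrealobj(A) else A_clipped

# Example: one unstable eigenvalue (1.2) and one stable eigenvalue (0.5).
A = np.array([[1.2, 0.1],
              [0.0, 0.5]])
A_stable = spectrum_clip(A)
print(np.abs(np.linalg.eigvals(A_stable)))
```

In this sketch the matrix `A` stands in for a system matrix obtained by unconstrained least squares; only the eigenvalue outside the unit disk is modified.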

AsymDex: Leveraging Asymmetry and Relative Motion in Learning Bimanual Dexterity

Nov 20, 2024

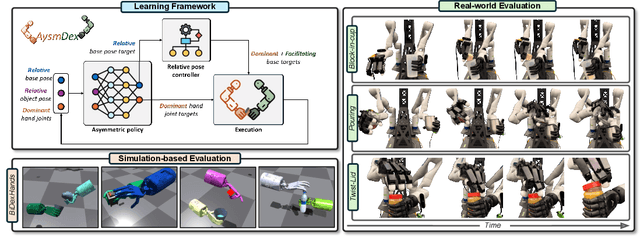

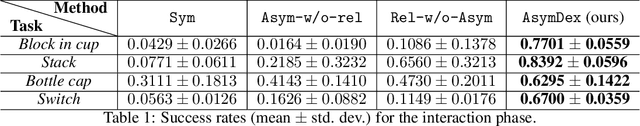

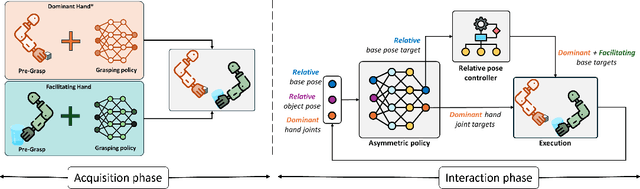

Abstract: We present Asymmetric Dexterity (AsymDex), a novel reinforcement learning (RL) framework that can efficiently learn asymmetric bimanual skills for multi-fingered hands without relying on demonstrations, which can be cumbersome to collect. Two crucial ingredients enable AsymDex to reduce the observation and action space dimensions and improve sample efficiency. First, AsymDex leverages the natural asymmetry found in human bimanual manipulation and assigns specific and interdependent roles to each hand: a facilitating hand that moves and reorients the object, and a dominant hand that performs complex manipulations on said object. Second, AsymDex defines and operates over relative observation and action spaces, facilitating responsive coordination between the two hands. Further, AsymDex can be easily integrated with recent advances in grasp learning to handle both the object acquisition phase and the interaction phase of bimanual dexterity. Unlike existing RL-based methods for bimanual dexterity, which are tailored to a specific task, AsymDex can be used to learn a wide variety of bimanual tasks that exhibit asymmetry. Detailed experiments on four simulated asymmetric bimanual dexterous manipulation tasks reveal that AsymDex consistently outperforms strong baselines that challenge its design choices, in terms of success rate and sample efficiency. The project website is at https://sites.google.com/view/asymdex-2024/.

KOROL: Learning Visualizable Object Feature with Koopman Operator Rollout for Manipulation

Jun 29, 2024

Abstract: Learning dexterous manipulation skills presents significant challenges due to complex nonlinear dynamics that underlie the interactions between objects and multi-fingered hands. Koopman operators have emerged as a robust method for modeling such nonlinear dynamics within a linear framework. However, current methods rely on runtime access to ground-truth (GT) object states, making them unsuitable for vision-based practical applications. Unlike image-to-action policies that implicitly learn visual features for control, we use a dynamics model, specifically the Koopman operator, to learn visually interpretable object features critical for robotic manipulation within a scene. We construct a Koopman operator using object features predicted by a feature extractor and utilize it to auto-regressively advance system states. We train the feature extractor to embed scene information into object features, thereby enabling the accurate propagation of robot trajectories. We evaluate our approach on simulated and real-world robot tasks, with results showing that it outperformed the model-based imitation learning NDP by 1.08$\times$ and the image-to-action Diffusion Policy by 1.16$\times$. The results suggest that our method maintains task success rates with learned features and extends applicability to real-world manipulation without GT object states.

Learning Prehensile Dexterity by Imitating and Emulating State-only Observations

Apr 12, 2024

Abstract: When humans acquire physical skills (e.g., tennis) from experts, we tend to first learn from merely observing the expert. But this is often insufficient. We then engage in practice, where we try to emulate the expert and ensure that our actions produce similar effects on our environment. Inspired by this observation, we introduce Combining IMitation and Emulation for Motion Refinement (CIMER) -- a two-stage framework to learn dexterous prehensile manipulation skills from state-only observations. CIMER's first stage involves imitation: simultaneously encoding the complex interdependent motions of the robot hand and the object in a structured dynamical system. This results in a reactive motion generation policy that provides a reasonable motion prior, but lacks the ability to reason about contact effects due to the lack of action labels. The second stage involves emulation: learning a motion refinement policy via reinforcement that adjusts the robot hand's motion prior such that the desired object motion is reenacted. CIMER is both task-agnostic (no task-specific reward design or shaping) and intervention-free (no additional teleoperated or labeled demonstrations). Detailed experiments with prehensile dexterity reveal that i) imitation alone is insufficient, but adding emulation drastically improves performance, ii) CIMER outperforms existing methods in terms of sample efficiency and the ability to generate realistic and stable motions, and iii) CIMER can either zero-shot generalize or learn to adapt to novel objects from the YCB dataset, even outperforming expert policies trained with action labels in most cases. Source code and videos are available at https://sites.google.com/view/cimer-2024/.

MimicTouch: Learning Human's Control Strategy with Multi-Modal Tactile Feedback

Nov 01, 2023

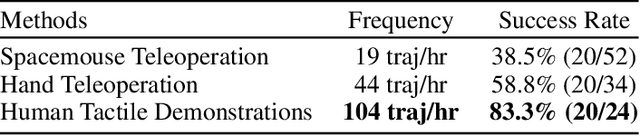

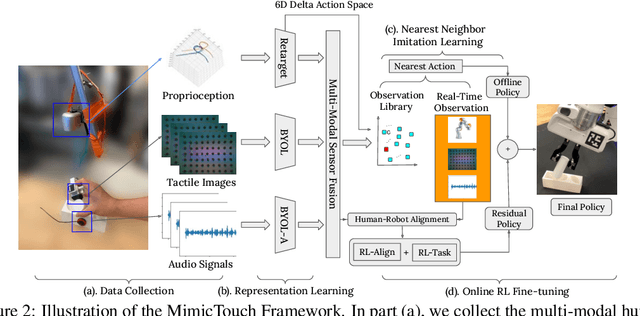

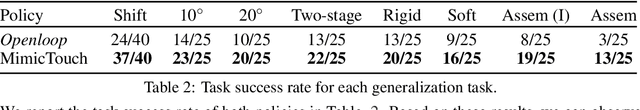

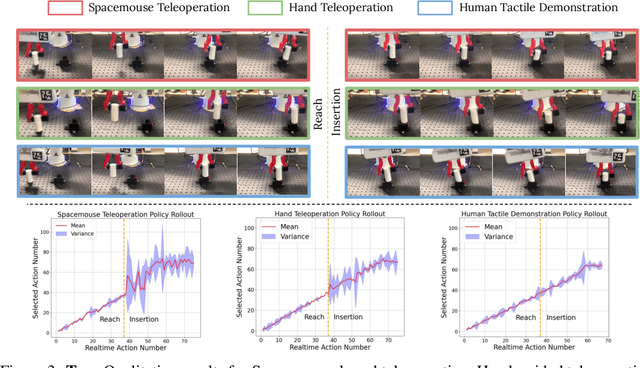

Abstract: In robotics and artificial intelligence, the integration of tactile processing is becoming increasingly pivotal, especially in learning to execute intricate tasks like alignment and insertion. However, existing works focusing on tactile methods for insertion tasks predominantly rely on robot teleoperation data and reinforcement learning, which do not utilize the rich insights provided by humans' control strategies guided by tactile feedback. Meanwhile, methods that learn from humans predominantly leverage visual feedback, often overlooking the invaluable tactile feedback that humans inherently employ to finish complex manipulations. Addressing this gap, we introduce "MimicTouch", a novel framework that mimics humans' tactile-guided control strategies. In this framework, we first collect multi-modal tactile datasets from human demonstrators, incorporating human tactile-guided control strategies for task completion. We then instruct robots through imitation learning using multi-modal sensor data and retargeted human motions. To further mitigate the embodiment gap between humans and robots, we employ online residual reinforcement learning on the physical robot. Through comprehensive experiments, we validate the safety of MimicTouch in transferring a latent policy learned through imitation learning from human to robot. This ongoing work will pave the way for a broader spectrum of tactile-guided robotic applications.

On the Utility of Koopman Operator Theory in Learning Dexterous Manipulation Skills

Mar 23, 2023

Abstract: Recent advances in learning-based approaches have led to impressive dexterous manipulation capabilities. Yet, we haven't witnessed widespread adoption of these capabilities beyond the laboratory. This is likely due to practical limitations, such as significant computational burden, inscrutable policy architectures, sensitivity to parameter initializations, and the considerable technical expertise required for implementation. In this work, we investigate the utility of Koopman operator theory in alleviating these limitations. Koopman operators are simple yet powerful control-theoretic structures that help represent complex nonlinear dynamics as linear systems in higher-dimensional spaces. Motivated by the fact that complex nonlinear dynamics underlie dexterous manipulation, we develop an imitation learning framework that leverages Koopman operators to simultaneously learn the desired behavior of both robot and object states. We demonstrate that a Koopman operator-based framework is surprisingly effective for dexterous manipulation and offers a number of unique benefits. First, the learning process is analytical, eliminating the sensitivity to parameter initializations and painstaking hyperparameter optimization. Second, the learned reference dynamics can be combined with a task-agnostic tracking controller such that task changes and variations can be handled with ease. Third, a Koopman operator-based approach can perform comparably to state-of-the-art imitation learning algorithms in terms of task success rate and imitation error, while being an order of magnitude more computationally efficient. In addition, we discuss a number of avenues for future research made available by this work.
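To illustrate what an "analytical" learning process can look like, the sketch below fits a finite-dimensional Koopman approximation by ordinary least squares. It is only an illustrative sketch under stated assumptions: the lifting function `lift` (state, elementwise squares, and a constant) and the helper `fit_koopman` are hypothetical choices made for this example, and the abstract does not specify the lifting functions or solver used in the paper.

```python
import numpy as np

def lift(x):
    """Hypothetical lifting: [x, x**2, 1] for each row of x."""
    x = np.atleast_2d(x)
    return np.hstack([x, x**2, np.ones((x.shape[0], 1))])

def fit_koopman(X, Y):
    """Closed-form least-squares fit of K with lift(Y) ~= lift(X) @ K.T.

    X and Y hold consecutive states as rows: Y[i] follows X[i].
    """
    Phi_X, Phi_Y = lift(X), lift(Y)
    K_T, *_ = np.linalg.lstsq(Phi_X, Phi_Y, rcond=None)
    return K_T.T

# Toy data from the scalar system x_{t+1} = 0.9 * x_t.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(50, 1))
Y = 0.9 * X
K = fit_koopman(X, Y)
print(np.round(K, 3))
```

Because the fit is a single linear solve, there is no parameter initialization or learning rate to tune; the quality of the model depends instead on the choice of lifting functions.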

Leveraging Heterogeneous Capabilities in Multi-Agent Systems for Environmental Conflict Resolution

Jun 03, 2022

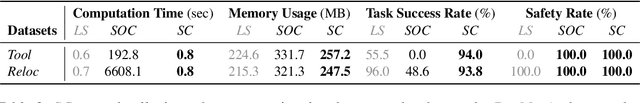

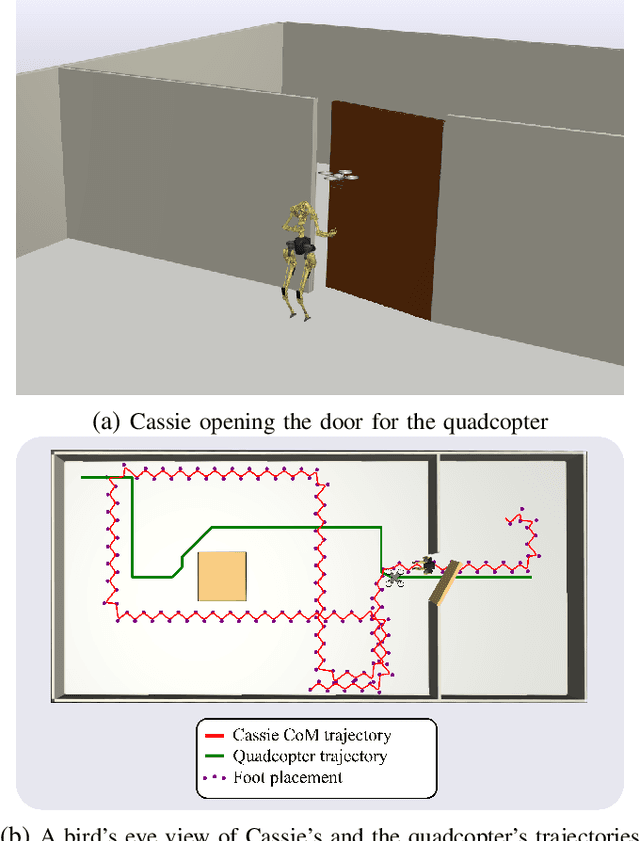

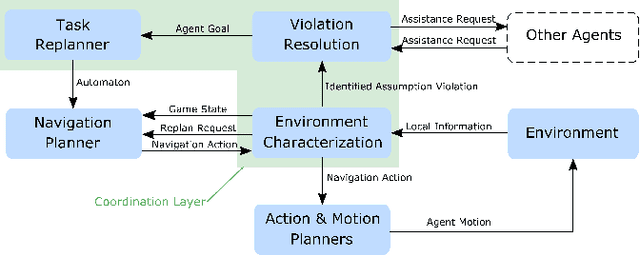

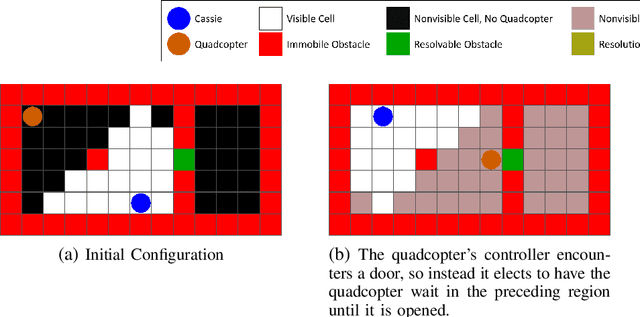

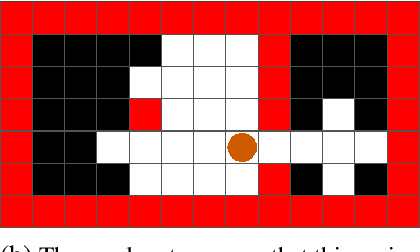

Abstract: In this paper, we introduce a high-level controller synthesis framework that enables teams of heterogeneous agents to assist each other in resolving environmental conflicts that appear at runtime. This conflict resolution method is built upon temporal-logic-based reactive synthesis to guarantee safety and task completion under specific environment assumptions. In heterogeneous multi-agent systems, every agent is expected to complete its own tasks in service of a global team objective. However, at runtime, an agent may encounter unmodeled obstacles (e.g., doors or walls) that prevent it from achieving its own task. To address this problem, we take advantage of the capability of other heterogeneous agents to resolve the obstacle. A controller framework is proposed to redirect agents with the capability of resolving the appropriate obstacles to the required target when such a situation is detected. A set of case studies involving the bipedal robot Digit and a quadcopter is used to evaluate the controller performance in action. Additionally, we implement the proposed framework on a physical multi-agent robotic system to demonstrate its viability for real-world applications.

Learning Generalizable Vision-Tactile Robotic Grasping Strategy for Deformable Objects via Transformer

Dec 20, 2021

Abstract: Reliable robotic grasping, especially with deformable objects such as fruits, remains a challenging task due to underactuated contact interactions with a gripper and unknown object dynamics and geometries. In this study, we propose a Transformer-based robotic grasping framework for rigid grippers that leverages tactile and visual information for safe object grasping. Specifically, the Transformer models learn physical feature embeddings with sensor feedback by performing two pre-defined explorative actions (pinching and sliding) and predict a grasping outcome through a multilayer perceptron (MLP) with a given grasping strength. Using these predictions, the gripper predicts a safe grasping strength via inference. Compared with convolutional recurrent networks (CNN+LSTM), the Transformer models can capture the long-term dependencies across the image sequences and process spatial-temporal features simultaneously. We first benchmark the Transformer models on a public dataset for slip detection. Following that, we show that the Transformer models outperform a CNN+LSTM model in terms of grasping accuracy and computational efficiency. We also collect our own fruit grasping dataset and conduct online grasping experiments using the proposed framework for both seen and unseen fruits. Our code and dataset are public on GitHub.

Real-to-Sim Registration of Deformable Soft Tissue with Position-Based Dynamics for Surgical Robot Autonomy

Nov 03, 2020

Abstract: Autonomy in robotic surgery is very challenging in unstructured environments, especially when interacting with deformable soft tissues. This creates a challenge for model-based control methods that must account for deformation dynamics during tissue manipulation. Previous works in vision-based perception can capture the geometric changes within the scene; however, integration with dynamic properties to achieve accurate and safe model-based controllers has not been considered before. Considering the mechanical coupling between the robot and the environment, it is crucial to develop a registered, simulated dynamical model. In this work, we propose an online, continuous, real-to-sim registration method to bridge from 3D visual perception to position-based dynamics (PBD) modeling of tissues. The PBD method is employed to simulate soft tissue dynamics as well as rigid tool interactions for model-based control. Meanwhile, a vision-based strategy is used to generate 3D reconstructed point cloud surfaces that can be used to register and update the simulation, accounting for differences between the simulation and the real world. To verify this real-to-sim approach, tissue manipulation experiments have been conducted on the da Vinci Research Kit. Our real-to-sim approach successfully reduced registration errors online, which is especially important for safety during autonomous control. Moreover, the results show higher accuracy in occluded areas than fusion-based reconstruction.

A 2D Surgical Simulation Framework for Tool-Tissue Interaction

Oct 26, 2020

Abstract: The control and task automation of robotic surgical systems is very challenging, especially in soft tissue manipulation, due to unpredictable deformations. Thus, an accurate simulator of soft tissues that can interact with robot manipulators is necessary. In this work, we propose a novel 2D simulation framework for tool-tissue interaction. This framework continuously tracks the motion of the manipulator and simulates the tissue deformation in the presence of collision detection. The deformation energy can be computed for control and planning tasks.
