Xinpei Ni

Should We Learn Contact-Rich Manipulation Policies from Sampling-Based Planners?

Dec 12, 2024

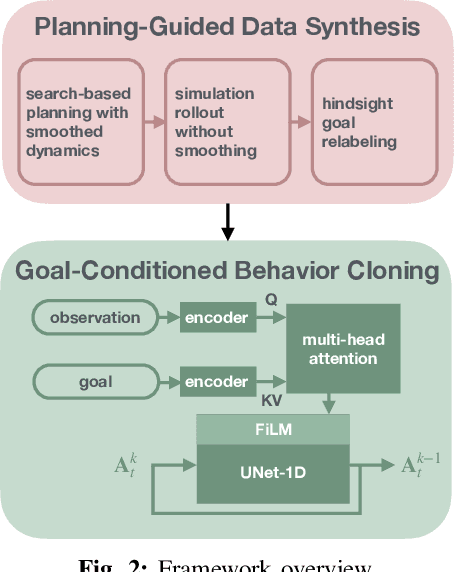

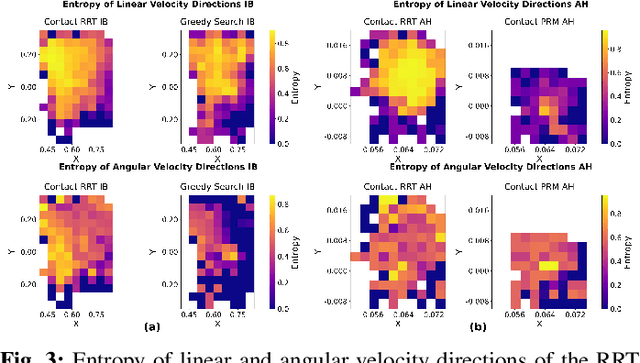

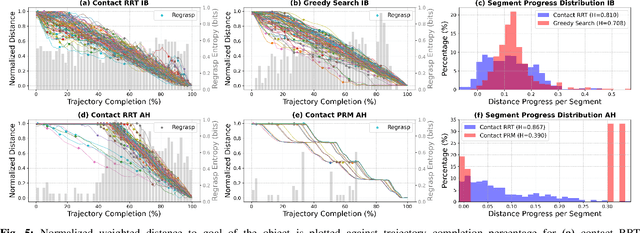

Abstract:The tremendous success of behavior cloning (BC) in robotic manipulation has been largely confined to tasks where demonstrations can be effectively collected through human teleoperation. However, demonstrations for contact-rich manipulation tasks that require complex coordination of multiple contacts are difficult to collect due to the limitations of current teleoperation interfaces. We investigate how to leverage model-based planning and optimization to generate training data for contact-rich dexterous manipulation tasks. Our analysis reveals that popular sampling-based planners like rapidly exploring random tree (RRT), while efficient for motion planning, produce demonstrations with unfavorably high entropy. This motivates modifications to our data generation pipeline that prioritizes demonstration consistency while maintaining solution diversity. Combined with a diffusion-based goal-conditioned BC approach, our method enables effective policy learning and zero-shot transfer to hardware for two challenging contact-rich manipulation tasks.

Is Linear Feedback on Smoothed Dynamics Sufficient for Stabilizing Contact-Rich Plans?

Nov 14, 2024

Abstract:Designing planners and controllers for contact-rich manipulation is extremely challenging as contact violates the smoothness conditions that many gradient-based controller synthesis tools assume. Contact smoothing approximates a non-smooth system with a smooth one, allowing one to use these synthesis tools more effectively. However, applying classical control synthesis methods to smoothed contact dynamics remains relatively under-explored. This paper analyzes the efficacy of linear controller synthesis using differential simulators based on contact smoothing. We introduce natural baselines for leveraging contact smoothing to compute (a) open-loop plans robust to uncertain conditions and/or dynamics, and (b) feedback gains to stabilize around open-loop plans. Using robotic bimanual whole-body manipulation as a testbed, we perform extensive empirical experiments on over 300 trajectories and analyze why LQR seems insufficient for stabilizing contact-rich plans. The video summarizing this paper and hardware experiments is found here: https://youtu.be/HLaKi6qbwQg?si=_zCAmBBD6rGSitm9.

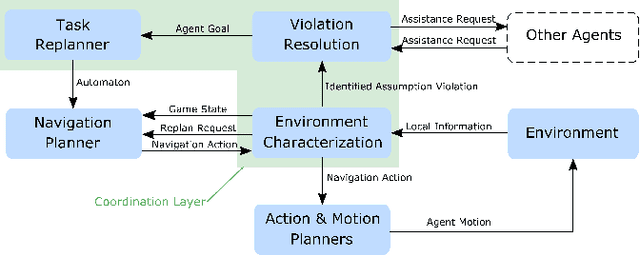

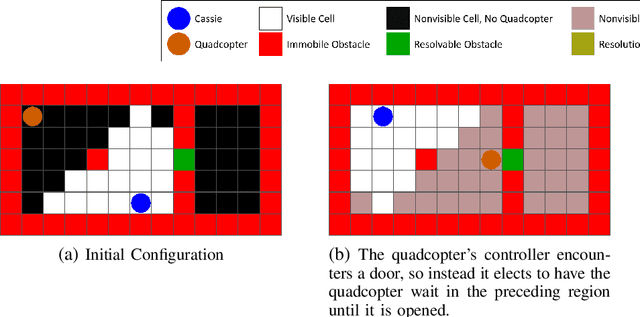

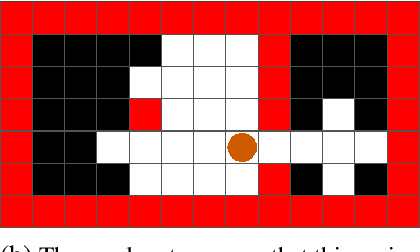

Leveraging Heterogeneous Capabilities in Multi-Agent Systems for Environmental Conflict Resolution

Jun 03, 2022

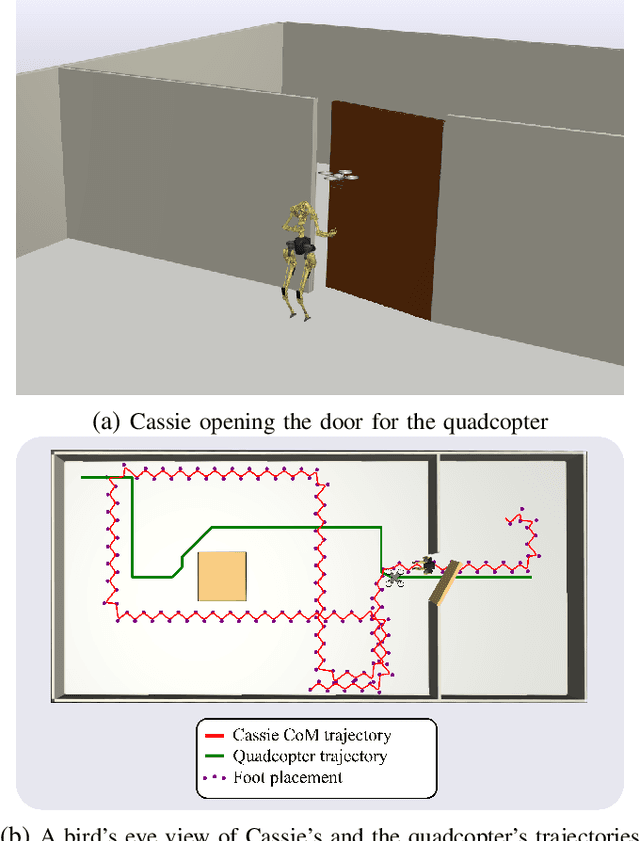

Abstract:In this paper, we introduce a high-level controller synthesis framework that enables teams of heterogeneous agents to assist each other in resolving environmental conflicts that appear at runtime. This conflict resolution method is built upon temporal-logic-based reactive synthesis to guarantee safety and task completion under specific environment assumptions. In heterogeneous multi-agent systems, every agent is expected to complete its own tasks in service of a global team objective. However, at runtime, an agent may encounter un-modeled obstacles (e.g., doors or walls) that prevent it from achieving its own task. To address this problem, we take advantage of the capability of other heterogeneous agents to resolve the obstacle. A controller framework is proposed to redirect agents with the capability of resolving the appropriate obstacles to the required target when such a situation is detected. A set of case studies involving a bipedal robot Digit and a quadcopter are used to evaluate the controller performance in action. Additionally, we implement the proposed framework on a physical multi-agent robotic system to demonstrate its viability for real world applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge