Zhaodong Yang

AsymDex: Leveraging Asymmetry and Relative Motion in Learning Bimanual Dexterity

Nov 20, 2024

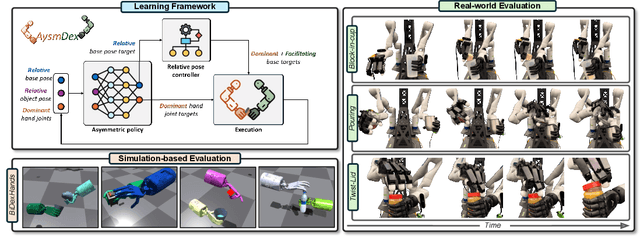

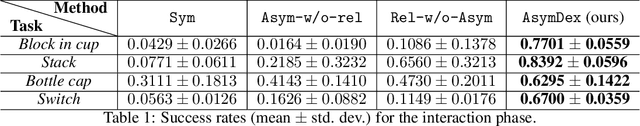

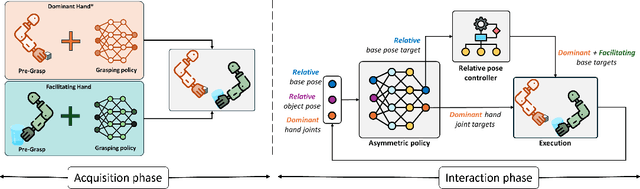

Abstract:We present Asymmetric Dexterity (AsymDex), a novel reinforcement learning (RL) framework that can efficiently learn asymmetric bimanual skills for multi-fingered hands without relying on demonstrations, which can be cumbersome to collect. Two crucial ingredients enable AsymDex to reduce the observation and action space dimensions and improve sample efficiency. First, AsymDex leverages the natural asymmetry found in human bimanual manipulation and assigns specific and interdependent roles to each hand: a facilitating hand that moves and reorients the object, and a dominant hand that performs complex manipulations on said object. Second, AsymDex defines and operates over relative observation and action spaces, facilitating responsive coordination between the two hands. Further, AsymDex can be easily integrated with recent advances in grasp learning to handle both the object acquisition phase and the interaction phase of bimanual dexterity. Unlike existing RL-based methods for bimanual dexterity, which are tailored to a specific task, AsymDex can be used to learn a wide variety of bimanual tasks that exhibit asymmetry. Detailed experiments on four simulated asymmetric bimanual dexterous manipulation tasks reveal that AsymDex consistently outperforms strong baselines that challenge its design choices, in terms of success rate and sample efficiency. The project website is at https://sites.google.com/view/asymdex-2024/.

Deep Image Style Transfer from Freeform Text

Dec 13, 2022

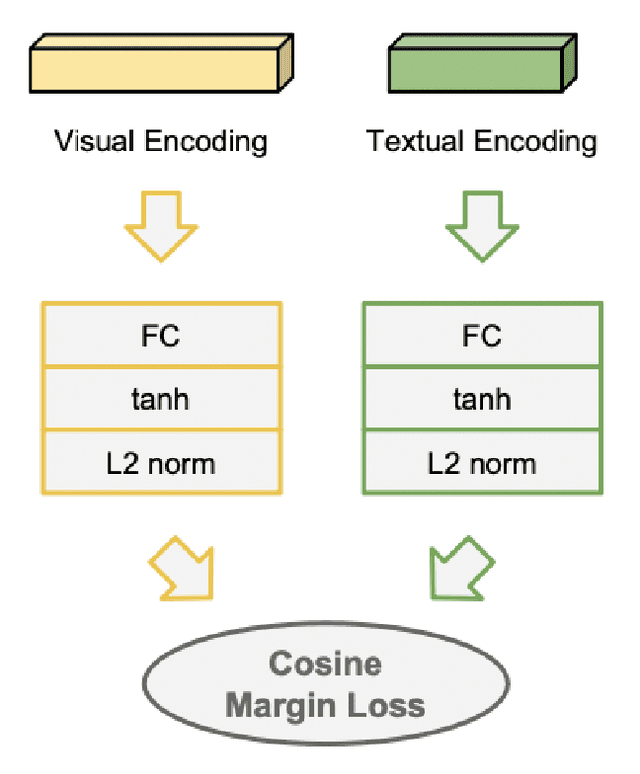

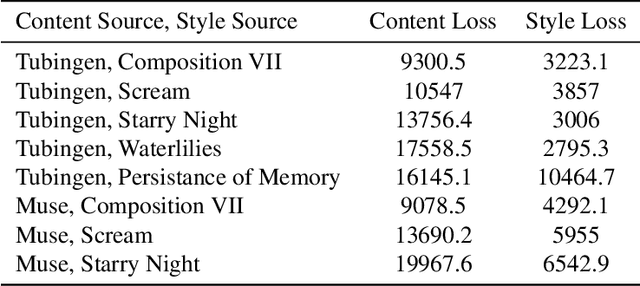

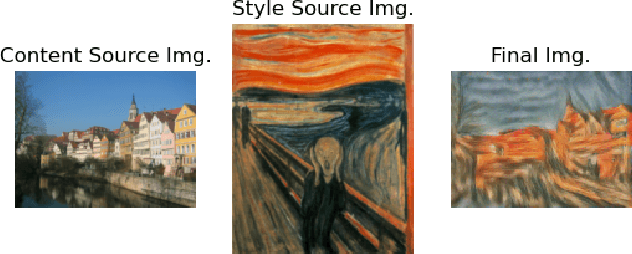

Abstract:This paper creates a novel method of deep neural style transfer by generating style images from freeform user text input. The language model and style transfer model form a seamless pipeline that can create output images with similar losses and improved quality when compared to baseline style transfer methods. The language model returns a closely matching image given a style text and description input, which is then passed to the style transfer model with an input content image to create a final output. A proof-of-concept tool is also developed to integrate the models and demonstrate the effectiveness of deep image style transfer from freeform text.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge