Yuanhao Wang

TokBench: Evaluating Your Visual Tokenizer before Visual Generation

May 26, 2025

Abstract:In this work, we reveal the limitations of visual tokenizers and VAEs in preserving fine-grained features, and propose a benchmark to evaluate reconstruction performance for two challenging visual contents: text and face. Visual tokenizers and VAEs have significantly advanced visual generation and multimodal modeling by providing more efficient compressed or quantized image representations. However, while helping production models reduce computational burdens, the information loss from image compression fundamentally limits the upper bound of visual generation quality. To evaluate this upper bound, we focus on assessing reconstructed text and facial features since they typically: 1) exist at smaller scales, 2) contain dense and rich textures, 3) are prone to collapse, and 4) are highly sensitive to human vision. We first collect and curate a diverse set of clear text and face images from existing datasets. Unlike approaches using VLM models, we employ established OCR and face recognition models for evaluation, ensuring accuracy while maintaining an exceptionally lightweight assessment process requiring just 2GB memory and 4 minutes to complete. Using our benchmark, we analyze text and face reconstruction quality across various scales for different image tokenizers and VAEs. Our results show modern visual tokenizers still struggle to preserve fine-grained features, especially at smaller scales. We further extend this evaluation framework to video, conducting comprehensive analysis of video tokenizers. Additionally, we demonstrate that traditional metrics fail to accurately reflect reconstruction performance for faces and text, while our proposed metrics serve as an effective complement.

Diff-Unfolding: A Model-Based Score Learning Framework for Inverse Problems

May 16, 2025

Abstract:Diffusion models are extensively used for modeling image priors for inverse problems. We introduce \emph{Diff-Unfolding}, a principled framework for learning posterior score functions of \emph{conditional diffusion models} by explicitly incorporating the physical measurement operator into a modular network architecture. Diff-Unfolding formulates posterior score learning as the training of an unrolled optimization scheme, where the measurement model is decoupled from the learned image prior. This design allows our method to generalize across inverse problems at inference time by simply replacing the forward operator without retraining. We theoretically justify our unrolling approach by showing that the posterior score can be derived from a composite model-based optimization formulation. Extensive experiments on image restoration and accelerated MRI show that Diff-Unfolding achieves state-of-the-art performance, improving PSNR by up to 2 dB and reducing LPIPS by $22.7\%$, while being both compact (47M parameters) and efficient (0.72 seconds per $256 \times 256$ image). An optimized C++/LibTorch implementation further reduces inference time to 0.63 seconds, underscoring the practicality of our approach.

Unsupervised Detection of Distribution Shift in Inverse Problems using Diffusion Models

May 16, 2025

Abstract:Diffusion models are widely used as priors in imaging inverse problems. However, their performance often degrades under distribution shifts between the training and test-time images. Existing methods for identifying and quantifying distribution shifts typically require access to clean test images, which are almost never available while solving inverse problems (at test time). We propose a fully unsupervised metric for estimating distribution shifts using only indirect (corrupted) measurements and score functions from diffusion models trained on different datasets. We theoretically show that this metric estimates the KL divergence between the training and test image distributions. Empirically, we show that our score-based metric, using only corrupted measurements, closely approximates the KL divergence computed from clean images. Motivated by this result, we show that aligning the out-of-distribution score with the in-distribution score -- using only corrupted measurements -- reduces the KL divergence and leads to improved reconstruction quality across multiple inverse problems.

GarmentCrafter: Progressive Novel View Synthesis for Single-View 3D Garment Reconstruction and Editing

Mar 11, 2025

Abstract:We introduce GarmentCrafter, a new approach that enables non-professional users to create and modify 3D garments from a single-view image. While recent advances in image generation have facilitated 2D garment design, creating and editing 3D garments remains challenging for non-professional users. Existing methods for single-view 3D reconstruction often rely on pre-trained generative models to synthesize novel views conditioning on the reference image and camera pose, yet they lack cross-view consistency, failing to capture the internal relationships across different views. In this paper, we tackle this challenge through progressive depth prediction and image warping to approximate novel views. Subsequently, we train a multi-view diffusion model to complete occluded and unknown clothing regions, informed by the evolving camera pose. By jointly inferring RGB and depth, GarmentCrafter enforces inter-view coherence and reconstructs precise geometries and fine details. Extensive experiments demonstrate that our method achieves superior visual fidelity and inter-view coherence compared to state-of-the-art single-view 3D garment reconstruction methods.

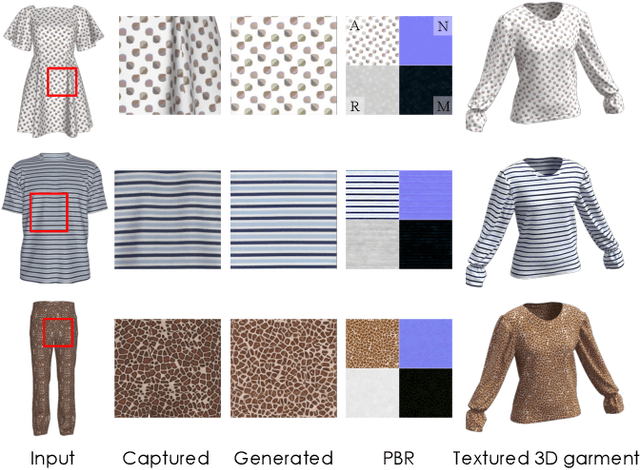

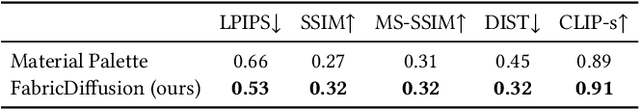

FabricDiffusion: High-Fidelity Texture Transfer for 3D Garments Generation from In-The-Wild Clothing Images

Oct 02, 2024

Abstract:We introduce FabricDiffusion, a method for transferring fabric textures from a single clothing image to 3D garments of arbitrary shapes. Existing approaches typically synthesize textures on the garment surface through 2D-to-3D texture mapping or depth-aware inpainting via generative models. Unfortunately, these methods often struggle to capture and preserve texture details, particularly due to challenging occlusions, distortions, or poses in the input image. Inspired by the observation that in the fashion industry, most garments are constructed by stitching sewing patterns with flat, repeatable textures, we cast the task of clothing texture transfer as extracting distortion-free, tileable texture materials that are subsequently mapped onto the UV space of the garment. Building upon this insight, we train a denoising diffusion model with a large-scale synthetic dataset to rectify distortions in the input texture image. This process yields a flat texture map that enables a tight coupling with existing Physically-Based Rendering (PBR) material generation pipelines, allowing for realistic relighting of the garment under various lighting conditions. We show that FabricDiffusion can transfer various features from a single clothing image including texture patterns, material properties, and detailed prints and logos. Extensive experiments demonstrate that our model significantly outperforms state-of-the-art methods on both synthetic data and real-world, in-the-wild clothing images while generalizing to unseen textures and garment shapes.

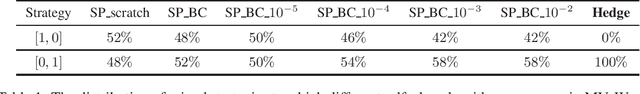

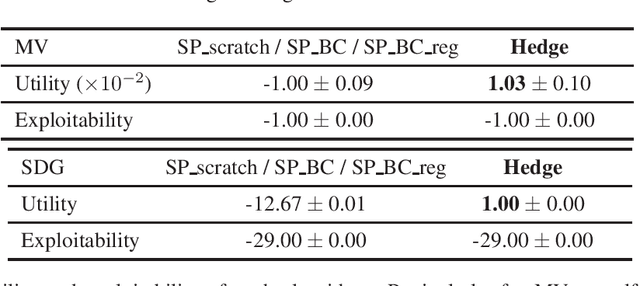

Towards Principled Superhuman AI for Multiplayer Symmetric Games

Jun 06, 2024

Abstract:Multiplayer games, when the number of players exceeds two, present unique challenges that fundamentally distinguish them from the extensively studied two-player zero-sum games. These challenges arise from the non-uniqueness of equilibria and the risk of agents performing highly suboptimally when adopting equilibrium strategies. While a line of recent works developed learning systems successfully achieving human-level or even superhuman performance in popular multiplayer games such as Mahjong, Poker, and Diplomacy, two critical questions remain unaddressed: (1) What is the correct solution concept that AI agents should find? and (2) What is the general algorithmic framework that provably solves all games within this class? This paper takes the first step towards solving these unique challenges of multiplayer games by provably addressing both questions in multiplayer symmetric normal-form games. We also demonstrate that many meta-algorithms developed in prior practical systems for multiplayer games can fail to achieve even the basic goal of obtaining an agent's equal share of the total reward.

Directional Smoothness and Gradient Methods: Convergence and Adaptivity

Mar 06, 2024

Abstract:We develop new sub-optimality bounds for gradient descent (GD) that depend on the conditioning of the objective along the path of optimization, rather than on global, worst-case constants. Key to our proofs is directional smoothness, a measure of gradient variation that we use to develop upper-bounds on the objective. Minimizing these upper-bounds requires solving implicit equations to obtain a sequence of strongly adapted step-sizes; we show that these equations are straightforward to solve for convex quadratics and lead to new guarantees for two classical step-sizes. For general functions, we prove that the Polyak step-size and normalized GD obtain fast, path-dependent rates despite using no knowledge of the directional smoothness. Experiments on logistic regression show our convergence guarantees are tighter than the classical theory based on L-smoothness.
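For readers unfamiliar with the Polyak step-size mentioned in this abstract, here is a minimal sketch of its standard textbook form, eta_k = (f(x_k) - f*) / ||grad f(x_k)||^2, applied to a small convex quadratic. This is not the paper's code: the function names (`polyak_gd`, `quad`, `quad_grad`), the test problem, and the assumption that the optimal value `f_star` is known in advance are all illustrative choices.

```python
def polyak_gd(f, grad, x0, f_star, steps=50):
    """Gradient descent with the classical Polyak step-size.

    Assumes the optimal value f_star is known (an assumption of this sketch).
    Returns the final iterate and the list of objective values visited.
    """
    x = list(x0)
    history = [f(x)]
    for _ in range(steps):
        g = grad(x)
        g_norm_sq = sum(gi * gi for gi in g)
        gap = f(x) - f_star
        # Stop at a stationary point or once the optimal value is reached.
        if g_norm_sq == 0.0 or gap <= 0.0:
            break
        eta = gap / g_norm_sq  # Polyak step-size
        x = [xi - eta * gi for xi, gi in zip(x, g)]
        history.append(f(x))
    return x, history


# Hypothetical test problem: f(x) = 0.5 * (x_0^2 + 10 * x_1^2), minimized at 0.
def quad(x):
    return 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2)


def quad_grad(x):
    return [x[0], 10.0 * x[1]]


x_final, history = polyak_gd(quad, quad_grad, [1.0, 1.0], f_star=0.0)
```

The step-size uses no smoothness constant at all; it adapts through the current optimality gap and gradient norm, which is the property the abstract refers to when it says the method needs no knowledge of the directional smoothness.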

Is RLHF More Difficult than Standard RL?

Jun 25, 2023

Abstract:Reinforcement Learning from Human Feedback (RLHF) learns from preference signals, while standard Reinforcement Learning (RL) directly learns from reward signals. Preferences arguably contain less information than rewards, which makes preference-based RL seemingly more difficult. This paper theoretically proves that, for a wide range of preference models, we can solve preference-based RL directly using existing algorithms and techniques for reward-based RL, with small or no extra costs. Specifically, (1) for preferences that are drawn from reward-based probabilistic models, we reduce the problem to robust reward-based RL that can tolerate small errors in rewards; (2) for general arbitrary preferences where the objective is to find the von Neumann winner, we reduce the problem to multiagent reward-based RL which finds Nash equilibria for factored Markov games under a restricted set of policies. The latter case can be further reduced to adversarial MDP when preferences only depend on the final state. We instantiate all reward-based RL subroutines by concrete provable algorithms, and apply our theory to a large class of models including tabular MDPs and MDPs with generic function approximation. We further provide guarantees when K-wise comparisons are available.

Breaking the Curse of Multiagency: Provably Efficient Decentralized Multi-Agent RL with Function Approximation

Mar 02, 2023

Abstract:A unique challenge in Multi-Agent Reinforcement Learning (MARL) is the curse of multiagency, where the description length of the game as well as the complexity of many existing learning algorithms scale exponentially with the number of agents. While recent works successfully address this challenge under the model of tabular Markov Games, their mechanisms critically rely on the number of states being finite and small, and do not extend to practical scenarios with enormous state spaces where function approximation must be used to approximate value functions or policies. This paper presents the first line of MARL algorithms that provably resolve the curse of multiagency under function approximation. We design a new decentralized algorithm -- V-Learning with Policy Replay, which gives the first polynomial sample complexity results for learning approximate Coarse Correlated Equilibria (CCEs) of Markov Games under decentralized linear function approximation. Our algorithm always outputs Markov CCEs, and achieves an optimal rate of $\widetilde{\mathcal{O}}(\epsilon^{-2})$ for finding $\epsilon$-optimal solutions. Also, when restricted to the tabular case, our result improves over the current best decentralized result $\widetilde{\mathcal{O}}(\epsilon^{-3})$ for finding Markov CCEs. We further present an alternative algorithm -- Decentralized Optimistic Policy Mirror Descent, which finds policy-class-restricted CCEs using a polynomial number of samples. In exchange for learning a weaker version of CCEs, this algorithm applies to a wider range of problems under generic function approximation, such as linear quadratic games and MARL problems with low "marginal" Eluder dimension.

Learning Rationalizable Equilibria in Multiplayer Games

Oct 20, 2022

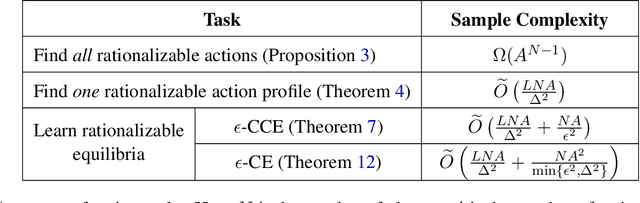

Abstract:A natural goal in multiagent learning besides finding equilibria is to learn rationalizable behavior, where players learn to avoid iteratively dominated actions. However, even in the basic setting of multiplayer general-sum games, existing algorithms require a number of samples exponential in the number of players to learn rationalizable equilibria under bandit feedback. This paper develops the first line of efficient algorithms for learning rationalizable Coarse Correlated Equilibria (CCE) and Correlated Equilibria (CE) whose sample complexities are polynomial in all problem parameters including the number of players. To achieve this result, we also develop a new efficient algorithm for the simpler task of finding one rationalizable action profile (not necessarily an equilibrium), whose sample complexity substantially improves over the best existing results of Wu et al. (2021). Our algorithms incorporate several novel techniques to guarantee rationalizability and no (swap-)regret simultaneously, including a correlated exploration scheme and adaptive learning rates, which may be of independent interest. We complement our results with a sample complexity lower bound showing the sharpness of our guarantees.
